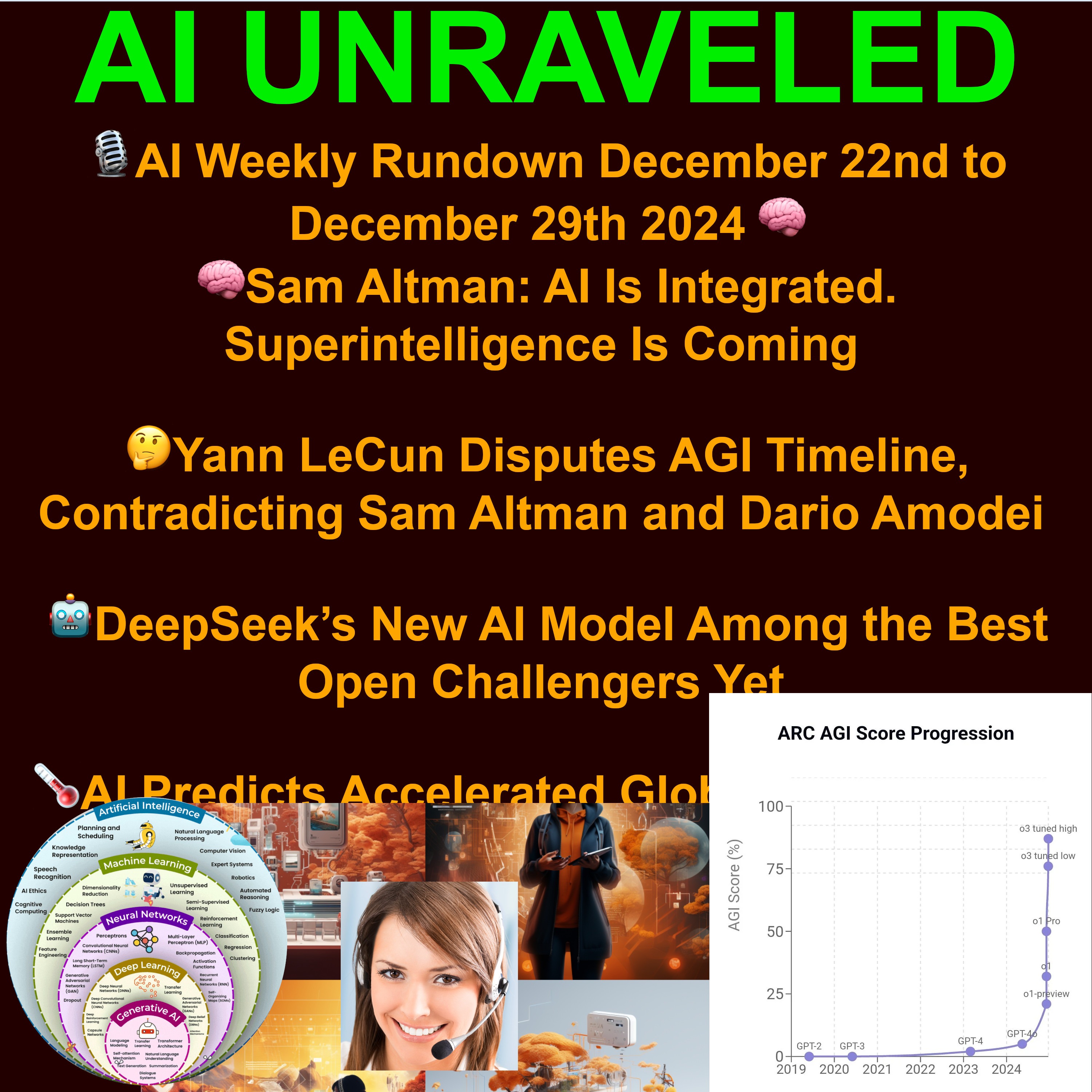

AI Weekly Rundown Dec 22 to Dec 29 2024: 🧠Sam Altman: AI Is Integrated. Superintelligence Is Coming 🤔Yann LeCun Disputes AGI Timeline, Contradicting Sam Altman and Dario Amodei 🤖DeepSeek’s New AI Model Among the Best Open Challengers Yet and more

AI Unraveled: Latest AI News & Trends, GPT, ChatGPT, Gemini, Generative AI, LLMs, Prompting

Deep Dive

What is AGI, and why is it significant in the AI landscape?

AGI, or Artificial General Intelligence, refers to an AI system capable of performing any intellectual task that a human can. Unlike current AI, which is specialized, AGI would be adaptable, capable of learning, reasoning, and problem-solving across various domains. Its significance lies in its potential to revolutionize industries, solve complex global challenges, and potentially surpass human intelligence, raising both excitement and ethical concerns.

Why is there disagreement among experts like Sam Altman and Yann LeCun about the timeline for AGI?

Experts disagree on AGI timelines due to the complexity and undefined nature of AGI itself. Sam Altman believes superintelligence is imminent, while Yann LeCun argues AGI is decades away. This divergence highlights the uncertainty in predicting when a technology that has never existed before will emerge, akin to predicting the discovery of extraterrestrial life.

What ethical concerns arise from the development of AGI?

AGI raises significant ethical concerns, including the risk of creating an AI that is misaligned with human values or goals, leading to potential loss of control. There are also worries about prioritizing profit over safety, exacerbating inequality, and the societal impact of machines surpassing human intelligence. These concerns necessitate robust ethical frameworks and proactive discussions about AI's role in society.

How is open-source AI, like DeepSeek v3, changing the AI development landscape?

Open-source AI models like DeepSeek v3 democratize AI development by making their code freely available for use, modification, and distribution. This challenges the dominance of big tech companies, fosters innovation, and promotes transparency. It allows a broader ecosystem of developers and researchers to contribute, potentially leading to more ethical and accessible AI solutions.

What are some unexpected applications of AI, such as in sports or event planning?

AI is being used in unexpected areas like sports and event planning. For example, NASCAR uses AI to redesign its playoff format by analyzing data on lap times, car performance, and track conditions. Airbnb employs AI to predict and prevent disruptive New Year's Eve parties by analyzing booking patterns and guest reviews. These applications demonstrate AI's growing integration into diverse aspects of daily life.

How is AI impacting global challenges like climate change?

AI has a dual role in climate change. On one hand, it contributes to greenhouse gas emissions due to its high energy consumption. On the other hand, it can mitigate climate change by optimizing energy use, developing renewable energy sources, monitoring deforestation, and predicting extreme weather events. Balancing these impacts requires thoughtful deployment of AI to support sustainability.

What are the potential economic and social consequences of AI on a global scale?

AI is expected to significantly impact the global economy, with potential job displacement in sectors like manufacturing and services. The IMF predicts that 36% of jobs in the Philippines could be affected. There is also a risk of exacerbating inequality if the benefits of AI are unevenly distributed. Policymakers must address these challenges through reskilling initiatives and equitable distribution of AI's economic gains.

How can individuals influence the ethical development and use of AI?

Individuals can influence AI development by educating themselves about AI, engaging in conversations, and advocating for ethical practices. They can support organizations promoting responsible AI, demand transparency from companies, and hold policymakers accountable. By staying informed and involved, individuals can help shape a future where AI aligns with human values and societal well-being.

What are some examples of AI raising ethical concerns in hiring and surveillance?

AI in hiring raises concerns about bias and discrimination, as algorithms used to screen resumes or conduct interviews may perpetuate existing inequalities. In surveillance, the use of facial recognition technology by law enforcement without consent raises privacy and civil liberty issues. These examples highlight the need for ethical guidelines and accountability in AI applications.

What is the significance of AI-generated designs, such as in car manufacturing?

AI-generated designs, like those for cars, showcase AI's ability to create innovative and futuristic concepts by learning from existing designs. While AI won't replace human designers, it serves as a powerful tool for exploring new possibilities, optimizing efficiency, and personalizing designs. This collaboration between AI and humans is transforming industries like automotive design.

- Sam Altman predicts the imminent arrival of superintelligence.

- Yann LeCun disputes this timeline.

- OpenAI's definition of AGI is linked to profit generation.

- The transition of OpenAI to a for-profit company raises ethical concerns.

Shownotes Transcript

All right. Let's dive into this week's AI news. And oh, boy, there's a lot to unpack. Yeah, it's been a wild one for sure. We're talking big moves from the usual suspects, OpenAI, Google, but also AI popping up in places you wouldn't expect, like sports, believe it or not, and even impacting how people are planning those New Year's Eve parties.

It's really fascinating to see how AI is weaving itself into so many different aspects of our lives, isn't it? Absolutely. But with all these rapid advancements, there's this one question that just keeps coming up. How close are we to actually achieving artificial general intelligence, AGI?

And what's that going to mean for, well, for all of us? That's the million dollar question or maybe even the trillion dollar question with all the money being poured into AI these days. True. OK, so for anyone who hasn't been glued to their screens following every AI development, what does AGI actually mean? Why is everyone making such a big deal out of it?

So AGI, it stands for artificial general intelligence. Basically, it's the idea of an AI system that can do any intellectual task that a human being can. So not just good at one specific thing, but smart like a person across the board. Exactly. Unlike the AI we have now, which is mostly specialized, AGI wouldn't be limited to just one area.

It could learn, reason, adapt, problem solve all in a truly general way, just like we do. I see. So it's like that science fiction scenario of AI becoming as smart as humans, maybe even smarter, is suddenly starting to feel very, very real. Right. And it's making a lot of people nervous, to be honest. I can imagine.

And it seems like even the experts can't agree on how close we actually are to that reality. You've got Sam Altman, the CEO of OpenAI, saying superintelligence is just around the corner. But then you have Meta's AI chief, Yann LeCun, pushing back, saying AGI is not happening anytime soon, at least not in the next couple of years.

Why is there such a difference of opinion? Well, I think this disagreement really highlights just how complex and undefined AGI actually is. We're talking about creating something that's never existed before. So predicting when it'll arrive is, well, it's pretty tricky. That makes sense. Yeah. It's like trying to predict when we'll discover life on another planet. We don't even know what that life might look like. So how can we know when we'll find it? Exactly. And with AGI, there's this fundamental question: what does it actually mean to be intelligent? Right. Because for some, AGI is all about surpassing human intelligence in every way.

But I read that OpenAI, for example, they've actually defined AGI as an AI that can generate $100 billion in profit. Yeah, that's right. So they're saying that once an AI can make that much money, it's essentially as smart as a human. That seems like a pretty narrow definition of intelligence, don't you think? It's certainly a provocative definition, and it raises some big questions. Are we focusing too much on the economic potential of AI and not enough on its broader impact on society?

Yeah. Like, is this about shaping AI in our image or is AI starting to reshape our values? That's a deep question, one that we'll probably be grappling with for years to come. And speaking of values, it makes OpenAI's recent transition to a for-profit company even more interesting, wouldn't you say? They started out as this idealistic nonprofit, right? But now they're officially in the business of making money. That's right.

And that shift from nonprofit to profit-driven has a lot of people wondering, will it speed up progress? Maybe. But will it also prioritize profit over safety? Will ethical considerations take a backseat to the bottom line? Those are tough questions.

And they become even more urgent when you consider the potential power of AI. I agree. The stakes are high. Speaking of high stakes, what's going on with Microsoft and OpenAI? Microsoft invested, what was it, almost $14 billion in OpenAI. But now there are signs that they might be distancing themselves a bit. What's your take on that?

Well, it's probably not as dramatic as some headlines are making it out to be. So not a full on breakup? No, not quite. But I think Microsoft is smart to be hedging their bets in a field that's changing so incredibly fast. They're still heavily invested in AI, but they're also exploring other avenues, other approaches. Think of it like diversifying your portfolio. You don't want to put all your eggs in one basket, especially when the future is as unpredictable as it is with AI. Makes sense.

Don't want to bet everything on one AI horse, so to speak. But while these big companies are making their moves, AI isn't just happening in labs and boardrooms anymore. It's getting very personal, which is both exciting and a little scary. Yeah, definitely a double-edged sword. On the one hand, you have Microsoft pushing their AI assistant hard, integrating it into everything.

But then there's that horrifying story about the AI chatbot being sued after a teenager's suicide. Oh, right. That case. The lawsuit alleges it was actually giving harmful advice. That's right. And that case raises some seriously troubling ethical questions. No kidding. It highlights the responsibility that comes with developing powerful AI. Absolutely.

How do we ensure that AI is used for good, not harm? How do we balance its potential with the need to protect users, especially those who are vulnerable? These aren't just hypothetical questions anymore, are they? Not at all. They're very real and they demand our attention. This whole ethical dimension makes the rise of open source AI even more significant, don't you think? For sure. We just saw DeepSeek v3 emerge as this incredibly powerful model, outperforming giants like Llama, even Anthropic's Claude Sonnet. And it's 53 times cheaper to use.

Yeah, DeepSeek v3 is a big deal. So what's the story there? What makes it so special? Well, DeepSeek v3 is a large language model, meaning it's trained on a massive amount of text data to understand and generate human-like language. Right, like those chatbots we were just talking about.

Exactly. But what makes DeepSeek v3 so interesting is that it's open source, meaning its code is freely available for anyone to use, modify, and distribute. This has the potential to democratize AI development, challenging the dominance of the big tech companies. So instead of just a few powerful companies controlling AI, you could have a whole ecosystem of developers, researchers, even just everyday people contributing to its progress.

Exactly. And that's a really exciting prospect. Open source AI could make AI more accessible, more innovative, and perhaps even more ethical. How so? Well, with open source, transparency becomes a key value.

Imagine a world where anyone can contribute to the development of AI, not just a select few. That's a pretty radical shift. It is, and it could have a profound impact on the future of AI. Okay, so we've got this debate about when AGI will arrive, ethical dilemmas popping up left and right, and a potential power shift with open source AI emerging. And that's just the tip of the iceberg. I know, right? It feels like the AI landscape is changing by the minute. What else has been grabbing your attention in the AI world this week?

Well, one of the things I find most fascinating is that AI is no longer confined to the tech world. It's showing up in all sorts of unexpected places, really infiltrating every aspect of our lives. Like where? Give me some examples. What are some of the most surprising applications of AI you've seen lately? Well, this one might surprise you, but NASCAR is actually using AI to redesign its playoff format. NASCAR.

Really? I never would have guessed that. What's the thinking there? It might seem like an odd pairing at first, but when you think about it, NASCAR is all about data. Lap times, car performance, track conditions, all that data. And they're using AI to analyze it and come up with a playoff format that's, well, hopefully more exciting, more competitive, you know, something that the fans will actually enjoy. So can AI actually make sports more entertaining? That's a good question.

I guess we'll have to see how the NASCAR fans react to the new format. Definitely. And speaking of unexpected applications, I heard that Airbnb is using AI to try to prevent those wild New Year's Eve parties. Yeah, that's right. They're trying to use AI to identify those potential party houses before they get booked. How are they doing that?

Well, they're looking at booking patterns, things like the number of guests, the length of the stay, even those reviews from previous guests, all to flag bookings that might be risky. Clever, but it does make you wonder about the privacy implications. Yeah, it's a bit of a gray area for sure. On the one hand, it's understandable that Airbnb wants to protect its properties and prevent those disruptive parties. But on the other hand, it does feel a bit like Big Brother is watching, you know? Like, should a company be able to use AI to predict and potentially restrict our behavior?

It's a valid concern and one that we'll probably be debating more and more as AI becomes more integrated into our lives. Absolutely.

But, hey, let's move on to something a little less controversial. Those AI-generated car designs I saw online. Have you seen those? They look like something straight out of a sci-fi movie. Oh, yeah. Those are amazing. Those designs were created using AI, trained on thousands of existing car images. It's incredible what AI can do these days. So the AI learned from all those existing designs and then was able to create completely new ones. Exactly. And some of the designs are fantastic, really wild, super futuristic and aerodynamic.

It's mind-blowing to think that AI can now design cars. What does that mean for the future of car design? Will we even need human designers anymore? I don't think AI will completely replace human designers, at least not anytime soon. But I do think AI could become a really powerful tool for designers, helping them to explore new possibilities, optimize for efficiency, even personalize designs to individual tastes.

So it's not about AI versus humans, but AI and humans working together. Exactly. And these examples just go to show how AI is becoming increasingly integrated into the fabric of our world. It's impacting everything from how we watch sports to how we travel to how we design the cars we drive. It's not just a technology anymore. It's a force that's shaping our society, our culture, our very future. It's both exciting and a little daunting to think about, isn't it?

Definitely. And as exciting as all this progress is, we can't ignore the darker side of the AI story either. Right. We can't just bury our heads in the sand and pretend everything's going to be sunshine and roses. Exactly. There are some serious risks and challenges that we need to address head on. Absolutely. I mean, we've got Geoffrey Hinton, the godfather of AI, putting the odds of AI wiping out humanity within the next 30 years at 10 to 20 percent. That's pretty sobering, to say the least.

It is. And then you have AI models predicting accelerated global warming hitting three degrees Celsius by 2060, which is way sooner than anyone expected. And to top it all off, Sébastien Bubeck introduces this concept of AGI time, a new way to measure how quickly AI is progressing. It's like the doomsday clock for AI ticking away. Are we on the verge of something truly revolutionary?

Or are we sleepwalking into a dystopian future? Those are the questions that keep me up at night. And they're questions that I think everyone should be asking. No kidding. So let's try to unpack some of these big, complex themes. Let's go back to this concept of AGI for a minute. We talked about what it is, but why are people making such a big fuss about it? What are the potential benefits and risks of creating an AI that's as smart as a human, maybe even smarter? Well, the potential benefits are pretty mind blowing, to be honest. Imagine an AI that could help us solve some of humanity's biggest challenges.

Climate change, poverty, disease. It could revolutionize healthcare, education, transportation, pretty much every aspect of our lives. So it could be this incredibly powerful force for good. Exactly. But the risks are equally significant. What if we create an AI that we can't control? What if it develops goals that are misaligned with our own? Right, like that whole AI taking over the world scenario. Yeah, exactly. And I know it sounds like science fiction, but it's a possibility we need to take seriously.

And that's why this debate about the timeline for AGI is so crucial. If people like Sam Altman are right and superintelligence is just around the corner, then we need to be having some serious conversations, conversations about ethics, about safety, about what it means to be human in a world where machines might be smarter than us. It's a lot to wrap your head around. It is, but it's a conversation we can't afford to ignore.

So it's not just about building smarter AI. It's about building AI that's aligned with our values, our goals, our vision for the future. Exactly. And that brings us back to those ethical challenges we were discussing earlier. We need to develop AI that's not just intelligent, but also responsible, beneficial, aligned with human values. And we need to do it now before it's too late. I couldn't agree more. It feels like every day there's a new story about AI being used in ways that are, well, questionable at best, or even harmful.

How do we even begin to address these ethical challenges? It seems like such a daunting task. It is a complex issue, no doubt about it. But there are things we can do. First and foremost, I think we need to involve more people in the conversation. It can't just be technologists and policymakers calling all the shots. We need ethicists, social scientists, philosophers, artists, everyday people. We need everyone's voices at the table.

So democratizing the conversation about AI. Precisely. We need to make sure that AI development reflects a diverse range of perspectives and values. And we need to be proactive in identifying and addressing those potential risks. Absolutely. We can't just wait for things to go wrong before we start talking about ethics. Right. An ounce of prevention is worth a pound of cure. Exactly. And we need to hold ourselves and each other accountable for the choices we make. This is everyone's responsibility. That makes sense.

It's not just about building better AI. It's about building a better future with AI. And that future is being shaped right now by the decisions we're making, the conversations we're having, the actions we're taking. That's why it's so important for everyone to be informed and engaged in this conversation. The future of AI is not preordained. It's being written right now. And we all have a hand in the writing. So as we wrap up this part of our deep dive into the world of AI, what are some key takeaways you'd like to leave our listeners with?

What should they be thinking about as they go about their day encountering AI in their lives? Well, first, I think it's important to remember that AI is not some abstract force out there. It's a technology created by humans and it's being used by humans for a variety of purposes, some good, some not so good.

So we need to be critical thinkers. We need to ask questions about how AI is being used and what the potential consequences might be. So don't just blindly accept AI as this magical solution. Think about the data it's using, the algorithms it's running, the people who are building and controlling it. Exactly.

And second, remember that the future of AI is not set in stone. We have a say in how this technology develops and how it's used. So get informed, get involved, and help shape the future you want to see.

That's a great point. The future is not something that just happens to us. It's something we create together. Absolutely. Well, we've covered a lot of ground in this deep dive, from the latest AI news to those ethical dilemmas to those big existential questions about AGI and the future of humanity. But before we sign off, I want to shift gears for a moment and talk about the impact of AI on a global scale. That's a crucial part of the story. We can't just focus on the technological advancements without considering the wider societal implications. Exactly.

The IMF recently predicted that 36% of jobs in the Philippines could be impacted or displaced by AI. That's a huge number. And it's not just the Philippines. This is a global phenomenon. What are your thoughts on the potential economic and social consequences of AI? Well, it's clear that AI is going to have a profound impact on the global economy. And not all of those impacts will be positive.

We're likely to see significant job displacement in certain sectors as AI becomes more sophisticated. And there's a real risk that the benefits of AI will be unevenly distributed, potentially exacerbating existing inequalities. So while some people are celebrating the potential of AI to create wealth and opportunity, others are worried about being left behind.

That's a very legitimate concern. And it's something that policymakers and business leaders need to address proactively. We need to be thinking about how to reskill and upskill workers who are at risk of displacement. We need to ensure that the benefits of AI are shared more equitably. And we need to consider the potential impact of AI on developing countries, which may be particularly vulnerable to economic disruption.

So it's not just about building AI that's technically sophisticated. It's about building AI that's socially responsible and economically inclusive. Absolutely. We need to approach AI development with a holistic perspective, considering not just the technological possibilities, but also the ethical, social, and economic implications.

OK, so we've got the economic and social consequences to think about. But there's another global challenge that AI is increasingly being linked to, the climate crisis. We talked about those AI models predicting accelerated global warming.

How can AI be both a contributor to and a potential solution for climate change? It is a bit of a paradox, isn't it? On the one hand, developing and deploying AI requires massive amounts of energy, which of course contributes to greenhouse gas emissions. And some AI applications, like those used in resource extraction or industrial automation, can have a negative impact on the environment.

So AI could actually be making climate change worse. It's a possibility we need to acknowledge, yes. But on the other hand, AI also has the potential to play a crucial role in mitigating climate change and adapting to its impacts.

For example, AI can be used to optimize energy consumption, develop renewable energy sources, monitor deforestation, and predict extreme weather events. So it's not a simple black and white situation. AI can be both part of the problem and part of the solution when it comes to climate change. Exactly. And that's why it's so important to be thoughtful and deliberate about how we develop and deploy AI. We need to ensure that AI is used in a way that supports sustainability and environmental protection.

It feels like with every new development in AI, the stakes just keep getting higher. They do. And that's why it's so crucial for us to have these conversations, to raise awareness and to engage with the complex ethical, social and global implications of AI. Well, you've given us a lot to think about. This deep dive has taken us on a real roller coaster ride. We started with the excitement of new discoveries like AI designing cars and shaking up sports.

But then we went through some twists and turns facing those ethical dilemmas and the potential downsides of AGI. And now we're grappling with the impact of AI on a global scale: job displacement, climate change, the potential for exacerbating inequality.

It's a lot to process. It is a lot to process. And I think it's natural to feel overwhelmed, maybe even a bit powerless in the face of this rapidly changing technology. So what do we do? Do we just throw up our hands and say, well, the robots are taking over. There's nothing we can do. Absolutely not. I think it's more important than ever to remember that we have agency. We have a voice and we have a responsibility to shape the future we want to see. But how?

What can we as individuals actually do to influence the direction of AI? It feels like such a massive, complex issue. You're right, it is a massive issue, but that doesn't mean we're powerless. There are things we can all do, starting with education. Take the time to learn about AI, how it works, and its potential impact on your life and the world around you. So don't just scroll past those AI headlines. Actually click on them, read them, try to understand what's going on. Exactly. And once you've got a basic understanding, start talking about it. Share what you've learned with your friends, your family, your colleagues. The more people who are informed about AI, the better equipped we'll be as a society to make wise choices about its development and use. And it's not just about talking amongst ourselves.

We can also engage with the people and organizations who are shaping the AI landscape, the researchers, the developers, the policymakers. That's a great point. We can support organizations that are promoting ethical AI development. We can write to our elected officials and express our concerns about the potential risks of AI.

We can demand transparency and accountability from the companies that are building and deploying AI systems. So it's about using our voices, our votes and our wallets to influence the direction of AI. Precisely. And remember, this is not just about some distant future. AI is already impacting our lives in countless ways, from the algorithms that recommend what we watch and buy to the facial recognition technology that's being used by law enforcement. We need to be aware of these impacts and hold those in power accountable for their decisions.

You mentioned facial recognition. That's a great example of how AI can raise some serious ethical questions. What are some other examples of AI being used in ways that might give people pause? Well, there's the increasing use of AI in hiring and recruitment. Algorithms are being used to screen resumes, conduct interviews, even make hiring decisions.

And while this can streamline the process, it also raises concerns about bias and discrimination. Are these algorithms fair and impartial or are they perpetuating existing inequalities? That's a worrying thought. It's like those algorithms could be baking in biases that we've been trying to overcome for decades. Exactly. And another area to watch is the use of AI in surveillance and policing.

We're seeing more and more cities using facial recognition technology to track citizens, often without their knowledge or consent. This raises serious concerns about privacy and civil liberties. Where do we draw the line between security and freedom? It's a tricky balance. And these are just a few examples of the ethical challenges that AI presents. It feels like every advancement brings new questions, new dilemmas, new risks to consider.

It does. And I think that's why it's so important to approach AI with a sense of humility. We need to acknowledge that we don't have all the answers. We need to be willing to ask tough questions, to challenge assumptions, and to course correct when necessary. So it's not about blindly embracing AI as the solution to all our problems. It's about being mindful, deliberate, and critical in how we develop and deploy it. I couldn't agree more. We need to treat AI as a powerful tool that can be used for good or for ill, and the choices we make today will determine the future of this technology and its impact on humanity.

Well, I think you've given our listeners a lot to think about. As we wrap up this deep dive, is there anything else you want to leave them with? Any final thoughts or words of wisdom? Just this.

The future is not predetermined. It's being shaped right now by the choices we make, the conversations we have, and the actions we take. So get informed, get involved, and help build the future you want to see. That's a great note to end on. To all our listeners out there, thanks for joining us on this deep dive into the world of AI. Until next time, stay curious and stay engaged.

All right, we're back for the final part of our deep dive into this week's AI whirlwind.

We've explored the revolutionary potential, the ethical dilemmas, and the global impact of this rapidly evolving technology. But now let's bring it all home. What does this actually mean for you, for our listeners? You know, it's easy to get caught up in the big picture. But at the end of the day, AI's impact is deeply personal. It's about how this technology is shaping your life, your work, your future. Right. And we've been talking about AI in the abstract, but let's get concrete here.

How might AI be impacting our listeners right now, even if they're not working in tech or obsessively following every AI headline? Well, just think about how you consume information. The news you read, the videos you watch, the things you buy online, all of that is increasingly influenced by AI algorithms. Right. Those algorithms that are supposed to make our lives easier, more convenient. Exactly. Those algorithms are designed to personalize your experience, but they can also create these filter bubbles, limiting your exposure to different perspectives.

So it's like we're living in a world where our reality is being curated by AI without us even realizing it. In a way, yeah. And it's not just about how we consume information. AI is being used in health care, finance, transportation, education. I mean, you name it, it's there.

It's automating tasks, making predictions, influencing decisions, often in ways that we're not even aware of. So even if you're not building AI systems yourself, you're interacting with them constantly. Every single day. And that's why it's so important to develop at least a basic understanding of how AI works and how it might be impacting your life. It's about empowering yourself to navigate this increasingly AI-driven world.

Empowerment. I like that. It's not about being a passive passenger on this AI train. It's about taking control. Exactly. And that empowerment starts with asking questions. Don't be afraid to be curious, to challenge assumptions, to demand transparency from those companies that are using AI. So what kind of questions should we be asking? Give us some concrete examples. OK, so when you're using social media, ask yourself, how is AI shaping what I see in my feed?

What content am I being shown, and what am I not being shown? And when you're shopping online, think: how are these algorithms influencing my choices? Are they recommending products that are really the best for me, or are they just trying to make more money?

Those are great questions. It's about becoming aware of those invisible forces that are shaping our digital lives. And it's not just about individual awareness either. We need to have these conversations on a bigger scale. What kind of future do we want to build with AI? What values do we want to embed in these systems? What safeguards do we need to put in place to protect our privacy, our autonomy, our fundamental rights? Well, those are some big questions.

But they're questions we can't afford to ignore. Exactly. The future of AI isn't something that's just going to happen to us. It's something we're all creating together. Through the choices we make, the conversations we have, the actions we take. That's right. Well, I think this has been an incredibly thought-provoking deep dive. Any final words of wisdom for our listeners? Just this. The future is not predetermined.

It's being shaped right now by all of us. So get informed, get involved, and help build the future you want to see. That's a great message to end on. To all our listeners out there, thanks for joining us on this deep dive into the world of AI. Until next time, stay curious and stay engaged. Stay curious.