NeurIPS 2024: A Conversation with Attendees / INDIGO TALK - EP15


Deep Dive

What were the key highlights of Ilya Sutskever's speech at NeurIPS 2024?

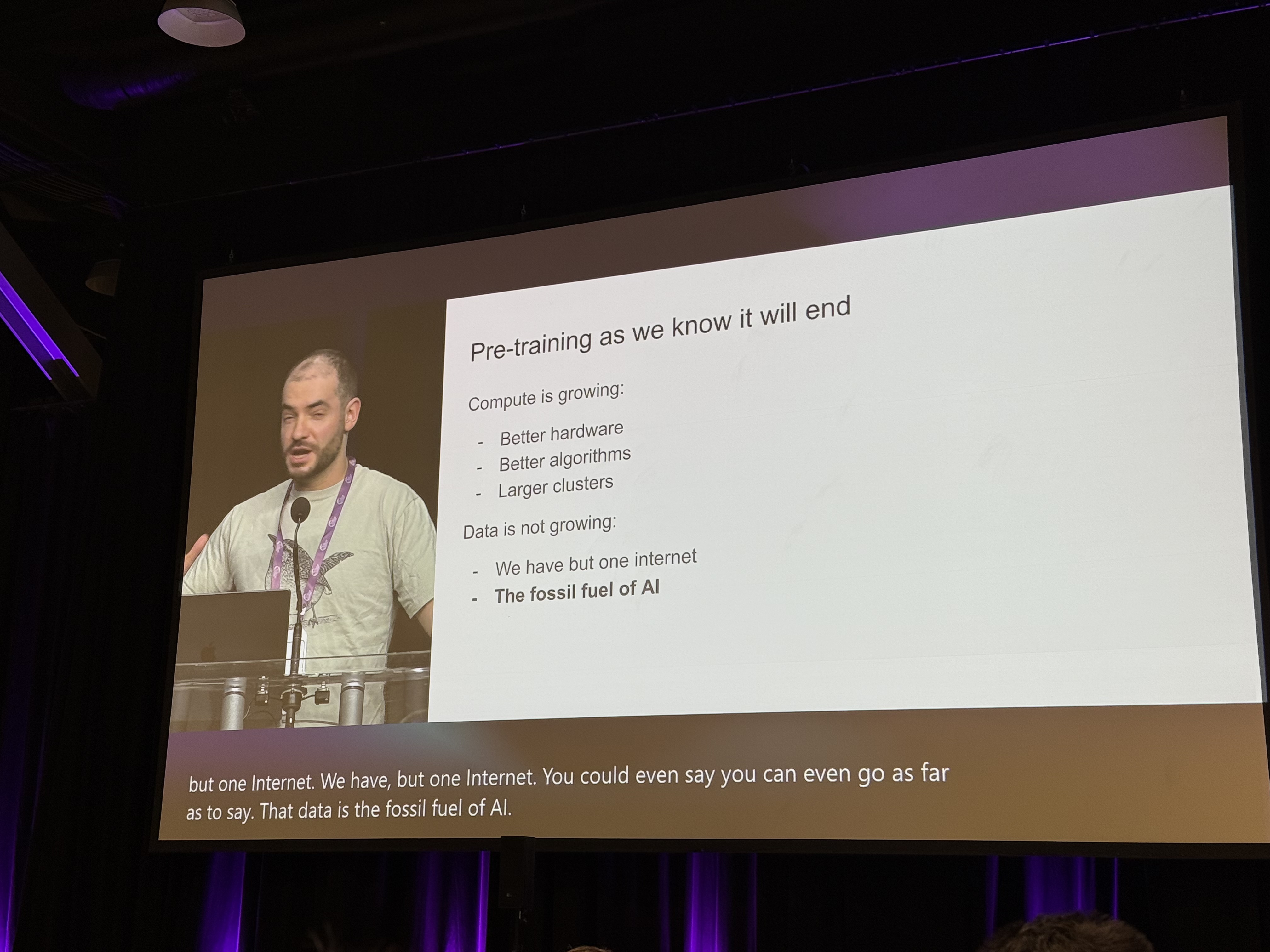

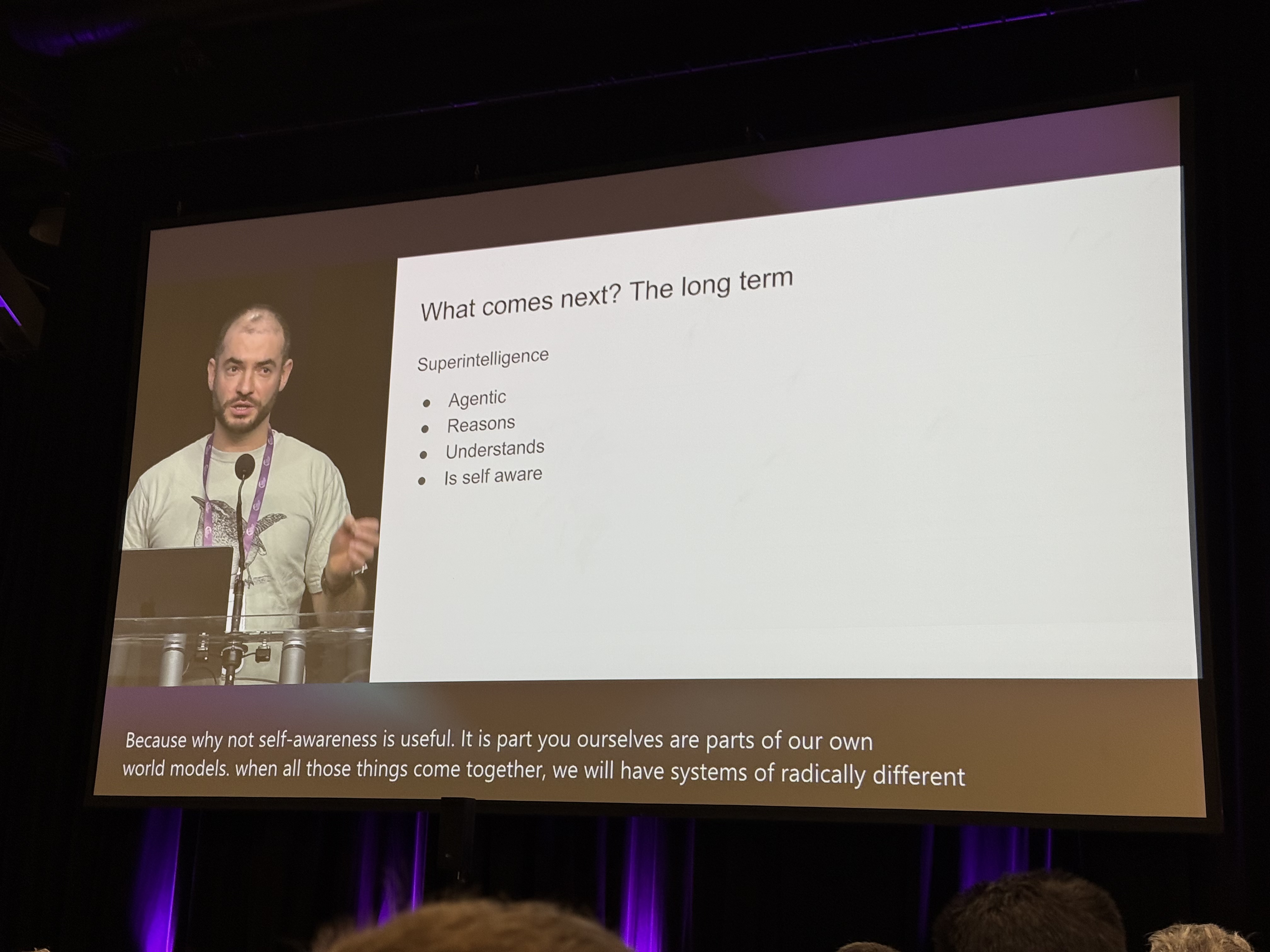

Ilya Sutskever discussed the potential limitations of pre-training in AI, suggesting it may be reaching a bottleneck. He proposed three future directions: Agents, synthetic data, and inference-time computation. He emphasized that data is the new 'fossil fuel' for AI and hinted that pre-training is just the first step toward achieving intelligence. He also explored the possibility of AI developing consciousness, questioning 'Why not?' if consciousness is beneficial.

What is the significance of the 'Test of Time' award at NeurIPS 2024?

The 'Test of Time' award at NeurIPS 2024 recognized two highly influential papers from 2014: Ian Goodfellow's work on Generative Adversarial Networks (GANs) and Ilya Sutskever's paper on Sequence to Sequence Learning with Neural Networks. These papers were pivotal in shaping the field of AI, with GANs revolutionizing generative models and Sequence to Sequence learning laying the groundwork for modern NLP.

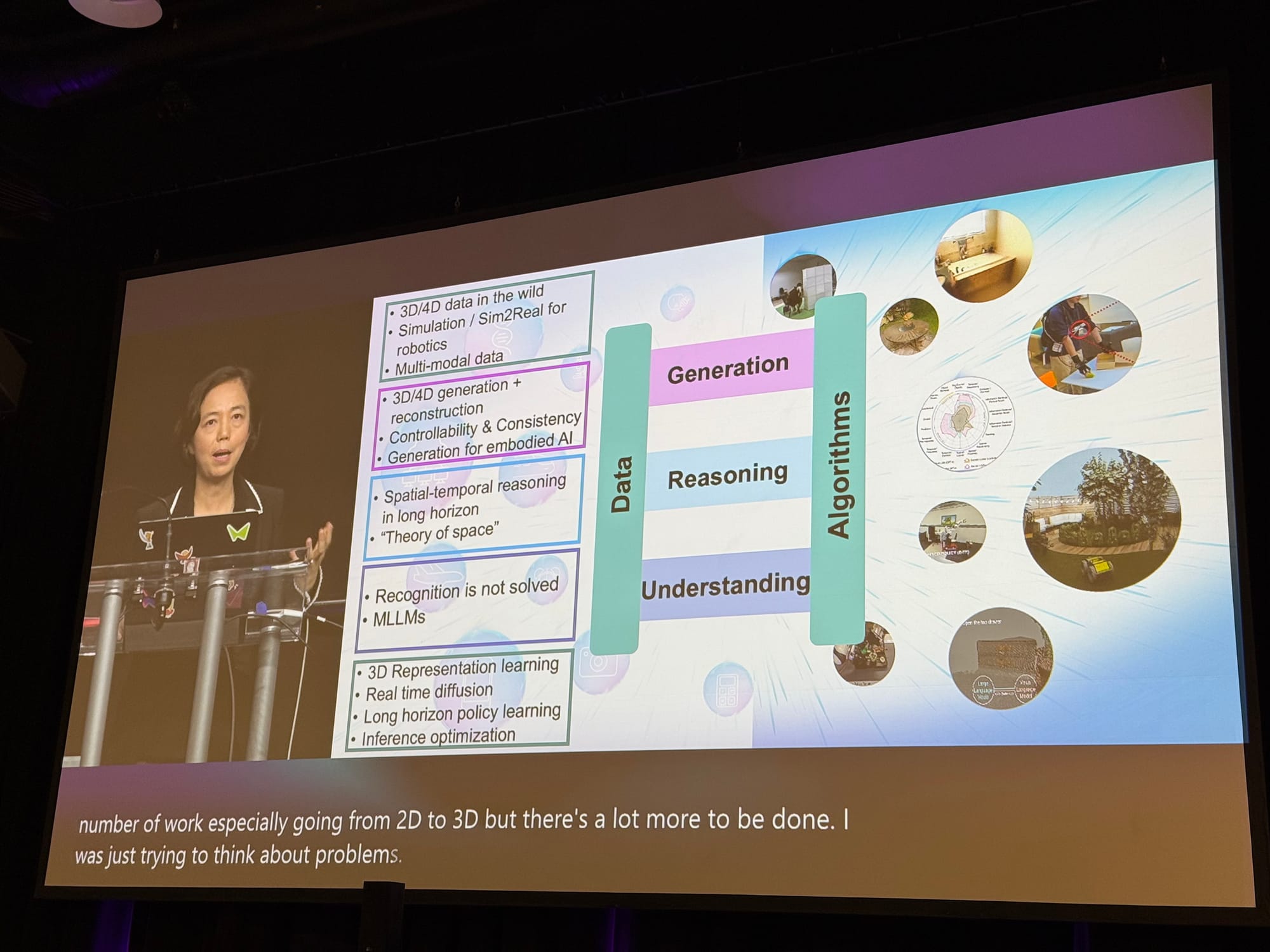

What did Fei-Fei Li emphasize in her talk on spatial intelligence?

Fei-Fei Li introduced the concept of 'Digital Cousin,' emphasizing the need for robots to understand multi-dimensional information such as material, depth, and tactile feedback. She highlighted the importance of virtual world testing for faster generalization and discussed the challenges and opportunities in SIM2REAL (simulation to reality) applications. She also stressed that AI should augment human capabilities rather than replace them.

What were the key advancements in Google's Gemini 2.0 as discussed by Jeff Dean?

Jeff Dean highlighted Gemini 2.0's advancements in multimodal capabilities, including native audio input/output and integrated image generation. He also discussed Project Astra, a personal AI assistant, and Project Mariner, an automated web interaction system. Dean emphasized the importance of coding skills and the need for specialized hardware beyond current TPUs to support future AI developments.

Why is spatial intelligence considered a critical area for future AI development?

Spatial intelligence is crucial because it enables AI systems to understand and interact with the physical world in 3D, which is essential for tasks like robotics and real-world navigation. Fei-Fei Li and other experts argue that moving beyond 2D data to 3D understanding will unlock more complex applications, such as autonomous vehicles and advanced robotics, making it a key area for future AI breakthroughs.

What challenges does the AI industry face regarding data scarcity?

The AI industry faces significant challenges with data scarcity, as current models rely heavily on internet data, which is finite and largely consists of 'result data' rather than process data. Ilya Sutskever likened data to 'fossil fuel,' emphasizing its limited nature. To overcome this, researchers are exploring synthetic data and new methods like inference-time computation to continue scaling AI models.

What is the role of synthetic data in the future of AI?

Synthetic data is seen as a potential solution to the limitations of real-world data. It can be generated to supplement existing datasets, especially in areas where real data is scarce or expensive to collect. However, there are concerns about its diversity and authenticity, as synthetic data is often based on existing distributions and may not introduce truly novel features.

How does Jeff Dean view the future of AI and its impact on jobs?

Jeff Dean believes AI will automate routine and repetitive tasks, freeing humans to focus on more creative and innovative work. He sees AI as a tool to augment human capabilities rather than replace jobs entirely. This shift could lead to a more efficient and productive society, where humans engage in deeper, more meaningful tasks while AI handles mundane responsibilities.

What is the 'Digital Cousin' concept introduced by Fei-Fei Li?

Fei-Fei Li's 'Digital Cousin' concept refers to creating virtual environments that simulate real-world conditions but with variations in factors like lighting, material, and texture. This allows AI systems to generalize better and faster by training in diverse, simulated scenarios. Unlike 'Digital Twin,' which replicates exact conditions, 'Digital Cousin' introduces controlled variations to enhance adaptability.
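The contrast with a digital twin can be illustrated with a small sketch. It is not Fei-Fei Li's method, only a toy reading of the description above: the `Scene` fields, the value lists, and the `make_cousins` helper are all hypothetical names chosen for illustration.

```python
import random
from dataclasses import dataclass, replace

# Hypothetical variation pools; a real simulator would expose far richer options.
MATERIALS = ["wood", "metal", "plastic"]
TEXTURES = ["smooth", "rough", "patterned"]

@dataclass(frozen=True)
class Scene:
    lighting: float  # relative brightness, 1.0 = as captured
    material: str
    texture: str

def make_cousins(twin: Scene, n: int, seed: int = 0) -> list[Scene]:
    """Return n variations of the twin scene with perturbed lighting, material, texture."""
    rng = random.Random(seed)
    cousins = []
    for _ in range(n):
        cousins.append(replace(
            twin,
            lighting=twin.lighting * rng.uniform(0.5, 1.5),  # controlled lighting change
            material=rng.choice(MATERIALS),
            texture=rng.choice(TEXTURES),
        ))
    return cousins

# The twin replicates the captured conditions exactly; the cousins vary them.
twin = Scene(lighting=1.0, material="wood", texture="smooth")
cousins = make_cousins(twin, n=5)
```

A policy trained across `cousins` rather than on `twin` alone sees a spread of conditions, which is the intuition behind the faster generalization claimed above.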

What are the implications of AI's potential to develop consciousness?

The possibility of AI developing consciousness raises profound questions about the nature of intelligence and ethics. Ilya Sutskever suggested that if consciousness is beneficial, there's no reason AI couldn't develop it. This idea challenges traditional views of AI as purely computational and opens up discussions about the ethical and philosophical implications of creating conscious machines.

- NeurIPS has grown from about 300 attendees in its early years (before 2014) to an estimated 15,000+ in 2024.

- About 15,000 papers were submitted, with 4,000-5,000 accepted.

- Key attendees included Fei-Fei Li, Jeff Dean, and Ilya Sutskever.

- The "Test of Time" award recognized seminal papers from 2014: GANs and Sequence to Sequence learning.

Shownotes

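Episode 15 of INDIGO TALK invites two mystery guests from Silicon Valley to bring first-hand conference coverage and in-depth analysis. We discuss Ilya Sutskever's new thinking on how large models can move beyond pre-training, Professor Fei-Fei Li's fresh perspective on spatial intelligence, and the Gemini 2.0 breakthroughs presented by Jeff Dean. How will NeurIPS 2024, the most important conference in AI academia, which has just wrapped up, redefine the direction of AI? Don't miss this timely deep-dive conversation.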

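Guests in this episode

Jay (Silicon Valley AI entrepreneur; identity kept confidential for professional reasons)

Sonya (investor, formerly at Meta)

Indigo (blogger behind 数字镜像)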

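Timeline and content overview

02:15 NeurIPS conference overview

- The 37-year history of NeurIPS

- Growth from about 300 attendees before 2014 to possibly tens of thousands today

- About 15,000 papers submitted this year, with 4,000-5,000 accepted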

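04:04 Key speakers and award-winning papers

- Fei-Fei Li gave a talk in the main hall

- Jeff Dean (Google) took part in a conversation at Turing AI

- Ilya Sutskever (former OpenAI Chief Scientist, now founder of SSI) attended the award ceremony

- The 2014 papers on GANs and Sequence to Sequence learning received the "Test of Time" award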

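06:55 Analysis of Ilya Sutskever's talk

- Pre-training may be reaching a bottleneck

- Three proposed directions: agents, synthetic data, and inference-time compute

- Emphasized that data is the "fossil fuel" of AI

- A comparison of brain size against body weight, hinting that pre-training is only our first step toward intelligence

- Explored the possibility of consciousness and the "Why not?" view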

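18:50 In-depth discussion of data and consciousness

- Analysis of Rich Sutton's views

- The relationship between goals and consciousness

- The future of the Scaling Law

- The importance of physical-world data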

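26:25 Analysis of Fei-Fei Li's talk on spatial intelligence

- Introduced the "Digital Cousin" concept

- Emphasized the multi-dimensional information robots need to understand: material, depth, touch, and so on

- Discussed the importance of testing in virtual worlds

- The challenges and opportunities of SIM2REAL (simulation to reality)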

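37:52 Jeff Dean's talk and Google Gemini

- Gemini 2.0's multimodal capabilities

- Google's accumulated technology and advantages

- The Android XR platform

- The direction of Project Astra and Project Mariner

- Jeff Dean stressed the importance of coding ability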

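54:28 Outlook for the AI industry and thoughts on the future

- SemiAnalysis's "GPU Rich"

- What kinds of companies have an advantage in the AI era?

- Humanity's position and value in the AI era

- The importance of human-to-human connection

- Predictions of a materially more abundant future world

Final takeaway: humans will always have more problems to solve, so we will never run out of work; the key question is what kind of work we do...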

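Memorable quotes from the conversation

Ilya Sutskever: "We only have one internet." He used this line to illustrate the bottleneck now facing AI training data, hinting that new data sources and training methods will have to be explored.

Ilya Sutskever on consciousness: "If consciousness is beneficial, why couldn't AI develop consciousness?" The question sparked an in-depth discussion.

Fei-Fei Li: "We are not here to replace humans, but to empower humans." By crossing out "replace", she stressed that AI is fundamentally about augmenting human capabilities, not substituting for people.

Jeff Dean, on Gemini's development: "We want the model not just to handle a single modality, but to understand and generate multimodal content as naturally as humans do."

Jay's insight on data: "The data on the internet today is all result data, with no process data. That is why the machine always just flashes and hands you a result."

Jay on spatial intelligence: "Data collection is a very important and very challenging step; inferring 3D structure directly from 2D data is not easy."

Sonya's outlook: "The future society will be a world of great material abundance, because from medical care to daily life, AI can help us meet basic needs."

The host's closing summary: "Humans always have more problems to solve, so we will always have work; it is only a question of what work."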

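Summary of Ilya Sutskever's talk

I have organized the key points from the recording transcript in chronological order:

Looking back at the work from ten years ago (2014)

- Their work centered on three elements: an autoregressive model, a large neural network, and a large dataset

- Proposed the Deep Learning Hypothesis: a neural network with 10 layers can do anything a human can do in a fraction of a second

- 10 layers was chosen because that was the deepest network they could train at the time

- They genuinely believed that a well-trained autoregressive neural network would capture the desired distribution over sequences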
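The autoregressive idea in this list can be written as a chain-rule factorization. This is a sketch in generic notation, not taken from the talk: $x_1, \dots, x_T$ is an assumed input sequence and $y_1, \dots, y_{T'}$ an assumed output sequence.

$$
p(y_1, \dots, y_{T'} \mid x_1, \dots, x_T) = \prod_{t=1}^{T'} p(y_t \mid x_1, \dots, x_T,\ y_1, \dots, y_{t-1})
$$

Each factor is a next-token prediction. If the network models every factor well, the product is the full sequence distribution, which is why training a next-token predictor was expected to yield the sequence distribution they wanted.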

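How the technology evolved

- Over the decade, the field moved from the LSTM (described as "a ResNet rotated 90 degrees") to the Transformer

- Proposed the Scaling hypothesis: with a large enough dataset and neural network, success is guaranteed

- Stressed the importance of Connectionism: the similarity between artificial and biological neurons gives us reason to believe large neural networks can perform many human tasks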

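The pre-training era and its limits

- Pre-trained models such as GPT-2 and GPT-3 drove the field's progress

- The pre-training era will eventually end because data growth is limited: "We only have one internet"

- Ilya compared data to "the fossil fuel of AI": it is a finite resource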

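Looking ahead:

- Agents may be one future direction

- Synthetic data is growing in importance

- Inference-time compute (for example, the o1 model) has shown promise

- Taking inspiration from biology: for human ancestors (such as early hominids), the relationship between brain size and body weight follows a different slope. This means a genuinely different developmental path can emerge during evolution, and the scaling we currently see in AI may be only the first kind of scaling we have discovered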

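Characteristics of superintelligence:

- Future AI systems will be truly agentic

- They will be able to reason, but the more they reason, the less predictable they become

- They will be able to understand things from limited data

- They will be self-aware (if consciousness is needed, let them have it)

- Combined, these traits will produce a qualitative leap, quite unlike existing systems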

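The Q&A also covered biological inspiration and hallucination:

At an abstract level, biologically inspired AI has in some sense been very successful (learning mechanisms, for example), but the inspiration is limited to a very basic level ("let's use neurons"). If someone discovers an important mechanism in the brain that everyone has overlooked, they should pursue it, and it may lead to new breakthroughs.

Ilya believes that future models with reasoning ability may be able to self-correct their hallucinations.

As for SSI, he dropped only the hints above and said nothing more.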

Key points from Fei-Fei Li's talk

The evolution of visual intelligence: from basic understanding, to reasoning, to generation. This progression has gone hand in hand with advances in both data and algorithms.

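The shift from 2D to 3D:

- Today's AI mostly remains at the level of the "flat world" (the 2D world)

- The real world is 3D; achieving true visual intelligence requires moving to Spatial Intelligence

- 3D spatial understanding is essential for more complex tasks such as robotic manipulation

The social value of AI:

- AI should be seen not as "replacing" humans but as "augmenting" human capabilities

- Examples of how AI can augment healthcare, assistance for people with disabilities, creative work, and other fields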

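Views on and expectations for Spatial Intelligence:

Technical directions:

- 3D/4D data capture and simulation

- Multimodal data integration

- 3D generation and reconstruction

- Spatio-temporal reasoning

- Representation learning

- Real-time policy learning and optimization

Application areas:

- Robot learning and control

- Real-time scene understanding

- Spatial reasoning

- 3D content generation

Looking ahead:

- Spatial Intelligence will become the key link connecting perception, learning, and action

- More 3D datasets and simulation environments are needed

- Real interaction with the physical world matters more than 2D understanding alone

- The hope is to achieve more complex spatio-temporal reasoning

Fei-Fei Li particularly stressed that interacting with and understanding the real world is far more complex than the 2D world, but also more meaningful. She sees Spatial Intelligence as an important direction for future AI development, one that will help AI systems better understand and interact with the real world.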

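Summary of Jeff Dean's interview

Early neural network experience:

- Jeff's first exposure came in 1990, as an undergraduate at the University of Minnesota

- He took a parallel computing course that introduced neural networks

- He wrote an honors thesis on parallel training strategies for neural networks

- He implemented early versions of model parallelism and data parallelism

- He realized early on that far more compute was needed ("a million times, not 32 times")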
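Data parallelism, one of the two strategies named in this list, can be illustrated with a toy example. This is a minimal sketch and not Jeff Dean's thesis code: the 1-D model `y = w * x`, the helper names, and the sample data are all assumptions made for illustration.

```python
def grad_mse(w, xs, ys):
    """Gradient of mean squared error with respect to w for the 1-D model y = w * x."""
    n = len(xs)
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n

def data_parallel_grad(w, xs, ys, num_workers):
    """Split the batch into equal shards, compute one gradient per shard, then average.

    Assumes len(xs) is divisible by num_workers; each loop iteration stands in
    for a separate worker that holds a full copy of the model (here, just w).
    """
    shard = len(xs) // num_workers
    grads = []
    for k in range(num_workers):
        lo, hi = k * shard, (k + 1) * shard
        grads.append(grad_mse(w, xs[lo:hi], ys[lo:hi]))
    return sum(grads) / num_workers

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
full = grad_mse(0.5, xs, ys)                               # single-machine gradient
parallel = data_parallel_grad(0.5, xs, ys, num_workers=2)  # averaged shard gradients
```

With equal-sized shards the averaged gradient matches the full-batch gradient, so adding workers changes the wall-clock time rather than the update. Model parallelism instead splits the network itself across workers and is not shown here.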

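Development of Google Brain:

- Met Andrew Ng at Google around 2011

- Launched the "DistBelief" project for large-scale neural network training

- Used 2,000 machines / 16,000 cores to train early computer vision and speech models

- Had the early insight that "bigger models + more data = better results"

- Focused on scaling up training and solving real problems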

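Integration with DeepMind (the two were initially complementary)

Brain team: large-scale training, practical applications

DeepMind: small-scale models, reinforcement learning

- Around the end of 2022, as their research areas converged, the two teams moved to merge (the combined Google DeepMind was announced in April 2023)

- This led to the Gemini project, which combines the expertise of both teams

- The DeepMind name was chosen for better public recognition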

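Recent developments (Gemini 2.0)

- New capabilities including native audio input/output

- Integrated image generation

- Project Astra: a personal AI assistant with multimodal capabilities

- Project Mariner: an automated web interaction system

- A focus on safety guardrails and controlled deployment

Future trends Jeff highlighted:

- More interleaved multimodal processing

- A need for specialized hardware beyond today's TPUs

- Interest in more modular and sparse model architectures

- Software engineering will evolve but remain essential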

Award-winning papers

Generative Adversarial Networks

Sequence to Sequence Learning with Neural Networks

Videos recommended in the conversation

WTF is Artificial Intelligence Really? | Yann LeCun x Nikhil Kamath | People by WTF Ep #4

Rich Sutton's new path for AI | Approximately Correct Podcast

Gemini 2.0 and the evolution of agentic AI with Oriol Vinyals