“IAPS: Mapping Technical Safety Research at AI Companies” by Zach Stein-Perlman

LessWrong (30+ Karma)


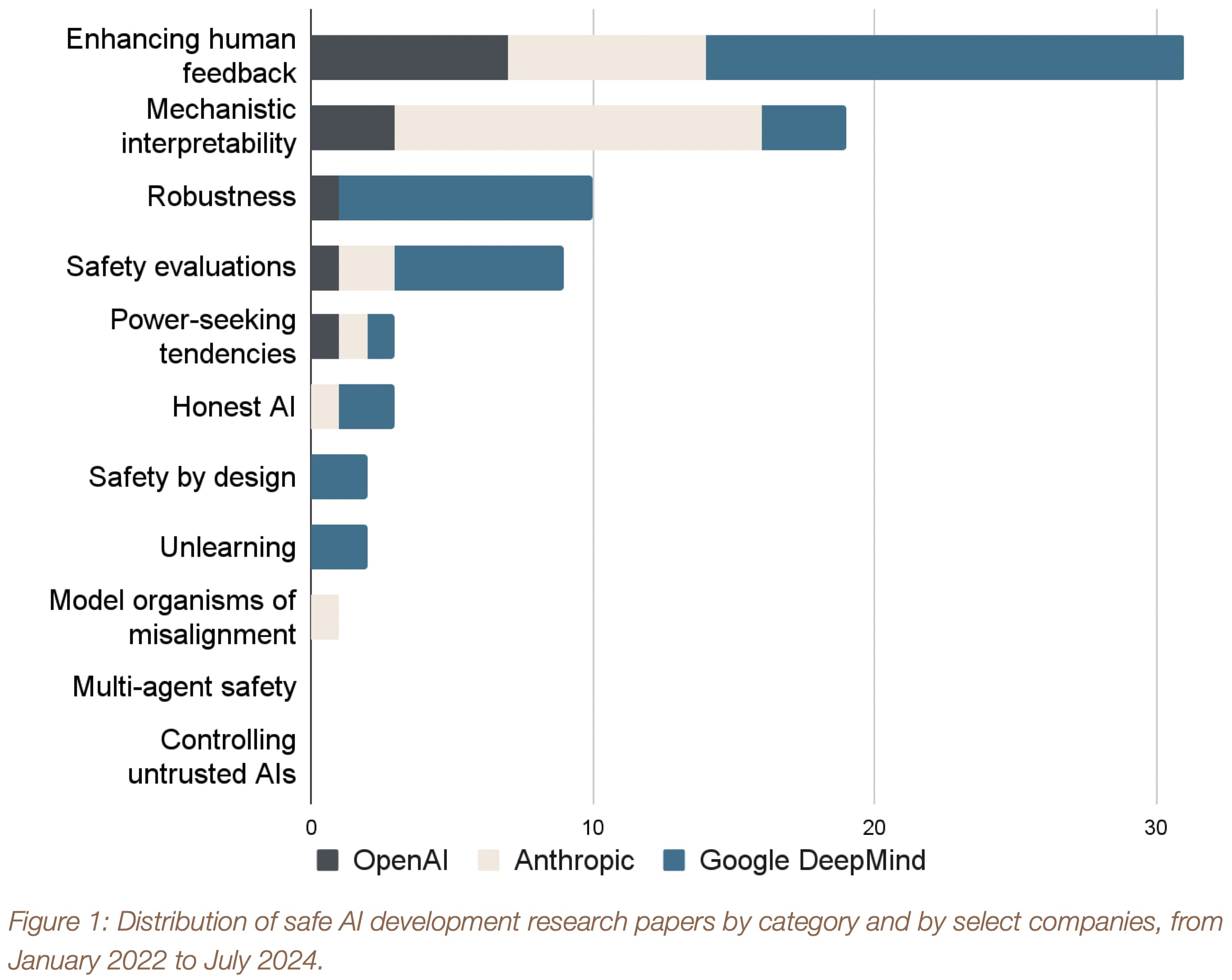

This is a link post. As artificial intelligence (AI) systems become more advanced, concerns about large-scale risks from misuse or accidents have grown. This report analyzes the technical research into safe AI development being conducted by three leading AI companies: Anthropic, Google DeepMind, and OpenAI.

We define “safe AI development” as developing AI systems that are unlikely to pose large-scale misuse or accident risks. This encompasses a range of technical approaches aimed at ensuring AI systems behave as intended and do not cause unintended harm, even as they are made more capable and autonomous.

We analyzed all papers published by the three companies from January 2022 to July 2024 that were relevant to safe AI development, and categorized the 80 included papers into nine safety approaches. Additionally, we noted two categories representing nascent approaches explored by academia and civil society, but not currently represented in any research papers by these [...]

The original text contained 1 image which was described by AI.

First published: October 24th, 2024

---

Narrated by TYPE III AUDIO.
