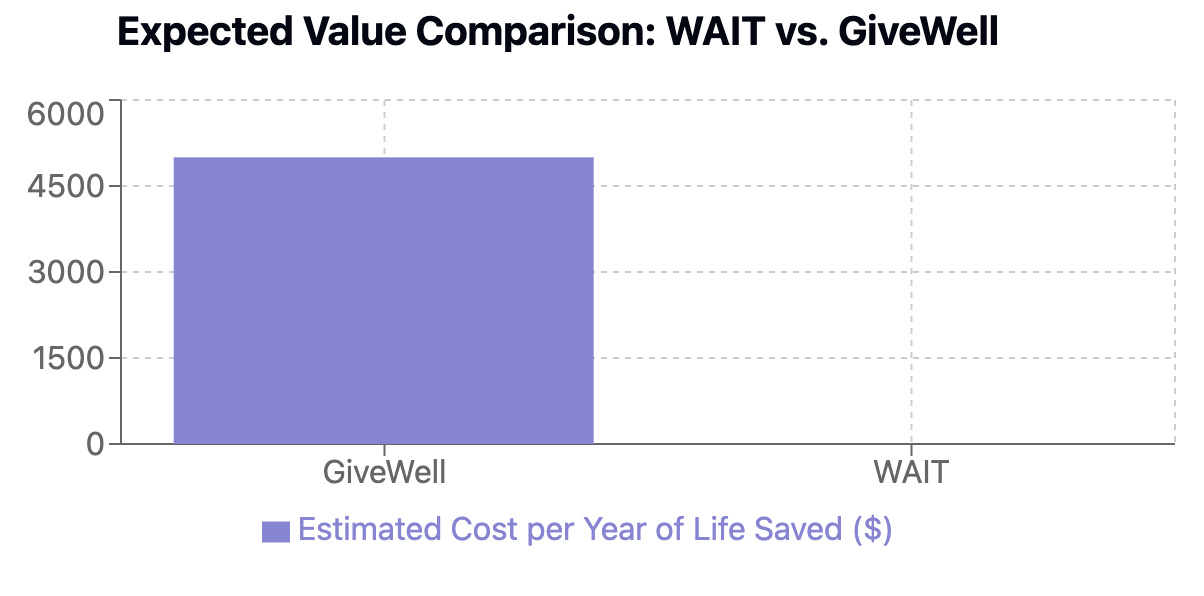

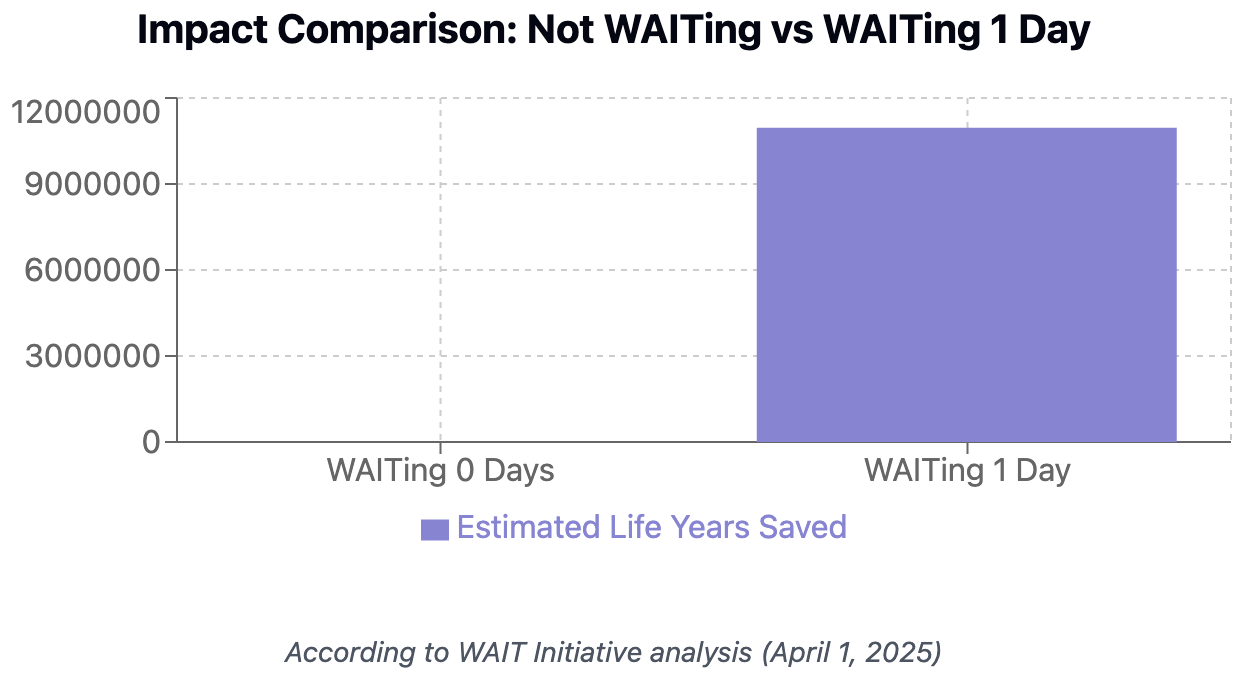

The EA/rationality community has struggled to identify robust interventions for mitigating existential risks from advanced artificial intelligence. In this post, I introduce the WAIT (Wasting AI researchers' Time) Initiative, a new strategy for delaying the development of advanced AI while saving lives roughly 2.2 million times [-5 times, 180 billion times] as cost-effectively as leading global health interventions. This post will discuss the advantages of WAITing, highlight early efforts to WAIT, and address several common questions.

Early logo draft courtesy of Claude.

**Theory of Change**

Our high-level goal is to systematically divert AI researchers' attention away from advancing capabilities and towards more mundane and time-consuming activities. This approach simultaneously (a) buys AI safety researchers time to develop more comprehensive alignment plans and (b) directly saves millions of life-years in expectation (see our cost-effectiveness analysis below). Some examples of early interventions we're piloting include:

- Bureaucratic Enhancement: Increasing administrative [...]

Outline:

(00:50) Theory of Change

(03:43) Cost-Effectiveness Analysis

(04:52) Answers to Common Questions

First published: April 1st, 2025

Source: https://www.lesswrong.com/posts/9jd5enh9uCnbtfKwd/introducing-wait-to-save-humanity

---

Narrated by TYPE III AUDIO.


Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.
