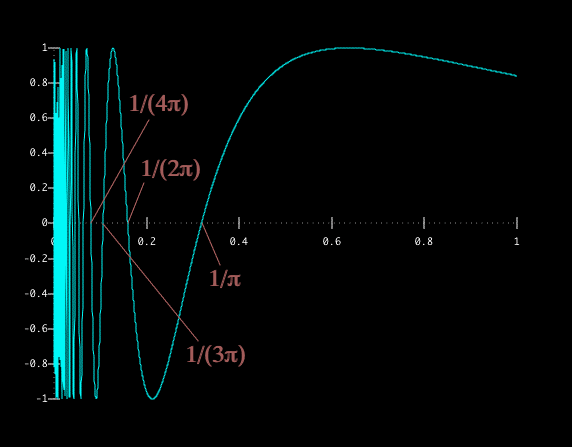

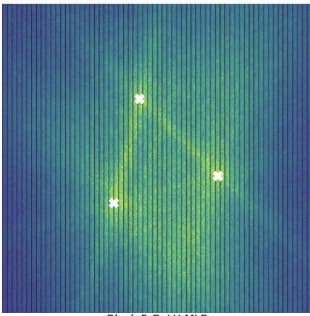

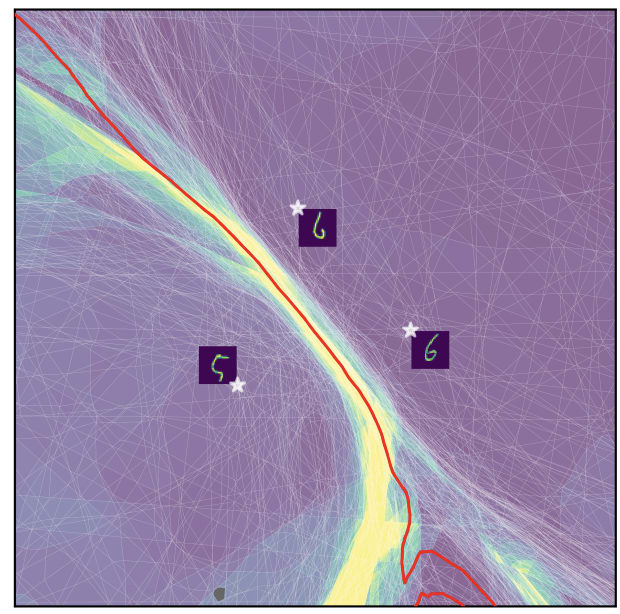

[Epistemic status: slightly ranty. This is a lightly edited Slack chat, and so may be lower-quality.] I am surprised by the perennial spikes in excitement about "polytopes" and "tropical geometry on activation space" in machine learning and interpretability[1]. I'm not going to discuss tropical geometry in depth in this post (I might save it for later -- it will have to wait until I'm in a less ranty mood[2]). As I'll explain below, I think some interesting questions and insights can be extracted by suitably weakening the "polytopes" picture, and a core question it opens up (that of "statistical geometry" -- see below) is very deep and worth studying much more systematically. However, if you take it directly as a study of rigid mathematical objects (polytopes) that appear as locally linear domains in neural net classification, what you are looking at is, to leading order, a geometric form of noise.

January 25th, 2025

Source: https://www.lesswrong.com/posts/GdCCiWQWnQCWq9wBE/on-polytopes

---

Narrated by TYPE III AUDIO.

Images from the article: