
(Audio version here (read by the author), or search for "Joe Carlsmith Audio" on your podcast app. This is the third essay in a series that I’m calling “How do we solve the alignment problem?”. I’m hoping that the individual essays can be read fairly well on their own, but see this introduction for a summary of the essays that have been released thus far, and for a bit more about the series as a whole.)

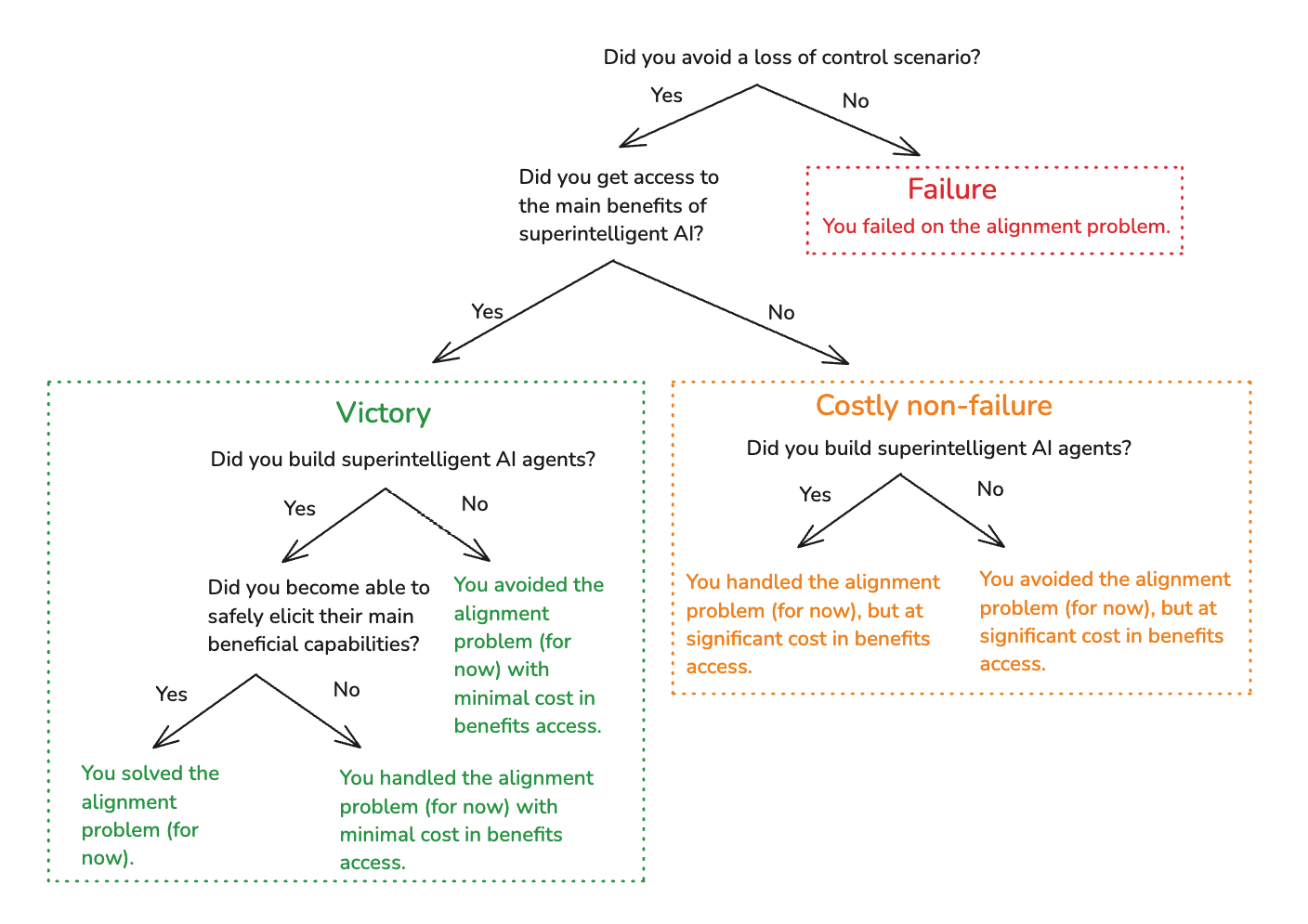

**1. Introduction**

The first essay in this series defined the alignment problem; the second tried to clarify when this problem arises. In this essay, I want to lay out a high-level picture of how I think about getting from here either to a solution, or to some acceptable alternative. In particular:

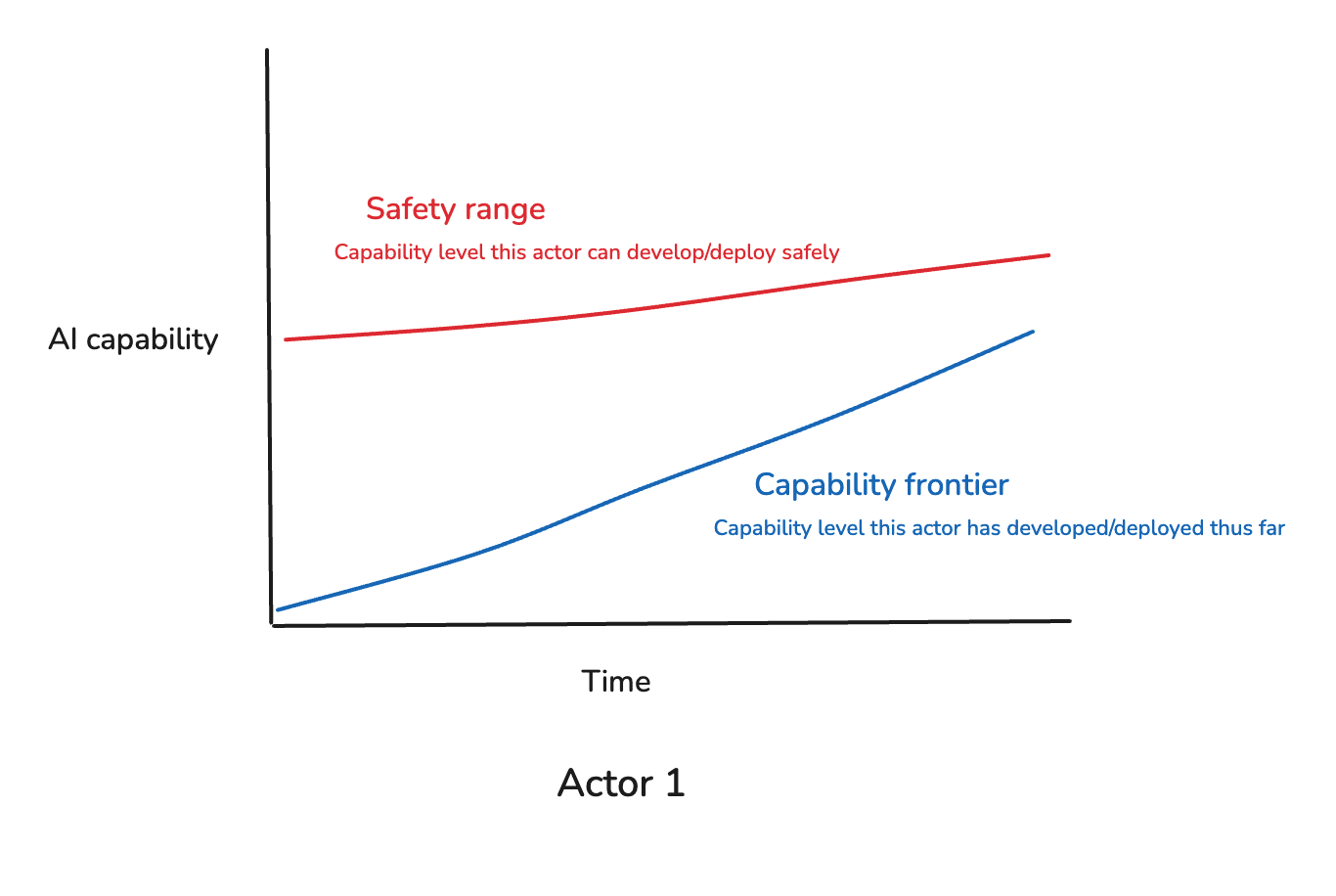

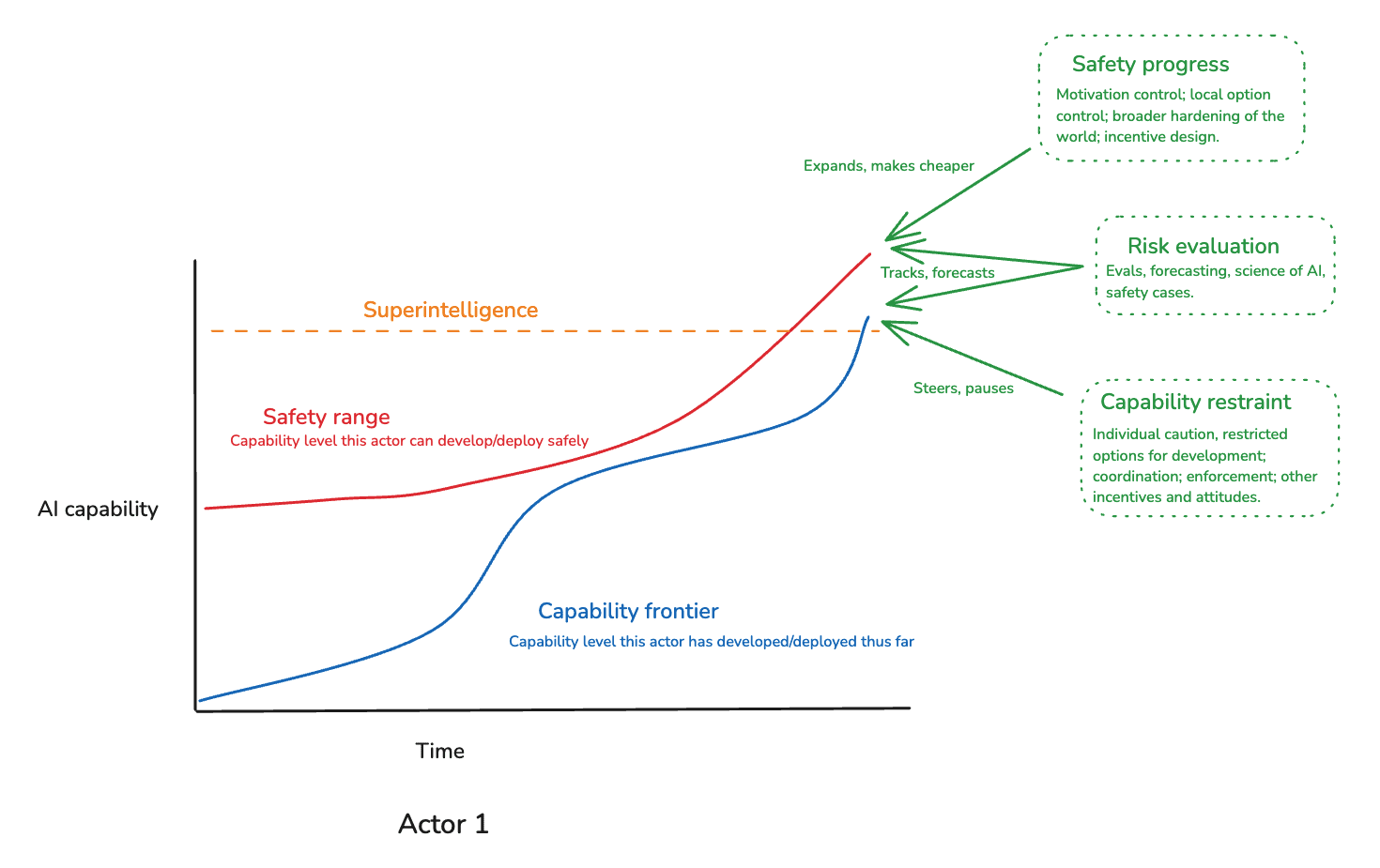

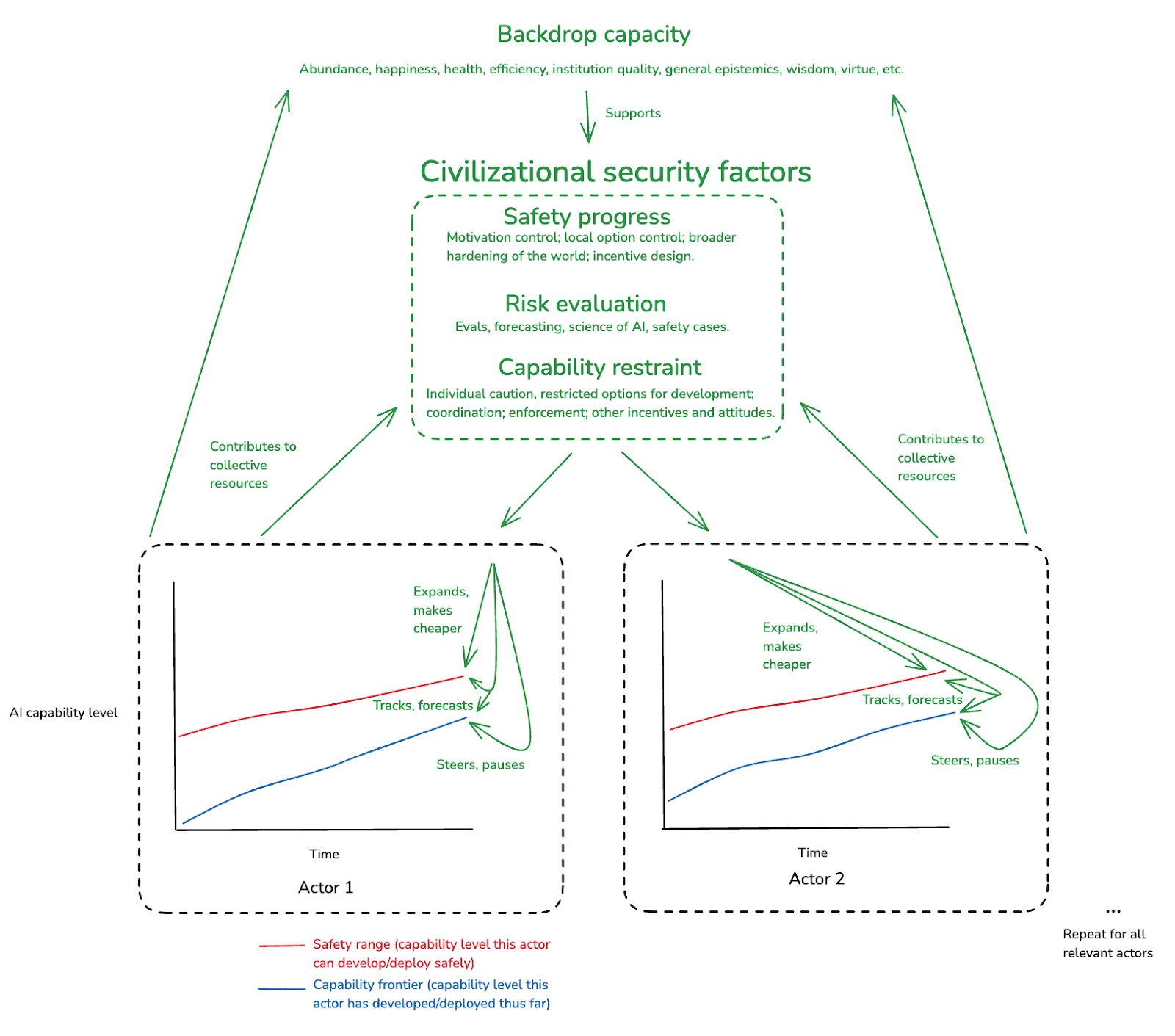

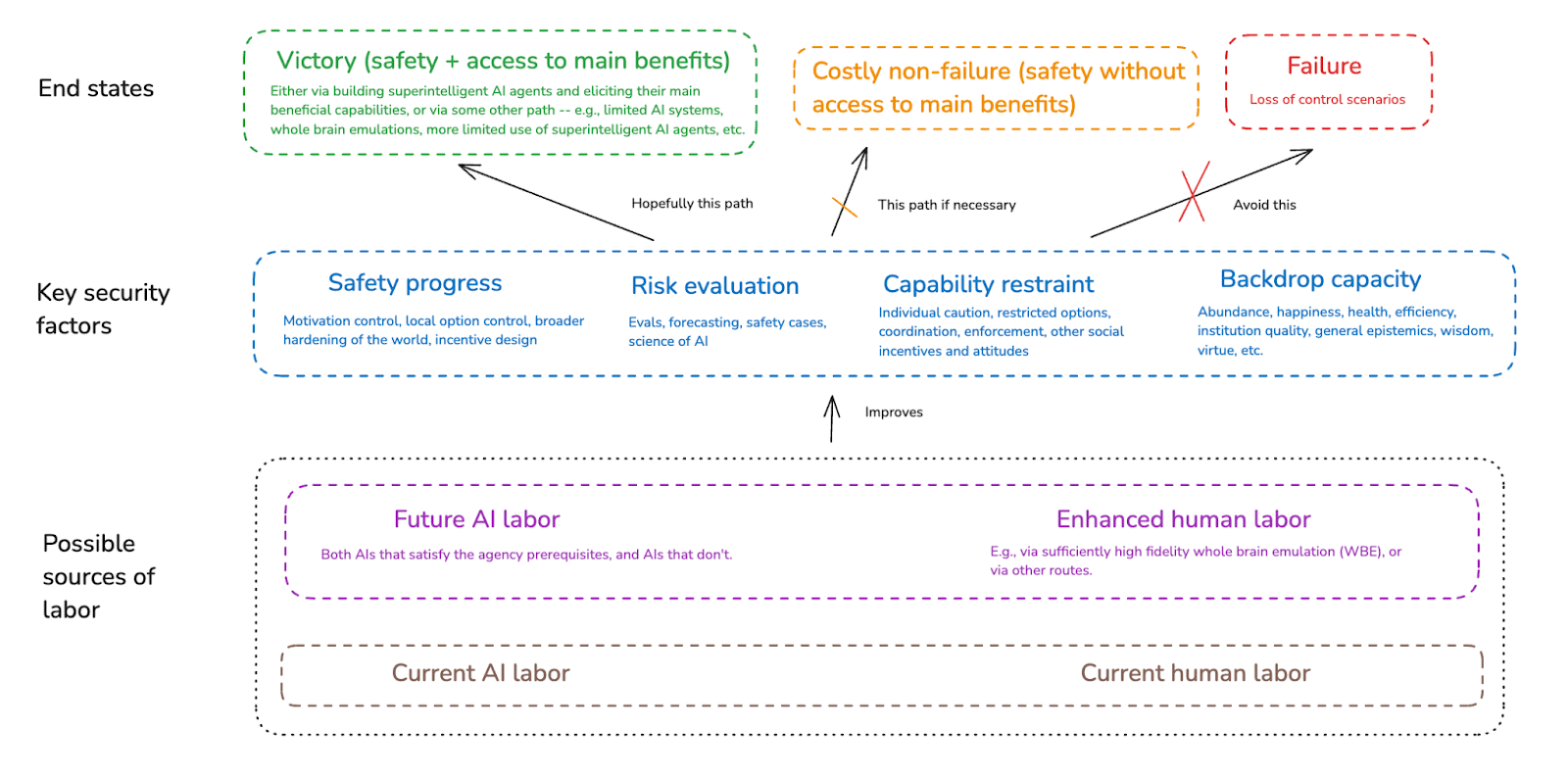

I distinguish between the underlying technical parameters relevant to the alignment problem (the “problem profile”) and our civilization's capacity to respond [...]

Outline:

(00:28) 1. Introduction

(02:26) 2. Goal states

(03:12) 3. Problem profile and civilizational competence

(06:57) 4. A toy model of AI safety

(15:56) 5. Sources of labor

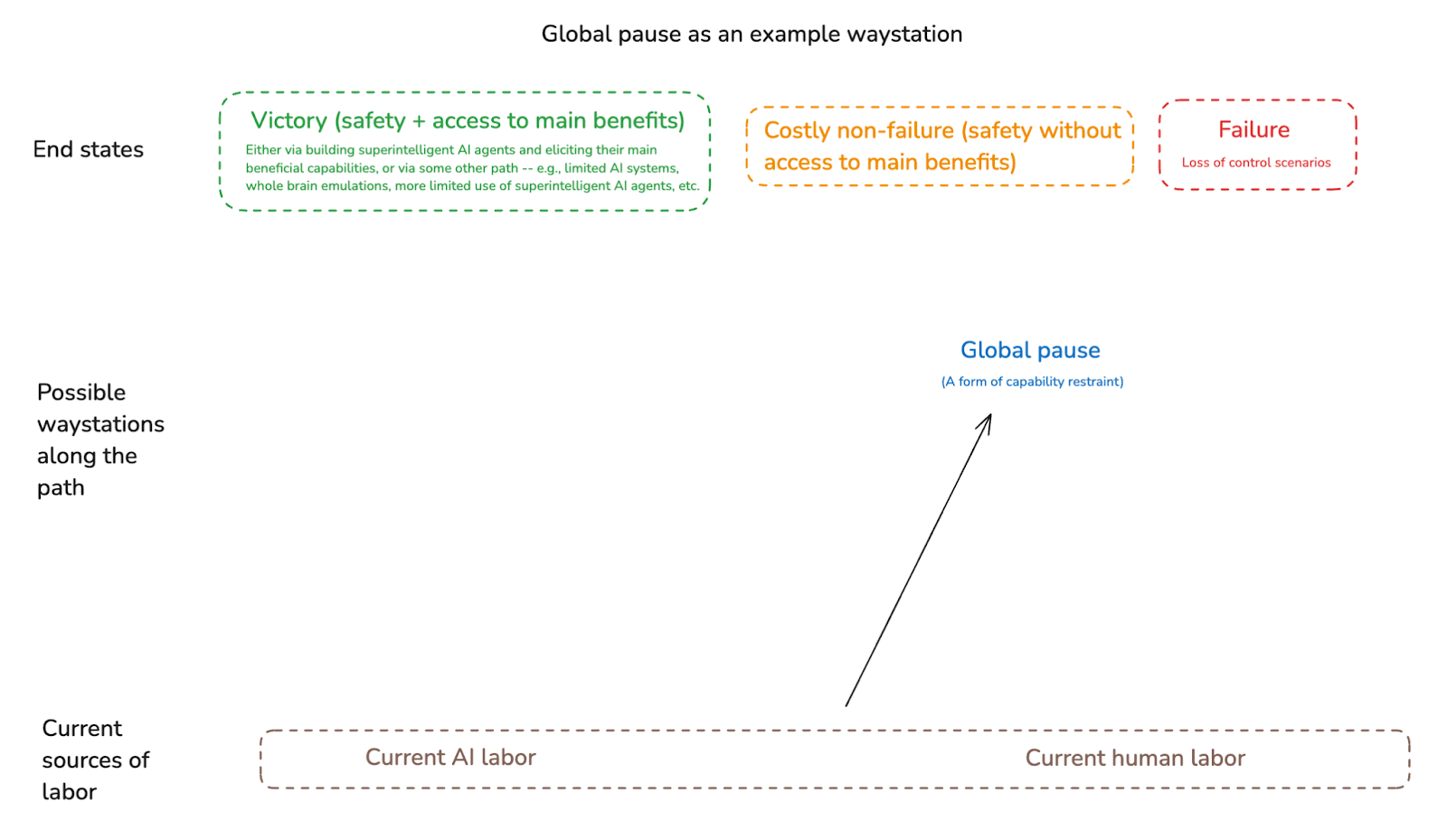

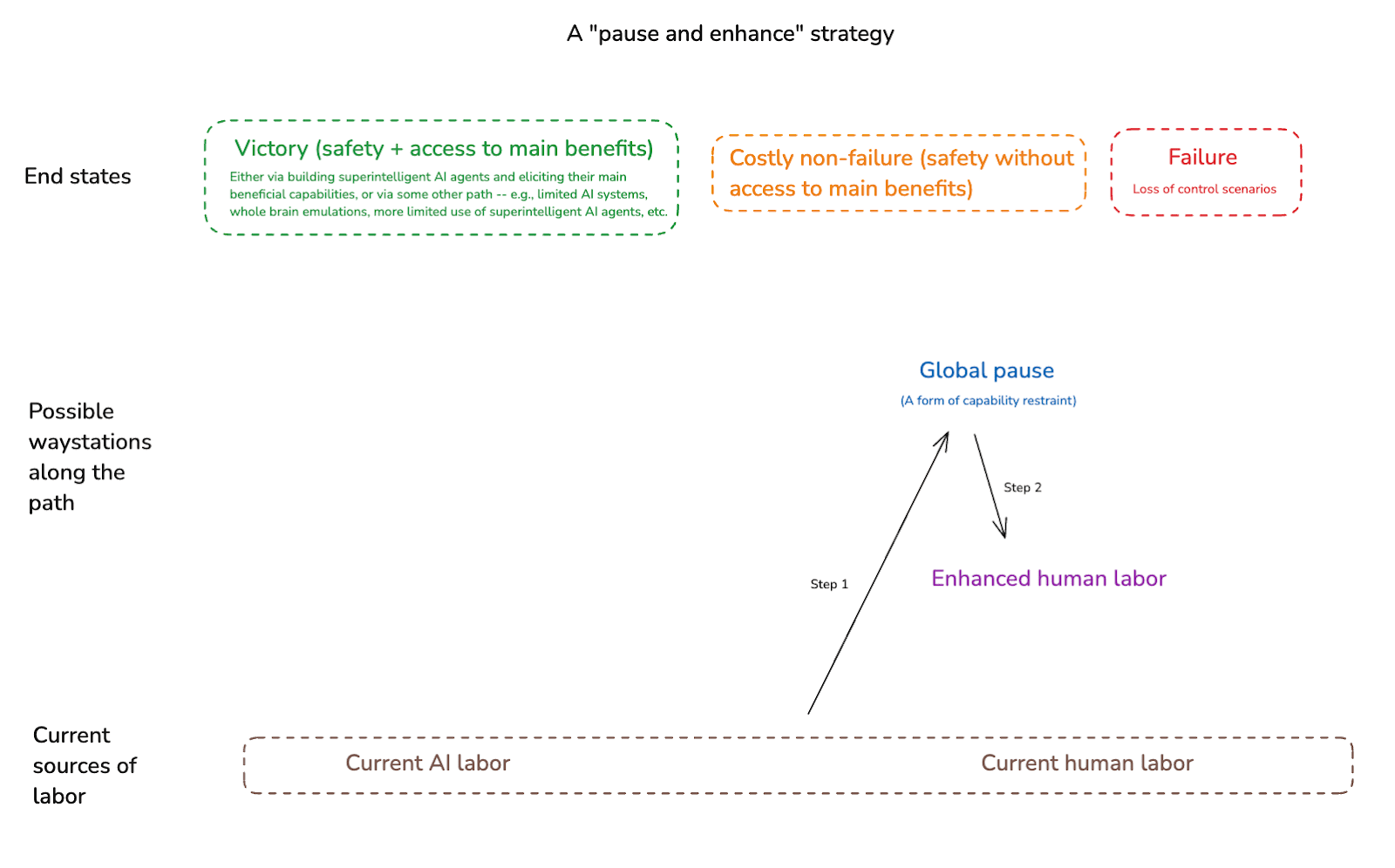

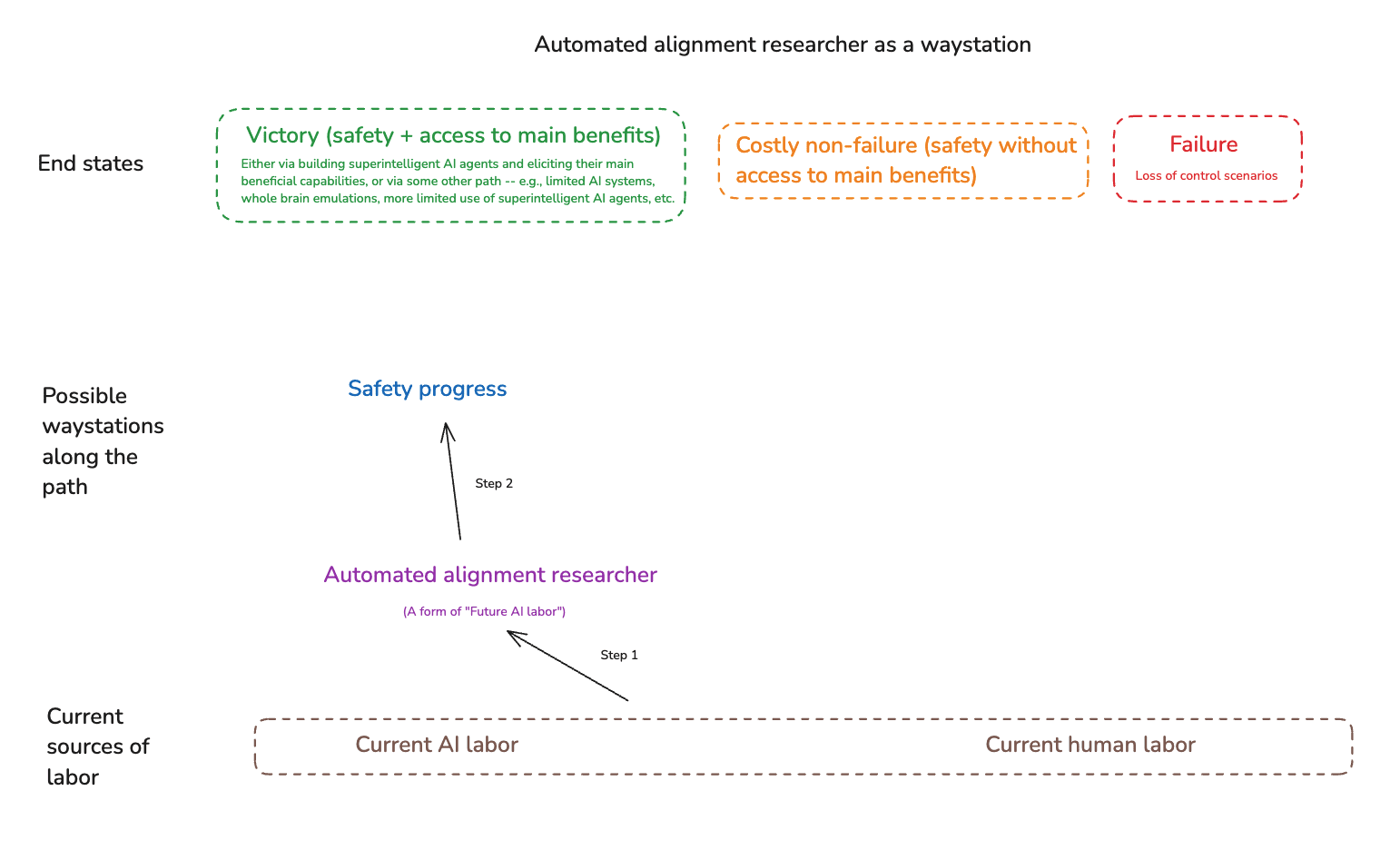

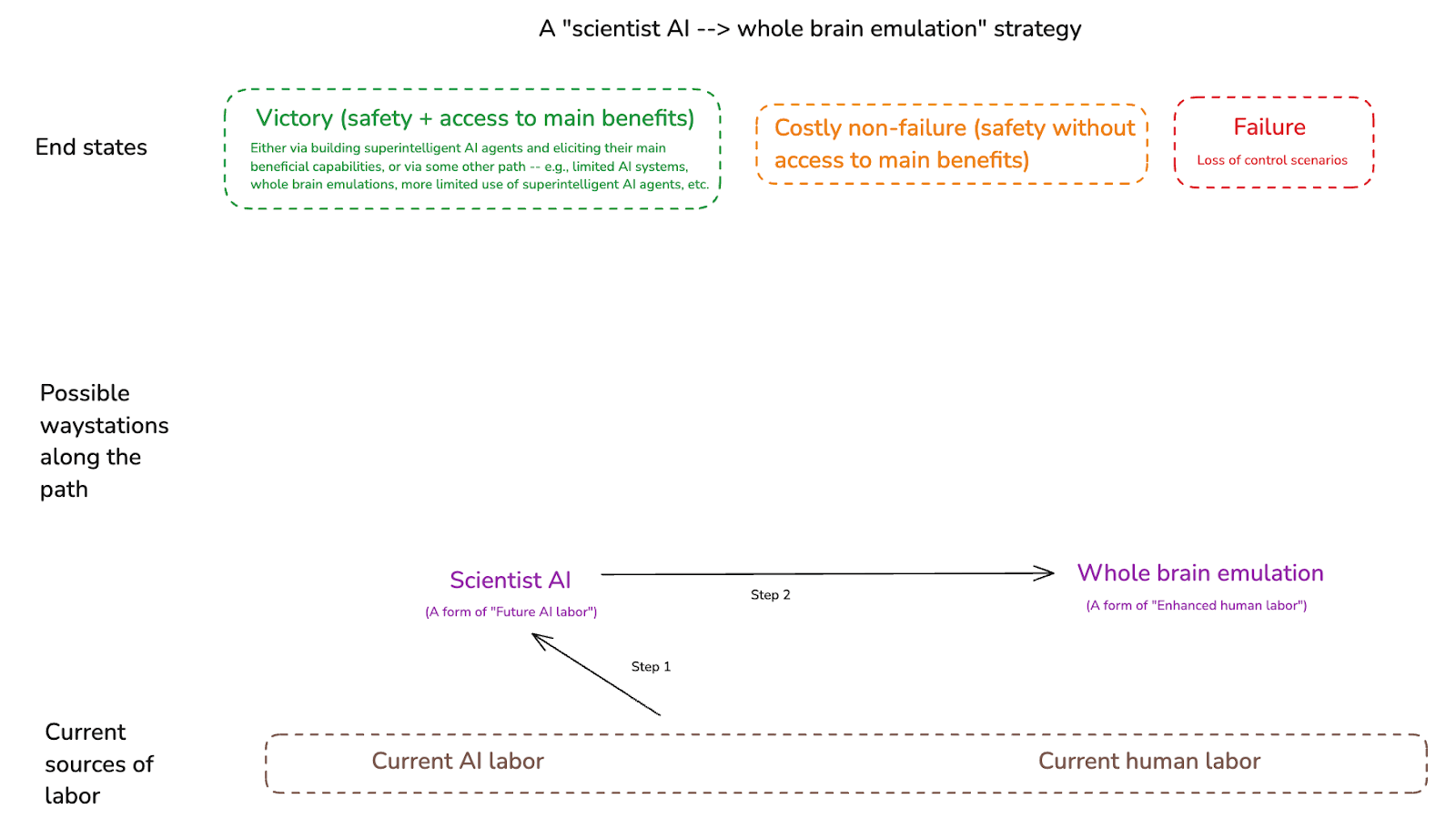

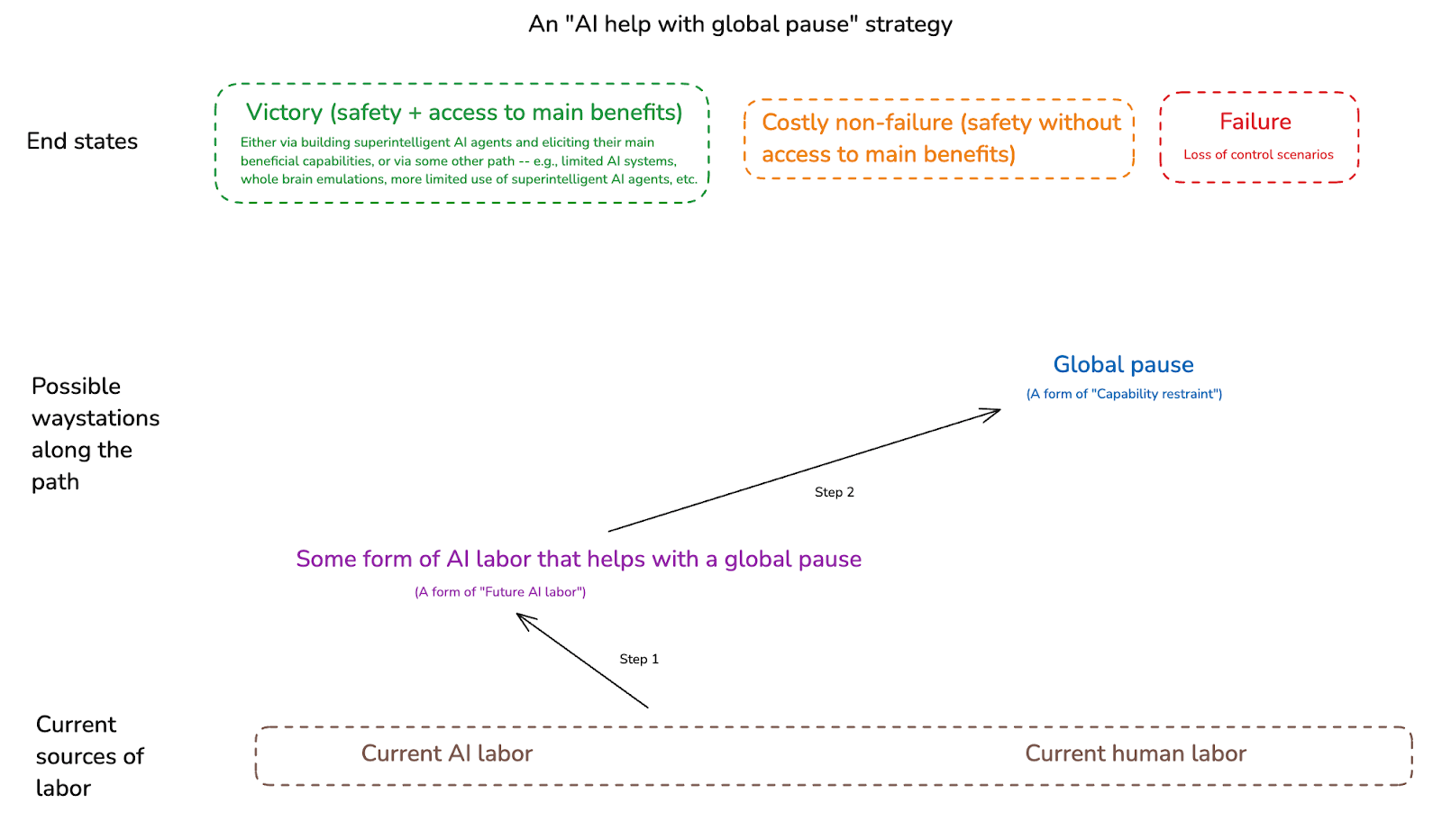

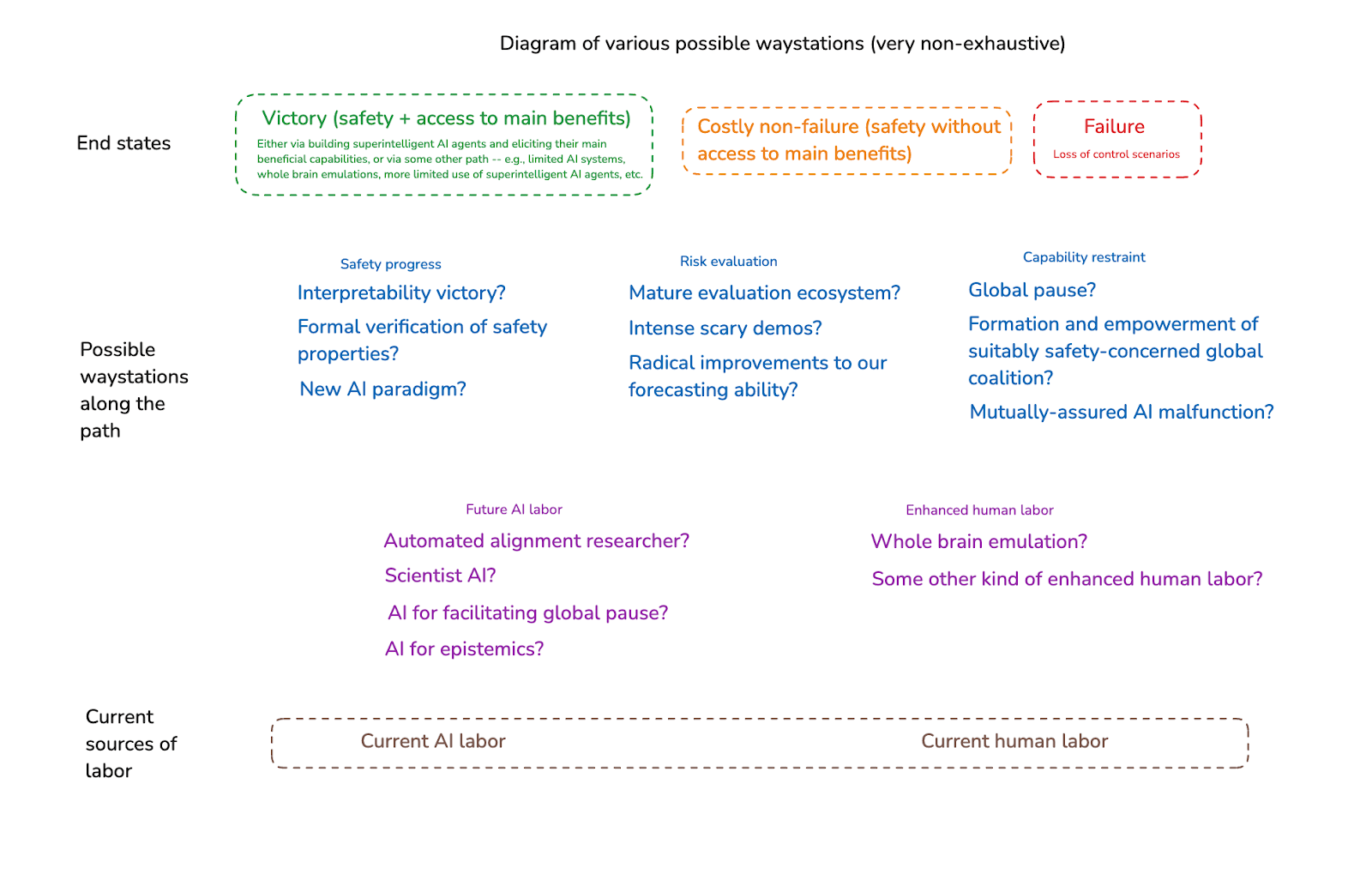

(19:09) 6. Waystations on the path

The original text contained 34 footnotes which were omitted from this narration.

The original text contained 11 images which were described by AI.

First published: March 11th, 2025

Source: https://www.lesswrong.com/posts/kBgySGcASWa4FWdD9/paths-and-waystations-in-ai-safety-1

---

Narrated by TYPE III AUDIO.
