“Toward Safety Cases For AI Scheming” by Mikita Balesni, Marius Hobbhahn

LessWrong (30+ Karma)


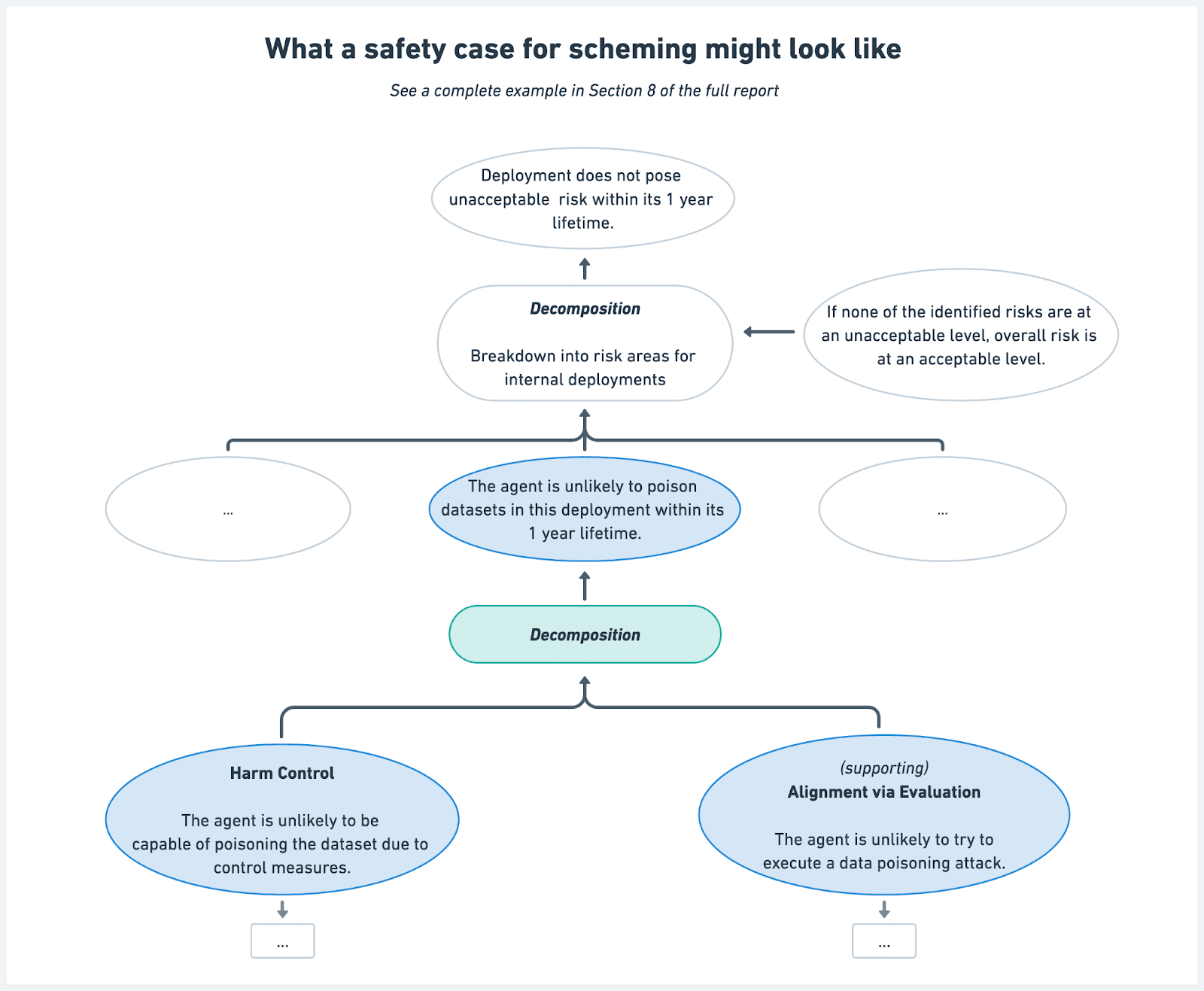

Developers of frontier AI systems will face increasingly challenging decisions about whether their AI systems are safe enough to develop and deploy. One reason a system may not be safe is that it engages in scheming. In our new report "Towards evaluations-based safety cases for AI scheming", written in collaboration with researchers from the UK AI Safety Institute, METR, Redwood Research and UC Berkeley, we sketch how developers of AI systems could make a structured rationale, a "safety case", that an AI system is unlikely to cause catastrophic outcomes through scheming.

Note: This is a small step in advancing the discussion. We think it currently lacks crucial details that would be required to make a strong safety case. Read the full report.

Figure 1. A condensed version of an example safety case sketch, included in the report. Provided for illustration.

**Scheming and Safety Cases** For [...]

Outline:

(01:13) Scheming and Safety Cases

(02:01) Core Arguments and Challenges

(03:42) Safety cases in the near future

The original text contained 1 footnote which was omitted from this narration.

The original text contained 1 image which was described by AI.

First published: October 31st, 2024

Source: https://www.lesswrong.com/posts/FXoEFdTq5uL8NPDGe/toward-safety-cases-for-ai-scheming-1

---

Narrated by TYPE III AUDIO.
