#184 - OpenAI's Voice 2.0 + execs quitting, Llama 3.2, To CoT or not to CoT?

Last Week in AI

Deep Dive

Why is OpenAI rolling out Advanced Voice Mode with more voices and a new look?

OpenAI is enhancing the user experience by adding more voices and a new design, making the AI assistant more versatile and engaging. The update includes custom instructions and five new voices, increasing the total to nine. This feature is primarily available in the US and represents a significant step in the evolution of conversational AI, allowing for more natural and real-time interactions.

Why is Meta paying celebrities millions of dollars for their voices in AI chatbots?

Meta is investing in celebrity voices to make their AI chatbots more appealing and engaging, hoping to increase user interaction with AI features across their platforms like Instagram, WhatsApp, and Facebook. This move is part of their strategy to attract users and demonstrate the capabilities of their AI, particularly in the realm of voice-based interactions.

Why is Groq partnering with Aramco to build a massive data center in Saudi Arabia?

Groq, a chip startup, is partnering with Aramco to build a data center with 19,000 language processing units initially, and potentially up to 200,000 units. This partnership is aimed at providing significant AI infrastructure to Saudi Arabia, a country that maintains neutral relations between the West and other nations. The move is strategic for Groq to scale its services and for Saudi Arabia to advance its AI capabilities.

Why did OpenAI execs quit as the company removes control from the non-profit board and hands it to Sam Altman?

Several OpenAI executives, including the CTO, VP of Research, and Chief Research Officer, have quit following a reorganization that shifts control from the non-profit board to Sam Altman. This move towards a for-profit structure and the potential for commercial interests to influence governance may have led to these departures. The company is also facing challenges in maintaining its original mission of benefiting all humanity.

Why is o1, OpenAI's new model, particularly impressive in math and symbolic reasoning?

o1 is designed to handle complex tasks by using a chain-of-thought (CoT) approach, which allows it to break down problems and reason through them step by step. This capability is especially effective for math and symbolic reasoning, where it can achieve up to 50% better performance compared to GPT-4. The model's ability to spend more time on reasoning and planning makes it a significant advancement in these areas.

Why is Microsoft planning to power data centers using the Three Mile Island nuclear plant?

Microsoft has signed a 20-year power purchase agreement to reopen the Three Mile Island nuclear plant, now named Crane Clean Energy Center, to power its data centers. This move is part of their strategy to secure a reliable and sustainable energy source as AI models require significant power to operate. Nuclear energy, despite its past controversies, is seen as a stable and environmentally friendly option compared to fossil fuels.

Why did Governor Newsom sign bills to combat deepfake election content and protect digital likenesses of performers?

Governor Newsom signed several bills to address the growing concerns around AI-generated deepfakes and their potential misuse in elections and the entertainment industry. AB2655 requires large platforms to remove or label deceptive election-related content, AB2839 expands the timeframe for prohibiting such content, and AB2355 mandates disclosure for AI-generated performer likenesses. These laws aim to prevent misinformation and protect the rights of individuals.

Why is an AI tool like ChartWatch reducing unexpected hospital deaths by 26%?

ChartWatch, an AI early warning system, monitors changes in a patient's medical record and makes hourly predictions about potential deterioration. By alerting doctors and nurses to patients who need immediate intervention, it significantly reduces unexpected deaths. The system uses over 100 inputs, including vital signs and lab results, and has shown promising results in early trials. This technology could lead to more comprehensive health monitoring and timely interventions.

Why is Snapchat introducing an AI video generation tool for creators?

Snapchat is introducing an AI video generation tool to allow creators to generate videos from text prompts, enhancing content creation on the platform. This tool, powered by Snap's own foundational video model, is in beta and available to a small subset of creators. It aims to make video creation more accessible and creative, with plans to expand the feature in the future.

Why is Lionsgate partnering with Runway for AI-assisted film production?

Lionsgate is partnering with Runway to explore the use of AI in film production, particularly in pre-production and post-production stages. They aim to develop AI models that can create backgrounds and special effects, potentially reducing the need for traditional VFX crews and storyboard artists. This move is part of a broader trend of integrating AI into creative industries to streamline processes and enhance productivity.

Shownotes Transcript

Welcome back to the AI scene Where am I too keen? The story's not lean You've got whispers of robots talking to us Shifted open near causing quite a fuss All must seem clearer taking bigger strides Videos evolving, worlds in our sights So step back and relax, dive into the weak then

Hello and welcome to the Last Week in AI podcast, where you can hear us chat about what's going on with AI. As usual, in this episode we will summarize and discuss some of last week's most interesting AI news. And as always, you can go to lastweekin.ai for our text newsletter with even more AI news and also emails for the podcast with links to all the articles we discuss, which are also in the show notes.

I am one of your hosts, Andrey Kurenkov. I finished a PhD in AI from Stanford last year, and I now work at a generative AI startup. And as we've been saying the last couple episodes, Jeremy is busy with having a baby and not sleeping as of recently. So congrats to him. He'll be off for the foreseeable future. I'm not sure how long it takes to adjust to that, but presumably some time.

So we do have a guest co-host, and it is once again, as he has done now several times, Jon Krohn. Yeah, it's such an honor to be here. Thank you for inviting me back, Andrey. I was really flattered that in the most recent episode that was published, it said, I guess we're going to have Jon or one of the other regular co-hosts back on. I was like, yes, calling me back in. And congratulations to Jeremy. That's a huge deal. Wow. I mean, it doesn't get bigger than having a baby.

So I wonder if he's going to even have time to listen to this episode. I doubt it. That congratulations is just, you know, well, congratulations to any listener out there who's recently had a kid. We're really rooting for you. Yes. Yeah. And so I guess my two-line biography is:

I host the world's most listened-to data science podcast. It's called Super Data Science. And unlike this show, it isn't a news show, really. It's more of an interview show. About two thirds of episodes have a guest. We do an episode every Tuesday and Friday.

And we also do sometimes on those Fridays, about half of the time, instead of having a guest, I kind of do a deep dive into a topic. So a recent one, for example, I did a half hour on the o1 algorithm from OpenAI. Because as you guys said, I think the quote from Jeremy was the release of the quarter, at least. And I absolutely agree. It's a big sensational thing.

I won't go into detail on that because you guys have already covered it. But yeah, that's the kind of thing we do. I'm also co-founder and chief data scientist at an AI company called Nebula, which is automating white-collar processes with AI.

And yeah, actually, something that happened recently, Andrey, is I started doing some stuff on TV last year, and I have a couple more things which I can't publicly announce yet but are in the works and are going to happen very soon on TV. It's pretty exciting.

Wow, sounds exciting. Yeah, and you've had, what, 800? More than 800 now, right? Yeah, more than 800 episodes now, and we've had both you and Jeremy on the show. We'll see how quickly my spreadsheet can load so that I can find your episode. Yeah, so your episode is episode number 799. Mm-hmm.

And that was a really great one. We got deep into conversations about AGI and artificial superintelligence and how that's going to transform society in that episode, which, you know, weren't really the topics I intended to cover, but we got deep into it. And incidentally, that is what at least one of these big TV projects is about.

It's kind of creating a show for the masses about how everything could dramatically change in the coming years as machines eclipse us with their intellectual capacity. So it could be interesting. And yeah, Jeremy's been on the show a bunch of times as well. He was most recently on episode 545, it looks like.

So people can check those out if they want to learn more about the great hosts of Last Week in AI. Yeah, yeah. Those are pretty fun. You do get to hear a bit more background and whatnot. Here we do try to keep it mostly to news or sometimes a bit of personal stuff sneaks in. I also, I misspoke. Jeremy's 565, not 545.

And as usual, before we get into the news, I do want to spend a bit of time acknowledging reviews and comments. We got a few more on Apple Podcasts. That is always nice. We have some...

I like this one. No idea how you get bad reviews on anything covered in the show. You know, maybe I do think we have some flaws. I don't know. It's nice to have some, let's say, constructive criticism. But thank you for that praise. Got another one from Andrew, which I think is pretty fair. Love it, but does not release consistently. That has certainly been true.

Recently, I will try to look into hiring an editor because that is a major bottleneck, me having to do the edit. And I have pretty specific requirements that I try to get done. So it hasn't been quite as easy as you would like it to be.

But anyway, let's hope I manage to keep it a bit more consistent going forward. It's a tricky chicken-and-egg kind of situation with creating a podcast, because you're expected to be releasing material consistently. Like, your listeners expect it every week, ideally, probably on the same day or something, which is tough to have happen, but you have a full-time job, Andrey, and you do all of the editing.

And so then to go out and hire an editor, you'd need to have, you know, consistent revenue, but then getting consistent revenue, like sponsorship, that kind of thing, that's another time commitment, which would eat into your ability to edit and release episodes. So it's a tricky, tricky situation to get these things rolling. Yeah. Yeah.

But I do find it pretty fun. It's a fun thing to have this podcast. I guess that does drive me to do it. And just one more shout-out before we get into the news talk. We've had a couple of comments on YouTube, which is always fun to see. And in particular, I think, what is it? Tangalo commented on the last few, and in the most recent one actually commented on the intro and outro song.

They actually commented that it got a low score and suggested a type of theme for the next one, a type of music, which actually is helpful because every time I'm generating a song, I'm just like, well, what genre should I do this time? You know?

What haven't I done yet? So if you do have any requests for type of music for the intro or outro, feel free to send an email, comment on YouTube, one of these things, and I will certainly probably give it a try.

What was your prompt for the most recent one? Was that like David Bowie or something? I think it was like 60s psychedelic or something synth-y, but 60s rock. I usually try like a couple and that one got something interesting going on. I have so many drafts of songs that are just discarded. Yeah.

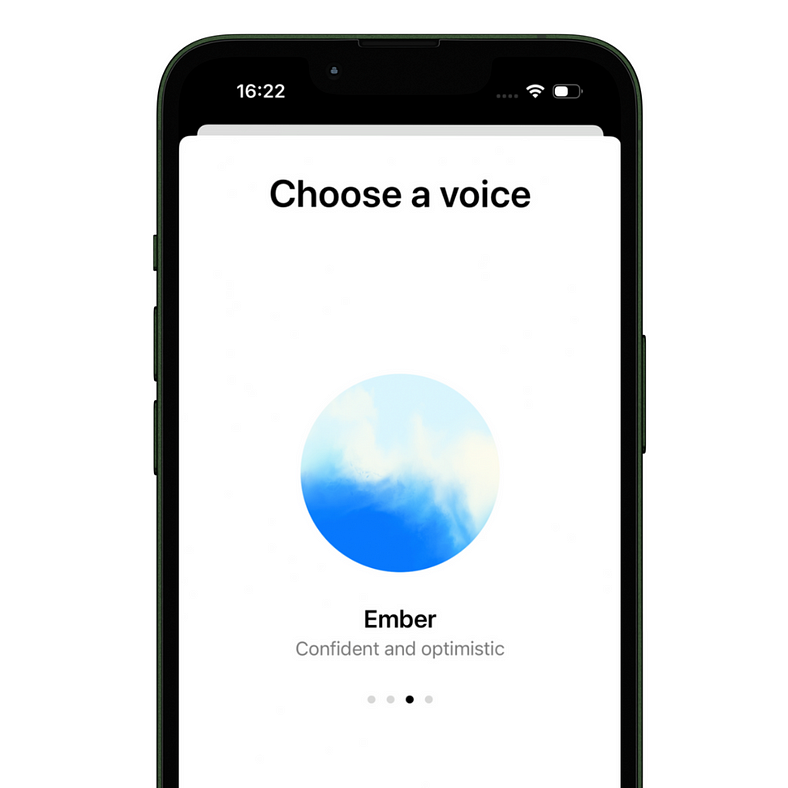

All righty, enough prelude, let's get into the news and we begin with tools and apps where we have some kind of exciting developments in the emerging space of conversational AI. So starting out with OpenAI, they are rolling out advanced voice mode with more voices and a new look.

So we've had this voice mode thing where we got GPT-4o (the "o" is for Omni) now quite a while ago. And in the introduction to that model, kind of a big deal about it was that it was conversational. You could speak to it not just via text, but via your voice.

And it was almost real time, right? It was pretty groundbreaking, I would say, as far as conversational audio interaction with an AI. And so with this new update, which is rolling out to paying customers, so the Plus and Team tiers, they'll be getting custom instructions and five new voices.

They call them Arbor, Maple, Sol, Spruce, and Vale. Not sure what those voices are like, but some cool names. Hopefully Maple is really like sticky and rich. Yeah, yeah, yeah. And so that means that there's now nine voices that you can use.

And some other stuff going in here, getting a new design. Now represented by a blue animated sphere, which is, I guess, cooler than just the little music or audio notes that they had.

And that's about it. They've been kind of slow to roll out this feature, and so it seems that they are now gathering enough data to improve accents to be able to expand voices and so on. And it appears primarily available in the US. This is not available in the EU, the UK, Switzerland, various other places.

Yeah, this is the future. It's kind of obvious to say this is one of those mega trends moving away from having to be able to type. This allows so much more flexibility in how you interact with AI.

At the time of recording, it was just a few days ago that Meta had a big announcement about a deeper integration with Luxottica on their Ray-Bans and having integrated AI in those. And yeah, so that kind of AR experience or just auditory in, auditory out can be supported by much smaller devices. You don't need to have a screen open in front of you. You don't need to slow down whatever you're doing to use your fingers to type anything.

And so this is obviously the future. And yes, this advanced voice mode from OpenAI is right at the cutting edge in terms of capabilities. Yeah, I feel a little embarrassed to not have tried it personally because it's been kind of available for a little while now.

So I guess that'll be homework for me after this episode to give it a try and see what I feel. This is a bit of a tangent, but I know you guys go on tangents on this show, so I'm not gonna feel that bad about it. Andrey, have you ever tried Apple Vision Pro? I did try it once and I was a bit of a hater. I prefer the Meta Quest products. I'm a big VR fan actually. So that was the reason. Yeah. But it is definitely cool. Yeah.

I loved it. I had a really emotional experience in there. I was almost brought to tears. I went to an Apple store and did a demo, and they end the demo with this really gut-wrenching video of beautiful things like soccer goals being scored right in front of you. And you're looking around the stadium, and a woman tightrope walking, and sharks. And they try to make this really immersive and beautiful vision of the world and excitement.

And you're like, wow, it's so great to be alive. It's even better with this headset on than being out in the world. I don't know. Anyway, I liked it a lot, but yeah, it's just kind of tangentially related to, you know, where it seems like this kind of thing, like augmented reality, is going. It's easy to imagine how in 10 years, maybe instead of having a bunch of screens around your desk, you can just have all of the space in whatever room you're in being occupied by screens.

And I think the only real limitation right now is battery life, because you can only do two hours on the Apple Vision Pro. And so you can't completely replace your office setup right now with that.

Right. And another trend you're seeing that will be closely tied to that is this conversational stuff. If you have a device on that is augmented reality, or even not augmented reality, just like a smart glasses kind of feature, then you will have sort of always-on ability to talk to AI assistants and whatnot. So that'll be very closely tied in if we do get that feature.

Exactly. For sure. One last quick thing on this was that I was like, why don't you guys have a power cable instead of having this be battery powered? Because then I could use this all day. And they actually said no, because part of the issue is overheating. So right now the device, it probably couldn't run for like a full workday.

Yeah, well, first generation, so it's going to get better. Next up, quite a related story about Meta, where AI can now talk to you in the voices of Awkwafina, John Cena, and Judi Dench.

So they have this AI chatbot on kind of a lot of their apps: Instagram, WhatsApp, and Facebook. And they've had various bots that you can talk to. And now you do get these celebrity voices in addition to non-celebrity voices, also named Aspen, Atlas, and Clover. It really ignites me because they're non-human names that are like coming from nature.

And according to the Wall Street Journal, Meta is paying these celebrities millions of dollars for their voices.

So again, interesting to me. I feel they've tried this before already, prior to the expansion with Llama on all these platforms. So quite an investment from Meta in, I guess, trying to get people to interact with their AI kind of offerings across these apps.

I can say I still haven't used anything, and I use WhatsApp and Instagram, and I kind of have just ignored the AI on that front. I'm sure many people are in the same boat. So perhaps this is meant to address that. I have used it in WhatsApp. It's just not very interesting, because for people who are the regular listeners to this podcast, you're probably quite aware that you're using a Llama model.

And it's probably not the most expensive Llama model if it's free in WhatsApp.

And so, you know, you get the kinds of responses you'd expect. There's nothing mind-blowing. You're not getting state-of-the-art capabilities like when you use Claude 3.5 Sonnet. And so, I don't know, it isn't very compelling. It doesn't seem obvious to me right now that I need to be having a generative AI conversation in WhatsApp instead of in the ChatGPT app or the Claude app, which is equally close to me in my phone.

Exactly. Yeah. I have the same impression.

I do use chatbots quite a bit in my workday and just throughout doing tasks. I just open a tab on my browser to go to either Claude or ChatGPT and these kinds of things. Don't start in IC, but who knows? Maybe we'll make friends with our AIs soon enough. Now that it has a John Cena voice, I'm going to be in there.

That's what I need to hear. One thing I guess to call out here, which might be obvious to listeners already, is these different strategy choices between OpenAI and Meta.

On the one hand, there's something that you talk about a lot on the show, where OpenAI has gone down the closed AI route of keeping their models proprietary, whereas Meta AI is the biggest proponent of open source models. And then another strategic difference here is that OpenAI, for whatever reason, has decided to try to not be partnered with specific celebrity names and to just have those generic tree names, even though they obviously did famously get in trouble with Sky being too close to Scarlett Johansson and being sued by her.

But yeah, it's an interesting strategy for Meta to say, okay, we're going to deliberately partner with these celebrities to get their voices in, and OpenAI is seemingly, except for the Scarlett Johansson blip, deliberately avoiding that.

So I don't know. I don't know if I have an explanation or something bigger to say there, but I want to call out that distinction. Mm-hmm.

Onto lightning round, which sometimes means we go through these quicker, sometimes not. First story should be quicker. And once again, it is on this theme. It is that Gemini's voice mode is out now for free on Android. So we saw this Gemini Live voice chat mode around the same time as GPT-4o was announced.

It's very similar in that it's semi-real-time conversation with a language model, in this case, Gemini. And it is now out for a huge swath of Android users. It's only available in English, but they are saying that new languages will arrive later.

It's also only on Android. It will arrive on iOS later. But if you are using Android and are speaking English, you can speak to it pretty easily. There's like a new waveform icon in the bottom right corner of the app or overlay of Gemini.

So another example of, you know, this kind of modality making pretty rapid progress. It's only like a few months ago that we even got demonstrations that this is possible. Now it's kind of rolling out wide.

Yeah. And I'm a Gemini fan. I actually subscribe to all three of ChatGPT, Claude and Gemini because there's different use cases for me that end up being valuable. And so for Gemini, it's the real-time web search as well as very large files, because I think the biggest context window right now is 2 million tokens, which is a lot. So you can pass in a huge amount of audio or video, which sometimes comes in handy for things related to the podcast in particular.

And yeah, I'm a fan of Gemini in general. It's great to see that they're making this progress on the voice front as well, just like the other big players that we mentioned in this episode already, Meta and OpenAI.

And yeah, I think the big thing here for all of these companies is going to be, like you said, near real time. It's once this becomes actually real time. You can see with Gemini here, I haven't used the Gemini voice functionality, but apparently you can interrupt the AI mid-sentence, which is going in that direction. But it's going to be where you feel just like you and I talking on this episode right now, Andrey, where it seems like everything that I'm saying can be responded to kind of

really reactively, really in real time. And something that's going to be interesting is once. So for a long time, you and Jeremy recorded this podcast audio only, but you went on video to record it. And that's because there's lots of extra information that we provide with our faces and our gestures that allows us to have an even better conversation.

And so it's going to be interesting. And I bet that some of these companies, maybe all of them, have already been prototyping ways of having your webcam or your VR goggles be able to react to your facial expressions, because then you could have things like, and this is completely speculative, I have no idea, but I use an Opal C1 camera, and OpenAI recently acquired Opal, which is a hardware company that makes cameras.

Why would they be doing that? And it seems to me like there's a great opportunity there, because imagine you're looking at the outputs in a ChatGPT conversation and you furrow your brow as you're reading it. Then all of a sudden it might stop and say, oh, I'm sorry, I realize I just made a mistake, without you having to type or do anything.

There is no technological reason why that couldn't happen. And so it's probably just a matter of kind of testing and feeling like you have worked out the kinks and maybe, you know, ethical issues that could be associated with it. Yeah, that's some interesting speculation. I haven't thought about that, but it would make a lot of sense that to actually make the conversation seamless, being able to see your face and see those signals of like, oh, let me jump in there, would definitely be useful.

Next, moving away from conversing with AI, we are moving on to AI for video, another big trend this year. And the story is that

AI video rivalry has intensified as Luma announces Dream Machine API hours after Runway. Kind of a long title, but pretty informative. So both of these companies, Luma and Runway, kind of the leaders in the space of AI video generation, I would say, are now offering APIs. And APIs are what you can use programmatically.

So instead of going on the user interface on the website and clicking buttons, writing text, you can now have some code that sends a request. And this is how you would make another app or perhaps integrate it with your company's product, whatever.

So you now have this API. It's connected to the latest version of Dream Machine. They say that it's priced at 0.32 cents per million pixels generated, which is a fun way to think about it, I guess.
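As a rough illustration of what per-pixel pricing means in practice, here is a minimal sketch that turns the quoted rate of 0.32 cents per million pixels into a per-clip cost. The clip settings (1280x720, 24 frames per second, 5 seconds) and the assumption that every pixel of every frame is billed are illustrative only and are not stated in the episode; the function name is hypothetical.

```python
def generation_cost_cents(width, height, fps, seconds,
                          cents_per_million_pixels=0.32):
    """Estimate the cost of one generated clip, in cents.

    Assumes (hypothetically) that billing counts every pixel of every
    frame: width * height * fps * seconds.
    """
    total_pixels = width * height * fps * seconds
    return total_pixels / 1_000_000 * cents_per_million_pixels


# Hypothetical clip: 720p, 24 fps, 5 seconds long.
cost = generation_cost_cents(1280, 720, 24, 5)
print(f"{cost:.2f} cents")
```

Under those assumptions a short clip comes to roughly 35 cents, which makes it easier to compare against plans priced per second or per credit.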

And that's for Luma. Just hours prior to that, Runway also launched its API, where you actually fill out a form to get access to it for now, whereas Dream Machine's API is available to use for everyone, I believe.

And it similarly has Gen-3 Alpha Turbo with different pricing plans, pretty equivalent. So another trend where, you know, it's not that long ago that we started to see usable video generation, so to speak. And now they are trying to actually commercialize it with these moves.

And you say that you started off by saying Luma and Runway are the leaders in text-to-video, which I guess is true for easily accessible models because, of course, there is that juggernaut Sora out there that few people have access to. Yep.

Moving on to Microsoft, they have some new stuff and it is called Copilot Wave 2. So they have this whole Copilot branding that is kind of everything that Microsoft has with AI. Here it's focused on Microsoft 365. That's all their tools: Word, Excel, PowerPoint, etc.

So this will have a chat interface called Business Chat, which allows you to combine knowledge from your company with web-based information. And you can create these collaborative documents called Pages, similar to Word documents, but they can be expanded and collaborated on by team members.

Kind of reminiscent of Artifacts from Claude and some of these things like Perplexity, which also allows you to publish results of your searches. There's also another new feature, switching between web and work modes, that enables Copilot to tap into knowledge contained in all work documents.

And Gemini has some similar functionality, I believe, in Drive, where you can talk to it with awareness of some documents that are relevant to your context.

Last note, presumably there's quite a lot of things here, but interesting one, PowerPoint has a new narrative builder that creates a whole deck of slides from a prompt using Copilot, including transitions and speaker notes, which for those working in the industrial world, in the corporate world, might be kind of exciting.

Yeah, I'm not really a Microsoft user, but they are certainly doing a lot in this space and they're investing a lot behind it. You shouldn't be surprised if they emerge like they have for decades now as the leader in enterprise applications of computing. Now with AI being at the forefront of computing.

One last note, these features are not quite out there. So the business chat and pages features are rolling out. These other features have been announced, but will be appearing in public previews later this month. And there are quite a few features here. So Microsoft's still pretty aggressively expanding their AI suite.

On to the last update, and here we have Perplexity introducing a new reasoning-focused search powered by OpenAI's o1 model. So this is designed to solve puzzles, math problems, and coding challenges. It's a feature currently in beta, available to paying Perplexity Pro users.

And currently very limited to just 10 queries per day, probably because o1, OpenAI's model, limits your API access.

And it actually does not integrate with search. So this is essentially, it seems like, kind of a connection to o1, just a way to use it indirectly. But Perplexity has integrated various models pretty rapidly. And this is another example where o1 just rolled out and Perplexity now has this in their tool.

Yeah, pretty curious decision. Yeah, I'm not sure. It's not obvious from this that you get any extra value from Perplexity AI.

But I guess if you're already a Perplexity AI subscriber and you're not using a paid version of OpenAI, then this gives you some access to o1. So I guess that's the advantage. Yep. I wonder, have you tried o1? Because this one I have played around with and actually used for coding. Pretty complex feature.

And I was quite impressed, I will say. Compared to GPT-4o, it is leagues better at handling complex tasks. Oh, absolutely. So it was episode 820 of my podcast, which came out on September 20th.

It's almost half an hour exclusively on o1. And in it, I do a lot of testing of the model as well. And so if you watch the YouTube version, you can see a screen share of me trying different things out. And some of the most impressive things include that I was copying and pasting questions. I teach a calculus for machine learning curriculum.

And I was taking some of the relatively advanced, it's an introductory calculus course, really, but I was taking some of the more advanced questions that I have in that curriculum, like partial derivatives.

And I was copying and pasting them into o1. And it absolutely knocked them out of the park in a way that you would never even think that you could try with GPT-4o. Like if you try that with GPT-4o, you know that the next-token prediction is just an estimate. It's not doing math. It's not going to be checking for mistakes. Right.

And so the odds, with even a moderately complicated partial derivative calculus question, of you getting to the correct answer are very small with GPT-4o. But with o1, every time, every exercise that I put in, integrals too, worked really well.

And the way that it expressed the answers as well was really impressive. If I had a student in class that expressed it the way that o1 did, I'd be like, wow, you should be teaching this class. And so it also helps with understanding problems. So with these kinds of things, like the partial derivatives, the integrals, o1 would think for about 10, 12 seconds.

But I tried a much more complex math problem that, candidly, I didn't understand. I didn't understand this question, but it took about 90 seconds of processing to get to the answer and then provided me with a response that allowed me to actually even understand what the question meant. And it seemed to get to the right answer as well. So super impressive.

I also tried coding. There's lots of coding tasks that I expect models like GPT-4o and Claude 3.5 Sonnet to be really great at. And so it took me a while to come up with an idea for something that I wouldn't expect Claude or GPT-4o to get correct. And eventually what I thought of was creating an interactive website

that is a neural network, where I can hover over nodes in the neural network and get the bias at that node, for that neuron in the network. And if I hover over an edge in the network, it shows me the weight of that connection in the neural network.

And so I was like, that sounds pretty complicated. And it was a long prompt with lots of details. It didn't 100% get it right. There were things like I asked for arrows going from left to right to show forward propagation through the network.

And it wasn't rendering arrows. It was just rendering straight lines. But other than that, it nailed all of it. The interactivity in HTML, it was a huge amount of code. And I mean, so it shows the potential for

Yeah, this is, I mean, in a nutshell, on O1, if you want to get like this, having, as Jeremy described it, having this other, this additional way of scaling. So we already had, you know, scaling laws associated with making these LLMs larger and larger, scaling the amount of data. Now you can also scale inference time. And so an obvious extension, which Noam Brown, one of the researchers working on O1, said

at OpenAI, he wrote in a really great Twitter post about how this kind of processing now can be expanded. You can just add inference time instead of thinking on the seconds scale.

You can think on minutes, hours, days, maybe weeks. And then you could put in really complex questions like solve cancer and come back six months later and see how it's doing. I'm being a bit reductive, but there's a huge amount of potential here. And as the cost, like right now, the cost is obviously very expensive. But like everything else in computing, the cost is going to continue to decrease at crazy rates.

We're going to figure out software cleverness to make it much cheaper at inference time, and hardware is always getting cheaper as well. So we already have, for specific use cases like physics, chemistry, biology, O1 demonstrates PhD-level capabilities.

And so even if we just were at today's level of capability with O1, you know that it's going to get cheaper in the coming years. And so then you theoretically have basically a limitless number of PhD-level hard scientists all around the planet just crunching through problems. That is a serious game changer. And then on top of that, when you factor in the things like longer inference time, more cleverness around the way that they train these models, you

This isn't just going to be PhD level in hard sciences. This is going to be, it seems obvious to me,

more than PhD level, like serious, like, you know, Nobel laureate level or beyond in, you know, any imaginable quantitative subject. And so that's, and as that becomes really cheap, then, you know, having that, it's effectively unlimited intelligence far beyond human capabilities. Like we're already, we're looking at that now, these kinds of things that seemed like science fiction just a year ago. Right. Yeah. O1,

To your point, with this whole story of how you've tried it, and I've tried it: it is a big deal, right? As we already covered. And it is a big deal, not just in the sense that it is very impressive, but also in terms of what it represents as a new paradigm of improvement beyond just scaling training, to scaling kind of how much you can think. Now I will say there are some caveats there. There are some challenges to just getting O1 to be better, but yeah,

Definitely exciting. And from my personal experience and your experience, you know, believe the hype, so to speak.

And moving on to applications and business, once again, as is often the case, we have some stories related to OpenAI that are not related to O1, but instead some drama with their executive team and their corporate structure. So the new story here, which just came out today,

Dramatically titled, OpenAI execs mass quit as company removes control from nonprofit board and hands it to Sam Altman. I will say that's being a little bit overdramatic, but is also not entirely wrong.

So the big part of this, kind of the first wave, at least from what I've seen, was the company CTO, Mira Murati, announcing her departure. And she has been there for six and a half years. So a pretty significant move.

She was, for some background, involved in some of the drama with Sam Altman being removed from his position as CEO last year. She was the interim CEO. Yeah, she was the interim CEO. She expressed some concerns, it sounded like, although I don't believe she was directly pushing him out. Regardless...

She is now announcing her resignation. She's saying that she wants to pursue other opportunities, explore options. So there's not any huge drama here.

But in addition to her leaving, the VP of Research and the Chief Research Officer are also stepping away, which does seem a little significant that there's been these departures. And this is following on some notable researchers leaving just earlier this year.

And all of this is following on the heels of, and we don't have concrete details yet, but there are more and more details or rumors coming out about this planned move away from being a nonprofit to being basically entirely for-profit, more traditionally. And reportedly also Sam Altman is going to get equity

in OpenAI, and kind of get basically some amount of control and some amount of monetary, I guess, benefit from being the CEO.

So, yeah, another chapter in the exciting and quite dramatic history of OpenAI's governance, corporate structure, whatever you want to call it. Yeah. A lot of people, a lot of big people have left, obviously now. Ilya Sutskever being the biggest researcher and, you know, one of the co-founders. Greg Brockman's still there. But other than that, I can't like immediately think of names other than Sam Altman that are kind of the people who've been around for many years here

in leadership that are at least, you know, prominent publicly. Like, yeah, so a lot of these people have now left. It does seem, it does seem interesting because if you think about how well the company is doing commercially and in terms of their brand, I mean,

I don't know. Do you think more than half the time on this podcast, Last Week in AI, the opening story is an OpenAI story? Yeah. It's wild. They're so much at the forefront of what's happening in AI, period, that you'd think people would be clinging to that more than ever before. You're like, wow, this thing that we've been working on, it's really working. But simultaneously, people are leaving. Yeah.

Yeah, these things like going for profit, you know, becoming closed AI. Yeah, I guess that changes everything.

I guess. It's kind of funny, like the term closed AI, or the criticisms of OpenAI being closed off, may go back to like 2019. You know, as a PhD student, in the first few years of OpenAI, it was like, you know, some weird R&D lab doing reinforcement learning research and putting out some open source packages for people to do reinforcement learning with. And they did contribute quite a lot. And, and,

One of their early developments of PPO for reinforcement learning was kind of a big deal. So, you know, it was a very gradual shift in some sense away from being a more open just research company to now being a massive, you know, multi-billion dollar company business that

seems to be shifting very much away from being this R&D lab with a focus on AGI having benefit for all, capped profit, all of these kind of weird things that are inspired or motivated by this idea that they are going to get AGI.

Again, we don't know the full details of this supposed move to for-profit and so on. We don't know if the departures are directly related, but as ever, interesting stuff seems to be happening at OpenAI. The other interesting thing to highlight, you didn't mention it there in brief, but I think deserves a bit more attention, is you talked about how Sam Altman is getting equity now in...

Well, supposedly he's going to get equity in the new way that OpenAI is structured. And it is interesting. And I don't know all the details on this. I feel like it's the kind of thing maybe you know a lot more about. And I think Jeremy would have for sure. My memory of it was that Sam didn't have equity. And that always was weird to me. The CEO didn't have equity. And now it would be 7%, supposedly, is what is being reported. And that's a lot. That's a lot because often...

startups kind of reserve about 10% of their capital for all employees, including the CEO. So 7% is a huge amount.

Definitely. Right. That's a pretty significant amount to get once you are like, you know, obviously, if you are a founder, you have a big chunk of equity early on. But to get it from a position of not having any equity is a big move. One last thing, I guess it is worth covering some of the statements. So Mira Murati did put out a statement that was very positive. Right.

No criticisms of anything. Sam Altman posted a statement to X, and let me just read this. Leadership changes are a natural part of companies, especially companies that grow so quickly and are so demanding.

I obviously won't pretend it's natural for this one to be so abrupt, but we are not a normal company. And I think for reasons Mira explained to me, there's never a good time. Anything not abrupt will have leaked. And she wanted to do this while OpenAI was in an upswing. Makes sense. So...

there you go. Let's not make it sound like OpenAI is definitely in some sort of turmoil. It's just interesting. It seems like there might be more to the story than gets...

tweeted publicly. And in the same way, very similar to the tweet that you just read from Sam Altman, there was the same kind of thing from Greg Brockman. I have deep appreciation for what each of Barret, Bob, and Mira brought to OpenAI. We worked together for many years. It's a very long post.

And in fact, if anything, I mean, I realize I'm just creating like, you know, I'm generating drama here. But, you know, even that, like to have all of these people that you kind of expect, you know, everyone's kind of watching. What is Greg Brockman going to say? What is Sam Altman going to say? And then they all have these like really nice things to say, long posts. It seems like it could be a bit manufactured, I guess. But yeah, again, complete speculation. Maybe there is no drama at all.

Next, another story on OpenAI, and this is, you know, in a bit of an ode to Jeremy, let's do a safety story. So Sam Altman is departing OpenAI's safety committee. This is their internal safety and security committee, which oversees safety decisions related to the company's projects.

It will now be an independent board oversight group chaired by Carnegie Mellon professor Zico Kolter and including several other people. The committee will still be responsible for things like safety reviews, including their review of things like O1, and will continue to receive regular briefings from OpenAI safety and security teams.

So for me, hard to read fully into what this implies. It sounds like maybe it's becoming more independent, which is something you'd want, right? You don't want Sam Altman to be on a committee that is overseeing and evaluating how safe something is that you may want to release for commercial interests. Yeah, I wish I was Jeremy so that I could provide a lot more rich color on the safety aspects of this, but I'm not. So on to the next story.

On to the lightning round. First, we have chip startup Groq backs Saudi AI ambitions with Aramco deal. So we've been talking about Groq pretty frequently these past few months. Groq is a producer, a designer of advanced chips for

running AI models, for inference in particular. They have these language processing units and they do appear to be a leader in how fast they can run models such as Llama. Now they have partnered with oil producer Aramco to build a giant data center in Saudi Arabia.

This is going to have apparently 19,000 language processing units initially. And Aramco will fund the development that is expected to cost in the order of nine figures, according to an interview with the CEO. They say the data center will be up and running by the end of the year and could later expand to include a total of

200,000 language processing units, which would make this a massive, massive AI infrastructure center. We've heard Elon Musk, of course, saying that he'll have 100,000 GPUs up and running

at their super AI inference center. So another one being worked on here. Yeah, an interesting thing for me here is that this is providing a huge amount of capacity to one of these countries that is quite neutral between, say,

the West and, say, the other axis, which could be like China, Russia, Iran. You know, so you have kind of these two, you know, these two, um, industrial systems that are to some extent becoming decoupled a bit, although it isn't like the kind of Soviet era decoupling that was experienced, uh,

But, you know, there are efforts to have, you know, more and more tariffs and sanctions going both ways between those two groups. And Saudi Arabia is one of those countries that kind of sits in the middle. It freely does trade with both the West and the other axis. And so, yeah, it's an interesting situation.

Yeah, it's an interesting play and it's interesting how, at least for now, there doesn't seem to be any resistance from, say, U.S. politicians to be selling 200,000 language processing units to Saudi Arabia, which obviously is a critical...

partner to the U.S. in the Middle East. But yeah, they're also a critical partner to China. So yeah, it's an interesting... I don't know a lot about geopolitics, but... Yeah, I'm sure there's a lot of nuances here with relations and implications. Either way, from a business perspective, clearly a good thing for Groq. And they've seemed to be on the rise here.

And as a leader in the technology, with this kind of investment they can actually scale up and provide services to many more companies and really compete in the cloud AI inference space. Yeah. On the other side of things, the bad thing for Groq, G-R-O-Q, this company, is

It's got to be so annoying for them that X has named their big model Grok G-R-O-K. And on to our next story.

Which, yeah, you have a nice segue there. So Grok, G-R-O-K, has been in the news lately partially because of the new iteration of it, but also because they've integrated image generation with Flux, the image generator from Black Forest Labs. And this story is about that company, Black Forest Labs. They are raising $100 million at a $1 billion valuation.

This company was co-founded by engineers behind Stability AI. So basically some real advanced people who were involved in some of the pivotal technology and progress in AI image generation. The company is pretty new, actually pretty fresh. They have previously raised $31 million.

And it sounds like they are now going to raise a bunch more and get to the 1 billion valuation AI startup club, which is not as prevalent, not as easy to do

nowadays as it was maybe a year ago. So Black Forest Labs, clearly a lot of excitement about what they're working on. My favorite thing about Black Forest Labs is how delicious it sounds. I can't hear Black Forest Labs without tasting the taste of Black Forest cake. And I think that's unique amongst tech companies. No other tech company name makes me salivate. Yes, I do think they have one of the cooler sounding names. And it's definitely more fun to say than...

some of the other ones out there. Next, another story related to a trend that we cover pretty frequently on the show. It is about a new humanoid robot. Although this one is apparently semi-humanoid. So this is from Pudu Robotics. They're introducing the D7, a semi-humanoid robot with an eight-hour battery life and

10 kilogram lift power. Another one that is seemingly going to be somewhat affordable, that is practically usable, potentially. It was unveiled by them in May as part of the long-term strategy for the service robotics sector, so potentially seeing this in stores, etc.

Final note, what does semi-humanoid mean? To be clear, it means that this has no legs. So basically it drives around on a wheeled base, but the top of it, it has a torso, arms and a face kind of. It's a Roomba with a torso on top. Yes, which I guess we haven't seen many other people do. So perhaps the semi-humanoid tack here will help.

Makes a huge amount of sense, though. I actually hadn't seen this kind of design before, the semi-humanoid design with the wheeled bottom, torso top. But it makes a huge amount of sense because if you think about it, I mean, going up and down stairs, I guess this wouldn't be able to do, which is something a totally humanoid robot could do. But being able to go up and down stairs comes at the expense of a lot of extra compute and probably also power required just to stabilize things.

Whereas when you've got wheels and it's just supporting a torso, that's going to be great for battery life. And it's going to be useful for a lot of situations because now you can be doing manual manipulations on, you know, countertops or, you know, at a bunch of different levels that humans can access things at with their own arms without, yeah, all that extra expense and complexity and presumably battery usage associated with legs. Right, exactly. In the...

the video they released, which is about a minute and a half, shows a lot of use cases. One of the ones they highlight is retail scenarios, goods sorting and shelving, taking bottles and placing them. So you can see how this is maybe not requiring of legs. It's actually better to be able to drive around.

I got to say, you know, where Black Forest Labs, I think it is a great company name. It makes me salivate. It's easy to know how you spell it and type it. I got to say PUDU, P-U-D-U, PUDU. I mean, come on. It sounds like something that kindergarten kids came up with. I don't know.

I don't know. Maybe it depends on some background. It's based in Shenzhen, so perhaps we don't fully understand. Right, we just don't get it. They're like, it's delicious. You haven't tried Pudu?

And on to the last story. It's about Amazon and they are introducing Amelia, an AI assistant for third-party sellers. So this is described as an all-in-one generative AI-based selling expert. It will be available in beta to select US sellers. And it is, you know, an ongoing demonstration of Amazon trying to include generative AI. They have introduced...

an AI-powered shopping assistant named Rufus, the business chatbot Q. They've introduced, I believe, a thing that helps people who leverage AWS understand how to do this. This is yet another tool in that trajectory.

Apparently, more than 400,000 of Amazon's third-party sellers have used its AI listing tool, which is a recent increase from just 200,000 in June. So maybe third-party sellers will get a lot of use out of this. It's interesting to me that most of the other big tech companies, in fact, maybe all of them,

they try to brand their generative AI capabilities under one name. Whereas Amazon, I guess, is a reflection of its kind of corporate structure where they, you know, you have those two pizza meetings famously, you're trying to always have small teams be efficient. It's ended up creating this kind of collage of lots of different kinds of models. Rufus, the shopping assistant, business chatbot Q. Now, Amelia, it seems like that kind of

While I totally get how under the covers, this is different infrastructure, different model weights, makes a lot of sense from a data science development perspective. But from a marketing perspective, I think it's pretty confusing. And I don't know, like I've seen Rufus show up in my Amazon shopping experience recently. And it's not something that I like, just like having meta AI in WhatsApp. I'm like...

I'm good. Onto projects and open source. And even though we're almost an hour in, this could perhaps be the top story of the week. Certainly if you're someone who follows AI news closely,

Meta has released Llama 3.2 and it gets a major update. It is capable of processing both images and text. So this has been one of the limitations of Llama 3, Llama 3.1. Even as they've gone really big, they haven't been able to take in images, which of course GPT-4o and other things can.

Now you can give it images and they say that you can use it for various AI applications like understanding video, visual search, things like that.

There are two vision models. So there's an 11 billion parameter vision model and a 90 billion parameter one. And along with that, Llama 3.2 comes with two lightweight text-only models with 1 billion parameters and 3 billion parameters. Somewhat similar to what we've seen from Microsoft with Phi, what we've seen from Google with Gemma. These are condensed

models that appear to be working really well. They seem to be condensed from very big Llama models into these relatively small language models that are still capable of a lot. This came along with a bunch of announcements, actually, that they've demoed. So they also announced...

Their Ray-Ban Meta glasses are getting more features. They demoed live translation from Spanish to English. They have a prototype augmented reality thing, which of course will integrate AI presumably. But certainly having an open source model that is very good and now capable of dealing with images

is a bit of a game changer in the sense that Llama 3 was already very important to the AI ecosystem as a large language model that is at least sort of competitive with the frontier models. And now it can do images. You know, it makes it even more of a useful tool for people who want to build something without relying on OpenAI.

Totally. Yeah. I mean, kudos to Meta for continuing to support these kinds of open source releases. I'm hugely grateful myself as someone running the data science function at an AI startup. We are able to leverage these models. These are our preference.

With Llama architectures, we get a huge range of possible sizes, lots of different fine-tunings, you know, for code generation or chat applications. It's a huge service to everyone who is developing and deploying AI models. And so I'm deeply grateful. You know, we are able to have our own proprietary

models running on our own infrastructure at, at least in terms of training, an almost trivial cost. It could cost hundreds of dollars, thousands of dollars to create really well fine-tuned models for the tasks that we need them to do. If we had to do the pre-training, it costs hundreds of millions of dollars, which Meta's investing here. And so that also then ties into

You know, earlier in the episode, we were talking about all these new voices being added in and Meta trying to push us as consumers of these free products into using these AI tools. And part of why that push is happening is because

Meta, Mark Zuckerberg, would like to be able to show to investors that they're getting some kind of return, that there's an increase in engagement in Instagram, say, as a result of these billions of dollars being spent on open source research.

And the next story, quite related, Alibaba has unveiled Ovis 1.6, a new multimodal language model. So Alibaba is a leader in AI hailing from China. They are, I don't know, something like Amazon, you could say, from the East.

And they've revealed this multimodal large language model, meaning basically it's the same as Llama 3.2 in the sense that it can take in both images and text. So Ovis stands for Open Vision, and they introduced some kind of new

techniques for the architecture of multimodal large language models. They have a full paper out on this stuff, and this is an update on their code repo. So they're adding this new version, 1.6, released under an Apache license.

And they have a model out, they have a demo out, and it, of course, is better. It is trained on a larger, more diverse, and higher quality data set and also has instruction tuning. So it seems to be performing pretty well on various benchmarks, beating out other

multimodal large language models out there on just about every kind of benchmark, including Qwen2-VL, which we covered last week. Probably not beating Llama 3.2, but who knows, might be similar. So an exciting week for multimodal large models, for sure.

Yeah. The biggest thing for me from this release is this term, which I had not come across before, MLLM, multimodal large language model, which I really like because it's been a bit confusing to call. Originally we had LLM describing just text in, text out LLMs, which is really straightforward because you've got language going in and language coming out, even if that's

a programming language, okay, still language. But then as these became multimodal, sometimes they get described as a foundation model, which isn't as widely used a term and isn't unambiguous. And so I like this MLLM. And part of what I like about that is that even if it's multimodal, even if it has vision capabilities, say, as in the case of Ovis here,

it's still relying on language at an abstract level in order to be able to do the vision capabilities that it has. So the language capabilities of the LLM enhance the world model that the vision model has to work with, that the vision capabilities have to work with, and are complementary in that sense. Right, exactly. The model itself, without getting too technical, is kind of...

Smooshing together images and text, you can almost say, like both get tokenized, converted into these like set of symbols, then get converted into a big vector, a bunch of numbers, and then ultimately both go into a big neural net, sort of just together. So there's like a soup of representation of images and text and a lot of sort of cross-referencing between images and text. So yeah.

So there's potentially, you know, you can scale up, train on more images and on more text. And, you know, you might get better overall results.
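
As a rough illustration of that "tokenize both, embed both, feed both into one network" idea, here is a toy Python sketch. It is not how Ovis or Llama 3.2 is actually built: the patch quantizer, the random embedding tables, and every name in it (`tokenize_image`, `build_sequence`, `EMBED_DIM`, `IMAGE_LEVELS`) are assumptions made up for the example. It only shows image tokens and text tokens ending up as vectors of the same width in a single sequence.

```python
import random

EMBED_DIM = 4       # toy embedding width (assumption)
IMAGE_LEVELS = 8    # size of the toy visual vocabulary (assumption)

def embedding_table(num_rows, dim, seed):
    """Random lookup table standing in for learned embeddings."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(num_rows)]

def tokenize_text(text, vocab):
    """Map each whitespace-separated word to an integer id, growing vocab as needed."""
    return [vocab.setdefault(word, len(vocab)) for word in text.lower().split()]

def tokenize_image(pixels, patch):
    """Cut a grayscale image (rows of 0-255 ints) into patch x patch tiles and
    give each tile a discrete id by quantizing its mean brightness."""
    height, width = len(pixels), len(pixels[0])
    ids = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tile = [pixels[r][c]
                    for r in range(top, min(top + patch, height))
                    for c in range(left, min(left + patch, width))]
            mean = sum(tile) / len(tile)
            ids.append(min(int(mean * IMAGE_LEVELS / 256), IMAGE_LEVELS - 1))
    return ids

def build_sequence(text, pixels, patch=2):
    """Return one list of vectors: image tokens first, then text tokens."""
    vocab = {}
    text_ids = tokenize_text(text, vocab)
    image_ids = tokenize_image(pixels, patch)
    text_table = embedding_table(len(vocab), EMBED_DIM, seed=0)
    image_table = embedding_table(IMAGE_LEVELS, EMBED_DIM, seed=1)
    return [image_table[i] for i in image_ids] + [text_table[i] for i in text_ids]

# A 4x4 "image" (four 2x2 patches) plus a five-word question.
image = [[0, 0, 255, 255],
         [0, 0, 255, 255],
         [128, 128, 64, 64],
         [128, 128, 64, 64]]
sequence = build_sequence("what is in this picture", image)
print(len(sequence), "vectors of width", len(sequence[0]))
```

In a real model the combined sequence would go into a transformer, and the embeddings would be learned rather than random.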

Moving on to research advancements, we have a couple of papers. The first one is To CoT or not to CoT? And the result of that question is chain of thought helps mainly on math and symbolic reasoning. So CoT is chain of thought. We've mentioned it quite a few times. So to recap quickly, it is just telling your model, you know, think a bit and first

enumerate your chain of thinking, basically think through the problem and then give me the answer instead of just jumping to the answer. And there's been a lot of research on this, a lot of kind of known ways of using it. Of course, O1 from OpenAI has chain of thought baked in and is trained to do this

chain of thought, or you could say reasoning that is certainly related to chain of thought.

In any case, this paper is looking at whether chain of thought prompting is actually useful and makes the LLM better. And what they have found from quite a large analysis of over 100 papers is that it does help on math and symbolic reasoning, but on other tasks like common sense reasoning, text classification, context-aware QA, etc.

it is, let's say, less of a difference. It doesn't give you quite as much of a jump in performance. So this is, let's say, maybe more of an empirical paper, right? They're just demonstrating a result of a lot of different evaluations of using this.

Perhaps not fully surprising that chain of thought and kind of enumerating your thinking is mainly useful for math and symbolic reasoning, logical reasoning, these kinds of things that require you to talk through kind of a problem.
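
To make the difference concrete, here is a minimal Python sketch of a direct prompt versus a chain-of-thought prompt, plus a helper that pulls the final answer out of a longer reasoning trace. The prompt wording, the `Answer:` convention, and the function names are hypothetical choices for illustration, not the templates used in the paper, and no model is actually called.

```python
def direct_prompt(question: str) -> str:
    """Ask for the answer only, with no intermediate reasoning."""
    return f"Question: {question}\nReply with only the final answer."

def cot_prompt(question: str) -> str:
    """Ask the model to write out its reasoning before answering."""
    return (
        f"Question: {question}\n"
        "Think through the problem step by step, then give the final answer "
        "on a last line starting with 'Answer:'."
    )

def extract_answer(completion: str) -> str:
    """Return the text after the last 'Answer:' marker, or the whole completion."""
    marker = "Answer:"
    index = completion.rfind(marker)
    if index == -1:
        return completion.strip()
    return completion[index + len(marker):].strip()

question = "A train travels 60 km in 45 minutes. What is its speed in km/h?"
print(direct_prompt(question))
print(cot_prompt(question))

# A made-up completion, only to show how the final answer is separated out.
fake_completion = "45 minutes is 0.75 hours. 60 / 0.75 = 80.\nAnswer: 80 km/h"
print(extract_answer(fake_completion))
```

The paper's finding, as described here, is that the second style of prompt mostly pays off on math and symbolic questions like this one.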

But another nice finding also for trying to get a better sense of how LLMs work. I would say here I have a reductive oversimplification that has served me well in terms of understanding where chain of thought or where an O1 style model would outperform a GPT-4o style of model. And that is with...

Daniel Kahneman's thinking fast and slow kind of paradigm. So Daniel Kahneman, who just earlier this year passed away, Nobel Prize winning economist, was famous for decades for creating lots of research, particularly with someone named Amos Tversky, about how human brains work. And one of the big conclusions that they had, which is right in the title of the book, Thinking, Fast and Slow from Daniel Kahneman, is that we have these two systems of thinking.

And so you have the fast system one, thinking fast and slow. So the first one is the fast one, system one. And that's like your intuition. That's what I'm doing right now. Words are just coming out of my mouth. They're just spilling out. I'm not planning what I'm saying. It's just happening. And that's like GPT-4o.

And so on tasks where you're generating an email or you're editing the copy of a document that you wrote, that kind of problem can be handled very well by that kind of system one just spewing words out without thinking ahead.

that GPT-4o can do. And so in the O1 research announcement a couple of weeks ago, they did comparisons across different subject areas and on things like I just said, like writing an email or editing text, GPT-4o might even do better than O1, or they're at least comparable in human evaluations. They do about, you know, they perform about 50-50 on human evaluations.

Whereas it's tasks that leverage your slow thinking, the system two in that thinking fast and slow, where when I'm tackling a math problem that is not something that I've already become adept at, I need to break that down into parts. I need to spend time just staring with pencil and paper at the problem. And it's those kinds of problems where you have to stop and

and think that chain of thought types of systems like O1 significantly outperform. And so that's kind of my, you know, when I'm thinking about

a task that I might need an LLM for, I'm like, you know, is this a system one kind of task? In which case I'll probably go to Claude 3.5 Sonnet today. Whereas if it's a system two kind of task, like writing some very sophisticated code, doing math for sure. If I had physics problems regularly, like maybe Jeremy would, he's probably just always sitting around at home doing physics problems. Right. Yeah.

then, you know, in those kinds of scenarios, I would go to O1 right away. Exactly right. And so they do show that on those types of problems, math and symbolic reasoning, to get into the numbers, you are seeing improvements of, let's say, up to 50%, 20%, pretty high possible improvements versus if you're looking at common sense reasoning, you might still see some improvement depending on your context.

But it might be just like a few percent, for instance. So you really don't require it. And it makes sense if you are able to do kind of a quick common sense response, or if it's just needing to know some knowledge, there's no need to plan ahead, as you say.

Next paper, quite relevant, on a related topic, and the title is LLMs Still Can't Plan; Can LRMs? A Preliminary Evaluation of OpenAI's O1 on PlanBench. This paper is using a term I haven't heard before, Large Reasoning Model, LRM. Not sure if it has been used before, but

they do say that O1, or Strawberry, which was its codename, is, according to them, claimed to be a large reasoning model designed to overcome limitations of LLMs. They show in this paper that it does represent significant improvements on sort of classical planning tasks, but still isn't

capable of doing long plans of, let's say, going on to 10, 12, 14 steps. So how do they do this? What is planning in this context? What is PlanBench?

The idea here is that there's in AI a sort of whole category of problems known as planning problems, where essentially you have a goal state you want to get to, represented in some sort of set of variables. So one example that they are working with in this paper is this Blocksworld problem where you have a set of blocks.

They are stacked on each other or kind of in various physical configurations. You have a set of actions like picking up a block,

unstacking a block from on top of another block, putting down a block, and stacking a block on top of another block. And so you may have a set of configurations for the blocks that you want to get to from an initial set where you need to do this set of actions, picking up, stacking, unstacking, one by one to get there.

And there's a whole set of algorithms going back to the very beginning of AI and robotics, like PDDL, Shakey from Stanford, that solve this kind of thing, that find you the sequence of actions that gets you from one state to another state. And you can do this if you have exact actions. You don't need machine learning, even. You can just do planning. So...

Here they evaluate O1 to see whether, given a description of a state and some actions it can take, it can generate a valid plan. And it certainly does perform much better than GPT-4o and just about any other language model.

But as you increase the plan length, going to, again, 12, 14, 15 steps, it still goes to 0% correctness on this kind of Blocksworld problem, where there are just a few types of actions and a very specific state that you want to get to.

Unsurprising in some sense. This is comparing soft reasoning, so to speak, neural net reasoning to something that is much more algorithmic. Planning in this sense is essentially doing search through a sequence of things that is kind of the playground of straight up algorithms, straight up like search code routines and not so much neural networks.
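To make the "planning is search" point concrete, here is a minimal sketch of a classical planner for the block-stacking problem described above. It is not the PlanBench harness or anything from the paper: the state encoding, the function names (`normalize`, `successors`, `plan`), and the choice of plain breadth-first search are all assumptions made for illustration. It models the four actions mentioned in the episode (pick up, put down, stack, unstack) with a single gripper.

```python
from collections import deque

# Hypothetical encoding: a state is (stacks, holding), where stacks is a
# sorted tuple of tuples (each listed bottom to top) and holding is the
# block in the gripper, or None.

def normalize(stacks):
    # The left-to-right order of stacks on the table is irrelevant,
    # so drop empty stacks and sort to get a canonical form.
    return tuple(sorted(s for s in stacks if s))

def successors(state):
    stacks, holding = state
    if holding is None:
        # Empty hand: take the top block of any stack.
        for i, stack in enumerate(stacks):
            block = stack[-1]
            rest = stacks[:i] + (stack[:-1],) + stacks[i + 1:]
            if len(stack) == 1:
                action = ("pick-up", block)             # block was on the table
            else:
                action = ("unstack", block, stack[-2])  # block was on another block
            yield action, (normalize(rest), block)
    else:
        # Full hand: put the block on the table or on top of any stack.
        yield ("put-down", holding), (normalize(stacks + ((holding,),)), None)
        for i, stack in enumerate(stacks):
            grown = stacks[:i] + (stack + (holding,),) + stacks[i + 1:]
            yield ("stack", holding, stack[-1]), (normalize(grown), None)

def plan(initial_stacks, goal_stacks):
    """Breadth-first search; returns a shortest action list, or None."""
    start = (normalize(initial_stacks), None)
    goal = (normalize(goal_stacks), None)
    parent = {start: None}
    frontier = deque([start])
    while frontier:
        state = frontier.popleft()
        if state == goal:
            actions = []
            while parent[state] is not None:
                state, action = parent[state]
                actions.append(action)
            return actions[::-1]
        for action, nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = (state, action)
                frontier.append(nxt)
    return None

# A is on C, B is on the table; the goal is A on B on C.
steps = plan([("C", "A"), ("B",)], [("C", "B", "A")])
for step in steps:
    print(step)
```

Because the search is exhaustive, the plan it returns is guaranteed valid and shortest, however many steps that takes, as long as the state space fits in memory. That is the contrast being drawn with a language model whose accuracy drops as plans get longer.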

So, yeah, like you said, I like this new term LRM, large reasoning model. And this falls very neatly into the exact same thinking fast and slow buckets that I was just talking about, where LLMs, the way that they're talking about them here, that's fast system one thinking where you're just spitting words out. And the LRM is taking time to reflect and plan before generating an output.

On to the lightning round, a couple more stories, starting with an advancement rather than a paper, something a bit more fun. And the story is that Norwegian startup 1X has unveiled an AI world model for robot training. So 1X is one of the leaders in the space of humanoid robots. They have their robot Eve, and they say that they now have an AI-based world model. And this world model is essentially meant to enable you to do various types of tasks in dynamic environments. So it can simulate the world, and that's what a world model is. It's essentially the ability to predict what will happen if you take a certain physical action.

And they trained in simulation and used various techniques to get a state-of-the-art world model that is quite a bit better in real contexts, with robot handling of, let's say, clothes or individual objects, opening doors, things like that, which lets it perform pretty reliably in novel environments.

So exciting if you're a robotics person, if you are excited about the humanoid progress we've been seeing.

One more note, they have also launched the 1X World Model Challenge, where they are incentivizing more progress by offering over 100 hours of video data, a pre-trained model, and a cash prize for people able to provide improvements on top of that.

Yeah, very cool. Some people think that the realization of AGI will require robots to be able to kind of have an embodiment and explore the world, you know, as opposed to just being a digital media in, digital media out kind of model. And interestingly, this kind of paradigm, where you have a world model simulator, could potentially accelerate that ability of an AI system to explore, you know, because it's very expensive to be actually out in the world exploring. And so, yeah, if you can do that virtually somehow, like this appears to do, then yeah, it could really speed robot training up.

And the last story, AI tool cuts unexpected deaths in hospital by 26% according to a Canadian study. So this is about an early warning system called ChartWatch, which has led to a 26% reduction in unexpected deaths among hospitalized patients at St. Michael's Hospital in Toronto.

This system monitors changes in a patient's medical record and makes hourly predictions about whether the patient is likely to deteriorate. And so this includes about 100 inputs from the patient's medical record, including vital signs and lab test results.

It can alert doctors and nurses to patients who are getting sicker or require intensive care or are on the brink of death, requiring intervention. So according to the study, they looked at more than 13,000 admissions to the internal medicine ward and compared that to admissions to other subspecialty units, and it seems that it definitely helped in the context of the internal medicine ward.

Yeah, this is a big deal. And I love that it's coming out of... I grew up in downtown Toronto and I went to St. Michael's Choir School, which is adjacent, it's next door to St. Michael's Hospital. And it's, I don't know, that's like a weird personal connection to this that just makes me think, wow, that's cool, and

especially because this 26% drop is huge. I mean, a quarter of unexpected deaths. And if you think about this as an early iteration on this system and stretching forward, when you see these kinds of systems work well, like we're seeing here from ChartWatch and you say, okay, we have a hundred inputs at this kind of level of granularity. We could be recording so much more data in hospital systems and training much, you know, more and more and more better. Right.

More and more better AI systems. And so this is like just the beginning. So to already see this 26% drop in unexpected deaths with what is probably a prototype working on relatively limited data is really exciting. And I think it kind of ties into a world that I think we will have in our lifetimes where a huge amount of data is being collected and monitored, not just in emergency rooms, but in

your bedroom, in your home, and allowing us to have a really great sense of our health and get these early warning systems on people being on the brink of death and interventions being able to happen. Exactly. Pretty exciting. Worth noting, just as a caveat, this, of course, is only one hospital, so we do need more research on this.

The data collected was also during COVID to some extent. It was November 2020 through June 2022. So a little bit of a different context, but that's what you get. Like this is a year and a half long study collecting real data at a real hospital. So it's certainly a very positive signal on that front.

And now to policy and safety, we are going back to a topic we've been talking about more and more, which is how will you actually power all of these data centers that require a whole lot of energy, much more than the grid has been used to supplying to data centers.

And this next story is providing one answer. Apparently, the Three Mile Island nuclear plant will be reopened to power Microsoft data centers. Three Mile Island is a bit notorious. It was the site of the worst commercial nuclear accident in U.S. history. And it seems that there was a power purchase agreement signed with whoever controls this plant to enable it to provide energy.

They have an agreement for 20 years, and the plant is expected to reopen in 2028 and be renamed the Crane Clean Energy Center. This is reopening, to be clear, from it having been shut down in 2019 due to an inability to compete with cheaper energy sources.

So this is not sort of like reopening a fully shuttered plant from decades ago. This is more of Microsoft investing to get another source of energy in addition to, you know, I guess, non-nuclear energy.

Yeah, hopefully that thinking for months that O1 and more models like O1 will soon be doing will help us realize nuclear fusion energy in short order. But in the meantime, nuclear fission is out there as one of the best energy sources we have. It's interesting. There are some countries, like Germany, that are really opposed to having nuclear energy, but it's a great part of an energy mix alongside solar and wind, because it can provide lots of power when solar and wind can't.

You can't always guarantee you're going to have sunshine or wind. You can have batteries, and those are getting better and better as well. But having nuclear fission as your backup, as opposed to, say, oil or gas generators, is obviously way better for the environment, at least in terms of carbon dioxide. Yes, with nuclear fission, you have nuclear byproducts, but...

We're pretty good at managing those and we're pretty good at managing risks with nuclear power plants as well. Newer generations of power plants don't have any record of issues like we did with Three Mile Island. Exactly.

Next, a story related to policy. Governor Newsom signs bills to combat deepfake election content. So we've been covering SB 1047 a whole lot. That's a big regulation bill. But it turns out there are other bills related to AI that are happening.

And there's been a slew of them that have been signed into law in California recently. So these ones in particular, related to deepfake election content, include AB 2655, which would require large online platforms to remove or label deceptive and digitally altered election-related content and provide mechanisms to report this content.

AB 2839 expands the timeframe in which entities are prohibited from distributing deceptive AI-generated election material. So that's presumably meaning, you know, in the context of an election, when are you meant not to do this kind of thing? It also expands the scope of existing laws to prohibit deceptive content.

Last up, AB 2355 mandates that electoral advertisements using AI-generated or substantially altered content feature a disclosure.

So there you go. A whole, you know, little set of bills related to AI-altered content for elections. We've covered, you know, not too many stories related to deepfakes and elections, but there have been some. So perhaps looking ahead at emerging trends. Yeah. I mean, did you know that Kamala Harris has never had anyone in any audience on any talk she's ever given? Yeah, it's the AI. It's the deepfakes. 100% AI. Yeah.

Exactly. And next, a couple more bills, actually, not related to elections and deepfakes. These ones are about the digital likenesses of performers. So there's a bill, AB 2602, that would require contracts to specify the use of AI-generated digital replicas of a performer's voice or likeness.

This was informed by the historic strike by SAG-AFTRA and some of the negotiations related to that. So I guess California does have Hollywood. It makes sense that there is actual legal precedent being established here for this kind of thing.

I'm curious, John, you are a podcaster. You have a lot of data. Were you thinking of doing a digital replica at all? We are actually in the midst of exploring having the Super Data Science podcast be broadcast in other languages. So we're looking at having Brazilian Portuguese, Spanish, and Arabic as additional versions of the podcast to get us out there to the billions of people in aggregate that speak those languages.

And so we could, you know, vastly increase our audience, and the tools are starting to get pretty darn good at it. So yeah, it's one of those things where I always kind of feel overwhelmed by things that are going on and I'm like, oh man, this seems like a lot to do here. You know, you're then talking about whole new YouTube channels and podcast RSS feeds and,

But yeah, a lot of potential listeners out there that you could be making an impact on. So yeah, definitely something to explore here. Yeah, yeah, for sure. I think I mentioned this once before on the podcast. I have played around with ElevenLabs, the text-to-audio generator, and fed it like three hours of data from these kinds of recordings and got a pretty good replica. So if you wanted an AI version of yourself, you could definitely give it a try.

And one last story. Startup behind world's first robot lawyer to pay $193,000 for false ads, according to the FTC. So this is a little bit of a funny story, but I guess also a serious story. Apparently, the Federal Trade Commission has taken action against the startup DoNotPay, which was advertised as the world's first robot lawyer.

And the FTC found that this company, DoNotPay, conducted no testing to verify whether its AI chatbot's output was equivalent to a human lawyer's level and did not hire any attorneys to validate its legal claims. So DoNotPay is paying this fine of $193,000. It doesn't seem huge.

And there's a 30-day public comment period, presumably. And they have also agreed to inform consumers who subscribed to the service over the last couple of years about the limitations of these features.

They are also prohibited from making baseless claims related to these services being able to substitute for professional lawyers. You got to think that they're getting way more than $200,000 worth of media exposure here. This is one of those...

no news is bad news kind of situations? No. I think that's the other way around. Any news is, yeah, any bad press is good press kind of thing. Yeah. I think the bigger thing, aside from the fine, is the prohibition on making baseless claims related to AI being able to replace a lawyer. And it seems that this is part of a larger initiative called Operation AI Comply, which is aimed at cracking down on deceptive AI claims.

So that's kind of a major problem, people making claims about AI tools that are not true. Really weird company name, DoNotPay. I mean, I guess it's kind of, so they bill themselves as an AI consumer champion to help you. They use AI to help you fight big corporations, protect your privacy, find hidden money and beat bureaucracy. So

Yeah, I guess it kind of came from appealing parking tickets to start. And I guess that's kind of where the name came from. So like, do not pay the fine. But it looks like they will be paying this $193,000 fine. Nice, nice. Onto the last section, synthetic media and art. And we got actually a couple pretty major stories, I would say, in this one.

Starting with Snap is introducing an AI video generation tool for creators. So Snapchat, if you don't know, has a sort of feature similar to Instagram or TikTok, where you do have creators posting videos and various skits and whatnot.

Apparently, this tool will allow creators to generate AI videos from text prompts and in the future also image prompts. The tool is in beta and available to a small subset of creators, but presumably will be expanded later on. And apparently this tool is powered by Snap's own foundational video model.