#185 - Movie Gen, ChatGPT Canvas, OpenAI's VC Round, SB 1047 Vetoed

Last Week in AI

Deep Dive

Why did California's Governor veto the AI regulation bill SB 1047?

California Governor Gavin Newsom vetoed SB 1047 because he believed it focused too much on regulating the largest AI systems based on model size rather than on the outcomes and uses of the AI. He argued that the bill applied stringent standards to even the most basic functions if a large system deployed them, which he felt was not the best approach to protecting the public from real threats posed by the technology.

Why is OpenAI's new logo causing shock and alarm among staff?

OpenAI's new logo, described as a large black box or ring, is causing shock and alarm among staff because it is seen as less iconic and more inhuman compared to their previous hexagonal geometric logo. The new design is perceived as cold and brutalist, which does not align with the company's brand or the optimistic image of AI.

Why is Meta's MovieGen significant in the realm of AI video generation?

Meta's MovieGen is significant because it introduces advanced features such as video editing, object swapping, and in-painting, which allow for more precise and creative control over AI-generated videos. It also generates high-quality 16-second videos at 16 frames per second, outperforming current models like Runway Gen 3 and Sora. However, it is not yet available for public use.

Why did OpenAI launch a new 'Canvas' interface for ChatGPT?

OpenAI launched the 'Canvas' interface for ChatGPT to provide a more fluid and efficient user experience for writing and coding projects. The interface allows users to generate and edit specific sections of text or code directly within a workspace, making it easier to collaborate with the AI and make precise changes without regenerating the entire document.

Why is Google bringing ads to AI Overviews in search results?

Google is bringing ads to AI Overviews in search results to monetize the AI-generated summaries, which are more expensive to produce than traditional search results. This move helps Google recover the costs associated with AI inference and maintain their search business, which is primarily funded by advertising.

Shownotes Transcript

Welcome to the future episode of A5. Let's dive in deep where the small things thrive. Where AI is the buzz and the data never sleeps. That's us movie gen. Let's change the game, take your leaps. Last week in AI, we're breaking it down.

Hello and welcome to the Last Week in AI podcast where you can hear us chat about what's going on with AI. As usual in this episode, we will summarize and discuss some of last week's most interesting AI news. And as always, I will mention you could go to lastweekin.ai for the stuff we didn't cover in this episode and also for the links that come with this episode to all the stories.

I am one of your hosts, Andrey Kurenkov. My background is having studied AI at Stanford and now working with generative AI. And once again, Jeremie is still on paternity leave, as regular listeners know. So we have another guest co-host, another returning guest co-host, actually, and I will let you introduce yourself.

Hey, everybody. This is Gavin Purcell. I'm one half of AI for Humans. Our podcast and YouTube show specializes in kind of like trying to both demystify AI for a kind of a more mainstream entertainment-based audience, but we also do a lot of fun creative experiments along the way. My partner, Kevin Pereira, and I have been doing it for about a year and a half now. So I would say, Andrey, that we're almost experts. We're getting there. We're getting there.

Yeah, I would say so. Definitely experts like in the realm of trends in AI, especially, you know, outside of research and technical stuff, presumably. And creative stuff. I think that's one thing Kevin and I have talked a lot about where our kind of like sweet spot is, is like, if you're interested in creative stuff, we both come from creative backgrounds. I worked in TV for a long time. Kevin also did a lot of TV. So we do a lot of weird experiments with the creative tools and try to show people how to use them in fun ways.

Yeah, exactly. Your background is creative, also entertainment. And I will say, probably compared to this podcast, it's a little bit more entertaining for sure. I'm sure I listen. I listen to this podcast to get the technical details down, and sometimes I re-synthesize it in ours. So they both have their use cases. Yeah, but I also am a fan of podcasts. I love the AI co-hosts and the little experiments. So for any listeners, if that sounds appealing, do check it out.

If you want to learn more about our show, go check out AIforHumans.show. That's our website. You can get all the links there to YouTube and the podcast.

Yep, or just, you know, you can go to YouTube and presumably search there and find a bunch of cool clips as well. Yes. And real quick, before we get into the news, I do want to respond to some comments. We had a cool correction on YouTube, which is always nice. Hiding secret messages in plain sight is steganography, not sonography, according to a cryptographer, which I did not know. So that's interesting. And another one commented on us being back on schedule and it no longer being a time warp to listen. For that, hopefully we'll stay on schedule. We'll see if we manage. And a couple of nice reviews also on Apple Podcasts from Extant Pensus and Nerd Planet. I always find these, you know, names on Apple Podcasts pretty entertaining.

So thank you for all reviews and comments. Always appreciate it. Feel free to go to YouTube, to our Substack, etc.

And with that out of the way, let's get into the news. And this week actually is a great week for you, Gavin, to co-host because we have quite a few news stories related to creative stuff, to tools people can use, and not so much the more technical, open source, whatever that sometimes is the focus. And we begin in Tools and Apps with I think the biggest story of the week, at least for me, which is Meta announcing MovieGen, an AI-powered video generator. So this is pretty much Sora, I would say, from Meta. And it's actually not just a video generator. The paper they put out is called Movie Gen: A Cast of Media Foundation Models, kind of a cute title. And so in addition to generating video, it can also do video editing. It can, you know, modify video in certain ways like swapping out objects, et cetera. They also have a different model for generating audio for a video clip.

And they have just a lot of examples of ways you can use this. And the details here, we can get a little bit into it. So the model can generate 16 seconds of video at 16 frames per second. They compare it to all the kind of current models, Runway Gen 3, Sora, Kling 1.5, Luma Labs, and it just blows them out of the water. I'll try to edit in some clips as we are talking here, and it looks really good. So I'm curious to hear your thoughts on it, Gavin.

Looks really good.

And I think it's really awesome. But just like Sora, it is not available to try. And I think this is a really important aspect of both this and Sora because I spent a lot of time with these tools, Runway Gen 3 in specific, but also with Luma and Kling and Minimax, the Kling and Minimax both being Chinese models. And, yeah, it looks amazing. I mean, the one thing I'll say that's really different about this versus what Sora has shown and really what any of the video models have shown to date is the in-painting feature. And the in-painting feature that they're showing off here is really transformative for use cases if you're using AI video. One of the coolest clips is they have a shot of a guy who's kind of running in this kind of desert landscape, and they can change him or they can change the landscape around him. And that's something that's been really hard with AI video today because it's very much like that slot machine mechanic where you're like, okay, let's see what we get here. Even with image to video, you're not really sure what you're going to get.

If you're going to start being able to control this a little better, it's great. I think the examples that they've shown of this so far, there's an amazing, at the top of their blog post, there's an amazing, almost like Moo Deng baby hippo that's kind of swimming in the water, which you can show here, which is really cool. The thing that's interesting to me about this, and I'm curious to hear what your side of it is, is like, you know, Runway themselves has talked about this. Sora's talked about this. I think Metagen is getting at this as well. Sorry, MovieGen is getting at this as well, too, is this idea of AI video as a world simulator and not just as a video simulator, right? And I think there's a lot to be said for, and this may be getting in the technical side of the weeds a little bit, but the idea that instead of LLMs training on words, that soon we're going to be training, we're already training on images and video, but like training on the real world and what the real world looks like and how physics works will maybe create a much bigger, more interesting model at large. And so these kind of like high-end movie generation models feel like they're a step towards that, which, you know, as a video gamer, the kind of holy grail is you go into an environment and you say, I want to play X, Y, or Z, and I want it to look like this. And that's a really cool use case. Now, it may take half the resources of the planet to generate that video game. I know that.

I think this is a really interesting step in the next direction. I think the other thing that Kevin and I talk a lot about in the show, about the entertainment industry, but really kind of at large, there's a lot of people who are what we call the AI nevers, right? The people that never want to use AI. And I think there's a message that we always try to send to both those people, but also to the people that might be a little more curious about it, which is, you know, this is not going to slow down. So in a lot of ways, you're better off being aware and understanding and open to these tools as tools because it's only going to get crazier from here, is kind of our takeaway.

That makes sense. And yeah, to your point, the kind of general idea of training on video is something that seems to be in the future of these multimodal models, right? We have GPT-4o from OpenAI, which was trained on audio and images and so on. And it seems like probably video is one of these things that they could tap into more. And it's not super clear yet if you sort of get compounding effects, if training on video improves also your reasoning in text, but it also isn't, you know, an unreasonable theory. And there are some indications that could happen.

So yeah, video is very much still the future and present of the frontier of AI. And yeah, I think also, as you said, it's worth pointing out, this is not real time, first of all. It does take a long time to generate videos, as was the case with Sora. So the comparison to Runway Gen 3, and Luma, and these actual tools isn't entirely fair, considering they are meant to actually be used. And in fact, there's no release partially because they have stated that it's just sort of too early, it's too slow, etc. This is more of a preview.

I'm really curious to see where Sora is at, right? Because Sora is something we've now known existed for a while. And as far as I can tell from research in the background, it really has been around inside OpenAI for probably a year at this point or more.

I'm surprised it's not out in some form yet because it is kind of shocking to me. Now, they may be like, we're already burning our servers out running o1. So like we can't release a video model at this point. But I expect Sora based on, you know, Sam's new ship mentality where he's shipping stuff quite a bit. I kind of expect Sora to come out post-election this year, before the end of the year, because I don't think OpenAI wants to seem like they're farther behind. And I also think, I know you were probably following the story too, but like, Sora also, from what we've seen, there's an image model too there, right? So that might be an easy way to update DALL-E to have DALL-E 4 or you just release it as Sora. I do think that's coming soon. This feels like it's a little bit further off, but it just shows you again, Meta is throwing so much money at this stuff and doing so many things that they are going to be a big player and, you know, again, it's like Llama 4 is not that far away. And you have to just imagine the size of an open source model that's going to be that good is going to be kind of a game changer.

Exactly. And to your point on the open source aspect of this, there have been questions of like, well, are we going to get the weights of this as we have with these Llama models? No weights so far and no real promise of an open release, which I don't find surprising. Like this is actually something that, let's say, Google or other competitors don't have as opposed to large language models. So for a competitive advantage, this would be certainly something you might want to keep to yourself and not share with everyone.

One last thing on this. I think what's interesting is this fits very much into the sort of stuff that Zuckerberg has been talking about with their image models in that one of the things they also highlighted was the idea that you can take a picture of yourself and put it into a video clip. And, you know, we've seen a lot of open source things that can do that. We've seen a lot of other tools like face fusion or things like that that exist on the open source side. But this is a big aspect, I think, of what Meta wants to do. I think what Meta really wants to do is make these tools available in their apps like Instagram or WhatsApp or, you know, Facebook. And I think that aspect of it, because my theory about Zuckerberg is it's less about like being on the absolute cutting edge and more about kind of undercutting everybody else and getting people to be on the Facebook apps, like the Meta apps. So I think for him, this is another onboarding tool, right? Like if you can get a viral video of your grandmother being sent around, in 300, like, you know, raising her sword, that's a pretty good thing. Now, it won't be exactly 300 because that is a movie that has rights to it, but you can make her into a Greek soldier kicking somebody off a ledge.

Well, you know, that's an interesting question of like, did they use any sort of copyrighted data? They do say they've trained on sort of public and licensed data. So probably you can't get yourself to be Iron Man presumably, but you know, who knows? Maybe, but you have to go to the Chinese models for that. The Chinese models have no problem with that whatsoever, unfortunately. Very true. Yeah, yeah. And yeah, I think that's a very good point. In fact, I noticed in their blog post about this, which is very long, I think, if you want to check out sort of all the details. It's like 90 pages, the downloadable one, which is crazy. The paper is, by the way, like 60 pages of content, 30 pages of authors. Not really, there's an appendix. Yeah, yeah, yeah.

Super detailed paper, which I think is also very exciting on the research front for the community and also on the open source front. There are actually a lot of technical innovations going on here, which is interesting. And to your point about this being integrated into the tools, I found it interesting that in the blog post they finished with this quote,

Imagine animating a day in the life video to share on Reels and editing it using text prompts, or creating a customized animated birthday greeting for a friend and sending it to them on WhatsApp.

Yeah, there you go. They're just telling you right away, we want to have this. They're killing JibJab, Andrey. They're killing JibJab. That's what they're doing. Do you remember JibJab? Do you know what that was? I do not. JibJab was an old, for those in the audience who remember, thank you. There was an old app where you basically would put your head on these little dancing, originally it was elves, and then it was a bunch of other stuff. So it's just an easy way to create shareable material, which I think is really, you know, Meta's bread and butter.

Right. And to me, it's kind of interesting. Last week, we covered the news that Veo, Google's... They'll be on YouTube, right? Yeah, they'll be on YouTube. And then Snap is also doing this. So this seems to be like another standard playbook of all these creation tools, creative tools, adding this. Presumably, TikTok will soon have their own thing as well, it seems like.

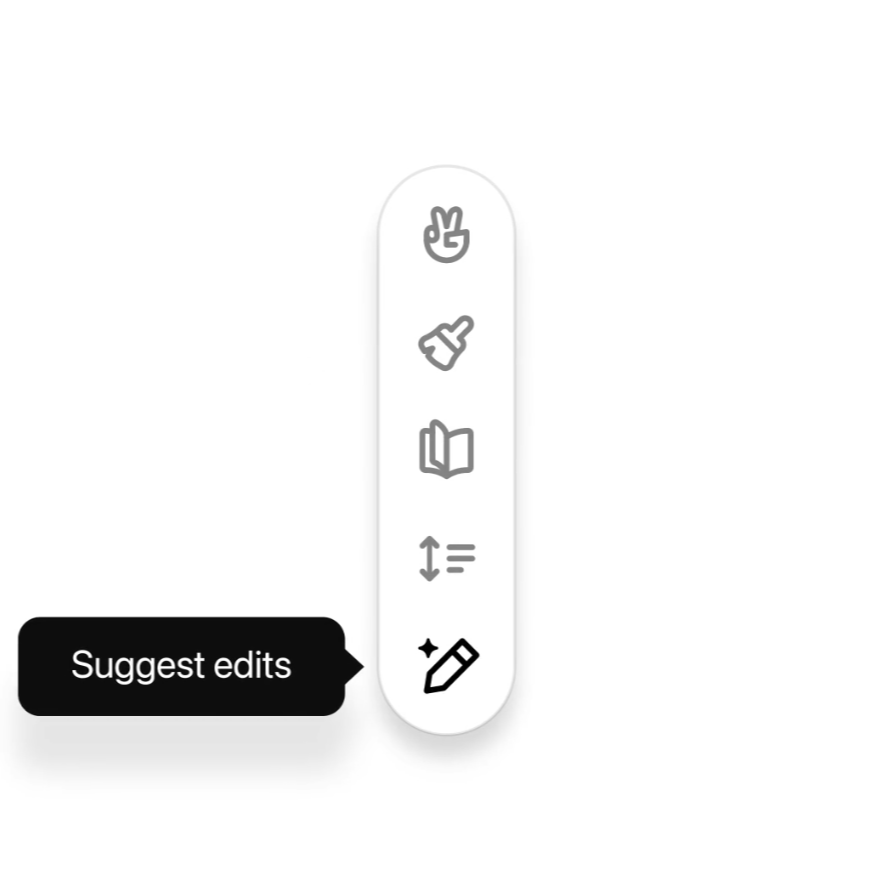

I think we're going to talk about Pika later, which is a really interesting example of this as well. And moving on to the next story, we've got one related to OpenAI. They're launching a new Canvas ChatGPT interface tailored to writing and coding projects.

So this is sort of on top of your typical ChatGPT experience. You're still talking to a chatbot, but you can think of it as kind of a split screen. So now you'll have a separate workspace for writing and coding. You can generate writing or code directly in that workspace and then have a model edit selected sections of it. So instead of, I guess, going back and forth and getting just sort of a text chat interface, you now have an actual draft interface of what you're working on. And you're almost collaborating with it in a sense on editing that draft. And this is similar to something we've already discussed, Anthropic's Artifacts, and also this tool Cursor, which is an interface where you are not chatting, you're in the code. Like you can have the AI modify it and not just generate on top of what you already have.

So this is another sort of trend we're seeing. This seems to be a new user experience paradigm with these chatbots and a pretty significant step in making it kind of more fluid to do whatever you're doing, instead of just going back and forth with a chat interface.

The thing that's really interesting to me, and I know for coders, it's a huge deal because you can change like specific things. As somebody who uses it a lot to write things or to write, you know, whatever, blog posts or use it to brainstorm, one of the things that I love about this, because I spent some time with it, is instead of having to regenerate the entire document all over again, you can just generate sections. And like one of the most annoying things about using any LLM to do this sort of thing is that like when you would say like, okay, try this and do that, but then give me another version of it, when it spits out the other version of it, a lot of times it does still change in some way the other things you didn't want it to change. So what's cool about this is, say you're writing, I don't know, a cover letter for something, right? You can choose a section of it and say like, help me with this section, but not the rest of it. And it's able to do that. And that feels like, just from a UX perspective, a massive step up when you're playing with this thing. I also think the other thing that this kind of lends itself to is when we get an agent who can go in and do something for us, if you were to tell it, you know, give me this document, but change this line. Like, I think it's going to be able to do that in a much more specific way than it could have before, if it can break it down this way. In a weird way, it's a little bit of like, step by step but within the thing, do you know what I mean? Like within a specific thing. And that does seem to give you a significant leg up when you're wanting to modify something.

Yeah, I totally agree. I think having been using Cursor, this sort of similar tool only for coding, the ability to highlight a piece of text and tell the AI exactly what you want to do there, so you can highlight a paragraph and say, you know, make this paragraph more concise, for instance, is, you know, a much better experience than just having it re-output the same stuff over and over. And imagine with coding, because, you know, bugs happen in certain sections, like that does hugely step up the ability to kind of fix parts, right? Yeah. And it also speeds up a lot of kind of boring things sometimes you have to do. Like for instance, you know, let's say you have like a list of numbers related to a list and then you take out a bit of the list and now you need to like decrement every number, like 10 in a row. It used to be you would have to actually do that one by one. And now you can just highlight that and say, you know, reduce every integer by one. Oh, that's great. That's very cool. Yeah, exactly. So that's kind of a big win for me. It can help a lot with fixing things, but also with just doing the boring stuff that in the past you would have to do yourself.
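As a rough illustration of the renumbering chore described above, here is a minimal Python sketch of what "reduce every integer by one" amounts to when applied to a highlighted selection. The function name `shift_integers` and the sample list are hypothetical, made up for this note; this is not how Cursor or Canvas do it internally, and the sketch assumes the selection only contains non-negative whole numbers (no decimals or minus signs).

```python
import re


def shift_integers(text: str, delta: int = -1) -> str:
    """Return `text` with every standalone run of digits shifted by `delta`.

    Assumption: the numbers are non-negative whole numbers. Decimals such as
    "1.5" or signed values such as "-3" would need extra handling.
    """
    return re.sub(
        r"\b\d+\b",                                # each whole run of digits
        lambda m: str(int(m.group()) + delta),     # replace it with the shifted value
        text,
    )


# Hypothetical selection: items 4-6 of a list after item 3 was deleted.
selection = "4. Eggs\n5. Milk\n6. Bread"
print(shift_integers(selection))
```

The point of the sketch is only that the edit is mechanical: the tool applies one rule to the selected span and leaves everything outside the selection untouched, which is what makes highlight-and-instruct editing faster than regenerating the whole file.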

Do you think this and Artifacts, like does this undercut Cursor as a business? Like, is this what Cursor does? Like, or do you think Cursor still has like a significant runway to go? Because I think one of the other things that we talk about in our show a lot is how, you know, companies like OpenAI and Anthropic tend to kill startups whenever they do these announcements, right? Like whatever the next step is. I know Cursor's got a ton of funding now, but do you feel like it's got special sauce on its own to keep going?

I think that's definitely the case for Cursor just because they are only about coding and it's integrated into, you know, as a coder, you always have a program in which you edit your work. Exactly. So yeah, like ChatGPT is a separate thing, it's not tied to your file system, so it wouldn't be a real game changer. I do think for writing assistants, there are a few of these for creative writing and so on, this could be a pretty big, let's say, challenger. Yeah, that makes sense.

Next up, we have another story about OpenAI, this time less usable, more for devs. OpenAI actually had a Dev Day where they introduced a bunch of developments related to software engineers. Specifically, the most exciting part was a Realtime API where you can have nearly real-time speech-to-speech experiences in their apps. Presumably, maybe similar to some extent to what we have already done with 4o and being able to generate audio in real time. And now you can modify speech in real time. On top of that, there's a bunch of treats that they announced. We don't need to go super into detail, but they're reducing some costs. They're introducing vision fine-tuning, which is kind of important because you can fine-tune things related to images, which is already the case for their text models, by the way. If you're at a company, you have your own data, you have your own use case, you can pay to modify ChatGPT to make it customized to your case. And some other stuff, but the highlight here, I suppose, is this speech-to-speech offering.

Yeah, I mean, the real-time voice API, we dug into this a little bit, because actually, Kevin and I are working on an actual idea using voice stuff, which we will hold on to for now, but I'm pretty excited about. So this is very exciting, mostly from a standpoint of it does seem like Advanced Voice, OpenAI's Advanced Voice, is kind of like the cutting edge, at least of what's out there in the world right now. But the thing that's interesting about this is being able to implement this into existing apps or new apps that would use it. Right now, it's very expensive. I think one thing that came out is like it's something like a quarter for... I can't remember what the number is, but it's very expensive trying to use it on a regular basis for a regular consumer app. But as we know, this stuff just gets cheaper over time. And I think a year from now, the idea that there could be exceptionally cheap voice-to-voice without a pause. If you've used the OpenAI Advanced Voice app, there's something really great about the fact that it's listening as it goes along and it will respond to you right away. And it does feel kind of magical.

I think my personal theory about this, and I think this is just going to go further in this direction, is that voice is going to become a massive input for everybody, right? Meaning that up till now, we got used to using voice for Siri and maybe for Alexa, but just for the most basic things. Now, with Whisper or any of these tools, it can really transcribe exactly what you're saying so it can get exactly what you want. And then if this interaction happens, and again, going back to this idea of agents, if agents do become real in the world of AI, if you can say to an agent, hey, can you write an email to this person about this? And it can just do that and then you can glance it over and say, okay, great, send it. That is a real fascinatingly great use case of what AI can do.

And I think the thing that is maybe a larger, broader thing is I think this is going to change society in a really weird way. I think we're going to look at a world in about 10 years where everybody's going to start talking to these AIs and they're going to humanize them because you're going to be spending time talking to them. I think this is going to speed up the idea that AIs are something other than just a computer in a weird way. And I also think it's going to change how we interact with the devices in front of us. When you pair these with like what Meta did with their new glasses, I see a world, and granted, I know a lot of people out there might be like, you're freaking crazy, but I see a world without keyboards, right? Like, and that is a really weird world because we've had a keyboard as our input device for a very long time. And imagine, I mean, already on our phones, we're getting used to typing on this little thing and everybody thought we would never do that. But I see a world where voice is probably the main input that we have into computers going forward. And that feels like we're laying the groundwork for this. Yeah.

I agree, certainly, to some extent. I don't know about keyboards. As a programmer, that sounds a little bit radical. But also, if you can tell your AI to do like X, Y, and Z, then you can tweak it a little bit. It's not the craziest thing. Yeah, and I definitely do agree that voice is going to be a primary modality for interacting with AI. Even already, to some extent, with 4o, we are kind of moving in that direction. And certainly, in the coming years. And it's interesting in some ways, like we've had pretty good transcription of audio for a while on our phones. Like instead of typing, you could just say something, which could be more efficient. Personally, I haven't been doing that somehow. Like the habits haven't changed much to use that, but I could see it becoming more of a case as we get, you know, these smart glasses and we all start talking to ourselves in public. Which is, I guess, that's what's going to be the weirdest thing is instead of people talking on the phone, which they've kind of stopped doing, I think you're going to see a lot of people talking, but they're going to be talking to AIs, which is going to be a strange thing. It's going to be interesting. Yeah. Yeah.

And next, we are moving away from OpenAI to a bit of a startup, and it is Black Forest Labs, which has released Flux 1.1 Pro and an API. So, yeah, a reminder, Black Forest Labs are a pretty fresh company from the creators of Stable Diffusion, the seminal text-to-image model. And they are, I would say, currently a leader, if not sort of the best image generation model. We've seen that when they enabled people to play around with it via Grok on X. And so here we have the next iteration of their model, Flux 1.1. The images are kind of mind blowing. And before, you know, if you looked, you could still see some things in these images that gave it away for photograph type images, things that could be seen as real images. Now it's getting pretty hard to find anything.

And alongside that model update, they also did release a paid API for developers. So now if you're creating your own app, you know, outside of something that is already out there, you can use Flux in it with the API.

Yeah, I mean, Flux blew us away when it first came out. Like I was kind of shocked by it. And really, in my mind, I put it kind of taking the place of what Stable Diffusion was because Stable Diffusion has kind of fallen off. You know, we'll see what James Cameron and those guys do with it. But yeah, I consistently get the best results out of Flux. Now, the interesting thing with Flux is right now, I'm sure there are, actually there are a few programs where you can pay a subscription and it's integrated into it. But Flux is pay per use oftentimes, if you use it on Fal or on Replicate, all these, you know, different sort of server systems. It's amazing. And I think that the truth of the matter is I'm just thrilled that there's another company that's pushing image modeling forward. And the thing I'm most excited about is they teased a video model, right? Like teased a video model when they came out with Flux 1.0, right?

If they can come out with an open source video model that is better than, say, the Runway Gen 3 model, which I think is kind of like if you look at the Runways and the Lumas and all those sort of things that are out already, that feels exciting to have another person pushing that forward. But it's a cool company. I think they're doing interesting work. And again, it's a really great thing because it opens the door to these companies.

It's also powering Grok, which has been the case for a while and allows you to do certain things that you can't do in other imaging models, which is always an interesting thing if you're trying to play with creative ideas. I mean, I don't know if you've seen the work of the Dor Brothers, they're the guys who made the Trump and Hillary Clinton and Kamala go into the convenience store video, which a lot of people hated, but that sort of thing, which is kind of a piece of art, right? Like a piece of art is created with open source models because none of the closed models will let you generate images of famous people. Although Midjourney will let you get close. Midjourney has the weirdest things where it will, it'll kind of mostly get you there, but not all the way.

Black Forest Labs and Flux have certainly kind of come out really quickly since being founded. And this, it's not just better, it's actually a lot faster. So they say that it delivers six times faster generation speeds, which as someone trying to do a creative project or just playing around with it is, you know, a game changer. Six times faster is... Yeah, crazy. Definitely would be very exciting to see them release something related to video. And they did just fundraise and get a bunch of money. So we wouldn't be surprised. Oh, they did? How much did they raise? I'm curious about that. Do you know? I forget the exact number, but it was like in the tens of millions. Yeah, yeah. So I mean, they're going to be around for a while then. That's good. Yes.

Next, moving away from generating stuff, we have another tool-related story. Microsoft is giving Copilot a voice and vision in its biggest redesign yet. There's a bunch of new features being added to it. It has this virtual news presenter mode. It has the ability to see what the user is looking at, voice feature for natural conversation, which is similar to OpenAI's advanced voice mode.

And this redesign is being implemented across mobile web and their dedicated Windows app.

I don't know. I forgot Copilot is a thing, to be honest. But while there's a bunch of, there's a bunch of Windows users out there that like, and by the way, I was in a Best Buy the other day and they are schlocking it hard on their PCs, right? Everybody's schlocking on their PCs. I will say this to me feels like what, you know, OpenAI, Microsoft has a giant investment in OpenAI, obviously. I think they own like 40, they have 49% of something of OpenAI. I don't know if it's profits or if it's, if it's ownership of the company, but they,

looks like to me they got the tools that OpenAI announced and then dropped, right? Like what I mean is like they put all the OpenAI stuff into Copilot. One of the things that people may or may not remember is that Mustafa Suleyman, the guy that was the Pi co-founder, is now running Microsoft's AI division. And I think that's a big step here. He's kind of redesigned Copilot to be a little bit more friendly and open to doing stuff. And I just think of Copilot as...

the kind of like normie way into a lot of this stuff. Now, granted, I don't know what their audience is for this. And I know there's been some stories that like Copilot, you know, a lot of businesses have tried using it. It hasn't been that useful. I think in some instances, this will help it a lot, especially because by the way, Apple intelligence still not out. And I have a new phone that I still don't have access for now. It is going to come out, I guess, at the end of this month, finally. But

Copilot is a very powerful service because it's powered by OpenAI. In fact, there's even a chain of thought. I don't know what they called it, but there's a chain of thought that they released that's clearly o1 in some form being dumped into Copilot as well. So overall, I think this just makes sense to me. It's like Microsoft has a big chunk of OpenAI. They're going to roll out their products under a different brand in the Microsoft world. And I think people will probably use it. I think this will get in front of a lot of people.

I think so, yeah. Maybe because I use a Mac and don't use Windows very much, I forget about it, but I'm sure it's pretty prominent in the OS and across anything you use in Microsoft. And I do agree, it seems like there's a bit of influence of that Pi sort of consumer-facing, and definitely it looks more sleek and it looks a bit more approachable compared to something like ChatGPT. So I think they're also trying to differentiate a bit from the other chatbots, where it's just a text box, you know.

I think the thing I would really love, because I do have my closet up here. I have my Microsoft gaming PC that I've used for AI stuff before that I haven't brought down for a while just because a lot of the stuff I'm doing is in the cloud. But I would love them to do, and again, this may go back to not being able to do it yet because you need an agent that can operate on your way. I would love it to be able to do things on my PC for me. I don't even have to do stuff on the internet. If it can do something as simple as if I can tell it, you know,

go update this piece of software or not even that, like approve this piece of software, like things like that, or even like, you know, eliminate this file. That would be a really huge benefit to me. And I know at one point Microsoft was really trying to get into that, like finding ways to make it useful feels like the next step here. Like, okay, it's cool. I can talk to it and I can make it say different weird things. But right now the use cases are not that strong. And I don't think we'll be that strong until we start having it

have some sort of ability to manipulate files or do stuff for us. Yeah, I totally agree. I think the next step would be something like, you know, in this folder, delete all image files or rename each file by taking out this little piece of text, something I've had to do, you know, annoying repetitive tasks. That's going to be AI pretty soon.

And the last story, I think you mentioned Pika, and now we are covering their news. They have released Pika 1.5. So just a reminder, Pika Labs is an AI video platform, one of these things comparable to Luma and also Runway Gen 3. And with 1.5, they are focusing on hyperrealism. In particular, they highlighted hyperrealism

Pika effects, which are these lifelike human and creature movements, sophisticated camera techniques. And I think for me, the most cool part was seeing some of these videos with images

like physics seeming thing that had like smoke and I think things dissolving, like being kind of liquidy. And it was pretty smooth. I was, I was pretty impressed by this. Yeah. So I think what, so there's a couple of things going on here. One thing Pika released a new model, which is great. And you always want to see these AI videos companies push forward to come out with new models. But Pika has always been a little bit of a little behind some of these other models, right. In terms of quality. The thing I think that's really different here is,

And I think a smart move on Pika's part is they've pivoted slightly into these, like almost I'd call them like AI animation templates. So there's about six of them that they call Pika effects. And this is like slightly different than what they're in their beginning of their trailer video. They're kind of talking about the ways that you can manipulate the new AI video model. But these are like,

specific animation types that you can do with stuff. And one of them is called inflate. One of them is melt. One of them is cake. So you can make things out of cake. So what's cool about this is you can take any image and it will give you a similar effect and it kind of knows what the effect of it's almost like a video LoRA in some way, which is kind of cool. And, uh,

I think the smart thing for Pika is it's a little bit like when Viggle got popular, right? If you remember Viggle, the company that made the Lil Yachty kind of jumping on the stage thing and everybody did those animations. If you can find something that will go viral for you, it's a massive selling point. But again, going back to like the Meta stuff, it's like the way that you get people to use this is by sharing it, right? And some of these AI video models are looking to be

high-end tools for filmmakers or even AI filmmakers who are going to spend all their time and make a two to five minute film or maybe longer at some point to make a really compelling video to watch. Other ones are really about capturing that kind of like casual user who's going to make something funny with their image and do something. And

That feels like the right direction for Pika to go in, to me, because in some ways, I don't think Pika is going to beat out even the Lumas or the Runways of the world. So, you know, it did raise a lot of money already. I think Pika raised like something like $70 million. And that feels like a raise for somebody that's trying to be the AI video actual model generator. But...

This could be a really interesting use case if they can grow and get big enough. I don't know if it's, it feels like to me, like, I don't know where this company goes from here. Maybe there's a world where they can make this into something, but it feels like

It's going to be a tricky pathway, but I really do love these new effects. I think it's worth trying. And anybody can do it for free. That's the most coolest part about it. It will take you about a half an hour to get a result back because the free generations are put way behind in the queue. But I think it's at pika.art and you can go try it for free. And we had a lot of fun with it. We actually did our thumbnails for our show. And, you know, the inflate thing was really weird because it's two of our heads. It inflated us and then brought us together into some weird flesh bulb at the bottom.

which was really disturbing, but it's fun to watch. Yeah, that's a good point. I think personally, with all of these video generation services, it does feel a little implausible that you could actually make it a business on the consumer front. Certainly, I think OpenAI, we've known, has...

gone and had meetings with Hollywood people. And I do think part of the reason they haven't released Sora is that it wouldn't be a real moneymaker from a consumer perspective. Yes, yes. So yeah, with Pika, this idea of Pika effects, maybe something more filter-like, right, which people already use a ton of, could be a smart play.

Well, and also it's like you think, well, could a company like, I don't know, maybe not ByteDance, but somebody who's got like Snapchat come in and buy Pika. Yeah, sure. That's something that'd be really useful because then you turn this whole group on to making more effects for you. I just think it's,

It's an interesting thing because I think with Sora and even with Runway, you can tell they're actually trying to push themselves in some ways towards Hollywood, right? Because worst case scenario, Runway and Sora could both make incredible drone shots, right? Or establishing shots that could actually be probably close to used in movies right now. They may be a few years away from making full movies from this stuff, but Pika feels like maybe it does need to go down that pathway. Exactly, yeah. I think Runway in particular is...

building itself as a, you know, it's not just video generation. Runway has a whole suite of AI tools and all that stuff. Yeah, yeah, exactly. So there needs to be some differentiation in the space, it feels like, and Runway is definitely the leader on that front of trying to be like for creative professionals.

And moving out to the applications and business section, we begin with another big story of the week, one that isn't surprising, but I guess is nice to have. And that is the end of the OpenAI fundraising saga. So we've been talking about this on and off. We've covered a bunch of rumors here.

Well, OpenAI has now closed their VC round, and this is the largest VC round of all time. Oh, is that right? I didn't know that. That's amazing. Yes, exactly. So they have raised $6.6 billion

from various investors, which values them at $157 billion post-money. You know, it has a bunch of investors, as you might expect, Thrive Capital being one of the previous investors. They have a lot of reoccurring investors in this, although there are some interesting details here. Supposedly, they did ask the investors not to invest in other competing AI companies.

which I guess makes sense, but also is a bit of an ask for OpenAI, which does have pretty significant competition from Anthropic and Google and so on.

But, yeah, it seems like people have a lot of faith in OpenAI still despite competition. Yeah. So I've been thinking about this story a lot. We talked about it on our show as well last week. And one of the things or two things that really stood out to me, one is obviously the size of this round is big. Right. And you can't imagine like any almost any other startup in the history of startups, as you just said, ever raising something as big without going public. Right.

But two, and also I've been thinking a lot about like what this money is used for, because, you know, one of the things that's famous with OpenAI up to now is that like, it's not, it's not making money overall, right? Like it's, it's bringing a lot of money in and it does have a lot of money coming in, but it is still burning through money overall, right? Like its costs are higher than its profits. And I think my thought is, okay, well, you've got 6.5 billion dollars now to do whatever the next generation thing is, right?

I have been thinking about like how far away we are from seeing what OpenAI has in its back pocket, meaning that like we're just now seeing o1. Supposedly the rumor is that o1 is what Ilya saw back in the day that freaked him out. And that was like a year ago almost now, right? Like back in November. Sorry. Yeah.

And so my question is what they're showing to investors because investors are going to get the open cloak, like look at what the company is doing right now. They are not going to be throwing a $6.5 billion to the company if they don't know what's happening.

Clearly, the next step of whatever is behind the door right now is a significant step. And I would imagine, you know, they've got... I can't remember what his name is, but the guy that came in as like the new business guy, Brad something. I'm sure that they are projecting out all these different business use cases for the software that don't exist yet. Because there's no other way to imagine developing it at that high of a valuation without that. So...

I think this is a pointer to how big OpenAI's next leap could be. Now, granted, I'm not an expert, but what I'm thinking is the only way you get this many people to put this much money into something is because you've shown them that there is a massive output of money on the other end. That's what this feels like to me. Yeah, exactly. I think that's sort of the right take, primarily. I mean, obviously, they are burning through an insane amount of cash today,

as is, just due to the employees. I do think they're not profitable at all at this point. It's not clear what the margins are on any of these chatbots. But even that aside, I think they are still very much seeking to build AGI, right? That's their main thing. And that would involve training GPT-5. And, you know, GPT-4 cost on the order of hundreds of millions. These frontier models in general, including Llama 3, are costing something like hundreds

of millions, and GPT-5 could cost like, you know, tens of billions potentially, which is crazy. That's the funny thing, is like, tens of billions, they didn't get that, do you know what I mean? That's the funny thing. Now granted, they've got Microsoft in their back pocket to help them do things, and there is that story where like Microsoft is helping to turn back on Three Mile Island, and they're going to get all the resources that Microsoft is going to try to pull for AI training and data centers, but like

That's the thing that kind of worries me is that like if they don't have that thing kind of the next generation trained already, then like there's a lot of money that's going to get burned through just to do that. Right. Because they've got to buy the chips. They got to do all this stuff. That's why I think GPT-5 is probably baked already.

And that this is a promise, this investment is a promise based on what the results of that are, right? Like, I think that's probably what this is. Yeah, I could see that as well. It's been, you know, I forget, like almost a year and a half or quite a while since GPT-4, right?

Sorry, o1, their new naming scheme is so confusing. But yeah, their reasoning model certainly was a pretty significant leap. But still, it doesn't feel like we've had GPT-5 and they haven't released GPT-5. So I guess that's got to be the question of like, do they have it? Do they not? I think they definitely have started training it, probably not finished yet.

And I guess you would need the billions for GPT-6. Yeah, that's kind of what I think is probably the case, is that GPT-5 is at least started training, if not, like, not done. But, like, I think that probably the next version of what... And now you're right. It's, is it o1 we're going to get? It's already done, right? o1, because o1-preview's out. Maybe it's going to be called o2 for all we know. Or there's GPT-5 plus o2. Like, I really wish we'd get some sort of significant things. But it does feel like

this raise is really about where it goes after that point. The other question is like at some point this company, you know, $157 billion valuation, this is kind of businessy, but like,

A company like that has to go public at some point. And by the way, maybe that helps OpenAI because I do think in some form, OpenAI is probably a little Tesla-like in that it would have a lot of meme stock potential. So maybe if they go public, it just shoots their valuation up in a significant way versus something that would be more difficult for a different company.

For sure, yeah. They have the brand recognition of ChatGPT, and I think for retail investors, they know of that, so I could see that happening. And one last interesting detail here,

for the fundraise, it sounds like there was a provision that if OpenAI failed to restructure into a for-profit organization within a year, the investors could claw back the money. So they are really promising here that we are going full-on for-profit, normal company, you know, forget this non-profit business. I mean, I think you have to if you're raising that much money, right? And again, you know, you can argue that it should be a non-profit and there's a lot of people out there that believe in that. But also like,

there's no way to get that much money from people and say like, okay, guess what? We might still be a nonprofit. Like you got to change. Do you remember that like original image that was on the OpenAI site, which talked about how it was a nonprofit and like, it wasn't going to spend, you know, it just was a bad investment. That can't be the case when you're taking the $6.65 billion and you have to be promising at least these people are going to get, you know, I don't know, two to five X, if not more on the money that they're investing. Right. Right.

And next, we've got a story that hasn't generated so much hype, but I do think is kind of interesting. The story is that Google is bringing ads to AI overviews. So they're going to start displaying ads in these AI-generated summaries provided in certain Google search queries. And we'll also be adding links to relevant web pages in some of the summaries.

So this has been something we've always wondered and that has been a kind of conversation topic. It costs a lot to do this kind of AI inference for search, much more than just a normal Google search. So you need to somehow pay for it, right? And so we've been wondering, do we get ads? Is that going to be the business model? And here we go. We are starting to get it. And I do think there has also been...

I forget if this is actually out, but I've seen information about this coming to Bing as well. So I think not surprising, I suppose, kind of what you might have expected, but still interesting.

I guess worth noting that nothing comes for free. And now if you do use this, you will soon start seeing ads coming out and being labeled as sponsored, appearing alongside non-sponsored content. Yeah, I mean, you have to realize Google was giving up probably the most valuable part of their search page to this AI.

search paragraph that they were producing because oftentimes that very first result is a sponsored ad, right? Like you can search for REI and you get a, you get a Yeti ad at the top, right? Like for camping gear. And

I think this only makes sense to me. The other thing that follows up on is the company Perplexity, which is like an AI direct search engine, also announced that they're having a business model planned on when you reply to a question, when you reply to a... So you put in your search query, you get your answer, and then when you reply, they're going to allow sponsors to reply back to you, which is an interesting different way of looking at this because then you've already shown intention. So imagine that you're searching for like...

what's the best cooler I can buy for a camping trip? And it comes up and it says, these are the three coolers. And then if a Yeti cooler, you say like, hey, tell me more about the Yeti cooler. Well, Yeti could come out or REI could also say like, hey, do you want to see a discount on REI? That just feels like this is the give and take of...

of what we're going to see with these things. Like, unfortunately or not, this is the money that has paid for the internet at large for a long time and grown these companies into, in some cases, trillion dollar companies. And Google specifically, uh,

is trying to figure out how to make this AI stuff make it money. Up until this point, I think they were playing defense, trying to kind of like break the fact that ChatGPT was taking a ton of search. I don't know about you, but like I spent a lot more time searching in ChatGPT now than I thought I was going to. Like I actually asked the questions that aren't as explicit.

specifically related to like finding a product. I often ask in ChatGPT, which is probably like at least half my search queries. So this is just a way I think that they can now monetize this thing that they've invested a lot of money in. I still don't know about the long term. I think Google's search business is kind of borked in that, like, I think there's going to be a lot of different people coming along. They're going to be trying to do it in different ways. And Google's just got to find its way to at least hold on to like

at least half of that, right? If not more, because that's their, the vast majority of Google's business is search advertising. Exactly, yeah. They really do need to find a way to stick around and,

I guess this kind of feature of theirs, the AI overview, is pretty comparable to what you've seen from others, providing a summary of various web pages. It was a bit silly and made some funny mistakes early on, but I'm sure they've kind of improved on it over time. For me, the interesting question is, are they going to release a sort of

optimized search for, let's say, creative professionals that will cost more to rival Perplexity, right? Because for Perplexity, they do have a more expensive tier of model and of search. And you have to pay $20 a month to be able to do this more in-depth kind of exploration. And certainly, I could see a lot of people paying that subscription cost. Yeah.

to be able to use that as a tool. I mean, at this point, even it's like, I would pay for a Google search product, probably. I mean, I already pay. In fact, I have to, I have so many like dumb AI charges that are going against my American Express right now. I have to kill some of them, but like I pay that 20 bucks a month or whatever it is to Google for the, for the cloud storage. Right. And like, in some form, I wish that was like YouTube premium where like, I was not having to watch the ads or I was getting a more dedicated Google search tool. And I think

And that again goes back to like search, you know, subscription revenue versus search revenue. And it'll be interesting to see. I think the other big thing with Google is, you know, if you listen to like, Nilay Patel from The Verge is really good on this, but the entire internet is changing where it used to be based on search and SEO. And now it's going to be based on these kind of AI searches. And that just is going to fundamentally change the business of the internet. And, you know, it used to be about how do you get to the top of the search page? And now it's going to be a little bit more about like,

A, how do you get surfaced in these AI search engines or how do you get surfaced by the AI? But also like, how do you make it so people go directly to you versus trying to come to the Google page, which is going to be a weird transition point for the internet at large, I think. Mm-hmm.

Onto the lightning round with some shorter stories. We begin with a bit of a trend. Anthropic has hired another OpenAI-affiliated person, this time a co-founder, one of the lesser-known co-founders, Durk Kingma.

Kingma, who was there from the start, did leave OpenAI back in 2018, joined Google. So actually, this is not someone leaving OpenAI, as you've seen in a lot of cases. But nevertheless, yeah, Anthropic has a lot of former OpenAI people now. And if nothing else, I'm sure that helps their case for being a strong competitor.

Yeah, I mean, Anthropic also has drops coming, I'm sure. If nothing, Opus 3.5 has got to be baked and ready to ship at some point. I don't know what they're waiting on, but also Sonnet and Opus 4 is probably coming. When you add a 3.5 level, you know there's a 4 coming at some point as well, too. So I'm excited to see where they go from here.

Next in our story on OpenAI, as always, we have a lot of these. And I like the title of this article. OpenAI's newest creation is raising shock, alarm, and horror among staffers. And it is their new logo. So supposedly, in a recent company-wide meeting, they presented this new potential logo that is being described as a large black

O or ring, basically something like a zero or like the letter O. And this is moving away from their, I would say pretty iconic at this point, logo of this hexagonal geometric shape that I've seen so much over the years. Yeah. And I would be pretty bummed if they actually do make this change because this sounds a lot more boring. Well, also, isn't the scariness of AI really helped by a giant black dot that is like an object

zoom in and out when it talks like that is the scariest version of AI I can imagine is like a black thing that just kind of like it's almost like a 2001 sort of vibe right like it and I think that like I hope this isn't the real logo because it it doesn't look compelling to me it's not optimistic it feels very um brutalist if you know much about like an architecture and

I don't love brutalist architecture. I find it very like bland and kind of cold. And I hope this isn't the case for what they're going to release. Exactly. It sounds very cold, very sort of inhuman. Yeah, exactly. Like you think we would want to push it a little bit more towards the human side rather than it being the inhuman side. But look, maybe Sam Altman is just racing us to the ASI and we're all going to be batteries that get plugged in. Maybe he's already made a deal with the AIs.

Yeah, who knows? Next, moving away from OpenAI, the next story is that Waymo is adding Hyundai EVs to Robotaxi fleet under a new multi-year deal. So this is about the Hyundai IONIQ 5 electric vehicles, a pretty expensive line of electric vehicles, you could say comparable to something like Tesla.

And they are saying that Waymo's sixth generation autonomous technology that they are calling Waymo Driver will be integrated into a significant volume of IONIQ 5 EVs to support their growing Robotaxi business.

For me personally, pretty exciting. It does seem like Waymo is a bit constrained in their ability to get the hardware out there. If you go to San Francisco now, for a while now, anyone in San Francisco could use Waymo. The wait list is over and now it takes like 25 minutes to get a Waymo. It's crazy. Oh, really? Oh, wow. So I'm actually in LA. I got approved. I haven't done it yet, but I'm on the beta test for it here. I might try to take one soon. But

Well, of course, right now they're all Jaguars, which is like a very expensive car. And I also think the interesting thing with Waymo versus something like Tesla is obviously the hardware you have to put on the car with the LiDAR is a more significant kind of lift than it is just to like ship the car as is and hope it works. I do think, though...

And the more I look at Waymo, and again, I haven't been in one yet, but I've learned a lot about it. I've been kind of obsessed with driverless cars for like 10 to 15 years because I thought it was going to come sooner than this because Elon promised it a long time ago. But I think this is as transformative as in some ways as AI is going to be for the average person, because I think there's a world not that far away if this is working, which it seems like it is right now.

where 10 to 15 years from now, you just don't need to buy a car, right? And I think that's a big, huge change to American culture. Now, will people still buy cars? Of course they will, right? Like there's going to be people that buy cars for fun or just because convenience Waymo doesn't service them. But when you think about city driving...

And being able to get around inside cities, I just think that this is going to probably save people money versus car ownership. And that feels like a big step, and just having more cars that they can put their technology into makes sense to me. Like, I think ultimately, the key to this and what Cruise was always so interesting about is that Cruise was trying to promise that you could put their software and their hardware onto pre-existing cars, right?

In a world where like, say, Hertz finishes with their rental car fleet for the year because they always turn their rental car fleet over. Imagine a world where Waymo just buys those, right? And they're a lot cheaper. You can outfit them all. And then suddenly you've got an extra 100,000 Waymos. That feels like where we're going to be getting to. And I think that feels like a big step in the right direction. Yeah.

Yeah, I totally agree. This was something that was being discussed early on, like, you know, many years ago when people were still excited about self-driving technology, this idea of the end of private car ownership. Yeah. That once you could just call, essentially for probably very little money, like you could always get an Uber and it won't be very expensive and it'd be self-driving Uber, right?

Maybe you don't need to buy a car. Personally, I would find that pretty exciting. But yeah, I mean, why not, right? Like, I mean, again, like my wife and I have talked about, we live in LA now, but when my oldest daughter's about to graduate from high school, we're going to go back to New York, I think, which is where we love living. And one of the most amazing things about New York is you don't need a car, right? Like you really don't. And you can walk around and get around to so many places and it saves a giant cost, but also hassle. I think in the world where that happens in most major metropolis cities, which I think is possible, I think we're getting there.

Yep. Next up, we've got to have a story about chips and hardware on the show. So this one is about Cerebras, which we've covered many times. They have their own kind of cool chip design that's very different from the standard ones. They are filing for an IPO. So they have released this investor prospectus.

And they are going to try to do this initial public offering, which is a way for investors to buy their stock on the public market, a way in which they could raise a lot of money.

So we haven't seen a lot of these; AI companies are staying private, like OpenAI, of course, getting a lot of investment. Cerebras is pretty mature. They've been around for a long time. They've had many rounds of fundraising. So I suppose it makes sense for them to try and get more money via an IPO.

Yeah, I mean, I assume that these IPOs are coming for a lot of these companies. I mean, Cerebras at least is a chip company, so that they have like, so they can show a scaling, especially if AI continues to go. I mean, look at Groq, G-R-O-Q, that company as well. Like, I think there's going to be a lot of these companies that are hardware based that are probably going to do okay. I think the hard part is, though, is like, any of these companies is competing against conceivably the largest 800 pound gorilla of 800 pound gorillas we've ever seen, which is NVIDIA. And like,

My feeling with all these hardware companies is like, okay, can you show... I know both Cerebras and Groq have shown significant advantages over NVIDIA, especially for AI, but like...

You're competing against the most capitalized, one of the most capitalized companies in the entire world who has thousands and thousands of employees fighting against you. Like, it would just be a tricky investment for me because I don't know if at an IPO level you can see something like this totally taking off. But again, I just think more of these hardware companies are going to get to this place. And last up...

A bit of drama, and we always love having something that's a little more dramatic and perhaps amusing on the show. And this one is about a silly-ish thing that happened with a startup. The title is Y Combinator Is Being Criticized After It Backed an AI Startup That Admits It Basically Cloned Another AI Startup.

So we talked about Cursor early on. That's an interface for coding. And now we've had the story about

PearAI, which is also an interface for AI coding. They actually did a fork of an open source interface called Continue. And the part of this that was bad is that they replaced that open source license that you're supposed to keep. For this one in particular, you can't change the license.

They just replaced it with what appears to be, or was said to be, an AI-generated one. They just ChatGPT'd it and pasted it all over. And of course, they came in for a lot of criticism. They kind of admitted it. And they did, by the way, say that they forked the project, so they didn't sort of pretend this was theirs. But nevertheless, this is kind of a silly thing for a software company to do.

And yeah, they came in for a lot of scrutiny for sure. Listen, in this world, there is a very fast turn to the "you're a grifter" mentality, which I don't know if you followed. Like, there's that Reflection 70B story, which was obviously quite a thing.

I think a lot of people in this world are just trying to make things that are interesting and cool. And cloning software obviously seems pretty annoying. And I think the bigger thing is if these guys did this and they weren't a Y Combinator company, it probably wouldn't be nearly as big a deal because Y Combinator has the kind of history. It has the kind of gravitas of both Sam running it, but also Paul and all those other guys that kind of started this company that Airbnb has come out of and all these other sort of things.

I think in general, these stories...

get overblown quickly. But at the same point, like it's kind of dumb for these guys to have done this and kind of done it the way they did. And I think part of that side of it is like everybody's just racing to try to do these things as quickly as they can because they want to be able to like make their impact on this world. There's a lot of people in this space who are like trying to believe that they have the next big thing and they want to get to it before somebody else. And sometimes I think it's just better to like, hey, take a second and think about

a truly unique, interesting implementation of this stuff rather than how can we make this thing that somebody else did and just do it in an open source way. Although I will say also, an open source Cursor is not a bad idea in general. Like it's good to have something like that, but the business side of it may just be different. Exactly. And you're right to say that this is not kind of a huge deal. We shouldn't think of it as demonstrating that they're grifting. In fact,

The bigger side of this is how this reflects on Y Combinator. For a lot of our listeners, you might know Hacker News is a site where programmers go to chat about these kind of things. And of course, there was some discussion there and some criticism that Y Combinator has perhaps declined in terms of their process, their due diligence, etc.

But now we're getting very inside baseball for Silicon Valley. One thing I'll say is that Y Combinator is kind of like, let's call it the Harvard of startup founding, right? And so like anytime a Harvard or somebody at that level screws up, there's going to be a thousand people that come out of the woodwork and talk, you know, start calling this kind of like old...

generational thing out of touch or like clearly immoral in some way, because it gets the attention on it. I think Y Combinator is still a fascinating company. I think they do interesting things. I think they give startups a really interesting lever to get off the ground. And it's just, it feels a little bit like, what is it called? Sour grapes, a little bit, but also understandable.

Onto research and advancements, in which we have a couple of papers. The first one is titled, Were RNNs All We Needed? And RNNs, if you don't know, are recurrent neural networks. That's the thing that people used to use before transformers became a thing, where you basically have a bit of a loop of input and output and you do kind of an intuitive thing, which is you take one input at a time and you go through your input and

and process it. This used to be the way we processed text before transformers. But that fell out of favor for various reasons. Basically, it was kind of harder to train, harder to scale up and parallelize due to how that works. And in this paper that is co-written by Yoshua Bengio, notably a very influential figure in AI, they took the traditional forms of RNNs, LSTMs, and GRUs

and simplified them a bit, removed some of the things that required that inefficient training, leading to a minimal version of the RNNs that they say might actually just work, might be usable and comparable to transformers and things like Mamba and so on. So that's why they have that question of, were RNNs all we needed? Wow.
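To make the "minimal version" idea concrete, here is a rough sketch of a simplified gated recurrence in the spirit of what is described above. It assumes a scalar hidden state and a gate that depends only on the current input, not on the previous hidden state; the function names, weights, and inputs are made up for illustration and are not taken from the paper's code. Because each step then has the form h = a * h_prev + b with a and b known up front, the steps can be combined with an associative operator, which is what makes a parallel scan possible in principle.

```python
import math
from itertools import accumulate


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def min_gru_sequential(xs, w_z, w_h, h0=0.0):
    """Classic loop: one input at a time, carrying the hidden state forward."""
    h = h0
    outputs = []
    for x in xs:
        z = sigmoid(w_z * x)      # update gate, computed from the input only
        h_candidate = w_h * x     # candidate state, computed from the input only
        h = (1.0 - z) * h + z * h_candidate
        outputs.append(h)
    return outputs


def combine(left, right):
    """Compose two steps h -> a*h + b (left applied first, then right)."""
    a1, b1 = left
    a2, b2 = right
    return (a1 * a2, a2 * b1 + b2)


def min_gru_scan(xs, w_z, w_h, h0=0.0):
    """Same recurrence written as a prefix scan over (a, b) pairs.

    accumulate() runs sequentially here; the point is only that `combine`
    is associative, so a parallel scan could evaluate it in fewer steps.
    """
    coeffs = []
    for x in xs:
        z = sigmoid(w_z * x)
        coeffs.append((1.0 - z, z * w_h * x))
    return [a * h0 + b for a, b in accumulate(coeffs, combine)]


inputs = [0.5, -1.0, 2.0, 0.0]  # illustrative values only
print(min_gru_sequential(inputs, w_z=0.7, w_h=1.3, h0=0.2))
print(min_gru_scan(inputs, w_z=0.7, w_h=1.3, h0=0.2))
```

Both functions should print the same hidden states, up to floating-point rounding. A traditional GRU or LSTM cannot be rewritten this way directly, because its gates depend on the previous hidden state.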

Now, they don't answer that question, because they don't scale up and actually compare at large scale, which is what we care about these days. They only have initial results on a relatively small dataset, where the results do seem pretty comparable and the trends look good for this small set of data.

So ultimately they do kind of justify the question being asked. They don't so much answer it.

Let me ask a question as a non-technical person, and I'm sorry for the audience. I know that probably the vast majority of the audience is more technical than I am. You know, they've always talked about this idea that LLMs can scale to a certain point, but then there's going to be a fall off. But the idea that LLMs alone are not the answer to AGI. Is the idea with this like a parallel kind of like pathway that could somehow intersect with LLMs to kind of make a much larger model together?

Yes, I think that's pretty much it. And this has been a real trend sort of in the background where people have been exploring alternatives to traditional techniques for LLMs for a while. Because as you scale up, there's this kind of famous quadratic thing. The longer your input is, the more it costs, at this faster-than-linear rate where doubling the input roughly quadruples the cost. Right.
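As a back-of-the-envelope illustration of that quadratic growth, the sketch below counts token-to-token comparisons for full self-attention against the number of steps a one-pass recurrent model takes. The function names are hypothetical and the counts ignore constants, hidden sizes, and hardware effects; it only shows the shape of the curves.

```python
def attention_pair_count(n_tokens):
    """Full self-attention compares every token with every token."""
    return n_tokens * n_tokens


def recurrent_step_count(n_tokens):
    """A recurrent model does one state update per token."""
    return n_tokens


for n in (1_000, 2_000, 4_000):
    print(n, attention_pair_count(n), recurrent_step_count(n))
```

Doubling the input from 1,000 to 2,000 tokens doubles the recurrent step count but quadruples the attention pair count.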

And there's ways to make it linear, where for every additional input, you pay the same price instead of it having this square power effect. This is another take on that, essentially, where we've seen state space models, Mamba, we've seen xLSTM, now we have minLSTM and minGRU. So it's kind of been ongoing for a while and we still haven't seen...

any of these models go really big and, yeah, be a game changer. But it certainly seems like this could be part of what allows us to go to these trillion dollar models or whatever. Got it, okay. And next we've got MIO. Mio? I'm not sure which it is. A Foundation Model on Multimodal Tokens. So is that tokens or tokens, which one is it? It's supposed to be tokens. I know, I know, sometimes I say things wrong, but...

Multimodal, we all know that's been the big trend of this year. Things like GPT-4o, where you can take in multiple modalities, images, text, audio, and output them.

And multimodal tokens, what that means is that there's different ways to do this. You can train your model to have different encoders to sort of separately process images and texts and combine them in the middle. Or you can do this training where you just interleave. You have image tokens, you have text tokens, you have audio tokens, and all of that is just part of your input. And you can mix and match in whatever way you want, which allows your model to be a lot more flexible.
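The interleaving approach can be sketched roughly as follows. This assumes each modality already has its own tokenizer producing integer IDs, and that the model sees a single shared vocabulary where each modality gets its own ID range. The codebook sizes and helper names here are invented for illustration and are not taken from the MIO paper.

```python
# Hypothetical per-modality codebook sizes, chosen only for illustration.
VOCAB_SIZES = {"text": 1000, "image": 512, "audio": 256}


def build_offsets(vocab_sizes):
    """Give each modality its own contiguous ID range in one shared vocabulary."""
    offsets = {}
    start = 0
    for modality, size in vocab_sizes.items():
        offsets[modality] = start
        start += size
    return offsets


def interleave(segments, vocab_sizes):
    """Flatten (modality, local_ids) segments into one token sequence, in order."""
    offsets = build_offsets(vocab_sizes)
    sequence = []
    for modality, local_ids in segments:
        for local_id in local_ids:
            if not 0 <= local_id < vocab_sizes[modality]:
                raise ValueError(f"{local_id} is out of range for {modality}")
            sequence.append(offsets[modality] + local_id)
    return sequence


def modality_of(global_id, vocab_sizes):
    """Recover which modality a shared-vocabulary ID belongs to."""
    for modality, start in build_offsets(vocab_sizes).items():
        if start <= global_id < start + vocab_sizes[modality]:
            return modality
    raise ValueError(f"{global_id} is outside the shared vocabulary")


# Text, then an image, then more text, then audio, all in one input sequence.
segments = [("text", [5, 42]), ("image", [0, 511]), ("text", [7]), ("audio", [3])]
sequence = interleave(segments, VOCAB_SIZES)
print(sequence)
print([modality_of(token, VOCAB_SIZES) for token in sequence])
```

Because everything ends up in one sequence, the same next-token training setup can in principle cover any mix and order of modalities, which is the flexibility described above.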

So that is the focus here. They are releasing, or saying they will release, an open source one of these multimodal large language models that will be able to take in a variety of input modalities and then also output a variety of modalities, specifically here

four modalities. I think we don't have a very strong open source multimodal model. We've seen some sort of efforts on that front, but nothing like Llama. And one of the exciting things about Llama 3.2, as we covered last week, was them adding vision as an input.

So this is going beyond that. This is going into text, images, video, and would be pretty exciting. Yeah, I mean, the Llama glasses thing, I keep coming back to it because...

I just think all this stuff is so friction-based right now to try to get access to any of it. But the minute that it becomes something that you're wearing all the time or you're putting in your ears, one of those two, like, it feels like it opens the door to so many more use cases rather than having to, like, I don't know, for the Meta stuff, even with the multimodal, I plan to spend some time. But to get access to it, you have to go to, like, Meta's chat, which...

which is like an annoying thing to get to. Like, I just think the multimodal background on these models is going to get better and better. Then suddenly we're going to have a pair of glasses where it's like, oh, this is what this is. Like, it's just going to get better in the next two years, three years. Then we're going to have a pair of glasses and it's just going to be like kind of a mind opening experience.

And just one more story. We've got something from Apple, which is always fun to see. They are releasing Depth Pro, an AI model that rewrites the rules of 3D vision, according to this article title from VentureBeat. So this model, Depth Pro, can generate detailed 3D depth maps from single 2D images, monocular depth estimation if you want to get technical.

And this is obviously kind of a leap in the degree of accuracy. They have very high resolution depth maps, and it's fast, in just 0.3 seconds. So you must imagine this came out of their work on their Vision headset. This is essential for things like AR and VR, being able to estimate depth, which is how far stuff is around you.

And they say that this works kind of in various settings. So you don't need to train in various environments, retrain the model. It'll just kind of work.

So it's a pretty challenging task, actually, and has been one of the kind of important tasks in computer vision for decades, being able to estimate depth. It used to be you would need two cameras; you'd do stereo vision, as we do. But nowadays, because of machine learning, you can get away with using a single camera and being able to estimate depth pretty well.
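For context on the two-camera approach, classical stereo recovers depth by triangulation: a point that shifts by a disparity of d pixels between two rectified cameras, with focal length f in pixels and baseline B in meters, sits at depth Z = f * B / d. The sketch below shows only that textbook relation, with made-up camera numbers; it is not how Depth Pro or other single-camera learned models work, since those have no second view and must predict depth from image content.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Textbook rectified-stereo triangulation: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 1000 px focal length, cameras 10 cm apart.
for disparity in (100.0, 50.0, 10.0):
    depth_m = depth_from_disparity(1000.0, 0.10, disparity)
    print(f"disparity {disparity:5.1f} px -> depth {depth_m:.2f} m")
```

Smaller disparities mean farther objects, which is also why stereo depth gets noisy at long range: a one-pixel matching error matters more when the disparity is only a few pixels.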

So, yeah, it's cool to see Apple kind of improving potentially their machine learning, their ability to do cutting edge stuff with things required for their products.

It also makes me think about the idea of going back to the self-driving cars. Like so much of that is based on vision, right, too, as well. Or LIDAR, or trying to find out ways to kind of parse out depth of where things are. I just feel like that technology is going to improve like so fast now that we're doing all this stuff. For here, it's also going to go there. And the modeling of the real world seems like it's really the next big step now.

And this kind of feels like a way into that. There's a lot of implications to go into of like for robots. That's another big trend of trying to get smart robots. They need this sort of stuff. And this is being open source. So the code and pre-trained model are being made available on GitHub. By the way, I would love to know, I'm sure it's got to be in somebody's brain, if not on a piece of paper somewhere at Apple, their humanoid robot. Because to me...

As much as the Vision Pro and the glasses are going to be a thing that Apple does, clearly. And I think that the glasses are probably... Now they've seen Meta's product. They're probably pushing forward. And there's a real thought that maybe Meta released these things so they can get ahead of Apple before they... Because Meta's saying it's still three years away, they're saying. Or maybe that much. But imagine Apple's humanoid robot project, which will just be sitting in a research room under six different locks for the next five years. But...

They have a ton of data that could come out and they always wait for other ones to come out first. So I know the Tesla event is coming, I think on Thursday of this week, right? On the 10th. It would be really interesting to see how this stuff also translates into an Apple humanoid robot in some form, which is a big moneymaker for them and could be a next big product.

I could definitely see it. If you do buy a home robot to do chores for you, I could see Apple branding being a big differentiator. Now, it'll be interesting to see if they are going to commit to that given the kind of disaster they had with the self-driving car project. Yeah, who knows, right? I mean, the thing is, Apple can commit billions of dollars to something and then just bury it as a loss, which is pretty crazy in some form. But either way, cool to see them doing

training this and releasing it. Onto policy and safety. And we begin with another sort of big story, the end of the SB 1047 saga. So we've covered the progress of this bill multiple times.

It has passed the legislature. It has been kind of waiting for the California governor to either veto it or approve it. And he has vetoed the bill. So,

SB 1047, the regulation bill that would require safety testing of large AI systems before their release. It had some other ways of regulating large companies, giving the state attorney general the right to sue companies. Governor Newsom has vetoed it and

has argued that it focused too much on regulating the largest AI systems, you know, model size, as opposed to the use, uh,

of it, like the outcome of using AI, which is one of the big debate points. Like, should you regulate according to the size of a model and require things at development time? Or should you regulate more at deployment time, what happens? So, you know, it obviously sparked a lot of conversations, sparked some thinking about, you know, this was a bit of a test. This was the first big push for AI regulation within the United States

for things like frontier models, and it is vetoed. Where did Jeremy land on this? I was so curious because I missed it if he talked about it. Was he for this bill? I think the consensus, my impression, among safety people is that this was a good step, that this was useful, especially kind of early on. I think

People tend to be fans of the idea that at certain thresholds of size, you should have some requirements for your models for safety testing.