Hello, and welcome to the Last Week in AI podcast, where you can hear us chat about what's going on with AI. As usual, in this episode we will summarize and discuss some of last week's most interesting AI news, and you can go to the episode description to see all the timestamps and links to those articles. I'm one of your regular hosts, Andrey Kurenkov. I studied AI in grad school, and I now work at a generative AI startup, which is, let's say, about to do something exciting, hopefully.

Yeah, I'm actually excited about it, because we've been talking offline about this announcement that's coming. And I feel like I probably join the audience in being very curious about what you're up to day to day. So we'll see something soon, I hope. I'm really excited for that.

Yeah, yeah, yeah. I guess I haven't disclosed too much. I can say I'm working on AI for making little games, with the idea of having a platform where people can make, publish, and play each other's games online.

And yeah, tomorrow, April 8th, because we're recording this a little late. So Tuesday is a big launch of the latest iteration of our technology. Yeah, exactly. So by the time this episode is out, more than likely we'll have already done a big launch. Anyone listening to this can go to astrok.com to try it out. I'm sure I'll try and plug it elsewhere as well. So you'll be sure to hear about it. Yeah.

Yeah, so on that note, we're basically going to have to blitz this episode because Andrey has to get to work, man. He's got to actually get out there and stop being such a lazy schmuck. So yeah, let's get going.

Starting right away in tools and apps, the first story is a pretty big one. It's Llama 4. Meta has released the latest iteration of their open source series of large language models, and large multimodal models as well.

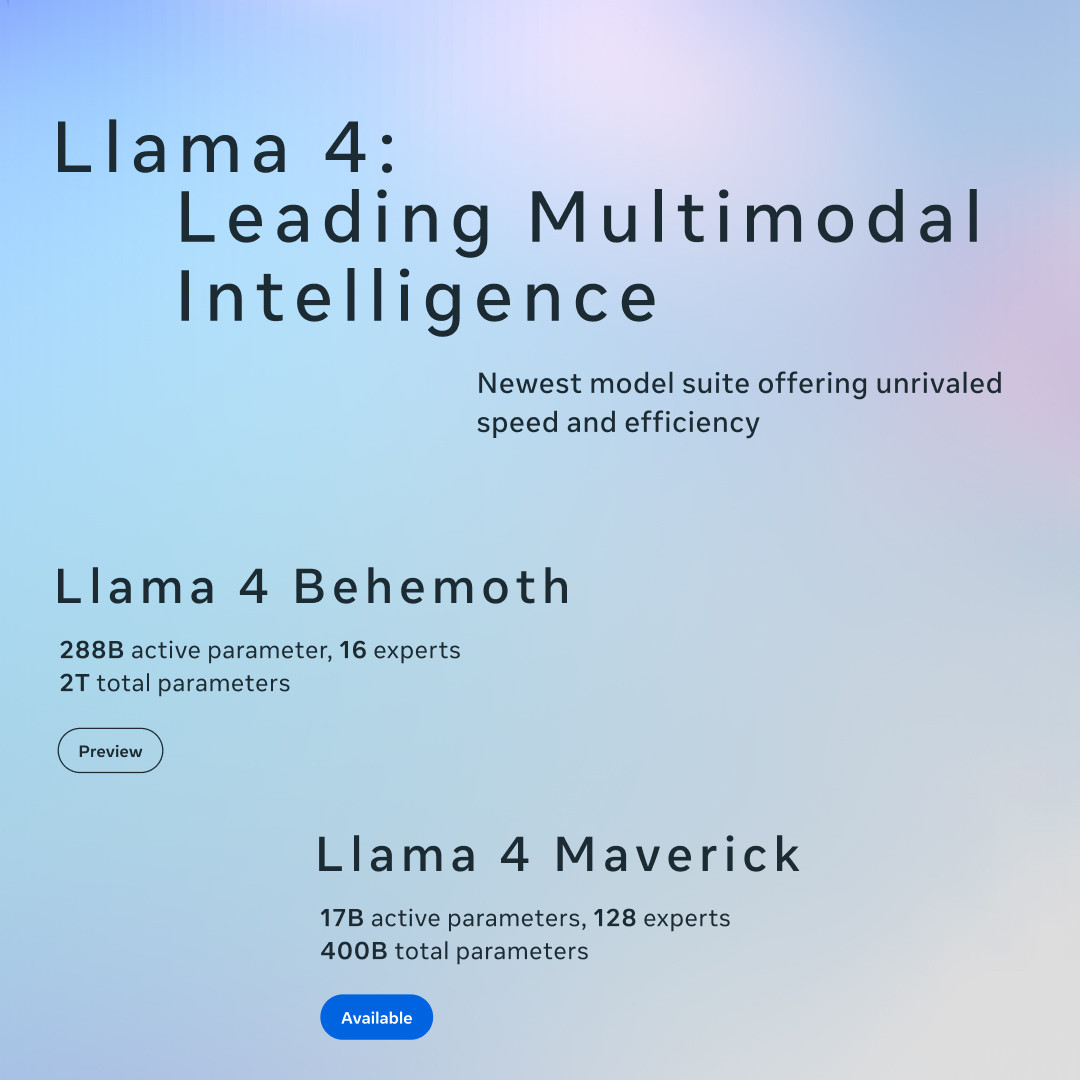

These ones come in four varieties and different sizes. Some of them are called Llama 4 Scout, Llama 4 Maverick, and Llama 4 Behemoth.

They are also rolling it out across all the various ways to interact with their chatbots on WhatsApp, Instagram, Facebook, and wherever else they let you talk to AI. And these are quite big. Just to give an idea, Maverick has 400 billion total parameters, but only 17 billion active parameters, so they are pushing it as friendlier to lower-end device configurations. On the high end, Behemoth, which is not released and which they say is still being trained, has nearly 2 trillion total parameters and 288 billion active parameters.

That is close to the speculation around GPT-4 at the time: that it was a mixture of experts with nearly 2 trillion total parameters and over 100 billion, maybe 200 billion, active parameters. We don't know, but this reminds me of those GPT-4 speculations.
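To make the total-versus-active distinction concrete, here is a small sketch that computes what share of each model's weights is used per token. The parameter counts are the ones quoted in the episode (Behemoth's are approximate); the dictionary layout and function name are just illustrative choices.

```python
# Parameter counts as quoted in the episode; Behemoth's are approximate
# ("nearly 2 trillion" total).
MODELS = {
    "Llama 4 Maverick": {"total": 400e9, "active": 17e9},
    "Llama 4 Behemoth": {"total": 2e12, "active": 288e9},
}


def active_fraction(total: float, active: float) -> float:
    """Share of the weights that take part in processing a single token."""
    if total <= 0:
        raise ValueError("total parameter count must be positive")
    return active / total


for name, params in MODELS.items():
    share = active_fraction(params["total"], params["active"])
    print(f"{name}: {share:.1%} of weights active per token")
```

In a mixture-of-experts model the compute per token tracks the active count, while the memory needed to hold the model tracks the total count, which is why both numbers get quoted.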

Yeah, this release, by the way, is pretty underwhelming to a lot of people. So there's this interesting debate happening right now over what exactly is fucked with the Llama 4 release, right? So they're large models.

I'll just talk about the positives first. From an engineering standpoint, everything that's revealed in the roughly 12-page read (I don't know whether to call it a blog post or a technical report or whatever) seems kind of interesting. It's not a beefy 50-pager of the kind that DeepSeek produces, but it gives us some good data.

By the way, when it comes to the general architecture choices being made here, there's a lot of inspiration from DeepSeek, man, like a lot, a lot. Just to give you an idea, they trained at FP8 precision, again like DeepSeek v3, though DeepSeek v3 used some fancier mixed precision stuff too. We talked about that in the DeepSeek episode. Theoretical performance of an H100 GPU is around 1,000 teraflops for FP8, and they were able to hit 390 teraflops in practice. So they were hitting utilization of around 39%, 40%, which is on the high end for a fleet of GPUs this big. This is 32,000 H100 GPUs they used for this. This is no joke. Just getting your GPUs to hum that consistently is a very big deal. And so from an engineering standpoint, that's a pretty good sign.
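The utilization figure is just achieved throughput divided by theoretical peak. A minimal sketch of that arithmetic follows, using the numbers as quoted in the episode; the peak value in particular is the speaker's round figure, and vendor datasheet numbers may differ.

```python
def utilization(achieved_tflops: float, peak_tflops: float) -> float:
    """Fraction of theoretical peak throughput actually sustained."""
    if peak_tflops <= 0:
        raise ValueError("peak throughput must be positive")
    return achieved_tflops / peak_tflops


# Figures as quoted in the episode (the peak is the speaker's round number).
PEAK_FP8_TFLOPS = 1000.0
ACHIEVED_TFLOPS = 390.0
NUM_GPUS = 32_000

mfu = utilization(ACHIEVED_TFLOPS, PEAK_FP8_TFLOPS)

# Aggregate throughput in petaflops, assuming every GPU sustains the same rate.
fleet_pflops = ACHIEVED_TFLOPS * NUM_GPUS / 1000

print(f"utilization: {mfu:.0%}")
print(f"fleet throughput: {fleet_pflops:,.0f} PFLOPS")
```

Because the result scales inversely with the peak you assume, the same 390 teraflops would look like a lower utilization against a higher quoted peak.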

There are a couple of things they did here that are a little bit distinct. One piece is that this is a natively multimodal model. So, yes, it draws a lot of inspiration from DeepSeek, but at the same time it very much follows that Meta philosophy of, you know, we want good grounding, good multimodality. They use this technique called early fusion, where essentially text and vision tokens are combined from the very start of the model architecture, and they're all used to train the full backbone of the model. That means the model learns a joint representation of both of those modalities from the very beginning. That's in contrast to late fusion, where you would process text and images and other data in separate pathways and just merge them near the end of the model, more of a janky, hacked-together Frankenstein monster. This is not that, right? This is more of a monolithic thing.

Anyway, there's a whole bunch of stuff in here. It turns out that Scout and Behemoth seem to be in the same model line. They're making some of the same design choices in terms of their architecture. Maverick seems like a DeepSeek clone. It seems like an attempt, and some people are speculating this was a last-minute decision, to try to replicate what DeepSeek did. Whereas if you look at Scout and you look at Behemoth, those are much more, like you said, Andrey, as if somebody was trying to do GPT-4 meets a Mixtral type of model. And it's very unclear why this happened.
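The early-fusion versus late-fusion contrast from earlier in this segment can be illustrated with a toy sketch. Nothing here reflects Meta's actual architecture: `mix` is a hypothetical stand-in for a transformer layer (every token simply sees the sequence mean), and the token vectors are made up. The point is only that with early fusion the text representations depend on the image from the first layer, while with late fusion they do not.

```python
from typing import List

Vector = List[float]


def mix(tokens: List[Vector]) -> List[Vector]:
    """Toy stand-in for a backbone layer: add the sequence mean to each token."""
    dim = len(tokens[0])
    mean = [sum(t[i] for t in tokens) / len(tokens) for i in range(dim)]
    return [[t[i] + mean[i] for i in range(dim)] for t in tokens]


def early_fusion(text: List[Vector], image: List[Vector]) -> List[Vector]:
    # Text and image tokens form one sequence before the backbone runs.
    return mix(text + image)


def late_fusion(text: List[Vector], image: List[Vector]) -> List[Vector]:
    # Each modality goes through its own pathway; outputs are merged at the end.
    return mix(text) + mix(image)


text = [[1.0, 0.0], [0.0, 1.0]]
image_a = [[2.0, 2.0]]
image_b = [[-2.0, -2.0]]
n_text = len(text)

# Do the text-token outputs change when only the image changes?
early_changes = early_fusion(text, image_a)[:n_text] != early_fusion(text, image_b)[:n_text]
late_changes = late_fusion(text, image_a)[:n_text] != late_fusion(text, image_b)[:n_text]

print("early fusion, text outputs depend on image:", early_changes)
print("late fusion, text outputs depend on image:", late_changes)
```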

But one thing we do know is the performance seems to be shit, or at the very least very mixed, when people actually run their own benchmarks on it. There's all this stuff about, just for example, LMSYS: they've got a model that seems to be crushing it, with an amazing Elo score, but if we read very closely in the paper, the model they used for that is not any of the models they released. It's a custom fine-tune for the LMSYS arena leaderboard. And that is a big problem, right? That's one of the things people are really ripping on Meta about. Like, look, you're showing us eval or benchmark results for one model, but you're releasing a different one. And this really seems like...

Wow.

Last thing I'll say is one explanation people are throwing around for the bad performance of these models is just the hosting. The systems they're being hosted on are just not optimized properly. Maybe they're quantizing the model a little too much or poorly. Maybe they're using bad inference parameters like temperature, like, you know, top P or whatever, or bad system prompts. Anything like that is possible, including more nuanced kind of hardware considerations. But bottom line is,

This may be the flub of the year in terms of big flashy launches that should have been a big deal. I think we'll be picking up the pieces for a couple of weeks to really figure out how we actually feel about this, because right now there's a lot of noise and I personally am not resolved yet on how impressive any of these things really are. But those are my top lines anyway. Yeah, I think that's a good overview of the sort of discussions and

feedback and reactions people have been seeing. There's also been speculation that Meta, or at least its leadership, pushed towards gaming the quantitative benchmarks, things like MMLU, GPQA, LiveCodeBench, the usual sort of numbers you see. Of course, they say it's better than Gemini 2.0 Flash, better than DeepSeek v3.1, better than GPT-4o. But as you said, when people use these models anecdotally, personally, or on their own held-out benchmarks, which are not the publicly available benchmarks where you can cheat by training on them, the results look different.

You can even cheat accidentally, or sort of cheat by not intentionally trying not to cheat, right? That is one of the important things you do these days: you need to make sure your model isn't trained on the test data when you're scraping the internet. If you're not doing that, you might be cheating without knowing it, or at least pretending not to know it. So yes, the general reaction is that the models seem not good.

Worth noting also, as you said, there is this difference between Behemoth, Maverick, and Scout. Behemoth and Scout have 16 experts, so pretty big experts that are doing most of the work. Maverick is different. It has 128 experts. So it's a bigger model, but the number of active parameters is low. I think there are various reasons you could speculate about. They want the models generally to be runnable on less hardware, and Behemoth would be the exception to that. As you said, they also need to keep costs down. I have no idea how Meta is thinking about the business plan here of supplying free chatting with LLMs, which, relative to anything else, is very expensive. And they're still doing it for free all over their product line.

So various kind of speculations you can have here. But as you said, seemingly the situation is they launched possibly kind of in a hurry because something else is coming because these businesses typically know each other's releases somehow and perhaps they should have waited a little longer.
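On the expert counts mentioned above (16 versus 128), a rough sketch of mixture-of-experts routing shows why adding experts grows total parameters without growing the work done per token. This is a generic top-k router, not Meta's implementation; `top_k=1`, the random logits, and the function names are all assumptions for illustration.

```python
import math
import random
from typing import List, Tuple


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route(router_logits: List[float], top_k: int) -> List[Tuple[int, float]]:
    """Pick the top_k experts for one token and renormalise their gate weights."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


random.seed(0)
for num_experts in (16, 128):
    # Hypothetical router scores for a single token.
    logits = [random.gauss(0.0, 1.0) for _ in range(num_experts)]
    picks = route(logits, top_k=1)
    print(f"{num_experts} experts available, {len(picks)} expert(s) run for this token")
```

With the same `top_k`, the 128-expert configuration stores eight times as many expert weights as the 16-expert one but still runs only the selected experts for each token.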

Yeah, a lot of questions as well around just the size of these models and what it means to be open source, right? We've talked about this in the context of other models, including DeepSeek v3 and R1 and all that. At a certain point, your model is so big that you need expensive hardware just to run it in the first place. And I think this is a really good example of that. Scout is meant to be their small model, right? It sounds like Flash. It sounds like one of those 2.7 billion parameter models or something. It is not.

It's a 17 billion active parameter model, as you said. Their big flex here is that it fits on a single NVIDIA H100 GPU. That's actually pretty swanky hardware. That's, you know, tens of thousands of dollars of hardware, 80 gigs of HBM3 memory, basically. One thing this stuff does have going for it, by the way, is an insanely large context window: 10 million tokens.

That is wild. The problem is that the only evals they show at that context window length are needle-in-a-haystack evals, which, as we've covered before, are pretty shallow. They don't really tell you much about how the model can use the information it recovers. They only tell you that it can pick out a fact that's buried somewhere in that context window. It's not bad, but it's not sufficient, right? So that's Llama 4 Scout.

Maverick, they say, fits on one H100 GPU host. Now, the word host is doing a lot of heavy lifting there. Really what that means is one H100 server. So presumably they mean the H100 DGX. In fact, I think that is what they said in the write-up. That would be eight H100s, so hundreds of thousands of dollars worth of hardware. Hey, it fits on just one of these servers. That's a lot of hardware.
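A back-of-the-envelope check on the "fits on one host" claim, as a sketch: it counts weight memory only (no KV cache, activations, or runtime overhead), uses the 80 GB per GPU and eight GPUs per server mentioned above, and takes Maverick's 400 billion total parameters from earlier in the episode. The precisions listed are generic options, not a statement of what Meta actually serves.

```python
H100_MEMORY_GB = 80   # per GPU, as quoted in the episode
GPUS_PER_HOST = 8     # the eight-GPU server described above

# Bytes needed to store one parameter at a few common precisions.
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits(num_params: float, precision: str, num_gpus: int) -> bool:
    """True if the weights alone fit in the combined GPU memory."""
    return weight_memory_gb(num_params, precision) <= num_gpus * H100_MEMORY_GB


MAVERICK_TOTAL_PARAMS = 400e9  # total (not active) parameters, per the episode

for precision in BYTES_PER_PARAM:
    needed = weight_memory_gb(MAVERICK_TOTAL_PARAMS, precision)
    ok = fits(MAVERICK_TOTAL_PARAMS, precision, GPUS_PER_HOST)
    print(f"{precision}: {needed:.0f} GB of weights, fits on one host: {ok}")
```

Note that it is the total parameter count, not the 17 billion active parameters, that determines whether the model fits in memory.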

Bottom line, I think: incidentally, Scout, I believe, is a distillation of Llama 4 Behemoth, which is still in training. So we don't know what Llama 4 Behemoth is actually going to look like, and we're all kind of holding our breath on that. But for now, unless Meta has really screwed the pooch on distillation, meaning they have an amazing Behemoth model and the distillation process just didn't work, it seems plausible that the Behemoth model itself may be underperforming as well. Again, all this is still up in the air. As with so many things here, it does seem like a rushed release. And I think the dust is going to be settling for a few weeks to come.

Still worth highlighting: they are sticking to their general approach of open sourcing this stuff. You can request access to Llama 4 Maverick and Llama 4 Scout to get the actual weights, as you were able to with previous Llamas.

As was previously the case, they are licensed under these bespoke things, here the Llama 4 Community License Agreement, where you are saying you will not be doing various things that Meta doesn't want you to do. Llama has, I think, been a big part of a lot of research and development on the open source side. So that at least is still laudable.

And on to the next story, we are moving to Amazon, which hadn't released too many models, but they seemingly are starting. And their first act here is Nova Act, which is an AI agent that can control a web browser. This is coming from Amazon's AGI lab, which hasn't really put out many papers, many products so far, but this seems to be a pretty big one.

And it is comparable to something like OpenAI's, I forget what it's called, but their web use agent that can... Oh, operator, yeah. Operator, exactly, where you can tell it, you know, go to this website, scrape all the names of the links and summarize it for me, stuff like that.

So they have this general purpose AI agent. They are also releasing the Nova Act SDK, which would enable developers to create agent prototypes based on this. They say this is a research preview. So it's still a bit early, presumably not fully baked.

But yeah, it's an interesting play, I think. We still don't have too many models or offerings of this particular variant. We have Anthropic's computer use. We have OpenAI's Operator, and I don't recall if they have an SDK for that. So this could be a pretty significant entry in that space.

Yeah, and this is the first product to come out of the Amazon AGI lab, right? So this is kind of a big unveiling. We don't have a ton of information, but there are some notes on benchmarks. They are claiming that it outperforms OpenAI's and Anthropic's best agents on at least the ScreenSpot Web Text benchmark. That's a measure of how well an agent interacts with text on a screen. Apparently, on this benchmark, Nova Act scored 94%, in contrast to OpenAI's agent, which scored 88%, and Anthropic's Claude 3.7 Sonnet at 90%. So it's seemingly significant on that benchmark, but that isn't enough data to generally assess the capabilities of the agent. In particular, WebVoyager is a more common evaluation, and the performance of the Nova Act agent isn't being reported on that. So that kind of leads you to ask some of these questions, but we'll see. They definitely have great distribution through Alexa, and maybe that'll allow them to iteratively improve this pretty fast. They're also improving their hardware stack thanks to, among other things, the Anthropic partnership. So even if it doesn't come out great out of the gate, they're at least sitting on a promising hyperscaler stack. So this might improve fairly quickly.

Right. And it could also be part of their plans for Alexa Plus, the new subscription service. They are launching Alexa as a website in addition to their hardware. So presumably they might be thinking of making this part of their product and will keep pushing.

On to a few more stories. Next up, another giant company planning a giant model release. This one is Alibaba, and reportedly they are preparing to release their next flagship model, Qwen 3, soon. The article here says as soon as April. Apparently, Alibaba is rushing to respond to DeepSeek and a lot of the other hot activity going on in China. We've talked about Qwen quite a bit over recent months: Qwen 2.5 and various smaller releases, I guess you could say. And Qwen 3 presumably is meant to be the best and beat everyone, right?

The one thing I'll say is I've seen speculation that this may have been part of the driver for the rapid release of Llama 4.

So all we really know. Could be. Next, we have a smaller company, a startup, Runway, and it has released their latest video generating AI model. So this is Gen 4, and it is meant to be kind of a customer facing usable video generation model.

And it looks pretty impressive from just having looked at it. It is catching up to Sora, catching up to the more top-of-the-line models that are capable of consistent video and capable of being prompted by both text and image. They launched this with a little mini short film, where you have a cute forest and then some characters interacting, showcasing that consistency across multiple outputs. And this is at a time when they are raising a new funding round valuing them at $4 billion, with the goal of getting $300 million in revenue this year. So Runway is a major player in the space of AI for video.

And a similar story next. This one is about Adobe, and they are launching an AI video extender in Premiere Pro. Premiere Pro is their flagship video editing tool, and we've seen them integrate a lot of AI into Photoshop, for instance. This is the first major AI tool to get into Premiere Pro and video editing. The feature is Generative Extend. It will allow you to extend videos by up to two seconds with Adobe Firefly. We covered it, I think, when they previewed this, but now it's rolling out to the actual product.

Yeah, this kind of rollout, at least to me, makes a lot of sense as a first use case for these sorts of video models. It reminds me of Copilot, which was powered by Codex back in the day. The first use case was text or code autocomplete. We've seen that autocomplete functionality is sort of native for a lot of these transformer models. This one's a little different, but it's still very natural, very grounded in real data, and you're just extending it a little bit. Especially for video, where you need to capture a lot of the physics, that's something I'd expect to be a nice way to iron out a lot of the kinks in these models.

Right. And they do have some other small things launching alongside that. They have AI powered search for clips where you can search by the content of a clip. I would suspect it's actually a bigger deal for a lot of developers. That's true. Yeah, yeah.

Because if you have 100 clips, now you can search by content as opposed to file name and whatever. And they also have automatic translation. So quite a few significant features coming from Adobe.

And just one more story. OpenAI is apparently preparing to add a reasoning slider and also improved memory for ChatGPT. We've seen people starting to observe this idea of a reasoning slider in testing. It allows you to specify that the model should think a little harder, or you can leave it at automatic to let the model do its own thing, mirroring to some extent what Anthropic has also been moving toward.

And on to applications and business. The first story is about NVIDIA H20 chips and there being $16 billion worth of orders from ByteDance, Alibaba, and Tencent recently. This covers a set of events, I suppose, or a time period in early 2025, in which these major AI players from China have all ordered a massive amount of the H20 chip, one that's not restricted. And I believe this chip, or some variant of it, was what DeepSeek was built upon and what showcased the ability to train DeepSeek v3.

So this is a big deal, right? And NVIDIA presumably is trying hard not to be too limited to actually be able to do this.

Yeah, DeepSeek was trained on the H800, which is another China variant of the H100. So you're right, they all fall under the Hopper generation. But specifically for China, this is a play that we've seen from NVIDIA a lot. It's them responding to the looming threat of new potential export control restrictions. You can imagine if you're NVIDIA and someone tells you, hey, in a couple of months we're going to crack down on your ability to sell this particular GPU to China. You're looking at that and you go, OK, well, I'm going to quickly try to sell as many of these to China as I can, while I can still make that money. And then once the export control ban comes in, that's it, right? So you're actually going to tend to prioritize Chinese customers over American customers. This has happened in the past. This will continue to happen in the future as long as we include loopholes in our export control regimes.

And so what you're literally seeing right now is NVIDIA making the call: do we have enough time to proceed with making the chips to meet the $16 billion set of orders from ByteDance, Alibaba, and Tencent? Are we going to have the GPUs ready and sold before the export control bans come into effect? If we don't, we're just sitting on this hardware. Now, keep in mind that H20s are strictly worse than, say, the H100s or H200s that NVIDIA could be making instead and selling to the domestic market. Depending on the node and the interactions here, they could potentially be making Blackwells too.

So there's this question of like, if they choose to go with making H20s to try to meet the Chinese demand, which is about to disappear, then they may end up being forced to sit on these kind of relatively crappy H20 chips that don't really have a market domestically in the US. Right. So that's a big risk. And they're calculating that right now. They have limited TSMC allocation to spare. So it's not like they can meet both demands.

Yeah.

Moving along, we have kind of a funny story, and I suppose another one of the big business stories of the week. It is that Elon Musk's X, previously Twitter, has been sold to Elon Musk's xAI in a $33 billion all-stock deal. So you heard it right. The AI company that is developing Grok has bought the social media company Twitter slash X for $33 billion, tens of billions of dollars. Grok has been hosted as part of X basically since its inception. You can pay for a subscription tier to use Grok on X. Grok.com, I believe, also exists, but I guess Grok has primarily lived on X. And the justification here is, you know, of course, that Twitter will provide a lot of data to train Grok and there are deep synergies that can be leveraged.

Yeah, it's also kind of interesting to note that when Elon actually bought X, or Twitter as it was back then, he paid $42 billion for it, right? Now this is an all-stock deal at $33 billion, so the company's value has actually nominally decreased. There are all kinds of caveats there. You have a sort of internal, let's say, purchase within the ecosystem, right?

I'm not clear on what the legalities of that are, or whether fair market value is an issue in the same way that Elon raised it with respect to OpenAI's attempt to sell its for-profit arm to the nonprofit arm. That was one argument: hey, you're not selling this at fair market value. I suspect this is probably more kosher because it has fewer control weirdness issues, but I'm super not a lawyer. Just interesting numbers.

So yeah, anyway, it's an all-stock transaction, and the ultimate combination of these two would value xAI at $80 billion. So that's pretty, pretty big. And also, interestingly, pretty close to Anthropic's valuation, right? Which is amazing, given that X was, 20 minutes ago, non-existent, right? This is a classic Elon play.

xAI. Yeah, it's confusing.

Yeah, you're right. xAI came out of nowhere, right? Like what, 18 months, two years ago? Pretty wild, pretty impressive, and a classic Elon play. So there you have it. Not much information in the article, but we just get these top lines, and the numbers are big.

The numbers are big. And as you might expect, there is speculation. A lot of people are making memes about the, you could say, self-dealing in this case, right? These are two Elon Musk companies, and one of them is buying the other.

And it could have to do with some financial aspects of the purchase of Twitter, such as the loans that Elon Musk took out against his Tesla stock, which is now falling a little bit. So you can come up with various nitpicky ideas as to why this is happening right now and why at this precise pricing. But in any case, I suppose it's not entirely surprising given how this has been going.

On to the lightning round. We have the story that SoftBank is now OpenAI's largest investor and has pushed the market cap of OpenAI to $300 billion, but at the cost of a ton of debt. SoftBank is a big investor out of Japan that has invested very large sums into various tech startups. And seemingly they've taken on debt to do this $40 billion investment round for OpenAI, with $10 billion borrowed from Mizuho Bank. So, wow, that's quite a borrow.

Yeah, it is. It's also consistent with the $100 billion, let alone $500 billion to invest in this deal. And all the sordid details started to come out. And it's like, yeah, well, this is part of it. They're literally borrowing money. So this is an interesting play. Masayoshi-san is taking a big risk here. There's no other way to put it. And there's a kind of budding relationship there with Sam Altman that we've seen before in various forms.

There are a couple of strings attached, as you might imagine, right? If you give $40 billion to an entity, there are going to be strings. The $10 billion deal is expected to be completed in April. The remaining amount is set to come in early 2026. So this is not super near term, but when you're thinking about the superintelligence training runs that OpenAI internally thinks are plausibly going to happen in 2027, this is basically that, right? This is that injection of capital.

OpenAI has to transition to a for-profit by the end of the year in order to get the full $40 billion, right? So more and more pressure mounting on OpenAI to successfully complete that transition, which seems to be bogged down in an awful lot of legal issues now. So this is kind of a challenge. Apparently, SoftBank retains the option to pare back the size of the funding round to $20 billion if OpenAI does not successfully transition.

That is an accelerated deadline, right? OpenAI previously was under a two-year deadline from its last round of funding. And now it's, okay, well, by the end of the year you've got to complete this transition. So that's kind of interesting: more heat on Sam to complete this weird acquisition. Is it the nonprofit, the for-profit? I mean, who can keep track anymore? But basically, yeah, the for-profit acquiring the nonprofit and all that. So we'll see. This is going to be a legal and tech drama the likes of which I don't think many of us have seen.

Truly a unique situation, that can be said about OpenAI.

Who hasn't been there? Yeah.

And next up, we have a story about DeepMind, which is Google's AI arm. There are reports now that it's holding back the release of AI research to give Google an edge. Just last week, I think, or maybe two weeks ago, we were commenting on some of the research coming out of DeepMind as seeming like something Google might not want to share because it is a competitive advantage. And now there are reports from former researchers that DeepMind, for example, is particularly hesitant to release papers that could benefit competitors or negatively impact Gemini, their LLM offering. I've also heard a little bit from people I know that there's a lot of bureaucracy and quite a lot of red tape around publication these days. Apparently, the new publication policies include a six-month embargo on strategic generative AI research papers, and researchers have to justify the merits of publication to multiple staff members.

Yeah, and in effect, we've seen this sort of thing from DeepMind before. I'm trying to remember if it was the Chinchilla paper or if it was Gato or something. Anyway, we've talked about this before on the podcast as well, but there was one case early on where there was a full year's delay between, I think, the end of the training of the model and its announcement. It was one of these early post-GPT-3 models. So in substance this is maybe partly new, and partly a habit that's been developed internally. It makes all the sense in the world, because they're forced to compete in an increasingly hot field of companies like OpenAI, Anthropic, and Chinese companies.

But it's definitely interesting. The claim as well is that there were three former researchers they spoke to who said DeepMind was more reluctant to share papers that could be exploited by competitors or that cast Google's own Gemini models in a negative light compared to others. They talked about an incident where DeepMind stopped the publication of research showing that Gemini is not as capable or is less safe than, for example, GPT-4. But on the flip side, they also said that DeepMind had blocked a paper that revealed vulnerabilities in ChatGPT over political concerns, essentially concerns that the release would seem like a hostile tit for tat with OpenAI. So you get a bit of a glimpse of the kind of inter-company politics, which is absolutely a thing. There's a lot of rivalry between these companies, which is kind of an issue too on the security and the control and alignment side. But anyway, so there you have it.

Maybe not a shock, but certainly a practice that we now have more evidence for coming from Google. And by the way, it's certainly going to be practiced at other companies, too. This is not going to be a Google exclusive thing.

Yeah, I mean, to be fair, OpenAI has basically stopped publishing for the most part. So yeah, I think it's not surprising. But it is notable, because DeepMind for a long time was a fairly independent, more or less pure research organization that was much more academic friendly, and that is definitely changing.

Next up, we have a story about SMIC, China's leading semiconductor manufacturer trying to catch up to TSMC. Apparently, they are at least rumored to be completing their five nanometer chip developments by 2025, but at much higher costs due to using older generation equipment and presumably having very, very poor yields.

Yeah, and check out our hardware episode for more on this. But what they're doing is they're forced to use DUV, deep ultraviolet lithography machines, rather than EUV for the five nanometer node. Normally, five nanometers is where you see the transition from DUV to EUV, or at least that's one kind of node where you could transition.

And anyway, with DUV the resolution is lower, so you've got to do this thing called multi-patterning: take the same chunk of your wafer and scan it through again and again, potentially many times, in order to achieve the same resolution as you would with just one scan with EUV. So what that means is you're spending four times as long on one particular layer of your lithography.

And that makes your output slower. So it means that you're not pumping these things out as fast. And it also reduces yields at the same time, both of which are economically really bad. So yeah, yields are expected to be an absolutely pathetic 33%.

And that translates into a 50% higher price than TSMC for the same node. By the way, I mean, the CCP is going to be subsidizing this to blazes. So the economics are just fundamentally different when you talk about, for example, like lithography and fab for AI chips in China, because it's just like a national security priority. But still, this is kind of interesting. And this will be used, this node, by Huawei to build their Ascend 910C chip. So these are actually going to see the light of day in production.

And just one more story in this section: Google-backed Isomorphic Labs is raising $600 million. So Isomorphic Labs was spun out of DeepMind back in 2021. They are focused on AI to model biological processes and primarily do drug discovery, presumably.

And this is their first external funding round. So they were able to do a lot, I suppose, with support from DeepMind and Google. They have now made major deals with companies like Eli Lilly and Novartis for billions in partnerships and research programs. So Isomorphic Labs is certainly active, it seems.

Yeah. It's sort of a funny headline in a way. Like, I'm not sure how they get to "external investment round." Because you look at the financing and it's like, it's led by Thrive Capital. Okay, cool, cool. With participation from GV, which is called GV the same way KFC is called KFC. KFC used to be called Kentucky Fried Chicken. GV used to be called Google Ventures. Google, oh shit, that's Alphabet, isn't it?

Yes. Yes. Alphabet is also kind of the parent company of Isomorphic Labs, right? So GV is participating, that's Google, and then there's follow-on capital from an existing investor, Alphabet. So at least by entity count, this is two thirds sort of the Google universe. Yeah. External is, let's say, generous, I suppose. Yeah. Yeah. It is being led by Thrive Capital, and they're external, so great. I couldn't see how much is being contributed by whom, but I just sort of thought that was funny. Like Google is so big, or Alphabet is so big, they're kind of everywhere all at once. Anyway, I just wonder what counts as external these days. I don't know anymore.

And moving on to the research and advancements section. First up, we actually have a paper from OpenAI. So I should take back a little bit what I said about them not publishing. They do publish some very good research still. And this paper is called PaperBench: Evaluating AI's Ability to Replicate AI Research.

So this is basically doing what it sounds like. They are evaluating, in a benchmark suite, the ability of AI agents to replicate state-of-the-art, real AI research. Specifically, the agents have to replicate 20 ICML 2024 spotlight and oral papers from scratch. They need to understand the paper, they need to develop the code, and they need to execute the experiments.

So the ultimate result we are seeing is that the best performing agent, Claude 3.5 Sonnet, with some scaffolding, achieves an average replication score of 21%. And that is worse than top machine learning PhDs, who were also recruited to attempt the benchmark. And they are also open sourcing this benchmark to facilitate future research into the AI engineering capabilities of AI agents.

One of the key things behind this benchmark is the strategy they use to decompose the task of replicating a paper into a kind of tree with increasingly granular requirements. So you'll have these leaf nodes that have extremely specific, binary, and relatively measurable results of the replication that you can actually get your judge LLM, or this thing called JudgeEval, to go through and evaluate.

And then what they do, I mean, in a way it's sort of like, not a back propagation thing, but they essentially combine the leaf nodes together into one sort of not-quite-leaf node. And then those next layers of the tree combine and merge up at higher and higher levels of abstraction. And so this allows you to essentially give partial marks for partial replications of these papers.
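To make the tree idea concrete, here is a minimal sketch of how binary leaf judgments could roll up into a partial-credit score. The `RubricNode` class, the node names, the weights, and the weighted-average rule are all illustrative assumptions, not the benchmark's actual code or rubric.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class RubricNode:
    """One requirement in a hypothetical replication rubric tree."""
    name: str
    weight: float = 1.0              # assumed relative importance among siblings
    passed: Optional[bool] = None    # set only on leaves, by the judge
    children: List["RubricNode"] = field(default_factory=list)


def score(node: RubricNode) -> float:
    """Return a score in [0, 1]: leaves are binary, parents average their children."""
    if not node.children:
        return 1.0 if node.passed else 0.0
    total_weight = sum(child.weight for child in node.children)
    return sum(child.weight * score(child) for child in node.children) / total_weight


# A made-up rubric for one paper; every name and judgment here is hypothetical.
paper = RubricNode("replicate paper", children=[
    RubricNode("code development", weight=1.0, children=[
        RubricNode("training loop implemented", passed=True),
        RubricNode("baseline implemented", passed=False),
    ]),
    RubricNode("execution", weight=1.0, children=[
        RubricNode("experiment script runs", passed=True),
    ]),
    RubricNode("results match", weight=2.0, children=[
        RubricNode("headline metric within tolerance", passed=False),
    ]),
])

replication_score = score(paper)
print(f"replication score: {replication_score:.3f}")
```

In this toy case the agent gets credit for writing and running some of the code even though the headline result does not match, which is the partial-marks behavior described above.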

And a submission is considered to have replicated a result when that result is reproduced by running the submission in a fresh setup. So there's a whole kind of reproduction phase before the grading phase begins. And the kinds of tasks that they're evaluating at the leaf nodes are things like code development, execution, or results match, so whether you match a particular result or product of executing code.

Anyway, this is all a way, I think a very interesting way, of breaking down these complex tasks so you can measure them more objectively. I think what's especially interesting here is that we're even here, right? We're talking about, let's make an evaluation that tests an LLM's ability, or an agent's ability, to replicate some of the most exquisite papers that exist. Like ICML, as you said, this is a top conference. These are the spotlight and oral papers that were selected from the 2024 ICML conference. So these are truly, truly exquisite papers.

They span 12 different topics, including deep RL, robustness, probabilistic models. It is pretty wild. And they work with the actual authors of the papers to create these rubrics manually, to capture all the things that would be involved in a successful replication. And then they evaluated whether or not the replication was completed successfully using an LLM-based judge. But they did check to see how good that judge is.

And it turns out when you compare it to a human judge who does the evaluation, they get an F1 score of 0.83, which is pretty damn good. So these are at least reasonable proxies for how a human would score these things. Turns out Claude 3.5 Sonnet (New) with a very simple agentic scaffold gets a score of 21%. So a replication score of over one fifth for Claude 3.5 Sonnet (New). That's pretty wild.
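For listeners who want the F1 number unpacked, here is a small sketch of how judge-versus-human agreement can be scored as F1 over binary pass/fail labels. The label lists are invented for illustration and are not data from the paper.

```python
from typing import Sequence


def f1_score(human: Sequence[bool], judge: Sequence[bool]) -> float:
    """F1 of the judge's 'pass' decisions, treating the human labels as ground truth."""
    true_pos = sum(1 for h, j in zip(human, judge) if h and j)
    false_pos = sum(1 for h, j in zip(human, judge) if not h and j)
    false_neg = sum(1 for h, j in zip(human, judge) if h and not j)
    if true_pos == 0:
        return 0.0
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / (true_pos + false_neg)
    return 2 * precision * recall / (precision + recall)


# Hypothetical pass/fail judgments on six rubric leaves.
human_labels = [True, True, True, False, False, True]
judge_labels = [True, True, False, False, True, True]

agreement = f1_score(human_labels, judge_labels)
print(f"judge F1 against human labels: {agreement:.2f}")
```

F1 is the harmonic mean of precision and recall, so a judge only scores well if it neither over-awards nor under-awards passes relative to the human grader.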

And anyway, they get into subsets that are potentially a little bit more cherry-picked that happen to show o1 doing better and all that stuff. But still, very interesting result, and I think this is where we're going. This tells you we're closing that kind of final feedback loop, heading towards a world where recursive self-improvement starts to look pretty damn plausible. You know, 21% of exquisite cutting edge papers today can be replicated this way, with caveats galore. But those caveats start to melt away with scale and with more time spent optimizing agentic scaffolds and so on. So I think this is just a really interesting sign of the times.

Yeah, exactly. They do have a variant of the setup they call iterative agent, basically letting the model do more work and keeping it from stopping early. They get up to 26% replication with o1-high, so high compute cost. And they give it up to 36 hours in this case, and that gets you 26%.

For reference, that's impressive, because replication is not necessarily straightforward if you're just given the paper to read and don't have the code. And to give you an idea, some of these papers are "All-in-one simulation-based inference," "Sample-specific Masks for Visual Reprogramming-based Prompting," "Test-Time Model Adaptation with Only Forward Passes," things like that, you know, the kind of research you see getting awards at AI conferences.

Next, we have a paper called Crossing the Reward Bridge: Expanding RL with Verifiable Rewards Across Diverse Domains. So reinforcement learning with verifiable rewards is one of the big reasons that DeepSeek and these other models worked well. They were trained with reinforcement learning on math and coding, where you had these exact verifiers, right? You can know for sure whether what it did was good or not. And so this paper is trying to essentially generalize that to diverse domains like medicine, chemistry, psychology, and economics. And as a result, they are saying that you can get much better performance and you can kind of generalize the approach.

Yeah, it's sort of interesting, right? Because there's this classic idea that, okay, you might be able to make a coding AI or a math AI that's really good by using these sorts of verifiable rewards. But how do you hit the soft sciences? How do you make these things more effective at creative writing or things like this? This is an attempt to do that. So they try a couple of different strategies. They try rule-based rewards. So these are relatively simple, kind of yes or no, based on exact matches of, you know, is a keyword contained in the answer?

That's kind of rule-based binary rewards. They also have rule-based soft rewards, where they use this measure of similarity called Jaccard similarity, just to measure roughly whether the content of the output matches the right answer. So they try those. They find that they don't actually scale that well. So you kind of saturate beyond a certain point. I think it was around 40,000 tokens or something. Or sorry, 40,000 examples, where you start to degrade performance in these kind of non-quantitative tasks.

And so what they do is they introduce another strategy, model-based rewards. And really this is what the paper is about fundamentally, or this is what the paper wants to be about. So they use a distilled 7 billion parameter LLM to basically be this model-based verifier that issues model-based rewards. So the way that works is they start by training a base model using reinforcement learning. They have some really large, highly performant LLM that they use as a judge. And they're going to use that judge to give these very nuanced rewards, right? So the judge is a very big LLM, very expensive to run. And it'll determine, okay, did the smaller model that's being trained do well at this task, right? And it's actually going to train the smaller model on a combination of math and code and the kind of softer sciences, like econ, psych, bio, that sort of thing.

And so that's step one. They'll just use reinforcement learning rewards to do that, with the big model as the grader.

After that, they're going to take a fresh base model and they're going to use the base model they just trained using RL as the source of truth, essentially, as the source of text to evaluate. They'll provide correctness judgments from the big teacher model and essentially distill the big teacher model into the smaller model. They'll use about 160,000 distilled training samples from the data that they collected earlier in that training loop.

Bottom line is it's a giant distillation game. It works well. The result is interesting. It's just, I think, good as an example of the kind of thing you're forced to do if you want to go off the beaten path and work not with quantitative data, like math or code, where you can verify correctness. You can actually compile the code and run it, see if it works. Or you can use a calculator or something to find a way to get the mathematical result. In this case, you're forced to use language model judges. You're forced to find ways to do distillations so that things aren't too expensive. Basically, that's the high-level idea.

I don't think it's a breakthrough paper or anything, but it gives us some visibility into the kinds of things people are forced to do as we try to push LLMs, or agents, I should say, reasoning models, out of the math and code zone.

Yeah, it's a very applied paper. No sort of deep theoretical understanding, but it shows how to achieve good results on a given problem. And they do also release a data set and the trained reward model for researchers to build upon. And this is a pretty important problem, right? Because that's how you're going to keep improving reasoning models beyond just math and coding. So cool to see some progress here.
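As a rough illustration of the two rule-based rewards mentioned in this discussion, here is a minimal sketch of a binary keyword-match reward and a Jaccard-similarity soft reward. The function names, the whitespace tokenization, and the example strings are assumptions for illustration, not the paper's implementation.

```python
def binary_reward(response: str, reference: str) -> float:
    """Return 1.0 if the reference answer appears verbatim in the response, else 0.0."""
    return 1.0 if reference.strip().lower() in response.lower() else 0.0


def jaccard_reward(response: str, reference: str) -> float:
    """Soft reward: size of the shared word set divided by size of the combined word set."""
    response_words = set(response.lower().split())
    reference_words = set(reference.lower().split())
    if not response_words and not reference_words:
        return 1.0
    return len(response_words & reference_words) / len(response_words | reference_words)


# Hypothetical examples.
exact = binary_reward("The answer is aspirin.", "aspirin")
soft = jaccard_reward(
    "inflation reduces purchasing power",
    "inflation lowers purchasing power",
)
print(exact, soft)
```

The second example shows why such rewards struggle on free-form answers: "reduces" and "lowers" mean the same thing, yet the word-overlap score penalizes the paraphrase, which is part of the motivation for a model-based judge.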

And speaking of reasoning models, the next paper is Inference-Time Scaling for Complex Tasks: Where We Stand and What Lies Ahead. So this is, as it sounds like, a reflection on the status of inference-time scaling, which, in case you're not aware of the term, means you're trying to get better results from your model just from more compute after training. You're not doing any more training, but you're still performing better. And that has been a hallmark of things like DeepSeek's R1 and o1 and other models.

Here, this paper is evaluating nine foundation models on various tasks, also introducing two new benchmarks for hard problems to assess model performance. And they basically have various analyses and results that showcase that in some cases, basic models that aren't reasoning models are able to do just as well. In other cases, reasoning models do better. In some cases, high token usage, so a lot of compute, does not necessarily correlate with higher accuracy across different models.

So in general, right, we are kind of in an early-ish stage and there's some confusion. There's a lot of mess to get through. And this does a lot of showcasing of where we are.

Yeah. And this is a point that I think often gets lost in the high-level discussion about inference-time compute. I think it's pretty clear for anybody who's steeped in the space. But inference-time compute is not fungible in the same way that training-time compute is. So what I mean by that is, with training-time compute, it's not actually this simple, but very roughly, you know, you're doing text autocomplete and then back propagating off the result of that, right? To first order.

At inference time, things can differ a lot more, right? You kind of have the choice. One way to spend your inference-time compute is to spend it on generating independent parallel generations, right? So you sample a whole bunch of different answers from the same model, usually at a high temperature, and you get a whole bunch of potential responses. And then you have some kind of way of aggregating the result using some sort of operation. You might take the average of those outputs, or some majority vote, or some measure of the best outcome. Right.
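The parallel flavor of inference-time compute described here can be sketched in a few lines. This is a toy illustration only: the sampled answers and verifier scores are made up, and `majority_vote` and `best_of_n` are hypothetical helper names, not functions from the paper.

```python
from collections import Counter
from typing import Callable, Sequence


def majority_vote(answers: Sequence[str]) -> str:
    """Pick the most frequent answer among independent samples."""
    return Counter(answers).most_common(1)[0][0]


def best_of_n(answers: Sequence[str], scorer: Callable[[str], float]) -> str:
    """Pick the answer that a verifier or reward model scores highest."""
    return max(answers, key=scorer)


# Pretend these came from sampling the same model five times at high temperature.
sampled_answers = ["42", "41", "42", "43", "42"]

# Hypothetical verifier scores for each distinct answer.
verifier_scores = {"41": 0.1, "42": 0.9, "43": 0.3}

voted = majority_vote(sampled_answers)
best = best_of_n(sampled_answers, lambda answer: verifier_scores[answer])
print(voted, best)
```

More samples cost more tokens, and how much that helps depends heavily on the aggregation step: majority voting needs no extra model, while best-of-n is only as good as the scorer behind it.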

So you might use those techniques. That's one way to spend your inference-time compute: just generate a whole bunch of potential outputs and then pick from among them. The other is you have one stream of thought, if you will, and you have a critic model that goes in and criticizes the stream of thought as you go, in sequence. So you're imagining one more nuanced chain of thought that you're investing more into, rather than a whole bunch of, in compute terms, relatively cheap parallel generations.

And so these are two fundamentally different approaches. And there are many more, but these are the two core ones that are explored here. And there are a whole bunch of different configurations of each of those approaches. And so that's really what this paper is about: saying, okay, well, if we spend our inference-time compute in different ways, how does that play out?

Anyway, it's actually quite an interesting paper and helps to resolve some of the questions around what's the best way to spend this compute. There's apparently very high variability in token use, even across models with similar accuracies, right? So if you take a given model that tends to get, I don't know, 70% on GPQA or something, what you'll find is it won't consistently use an average of, I don't know, a thousand reasoning tokens to respond, or 10,000, or a hundred thousand. There's a lot of variability. And so what that strongly suggests is that there's a lot of room left for improving token efficiency.

So you've definitely got some highly performant models that are overusing tokens. They're cranking out too many tokens, spending too much inference-time compute, and it could be optimized in other ways. Apparently, that's even a problem with respect to the same model. So the same model can yield highly variable token usage for a given level of performance, which is kind of interesting.

They also highlight that, although it's true that inference-time scaling does work, in other words, as you increase the number of tokens you're using to get your answer, performance does tend to rise, if you see a given model pumping out a whole crap ton of tokens, it can also be an indication that the model is getting stuck. And so this tends to be a kind of black pit of token generation and inference-time compute spend, when the thing's just sort of going in circles. And so there's a whole bunch of interesting findings around that.

They do find that continued scaling with perfect verifiers consistently improves performance. And this is both for reasoning and sort of conventional or base models, which is interesting, right? Because that means if you do have a reliable verifier that can provide you feedback on the task, truly inference-time scaling does work. But as we've just seen, for a lot of tasks you don't necessarily have these robust, always-correct verifiers. And that's a challenge.

And so I think there's a lot of really interesting stuff to dig into here, if you're interested in token efficiencies, where inference-time scaling is going, and all that. And some cool curves, by the way. Last thing I'll say is there are interesting curves that show the distribution of token use for different models. So kind of comparing Claude 3.7 Sonnet to o1 on a math benchmark and seeing, what's the distribution of tokens that's used? And it's sort of interesting to see how that distribution shifts between those different models, like which models tend to use fewer tokens when they get things wrong versus when they get things right, for example. So anyway, keeping time in mind, that's probably all we have time to go into here.

Lots of figures and numbers and interesting observations that you can get from this paper, for sure.

And we have just one more paper to go over. It's titled Overtrained Language Models Are Harder to Fine-Tune. And there you go. That's the conclusion. Overtraining happens when you're pre-training, right? When you do the first basic training pass of autocomplete, it has been observed that there's a theoretical amount of training that is optimal, but you can actually do better by overtraining, going beyond that.

And the general common wisdom is that overtraining is good. What this paper is showing is there is a phenomenon they call catastrophic overtraining: when you do too much pre-training and then you do what's called post-training or instruction tuning, to adapt your model to a specific task or make it behave in a certain way that you don't get from autocomplete, the extra pre-training actually makes it perform worse. So quite an important result here, I think.

Yeah, to quantify it, they look at the instruction-tuned 1 billion parameter model, OLMo-1B. It was pre-trained on 3 trillion tokens. And what they find is that pre-training it on 3 trillion tokens leads to 2% worse performance on a whole bunch of LLM benchmarks after fine-tuning than if you pre-train it on 2.3 trillion tokens. That's really interesting. So this is roughly 30% more pre-training tokens. And then downstream, after you take those two different models and fine-tune them, you get 2% worse performance on the model that was pre-trained with more tokens.

The mechanism behind this is really interesting, or at least will have to be really interesting. It's a little bit ambiguous in the paper itself. But they highlight this idea of progressive sensitivity. The way they measure this is basically, if you take modifications of equal magnitude, models that have undergone pre-training with more tokens exhibit greater forgetting. They're more likely to forget the original capabilities that they had.

This is something we've seen before, right? When you fine-tune a model, it will forget some of its other capabilities that aren't related to the fine-tuning that you've just done. So this is presumably suggesting that the pre-trained model, if you pre-train it on a crap ton of tokens, just becomes, I guess, more fragile. It's maybe more generally capable out the gate. But the moment you start to fine-tune it, that structure is, in a weird way, almost unregularized. It's almost like it's overfit, I want to say, to the pre-training distribution.

And they do say, actually, to that point, that regularization during fine-tuning can delay the onset, albeit at the cost of downstream performance. So to me, this suggests there is a kind of regularization thing going on here where, if you pre-train on fewer tokens, the overall model is less likely to end up in that fragile state. Again, you're not necessarily doing multiple epochs, so you're not passing over the same data. So you're not overfitting to the specific data, but potentially to the distribution of training data. And I think that's maybe the thing that's going on here, though I didn't see this discussed in that depth, from that angle, but I might have just missed it. Either way, very interesting result and huge implications for pre-training, right? Which is a massive, massive source of CapEx spend.

Exactly. Yeah. They primarily demonstrate some of these phenomena empirically in this paper and showcase that this is happening, with some theoretical analysis as well. But as you say, we don't have an exact understanding of why this happens. It's a phenomenon that's interesting and has to be researched in more depth.

On to policy and safety. We begin with Taking a Responsible Path to AGI, which is a paper that is presenting an approach for that. This is from DeepMind and I guess is putting forth their general idea. They have previously introduced this idea of levels of AGI, which is sort of trying to define AGI as a set of levels of being able to automate a little bit of human labor, all of human labor, et cetera. And so they expand on that. They are also emphasizing the need for proactively having safety and security measures to prevent misuse. And it just generally goes over a whole lot of stuff. So I'll let you take over, Jeremy, and highlight what you thought was interesting.

Yeah, no, I mean, generally nothing too shocking here other than the fact that they're saying it out loud, taking seriously the idea of loss of control. So one notable thing about the blog post, not the long report itself, but just the blog post, is this: although in the report they look at four different risk categories, misuse, mistakes (so sort of traditional AI accidents, you could say), structural risks, and misalignment, the blog post itself is just basically about their thoughts on misalignment. There's some misuse stuff in there, but it's a blog post about loss of control. That's what it is.

It's clear that that's what DeepMind wants to signal to the world. Like, hey, yes, everyone seems aligned on this other stuff. But guys, guys, can we please? This is really important.

And so anyway, if you're familiar with the Google DeepMind research agenda on alignment, there's lots of stuff in there that will be familiar. So debate: let's keep superintelligent AIs honest by getting them to debate each other, and we'll use an AI judge. And hopefully, if a superintelligence is being dishonest, then that dishonesty will be detectable by a trusted judge. There are all kinds of challenges with that that we won't get into right now. But there's a lot of stuff in there about interpretability, sort of similar to some of the Anthropic research agenda and all that. They also flag the MONA paper. Anyway, this is something that we covered previously. You can check out our discussion on MONA.

But basically, it's a performance-alignment trade-off option, where you essentially are forcing the model to just reason over short timelines so it can't go too far off the deep end and do really dangerously clever stuff. You can try to ensure that it's more understandable to humans. So anyway, I thought that was interesting.

One thing that for me personally was interesting is there was a note on the security side for alignment. They say a key approach is to treat the model similarly to an untrusted insider, motivating mitigations like access control, anomaly detection, logging, and auditing. You know, one really important thing that the labs need to improve is just security generally, not just from a loss of control standpoint, but from nation state activities. And so I think a lot of these things are converging on security in a really interesting way. So there you have it. A lot more to say there, but we'll keep it to time.

Right. Yeah. The paper itself they published is 108 pages long. It basically is a big overview of the entire set of risks and approaches to preventing them. And they also mentioned in the blog post that they are partnering with various groups that also focus on this. They have the AGI Safety Council. They work with the Frontier Model Forum. And they published a course, the Google DeepMind AGI Safety Course, on YouTube, which is interesting. So yeah, clearly at least some people within DeepMind are very safety focused, it seems.

Next article is "This AI forecast predicts storms ahead." This is an article covering, I suppose, a report or an essay, I don't know what you'd call it, called AI 2027. This was a creation of some, I suppose, significant intellectuals, and it highlights a scenario that could be indicative of why you should worry about AI safety and how this stuff might play out. So I don't want to go into too much depth, because this is quite a detailed report of theirs. And we probably just cannot go into it, because it is a detailed fictional scenario.

But if you're interested in getting an idea of the sort of ways people are thinking about safety and why people think it's very important to be concerned about it, I think this is a pretty good read on that.

Yeah, it's about this write-up, as you said, called AI 2027. It's by a really interesting group of people. Daniel Kokotajlo is one of the more well-known ones. There's Scott Alexander from Slate Star Codex, or Astral Codex Ten, kind of famous in the AI alignment universe, the very niche ecosystem of AI alignment and all that. Daniel Kokotajlo is famous for basically telling OpenAI that he wouldn't sign their really predatory, it must be said, non-disparagement clauses that they were trying to force employees to sign before leaving. Daniel is known for having made really spot-on predictions for the state of AI going back, you know, three, four years. Right. So he basically said, here's where we'll be in 2026 or so, back then. And if you like, you should take a look at what he wrote up.

It's pretty remarkable. Like, it's kind of on the nose. So here he is essentially predicting that we hit superintelligence by 2027. I mean, I've had conversations with him quite frequently and he's been pretty consistent on this. I think one of the only reasons he didn't highlight the 2027 superintelligence possibility earlier was that things just get really hard to model out. So they tried to ground this as much as they could in concrete experimental data and theoretical results today.

And to map out what the government's response might be, what the private sector's response might be, how CapEx might flow. You know, I have some quibbles at the margins on the national security side and the China picture of this, but that's not what they were trying to really get down pat. I think this is a really great effort to make the rubber meet the road and create a concrete scenario that makes clear predictions. And we'll be able to turn back on this and say, did they get it right? And if they do get things right over the next 12 months, I think we have some important questions to ask ourselves about how seriously we then should take the actual 2027 predictions. And anyway, it is quite an interesting read.

Really interesting, because Daniel himself is a former OpenAI employee. So he's familiar with how people at OpenAI talk about this. And this certainly is consistent with conversations I've had with OpenAI, Anthropic, DeepMind, all these guys. Yeah, 2027 seems pretty plausible to me at least.

Yeah, and I would say I tend to agree that 2027 is plausible, at least for some definitions of superintelligence. For example, they are saying there would be a superintelligent coder that can effectively be a software engineer, like the good software engineers at Google. Pretty plausible to me. So, all in all, it's a very well-done sort of story, you could say, of what might happen.

Another story about OpenAI. Next, we have an article titled The Secrets and Misdirection Behind Sam Altman's Firing from OpenAI. So this is, there's a new book coming out with some of these kind of lurid details. And this article is presenting some of them. A lot of stuff we've mentioned already about kind of tensions between the board and Altman, just kind of more specifics as to

actual seemingly lies that were told and sort of toxic patterns that led to this happening. Pretty detailed article. If you want to know the details, go ahead and check it out. Yeah, I thought this was actually quite interesting because of the specifics. At the time,

The board, the OpenAI nonprofit board, fired Sam Altman and kind of like refused to give us a sense of their actual reasoning. Right. They famously said he was being fired for not being consistently candid about

At the time, I think we covered this. I distinctly remember saying on the podcast and elsewhere, like, I think unless the board comes out with a very clear reason, the obvious result of this is people are going to be confused and Sane is going to have all the leverage because you've just fired the guy who created $80 billion or so at the time of market cap and made these people's careers and you don't have an explanation for them. It was pretty clear that there actually was tension behind the scenes, but

Anyway, if you're in the space and plugged in and you know people in the orbit, you were probably hearing these stories too. There are a lot of concrete things that are serious, serious issues. So in particular, Sam Altman claiming allegedly that there were big model releases and enhancement to GPD-4 that had been approved by the Joint Safety Board when in fact that does not seem to have happened. This was an outright lie to the board that

All kinds of things around releases in India of an instance of GPT-4 that had not been approved. Again, the claim from Sam Altman being that that had happened. There's, oh yeah, then the OpenAI Startup Fund, which Sam Altman did not reveal to the board that he essentially owned or was a major equity holder in. It was sort of like managing it.

While at the same time claiming that he, anyway, claiming some level of detachment from it, the board found out by accident that he was actually running it. They thought it was being managed by OpenAI. And so, you know, this again, like over and over again, it does seem like Ilya Sutsky and Mira Marotti were behind the scenes, the ones driving this. So it wasn't even Helen Toner.

or any of the other board members, it was Mira and Ilya who were like, hey, we're seeing patterns of toxic behavior and not toxic in the kind of political sense, right? But just like, this is a dude who is straight up lying to people and on very, very kind of significant substantive things. And so, yeah, apparently they were concerned that they're just, the board was only,

in touch with some of the people who are affected by this stuff very, very sporadically. This is consistent with something I've heard

we'll be reporting on fairly soon, actually, just sort of criticism from former OpenAI researchers about the function of the board as being essentially to pretend to oversee the company. So this is really, really challenging, right? When you're leaning on the board to make the case that you're doing things in a responsible, kind of secure way and preventing, among other things, nation state actors from doing bad things. So anyway, lots of stuff to say there. The take home for me was

I'm now utterly confused given the actual strength of these allegations and the evidence, why it took the board so long to come out and say any, like they actually had cards. If this is true, they actually had cards to play. They had screenshots. Yeah, exactly. Right. This is like Mira Murati's like fricking Slack chat. Like, what are you doing guys? Like seriously, this was, if your goal was to, was to change out the leadership, like,

Great job giving Sam A all the leverage. Great job creating a situation where Satya could come in and offer Sam a job at Microsoft, and that gave Sam the leverage. That's what it seemed at the time. The board went about this in a very...

Clearly, they were secretive because they didn't want Altman to be aware of this whole conversation, but they went about it in a very confusing and perhaps confused fashion. And this just backs it up, basically. Yeah. Like, if this is true, again, if this is true, then this was some pretty amateurish stuff. And there's a lot of, I mean, it is consistent with some of the effective altruist theories.

vibing that does go on there where it's like everyone's so risk averse and trying not to make big moves and this and that. And like, unfortunately, it just seems to culturally be in the water. So yeah, I mean, like, sorry, there's no such thing as a low risk firing of the CEO of the largest privately held company in the world. Like that's, you know, big things are gonna happen. So anyway, I thought a fascinating read and something for the history books for sure.

And with that, we're going to go and close out this episode. Thank you for listening. Thank you, hopefully, for trying out the demo that we have launched at Astrocade. And as always, we appreciate you sharing, providing feedback, giving reviews, but more than anything, just continuing to listen. So please do keep tuning in.

Break it down.

♪ ♪ ♪ ♪ ♪ ♪ ♪ ♪

From neural nets to robots, the headlines pop, data-driven dreams, they just don't stop. Every breakthrough, every code unwritten, on the edge of change, with excitement we're smitten. From machine learning marvels to coding kings, futures unfolding, see what it brings.