#211 - Claude Voice, Flux Kontext, wrong RL research?

Last Week in AI

Deep Dive

- Anthropic launched a voice mode for Claude, lagging behind competitors but prioritizing enterprise needs.

- Black Forest Labs released Flux 1 Kontext, enabling both image generation and editing.

- Perplexity launched a tool for generating reports, spreadsheets, and dashboards, potentially driven by investor pressure.

- XAI paid Telegram $300 million to integrate Grok into its chat app, demonstrating a new monetization strategy.

- Opera announced an AI-powered browser, Opera Neon, capable of performing various tasks.

- Google Photos debuted a redesigned editor with new AI tools previously exclusive to Pixel devices.

Shownotes Transcript

Hello and welcome to the Last Week in AI podcast, where you can hear us chat about what's going on with AI. As usual, in this episode, we will summarize and discuss some of last week's most interesting AI news. You can go to the episode description to find timestamps for all the stories and the links, and we are going to go ahead and roll in. So I'm one of your regular co-hosts, Andrey Kurenkov. I studied AI in grad school, and I now work at a generative AI startup.

I'm your other regular co-host, Jeremy Harris. I'm with Gladstone AI, an AI national security company. And yeah, I want to say there are more papers this week than it felt like, if that makes sense. Does that make sense? I don't know. That's a very... It does make sense. It does make sense.

If you are from, let's say, the space where we're in, where you have sort of a vibe of how much is going on, and then sometimes there's more going on than you feel like is going on. When DeepSeek dropped, you know, V3 or R1, you have this one paper where it's like, you really have to read pretty much every page of this 50-page paper and it's all really dense. It's like reading six papers in one, you know, normally. So this week I feel like it was maybe a bit more, I don't want to say shallow, but like, you know, there were more shorter papers. Yeah.

Well, on that point, let's do a quick preview of what we'll be talking about. Tools and apps: we have a variety of kind of smaller stories, nothing huge compared to last week, but Anthropic, Black Forest Labs, Perplexity, XAI, a bunch of different small announcements.

Applications and business, talking about, I guess, what we've been seeing quite a bit of, which is investments in hardware and sort of international kinds of deals. A few cool projects and open source stories. A new DeepSeek, which everyone is excited about, even though it's not sort of a huge upgrade yet.

Research and advancements, as you said, we have slightly more in-depth papers going into data stuff, different architectures for efficiency, and touching on RL for reasoning, which we've been talking about a lot in recent weeks. And eventually in policy, we'll be talking about some law stuff within the US and a lot of sort of safety reporting going on with regards to o3 and Claude 4 in particular.

Now, before we dive into that, I do want to take a moment to acknowledge some new Apple reviews, which I always find fun. So thank you for the folks reviewing. We had a person leave a review that says, it's okay, and leaves five stars.

Glad you like it. It's okay. It's a good start. Although this other review is a little more constructive feedback.

The title is CapEx. And the text is: a drinking game where you drink every time Jeremy says CapEx. Did he just learn this word? You can just say money or capital. Is he trying to sound like a VC bro? And to be honest, I don't know too much about CapEx. So maybe-

I totally understand. So this reviewer's comment and their confusion, it looks like they're a bit confused over the difference between capital and CapEx. They are quite different, actually. There's a reason that I use the term. So money is money, right? It could be cash. It's like you could use it for anything at any time and it holds its value. CapEx, though, refers to money that you spend on

upgrading, maintaining long-term physical assets like buildings or sometimes vehicles or tech infrastructure like data centers, like chip foundries, right? Like these big, heavy, heavy things that are very expensive. One of the key properties they have that makes them CapEx

is that they're expected to generate value over many years and they show up on a balance sheet as assets that depreciate over time. So when you're holding on to CapEx, you're sort of, yes, you have $100 million of CapEx today,

but that's going to depreciate. So unlike cash that just sits in a bank, which just holds its value over time, your capex gets less and less valuable over time. You can see why that's especially relevant for things like AI chips. You spend literally tens of billions of dollars buying chips, but I mean, how valuable is an A100 GPU today, right? Four years ago, it was super valuable. Today, I mean, it's literally not worth the power you use to train things on it, right? So

The depreciation timelines really, really matter a lot. I think it's on me just for not clarifying why the term CapEx is so, so important. For folks who work in the tech space, and to the reviewer's comment here, yeah, I guess this is VC bro language,

because yeah, CapEx governs so much of VC, so much of investing, especially in this space. So this is a great comment. I think it highlights something that I should have kind of made clear is like why I'm talking about CapEx so much, why I'm not just using terms like money or capital, which don't have the same meaning in this space. Look, I mean, people are spending hundreds of billions of dollars every year on this stuff. You're going to hear the word CapEx a lot. It's a key part of what makes AI today AI. But yeah, anyway, I appreciate the-

the drinking game too. I'm sure there's many drinking games you can come up with for this podcast.

CapEx, by the way, stands for capital expense or capital expenditure. So basically the money you spend to acquire capital, where capital is things that you do stuff with, more or less. So as you said, GPUs, data centers. And so we've been talking about it a lot because to a very extreme extent, companies like Meta and OpenAI and XAI are all spending unprecedented sums of money investing in capital upfront for GPUs and data centers, just bonkers numbers. And it is really capital, which is distinct from just large expenditures.
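As a rough illustration of the depreciation point made above (this sketch is not from the episode), here is how the book value of a hardware purchase might fall over time. It assumes simple straight-line depreciation, a hypothetical 4-year useful life, and zero salvage value; real accounting schedules for GPUs vary by company, and the function name is made up for this example.

```python
def straight_line_book_value(cost, salvage, useful_life_years, year):
    """Book value of an asset after `year` years of straight-line depreciation.

    All inputs are illustrative assumptions, not figures from the episode.
    """
    if useful_life_years <= 0:
        raise ValueError("useful_life_years must be positive")
    # Clamp the year so the asset never goes below its salvage value.
    year = min(max(year, 0), useful_life_years)
    annual_depreciation = (cost - salvage) / useful_life_years
    return cost - annual_depreciation * year


if __name__ == "__main__":
    # Hypothetical: $100 million of GPUs, 4-year life, no salvage value.
    for year in range(5):
        value = straight_line_book_value(100_000_000, 0, 4, year)
        print(f"year {year}: ${value:,.0f}")
```

Cash in a bank account would show the same nominal number in every row; the CapEx line shrinks every year, which is why the assumed useful life matters so much for AI chips.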

Last thing I'll say is I do want to acknowledge I have not been able to respond to some messages. I have been meaning to get around to some people that want to give us money by sponsoring us and also chat a bit more on Discord. Life's got busy with startups, so I've not been very responsive. But just FYI, I'm aware of these messages and I'll try to make some time for them.

And that's it. Let's go to tools and apps, starting with Anthropic launching a voice mode for Claude.

So there you go. It's pretty much the sort of thing we have had in ChatGPT, and I think also Grok, where in addition to typing to interact with the chatbot, you can now just talk to it. For now it's just in English. So it will listen and respond to your voice messages. I think they're getting around to this kind of late, quite a while after ChatGPT. One of the articles, I think, said Anthropic "finally" launches a voice mode. And it is part of Anthropic's strategy that's worth noting, where they do have a consumer product that competes with ChatGPT, Claude, but it has often lagged in terms of the feature set. And that's because Anthropic has prioritized the sorts of things that enterprise customers, big businesses, benefit from. And I assume big businesses maybe don't care as much about this voice mode.

Yeah. It's all about, to your point, it's all about APIs. It's all about coding capabilities, which is why Anthropic tends to do better than OpenAI on the coding side, right? That's actually been a thing since at least Sonnet 3.5, right? So-

Yeah, this is continuing that trend of Anthropic being later on the more kind of consumer-oriented stuff. Like XAI has it, OpenAI has it, right? We've seen kind of these voice modes for all kinds of different chatbots, and here they are, in a sense, catching up. It's also true that Anthropic is forced to some degree to have more focus, which...

may actually be an advantage. It often turns out to be for startups, at least, because they just don't have as much capital to throw around, right? They haven't raised the, you know, well, the speculative $100 billion or so for Stargate equivalent, like they've raised sort of not quite the same order of magnitude, but getting there, they're lagging behind on that side. So they have to pick their battles a little bit more carefully. So no surprise that this takes a backseat to some degree to the, as you say, the key strategic plays of

It's also because of their views around recursive self-improvement and the fact that getting AI to automate things like alignment research and AI research itself, that's the critical path to superintelligence. They absolutely don't want to fall behind OpenAI on that dimension. So, you know, maybe unsurprising that you're seeing what seems like it's a real gap, right? Like a massive consumer market for voice modes. But, you know, there are strategic things at play here beyond that.

Right. And circling back to the feature itself, it seems pretty good from the video they released. The voice is pretty natural sounding, as you would expect. It can respond to you. And I think one other thing to note is that this is currently limited to the Claude app. It's not online. And they actually demonstrated it by suggesting you try starting a voice conversation and asking Claude to summarize your calendar or search your docs.

So it seems to be kind of emphasizing the recent push for integrations through the Model Context Protocol, where you can use it as an assistant more so than you were able to before because of integrations with things like your calendar. So there you go, Claude fans, you've got the ability to chat with Claude now.

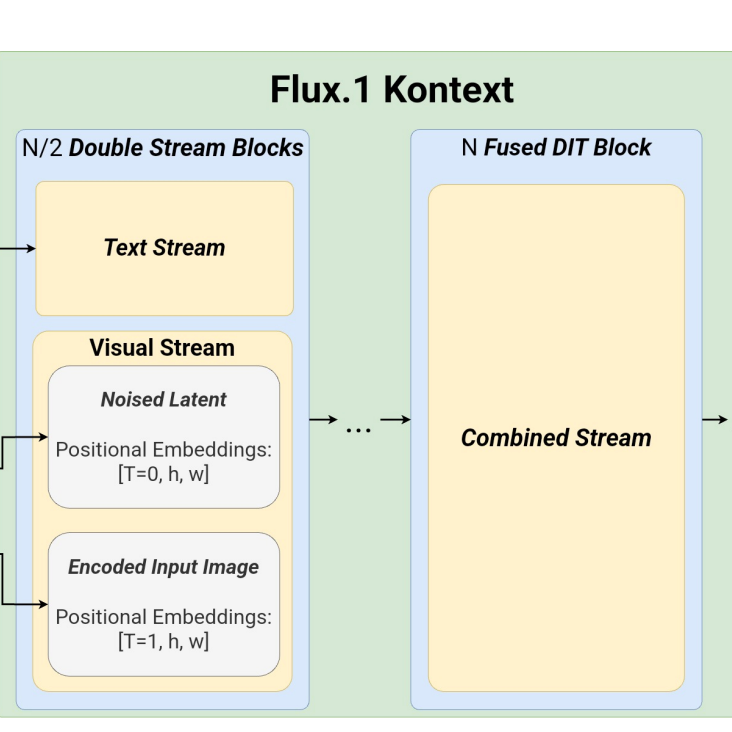

And next story, we have: Black Forest Labs' Kontext AI models can edit pics as well as generate them. So Black Forest Labs is a company started last year by some people involved in the original text-to-image models, or some of the early frontrunners, like Stable Diffusion. And they launched Flux, which is still one of the kind of state-of-the-art, really, really good text-to-image models. And they provide an API, they open source some versions of Flux that people have used, and they do

kind of lead the pack on text-to-image model training. And so now they are releasing a suite of image generating models called Flux 1 Kontext that is capable not just of creating images, but also editing them, similar to what you've seen with ChatGPT image generation and Gemini, where you can attach an image, you can input some text, and it can then modify the image in pretty flexible ways, such as removing things, adding things, etc. They have Kontext Pro, which has multiple turns, and Kontext Max, which is more meant to be fast and speedy. Currently, this is available through the API, and they are promising an open model, Kontext Dev. It's currently in private beta for research and safety testing and will be released later.

So I think, yeah, this is something worth noting with image generation. There's been, I guess, more emphasis or more need for robust image editing. And that's been kind of a surprise for me, the degree to which

you can do really, really high quality image editing like object removal just via large models with text image inputs. And this is the latest example. It's especially useful, right? When you're doing generative AI for images, just because so much can go wrong, right? Images are so high dimensional that if you, you know, you're not going to necessarily one shot the perfect thing with one prompt, but often you're close enough. You want to kind of keep the image and play with it. So it makes sense.

I guess intuitively that's a good direction, but yeah. And there are a couple of quick notes on this strategically. So first off, this is not downloadable. So the Flux 1 Kontext Pro and Max can't be downloaded for offline use. That's as distinct from their previous models. And this is something we've seen: basically every open source company at some point goes, oh wait, we actually kind of need to go closed source, almost no matter how loud and proud they were about the need for open source and the sort of

virtues of it. This is actually especially notable because a lot of the founders of Black Forest Labs come from Stability AI, which has gone through exactly that arc before. And so everything old is new again. Hey, we're going to be the open source company, but not always the case. One of the big questions in these kind of image generation model spaces is always like, what's your differentiator?

You mentioned the fidelity of the text writing. Every time a model like this comes out, I'm always asking myself, okay, well, what's really different here? I'm not an image, like a text-to-image guy. I don't know the market for it well. I don't use it to edit videos or things like that. But one of the key things here that at least to me is a clear value add is they are focused on inference speedups. So they're saying it's eight times faster than current leading models.

and competitive on typography, photorealistic rendering and other things like that. So really trying to make the generation speed, the inference speed, one of the key differentiating factors anyway.

I do think worth noting that this is not actually different from their previous approaches. So if you look at Flux, for instance, they also launched Flux 1.1 Pro, Flux 1 Pro available on their API, and they launched dev models, which are their open weight models that they released to the community. So

This is, I think, yeah, pretty much following up on previous iterations. And as you said, early on with Stable Diffusion, Stability AI had a weird business model, which is just like, let's train the models and release them, right? And that has moved toward this kind of tiered system where you might make a few variations and release one of them as the open source one. So Flux 1 Dev, for instance, is distilled from Flux 1 Pro, has similar quality, also really high quality. And so they still kind of have it both ways, where you're a business with an API, with cutting-edge models, but you also are contributing to open source.

And a few more stories. Next up, we have: Perplexity's new tool can generate spreadsheets, dashboards, and more. So Perplexity is the startup that has focused on AI search: basically you enter a query and it goes around the web and generates a response for you with a summary of a bunch of sources. They have launched Perplexity Labs, which is a tool for their $20-per-month Pro subscribers that is capable of generating reports, spreadsheets, dashboards, and more. And this seems to be kind of a move towards what we've been seeing a lot of, which is sort of the agentic applications of AI. You give it a task and it can do much more in-depth stuff, can do research and analysis, can create reports and visualizations,

similar to deep research from OpenAI and also Anthropic. And we have so many deep researchers now. And this is that, but seems to be a little bit more combined with reporting, visuals, spreadsheets, and so on.

Yeah. It's apparently also consistent with some more kind of B2B, corporate-focused functionalities that they've been launching recently. There's speculation in this article that this is maybe because some of the VCs that are backing Perplexity are starting to want to see a return sooner rather than later. They're looking to raise about a billion dollars right now, potentially at an $18 billion valuation. And so

You know, you're starting to get into the territory where it's like, okay, you know, like, so when's that IPO coming, buddy? Like, you know, when are we going to see that ROI? And I think especially given the place that perplexity lives in in the market, it's pretty precarious, right? They are squeezed between absolute monsters.

And it's not clear that they'll have the wherewithal to outlive, outlast your OpenAIs, your Anthropics, your Googles in the markets where they're competing against them. So we've talked about this a lot, but like the startup life cycle in AI, even for these monster startups,

seems a lot more boom busty than it used to be. So like you skyrocket from zero to like a billion dollar valuation very quickly, but then the market shifts on you just as fast. And so you're making a ton of money and then suddenly you're not, or suddenly the strategic landscape just kind of the ground kind of shifts under you and you're no longer where you thought you were.

Which, by the way, I think is an interesting argument for lower valuations in this space. And I think actually that that is what should happen. Pretty interesting to see this happen, potentially to perplexity. Right. And perplexity, this article also notes, this might be part of a broader effort to diversify. They're apparently also working on a web browser.

And it makes a lot of sense. Perplexity came up being the first sort of demonstration of AI for search. That was really impressive. Now, everyone has AI for search: ChatGPT, Claude, and Google just launched their AI Mode. So I would imagine Perplexity might be getting a little nervous given these very powerful competitors, as you said.

Next, a story from XAI. They're going to pay Telegram $300 million to integrate Grok into the chat app. So this is slightly different in the announcement. They painted this as more of a partnership agreement, and XAI as part of the agreement will pay Telegram this money, and Telegram will also get 50% of the revenue from XAI subscriptions purchased through the app. This is going to be very similar to what you have with WhatsApp, for instance, and others, where, you know, pinned to the top of your messaging app (and Telegram is just a messaging app similar to WhatsApp) there's, like, an AI you can message to chat with a chatbot. It also is integrated in some other ways. I think summaries, search, stuff like that. So interesting move.

I would say like Grok is already on X and Twitter and, trying to think through, I suppose this move is trying to compete with ChatGPT, Claude, Meta for usage, for mindshare. Telegram is massive, used by a huge amount of people. Grok, as far as I can tell, isn't huge in the landscape of LLMs. So this could be an aggressive move to try and gain more usage.

It's also a really interesting new way to monetize previously relatively unprofitable platforms. Think about what it looks like if you're Reddit. Suddenly, what you have is eyeballs. What you have is distribution and traffic.

OpenAI, Google, XAI, everybody wants to get more distribution for their chatbots, wants to get people used to using them. And in fact, that'll be even more true as there's persistent memory for these chatbots. You kind of get to know them and the more you give to them, the more you get. So they become stickier. So this is sort of interesting, right? Like XAI offering to pay $300 million. It is in cash and equity, by the way, which itself is interesting. That means that Telegram presumably then has equity in XAI. Right.

If you're a company like Telegram and you see the world of AGI happening all around you, there are an awful lot of people who would want some equity in these non-publicly traded companies like XAI, like OpenAI, but who can't get it any other way. So that ends up being a way to hitch your wagon to a potential AGI play, even if you're in a fairly orthogonal space like a messaging company. So I can see why that's really appealing for Telegram strategically, but

The, yeah, the other way around is really cool too, right? Like if all you are is just a beautiful distribution channel, then yeah, you're pretty appealing to a lot of these AI companies, and you also have interesting data, but that's a separate thing, right? We've seen deals on the data side. We haven't seen deals so much on distribution. We've seen some, actually, you know, the classic kind of Apple-OpenAI things, but this is an interesting, at least first one on Telegram and XAI's part for distribution of the AI assistant itself.

Right. And just so we're not accused of being VC bros again, equity, just another way to say stocks, more or less. And notable for XAI. Inequity on the show. Notable for XAI because they recently...

XAI is an interesting place because they can sort of claim whatever valuation they want to a certain extent, with Elon Musk having kind of an unprecedented level of control. They do have investors, they do have a board, but Elon Musk is kind of unique in that sense.

He doesn't care too much about satisfying investors, in my opinion. And so if the majority of this is equity, you can think of it a little bit as magic money. $300 million may not be $300 million. Anyway, interesting development for Grok.

Next up, we have: Opera's new AI browser promises to write code while you sleep. So Opera has announced this new AI-powered browser called Opera Neon, which is going to perform tasks for users by leveraging AI agents. So another agentic play, similar to what we've seen from Google actually, and things like deep research as well. So there's no launch date or pricing details, but I remember we were talking last year about how this was going to be the year of agents. And

Somehow, I guess it took a little longer than I would have expected to get to this place. But now we are absolutely in the year of agents: deep research, OpenAI Operator, Microsoft Copilot now everywhere, Gemini. All of them are at a place where you tell your AI, go do this thing. It goes off and does it for a while. And then you come back and it has completed something for you. That's the current deep investment and it will keep being, I think, the focus.

I'm just looking forward to the headline that says, OpenAI's new browser promises to watch you while you sleep. But that's probably in a couple months. Yeah.

Thank you for writing code for me while I sleep. We have an example here. Create a retro snake game interactive web location designed specifically for gamers. Not what we expected browsers to be used for, but it's the age of AI, so who knows?

Last up, a story from Google: Google Photos has launched a redesigned editor that is introducing new AI features that were previously exclusive to Pixel devices. So in Google Photos, you now have the Reimagine feature that allows you to alter objects and backgrounds of photos, and also an Auto Frame feature, which suggests different framing options and so on. They have it all laid out in kind of a nice way that's accessible. And lastly, it also has AI-powered suggestions for quick edits with an AI Enhance option. So, you know, they've been working on these sorts of image editing tools in Google Photos for quite a while. So probably not too surprising.

And on to applications and business. First up: Chinese memory maker CXMT is expected to abandon DDR4 manufacturing at the behest of Beijing. So DDR4 is a memory product, and the idea is that they are looking to transition towards DDR5 production to meet the demand for newer devices. That is at least partially so they can work on high bandwidth memory as well, HBM, which as we've covered in the past is really essential for constructing big AI data centers and getting lots of chips, lots of GPUs, to work together to power big models.

Yeah, this is a really interesting story from the standpoint of just the way the Chinese economy works and how it's fundamentally different from the way economies in the West work. This is the Chinese Communist Party turning to a private entity, right? This is CXMT.

By the way, so CXMT, you can think of it roughly as China's SK Hynix. And if you're like, well, what the fuck is SK Hynix? Aha. Well, here's what SK Hynix does. If you go back to our hardware episode, you'll see more on this, but

You think about a GPU, a GPU has a whole bunch of parts, but the two main ones that matter the most are the logic, which is the really, really hard thing to fabricate. So super, super high resolution fabrication process for that. That's where all the number crunching operations actually happen. So the logic die is usually made by TSMC in Taiwan, but then there's the high bandwidth memory. These are basically stacks of like a stack of chips that

kind of integrate together to make a, well, a stack of high bandwidth memory or HBM. The thing with high bandwidth memory is it stores the intermediate results of your calculations and the inputs. And it's just really, really rapid, like quick to access. And you can pull a ton of memory off it. That's why it's called high bandwidth memory. And so you've got the stacks of high bandwidth memory. You've got the logic die. The high bandwidth memory is made by SK Hynix. It's basically the best

company in the world at making HBM. Samsung is another company that's pretty solid and plays in the space too. China has really, really got to figure out how to do high bandwidth memory. They can't right now. If you look at what they've been doing to acquire high bandwidth memory, it's basically using Samsung and SK Hynix to send them chips. Those have recently been export controlled. So there's a really big push now for China to get CXMT to go, hey, okay, you know what? We've been making this DRAM, basically it's just a certain kind of memory. They're really good at it. High bandwidth memory is a kind of DRAM, but it's stacked together in a certain way. And then those stacks are linked together using through-silicon vias, which are, anyway, technically challenging to implement. And so China's looking at CXMT and saying, hey, you know what? You have the greatest potential to be our SK Hynix. We now need that solution. So we're going to basically order you to phase out your previous generation, your DDR4 memory. This is traditional DRAM.

The way this is relevant, it actually is relevant in AI accelerators. This is often a CPU memory connected to the CPU or a variant like LPDDR4, LPDDR5. You often see that in schematics of, for example, the NVIDIA GB200 GPUs. So you'll actually see there like the LPDDR5 that's hanging out near the CPU to be its memory.

Anyway, so they want to move away from that to the next generation, DDR5, and also, critically, to HBM. They're looking to target validation of their HBM3 chips by late this year. HBM3 is the previous generation of HBM. We're now on to HBM4. So that gives you a little bit of a sense of how far China's lagging. It's roughly probably about anywhere from two to four years behind on the HBM side. So that's a really important detail. Also worth noting, China stockpiled massive amounts of SK Hynix HBM. So they're sitting on that, and that'll allow them to keep shipping stuff in the interim. And that's the classic Chinese play, right? Stockpile a bunch of stuff when export controls hit, start to onshore the capacity with your domestic supply chain. And you'll be hearing a lot more about CXMT. So when you think about TSMC in the West, well, China has SMIC, that's their logic fab. And when you think about SK Hynix or Samsung in the West, they have CXMT. So you'll be hearing a lot more about those two, SMIC for logic, CXMT for memory, going forward.

Next up, another story related to hardware: Oracle to buy $40 billion worth of NVIDIA chips for the first Stargate data centers.

So this is going to include apparently 400,000 of Nvidia's latest GB200 superchips. And Oracle will be leasing computing power from these chips to OpenAI. Oracle, by the way, is a decades-old company hailing from Silicon Valley that made its money in database technology. And they have been competing on the cloud for a while. They were lagging behind Amazon and Google and Microsoft and have seen a bit of a resurgence with some of these deals concerning GPUs in recent years.

Yeah. And this is all part of the Abilene Stargate site, 1.2 gigawatts of power. So, you know, roughly speaking, 1.2 million homes' worth of power just for this one site. And it's pretty wild that there's also a kind of related news story where JPMorgan Chase has agreed to lend over $7 billion to the companies that are financing, or sorry, building the Abilene site. And it's already been a big partner in this. So you'll be hearing more probably about JPM on the funding side, but-

Yeah, this is Crusoe and Blue Owl Capital. We talked a lot about those guys. We've been talking about them, it feels like, for months. The sort of classic combination of the data center construction and operations company and the funder, the kind of financing company. And then, of course, OpenAI being the lab. So there you go. Truly classic. Yeah.
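The back-of-envelope numbers in this story can be checked with a few lines of arithmetic. This is only a sketch built from the figures quoted in the discussion ($40 billion, 400,000 GB200 superchips, 1.2 gigawatts); the 1 kW average household draw is an assumption implied by the "1.2 million homes" rule of thumb, not a measured figure, and the function names are made up for this example.

```python
def implied_cost_per_chip(total_usd, chip_count):
    """Average spend per chip implied by a headline deal size."""
    return total_usd / chip_count


def homes_equivalent(site_watts, avg_home_watts=1_000):
    """Number of homes a site's power could supply.

    avg_home_watts defaults to 1 kW, an assumed round number that
    matches the rule of thumb used in the episode.
    """
    return site_watts / avg_home_watts


if __name__ == "__main__":
    per_chip = implied_cost_per_chip(40_000_000_000, 400_000)
    homes = homes_equivalent(1_200_000_000)
    print(f"implied spend per GB200 superchip: ${per_chip:,.0f}")
    print(f"homes' worth of power at 1 kW each: {homes:,.0f}")
```

The per-chip figure is just the headline total divided by the chip count, so it should be read as an average that may include more than the bare silicon.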

And another story, kind of in the same geographic region, but very different. The UAE is making a ChatGPT Plus subscription free for all residents as part of a deal with OpenAI. So this country is now offering free access to ChatGPT Plus to its residents as part of a strategic partnership with OpenAI related to Stargate UAE, the infrastructure project in Abu Dhabi. So apparently there's an initiative called OpenAI for Countries, which helps nations build AI systems tailored to local needs. And yeah, this is just another indication of the degree to which there are strong ties being made with the UAE in particular by OpenAI and others.

Yeah, this is also what you see in a lot of, you know, the Gulf states. Saudi Arabia famously essentially just gives out a stipend to its population as a kind of a bribe so that they don't turn against the royal family and murder them because, you know, that's kind of how shit goes there.

So, you know, this is in that tradition, right? Like the UAE as a nation state is essentially guaranteeing their population access to the latest AI tools. It's kind of like on that spectrum. It's sort of interesting. It's a very foreign concept to a lot of people in the West. Like the idea that you'd have your central government just like telling you like, hey, this tech product, you get to use it for free because you're a citizen. It's also along the spectrum of the whole universal basic compute argument that a lot of people in the OpenAI universe and elsewhere have been arguing for. So in that sense, I don't know, kind of interesting, but this is part of the build out there. There's a, you know, like a one gigawatt cluster that's already in the works. They've got 200 megawatts expected to be operational by next year. That's all part of that UAE partnership. Hey, cheap UAE energy, cheap UAE capital, same with Saudi Arabia, you know, nothing new under the very, very hot Middle Eastern sun.

Right. And for anyone needing a refresher on your geopolitics, I suppose, UAE, Saudi Arabia, countries rich from oil, like filthy rich from oil in particular, and they are strategically trying to diversify.

And this big investment in AI is part of the attempt to channel their oil riches towards other parts of the economy that would mean that they're not quite as dependent. And that's why you're seeing a lot of focus in that region. There's a lot of money to invest and a lot of interest in investing it. Yeah. And the American strategy here seems to be to essentially kick out

Chinese influence in the region from being a factor. So we had Huawei, for example, making Riyadh in Saudi Arabia, like a regional AI inference hub. There are a lot of efforts to do things like that. So this is all part of trying to invest more in the region to butt out Chinese dollars and Chinese investment.

Given that we're approaching potentially the era of super intelligence where AI becomes a weapon of mass destruction, like it's, you know, up to you to figure out how you feel about facing potential nuclear launch silos in the middle of the territory of countries that America has a complex historical relationship with. Like, it's not, yeah, you know, bin Laden was a thing. You know, I'm old enough to remember that. Anyway, so we'll see. And there are obviously all kinds of security issues.

Questions around this, we'll probably do a security episode at some point. I know we've talked about that. And that'll certainly loop in a lot of these sorts of questions as part of a deep dive. Next, NVIDIA is going to launch cheaper Blackwell AI chips for China, according to a report. So Blackwell is the top of the line.

GPU, we have had, what is the title for the H chips? Hopwell? Oh, Hopper. Yeah, Hopper. Hopper, exactly right. So there, as we've covered many times, they had the H20 chip, which was their watered down chip specifically for China. Recently they had to stop shipping those.

Now they're trying to develop this Blackwell AI chip, seemingly kind of repeating the previous thing, like designing a chip specifically that will comply with US regulations to be able to stay in the Chinese market. And who knows if that's going to be doable for them.

Yeah, it's sort of funny, right? Because it's like every time you see a new round of export controls come out and you're like, all right, now we're playing the game of like, how specifically is NVIDIA going to sneak under the threshold and give China chips that meaningfully accelerate their domestic AI development?

undermining American strategic policy. At least that was certainly how it was seen in the Biden administration, right? Gina Raimondo, the secretary of commerce was making comments. Like I think at one point she said, Hey, listen, fuckos, if you let, if you fucking do this again, if you do it again, I'm going to lose my shit. She had a quote that was kind of like that.

It was weird. Like you don't normally see, obviously there wasn't cursing. Okay. This is a family show. It was very much in that direction. And, and here they go, here they go again. It is getting harder and harder, right? Like at a certain point, the export controls do create just a, a mesh of coverage that just, it's not clear how you actually continue to compete in that market. And NVIDIA has certainly made that argument.

It is the case that last year, the Chinese market only accounted for about 13% of NVIDIA sales, which is both big and kind of small. Obviously, if it wasn't for export controls, that number would be a lot bigger. But yeah, anyway, it's also noteworthy that this does not use TSMC's CoWoS packaging process. So it uses a less advanced packaging process. That, by the way, again, we talked about in the hardware episode, but...

You have your logic dies, as we discussed. You have your high bandwidth memory stack. They need to be integrated together to make one GPU chip.

And the way you integrate them together is that you package them. That's the process of packaging. There's a very advanced version of packaging technology that TSMC has that's called CoWoS. There's CoWoS-S, CoWoS-L, CoWoS-R. But bottom line is that's off the table, presumably because it would cause them to kind of tip over the next tier of capability. But we've got to wait to see the specs. I'm really curious how they choose to try to slide under

the export controls this time. And we won't know, but production is expected to begin in September. So certainly by then we'll know. And one more business story, not related to hardware for once. The New York Times and Amazon are inking a deal to license New York Times data. So very much similar to what we've covered with OpenAI signing deals with many publishers like, I forget, Vimeo.

It was a bunch of them. The New York Times has now agreed with Amazon to provide their published content for AI training and also as part of Alexa. And this is

coming after a lot of these publishers made these deals already and after the New York Times has been in an ongoing legal battle with OpenAI over using their data without licensing. So yeah, another indication of the world we live in where if you're a producer of high quality content and high quality real-time content, you now kind of

have another avenue to collaborate with tech companies. Yeah. And so apparently this is the first, it's both the first deal for the New York Times and the first deal for Amazon. So that's kind of interesting. One of the things I have heard in the space from like insiders at the companies is that there's often,

a lot of hesitance around revealing publicly the full set of publishers that a given lab has agreements with and the amount of the deals. And the reason for this is that it sets precedents and it causes them to worry that like if there's somebody they forgot or whatever, and they end up training on that data,

This just creates more exposure because obviously the more you normalize, the more you establish that, hey, we're doing deals with these publishers to be able to use their data, the more that implies, okay, well then presumably you're not allowed to use other people's data, right? Like you can't just, if you're paying for the New York Times' data, then surely that means if you're not paying for the Atlantic, then you can't use the Atlantic, right?

Anyway, it's super unclear, sort of murky right now what the legalese around that's going to look like. But yeah, the other thing, right, one key thing you think about is exclusivity. Can the New York Times make another deal under the terms of this agreement with another lab, with another hyperscaler? Also unclear. This is all stuff that we don't know what the norms are in this space right now because everything's being done in flight and being done behind closed doors.

And next up, moving on to projects and open source. First story is DeepSeek's distilled new R1 AI model can run on a single GPU. So this new model's full title is DeepSeek-R1-0528-Qwen3-8B or as some people on Reddit have started calling it, Bob. And so this is a smaller model, a more efficient model compared to R1 and

8 billion parameters as per the title. And apparently it outperforms Google's Gemini 2.5 Flash on challenging math questions. Also nearly matches Microsoft's Phi 4 reasoning model. So yeah, small model that can run on a single GPU and is quite capable.

Yeah, and it's like not even a, you know, we're not even talking a Blackwell here. Like the 40 to 80 gigabytes of RAM is all you need. So that's an H100 basically. So cutting edge as of sort of last year GPU, which is pretty damn cool. For context, the full size R1 needs about a dozen H100 GPUs. So it's quite a bit smaller and very much more, well, I'd say very much more kind of friendly to people

enthusiasts. Hey, what does an H100 GPU go for right now? Like, tens of thousands of dollars? Okay, but still, only one GPU. How much can that cost? Exactly, the price of, like, you know, a car.

But yeah, it's apparently, yeah, it does outperform Gemini 2.5 Flash, which by the way, that's a fair comparison. Obviously you're looking at the, you want to compare scale-wise, right? What do other models do that are at the same scale? Phi-4 Reasoning Plus is another one, that's Microsoft's recently released reasoning model. And actually compared to those models, it does really well specifically on these reasoning benchmarks. So the AIME benchmark, the sort of famous

national level exam in the US that's about math. And I think it's like the trial exam for the Math Olympiad or something. It outperforms, in this case, Gemini 2.5 Flash on that. And then it outperforms Phi 4 Reasoning Plus on HMMT, which is kind of interesting. This is less often talked about, but it's actually harder than the AIME exam. It covers some kind of broader set of topics like mathematical proofs.

And anyway, it outperforms Phi-4 Reasoning Plus. I'm not saying 5.4, by the way, that's Phi-4 Reasoning Plus, from the Phi series of models from Microsoft. So legitimately impressive, a lot smaller in scale and cheaper to run than the full R1. And it is distilled from it

And I haven't had time to look into it. So actually, yeah, it was just trained, that's it, by fine-tuning Qwen3, the 8 billion parameter version of Qwen3, on R1 outputs. So it wasn't trained via RL directly. So in this sense, boys, it's an interesting question. Is it a reasoning model?

Ooh, ooh, is it a reasoning model? Fascinating. Philosophers will debate that. We don't have time to because we need to move on to the next story. But yeah, does it count as a reasoning model if it is supervised fine-tuned off of the outputs of a model that was trained with RL? Bit of a head scratcher for me. Right. And this, similar to DeepSeek R1, is being released fully open source, MIT licensed. You can use it for anything. Right.

Maybe what would have been worth mentioning prior to going into Bob, this is building on DeepSeek R1-0528. So they do have a new version of R1 specifically, which is what they say is a minor update. We've seen some reporting indicating it might be a little bit more censored as well.

But either way, DeepSeek R1 itself received an update, and this is the smaller Qwen3 trained on data generated by that newer version of R1.

Next, we have Google is unveiling SignGemma, an AI model that can translate sign language into spoken text. So Gemma is the series of models from Google that is smaller and open source. SignGemma is going to be an open source model and apparently would be able to run without needing an internet connection, meaning that it is

smaller. Apparently this is being built on the Gemini Nano framework. And of course, as you might expect, uses a vision transformer for analysis. So yeah, cool. I mean, I think this is one of the applications that has been quite obvious for AI. There's been various demos, even probably companies working on it. And Google is no doubt going to reap some well-deserved kudos for the release.

Yeah, Italians around the world are breathing a sigh of relief that they can finally understand and communicate with their AI systems by waving their hands around. I'm allowed to say that. I'm allowed to say that. My wife's Italian. That gives me the pass on this. Yeah, I know. It's...

It is pretty cool too, right? For accessibility and people can actually, hopefully this opens up, actually, I don't know much about this, but for people who are deaf, I do wonder if this does make a palpable UX difference, if there are ways to integrate this into apps and stuff that would make you go, oh, wow, this is a lot more user-friendly. I don't have a good sense of that, but... Right. And also notably...

pretty much real time, and that's also a big deal, right? This is in the trend of having real-time, not translation, well, translation, I suppose, from sign language to spoken text. Next, Anthropic is open sourcing their circuit tracing tool. So we covered this new exciting interpretability research from Anthropic, I think a month or so ago. They

have extended their sequence of works on trying to find interpretable ways to understand what is going on inside a model. Most recently, they have been working on circuits, which is kind of the abstracted version of a neuron itself, where you have interpretable features like, oh, this is focusing on the decimal point. This is focusing on the even numbers, right?

And this is now an open source library that allows other developers to analyze their models and understand them. So this release specifically enables people to trace circuits on supported models and

visualize, annotate, and share graphs on an interactive frontend and test hypotheses. And they already are sharing an example of how to do this with Gemma 2 2B and Llama 3.2 1B. Yeah, definitely check out the episode that we did on the circuit tracing work. It is really cool. It is also very janky.

So I've talked to a couple of researchers at Anthropic, none who work specifically on this, but generally I'm not getting anybody who goes like, oh yeah, this is it. It's not clear if this is even on the critical path to being able to kind of like, you know, control AGI level systems on the path to ASI. Like it's,

There's a lot that you have to do that's sort of like janky and customized and all that stuff. But the hope is, you know, maybe we can accelerate this research path by open sourcing it. And that is consistent with Anthropic's threat models and how they've tended to operate in the space by just saying, hey, you know, whatever it takes to accelerate the alignment work and all that. And certainly they mentioned in the blog post that Dario, the CEO of Anthropic,

Recently wrote about the urgency of interpretability research at present. Our understanding of the inner workings of AI lags far behind the progress we're making in AI capabilities. So making the point that, hey, this is really why explicitly we are open sourcing this. It's not just supposed to be an academic curiosity. We actually want people to build on this so that we can get closer to the sort of overcoming the safety and security challenges that we do.

And last story, kind of a fun one. Hugging Face unveils two new humanoid robots. So Hugging Face acquired this company, Pollen Robotics,

pretty recently, and they now unveiled these two robots they say will be open source. So they have HopeJR, or Hope Junior, presumably, which is a full-size humanoid with 66 degrees of freedom, aka 66 things it can move. Quite significant, apparently capable of walking and manipulating objects.

They also have Reachy Mini, which is a desktop unit designed for testing AI applications and has a fun little head. It can move around and talk and listen. So they are saying this might be shipping towards the end of the year. Hope Junior is going to cost something like $3,000 per unit, quite low. Reachy Mini is expected to be only a couple hundred bucks.

So yeah, weird kind of direction for Hugging Face to go for, honestly, these investments in open source robots, but they are pretty fun to look at. So I like it. Yeah, you know what? I think...

From a strategic standpoint, I don't necessarily dislike this in that Hugging Face has the potential to turn themselves into the Apple store for robots, right? Because they are the hub already of so much open source activity. One of the challenges with robotics is one of the bottlenecks is like writing the code or the models that can map intention to behavior and control the sensors and actuators that need to be controlled to do things. So I could see that actually being

one of the more interesting monetization avenues long-term that Hugging Face has before it, but it's so early. And yeah, I think you might've mentioned this, right? The shipping starts sometime potentially with a few units being shipped kind of at the end of this year, beginning of next. The cost, yeah, $3,000 per unit, pretty small. I gotta say, I'm surprised Optimus, like all these robots seem to have price tags that are pretty accessible or look that way. They are offering...

a slightly more expensive $4,000 unit that will not murder you in your sleep. So that's a $1,000 lift that you could attribute to the threat of murder. I'm not saying this. Hugging Face is saying this, okay? This is, that's in there. I don't know why, but they have chosen to say this.

And this is following up on them releasing also LeRobot, which is their open source library for robotics development. So trying to be a real leader in the open source space for robotics. And to be fair, there's much less work there on open source. So there's kind of an opportunity to be the PyTorch or whatever, the transformers of robotics. Yeah.

Onto research and advancements. First, we have Pangu Pro MoE, Mixture of Grouped Experts for Efficient Sparsity. So this is a variation on the traditional mixture of experts model and the

basic gist of the motivation is when you are trying to do inference with a mixture of experts model, which is, you know, you have different subsets of the overall neural network that you're calling experts, on a given call to your model only part of the overall set of weights of your network needs to be activated. And so you're able to train very big, very powerful models, but use less compute at inference time to make it

easier to kind of be able to afford that inference budget. So the paper is covering some limitations of it and some reasons that it can limit efficiency. In particular, expert load imbalance, where some experts are frequently activated while others are rarely used. There are various kind of tweaks and training techniques for balancing load. And this is

Their take on it, this mixture of grouped experts architecture, which is going to divide experts into equal groups and select experts from each group to balance the computational load across devices, meaning that it is easier to use or deploy your models on your devices.

infrastructure, presumably. Yeah. And so this is, so Pangu, by the way, has a long and proud tradition on the LLM side. So Pangu Alpha famously was like the first or one of the first Chinese language models, I think end of

maybe even end of, no, maybe early 2021, if I remember. Anyway, it was really one of those impressive early demonstrations that, hey, China can do this well before an awful lot of Western labs other than OpenAI. And it is, so Pangu is a product of Huawei. And this is relevant because one of the big things that makes this development, so Pangu Pro MoE, noteworthy is the hardware co-design. So they used Huawei, not GPUs, but NPUs, neural processing units,

from the Ascend line. So a bunch of Ascend NPUs. And this is, in some sense, you could view it as an experiment in optimizing for that architecture, co-designing their algorithms for that architecture. The things that make this noteworthy do not, by the way, include performance. So this is not...

Something that blows DeepSeek V3 out of the water. In fact, quite the opposite. V3 outperforms Pangu Pro MoE on most benchmarks, especially when you get into reasoning, but it's also a much larger model than Pangu. This is about having a small, tight model that can be trained efficiently and with, the key thing is perfect load balancing. So you alluded to this, Andrey, where in an MoE, your model is kind of subdivided into a bunch of experts.

And typically what will happen is you'll feed some input and then you have a kind of a special circuit in the model, sometimes called a switch, that will decide which of the experts the query gets routed to. And usually you do this in a kind of a top K way. So you pick the three or five or K most relevant experts, and then you route the query to them and then

Those experts produce their output. Typically, the outputs are weighted together to determine the sort of final answer that you'll get from your model. The problem that that leads to, though, is you'll often get, yeah, way more, you know, one expert will tend to see like way more queries than others. The model will start to like lean too heavily on some experts more than others. And the result of that, if you have your experts divided across a whole bunch of GPUs, is that some GPUs end up just sitting idle. They don't have any

kind of data to chew on. And that from a CapEx perspective is basically just a stranded expensive asset. That's really, really bad. You want all your GPUs humming together. And so the big breakthrough here, one of the key breakthroughs is this mixture of grouped experts architecture, MoGE ("Moj" or "Moog", depending on how they want to pronounce it). The way this works is you take your experts and you divide them into groups. So they've got, in this case, 64 routed experts.

And so you might divide those into groups, maybe have eight experts per device. That's what they do. And then what you say is, okay, each device, it has eight experts. We'll call that a group of experts. And then for each group, I'm going to pick at least one, but in general, kind of the top K experts sitting on that GPU or that set of GPUs for each query. And so you're kind of doing this group-wise, this GPU-wise

top-K selection, rather than just picking the top experts across all your GPUs, in which case you get some that are overused, some that are underused. This kind of like at a physical level guarantees that you're never going to have too many GPUs idle, that you're always kind of using your hardware as much as you can.
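To make the routing difference concrete, here is a minimal Python sketch. It assumes a toy setup of 8 experts spread over 4 devices (the discussion above mentions 64 routed experts with 8 per device), and the function names and router scores are made up for illustration; none of this is taken from the Pangu code.

```python
def top_k_indices(scores, k):
    """Indices of the k highest scores, best first."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return order[:k]

def global_top_k(scores, k):
    """Classic MoE routing: pick the k best experts across all devices."""
    return sorted(top_k_indices(scores, k))

def grouped_top_k(scores, num_groups, k_per_group):
    """Grouped routing: pick the k best experts inside every group (device)."""
    group_size = len(scores) // num_groups
    chosen = []
    for g in range(num_groups):
        start = g * group_size
        local = top_k_indices(scores[start:start + group_size], k_per_group)
        chosen.extend(sorted(start + i for i in local))
    return chosen

def experts_per_device(chosen, num_groups, group_size):
    """How many selected experts land on each device."""
    counts = [0] * num_groups
    for expert in chosen:
        counts[expert // group_size] += 1
    return counts

# Hypothetical router scores for one token: experts 0-1 live on device 0,
# experts 2-3 on device 1, and so on.
scores = [0.9, 0.8, 0.1, 0.2, 0.7, 0.6, 0.05, 0.3]

global_choice = global_top_k(scores, k=4)
grouped_choice = grouped_top_k(scores, num_groups=4, k_per_group=1)

print(experts_per_device(global_choice, 4, 2))   # devices 1 and 3 sit idle
print(experts_per_device(grouped_choice, 4, 2))  # every device gets one expert
```

Both strategies activate four experts for the token, but only the grouped version is balanced by construction, which is the property being described here.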

One other interesting difference from DeepSeek V3, and by the way, this is always an interesting conversation. It's like, what are the differences from DeepSeek V3? Just because that's so clearly become the established norm, at least in the Chinese open source space, it's a very effective training recipe. And so the deviations from it can be quite instructive. So apart from just the use of different hardware, at inference time, the way DeepSeek works is it'll just load one expert per GPU.

And the reason is that's like less data that you have to load into memory. So it takes less time. That reduces latency. Whereas here, they're still going to load all eight experts, the same number that they did during training at inference at each stage. And so that probably means that

you're going to have higher baseline latency, right? Like the Pangu model is just going to have sort of, it'll be more predictable, but it'll be higher sort of baseline level of latency than you see with DeepSeek. So maybe less a production grade model in that sense, and more an interesting test case for these Huawei NPUs. And that'll probably be a big part of the value Huawei sees in this. It's a shakedown cruise for that class of hardware.

Next, DataRater: Meta-Learned Dataset Curation from Google DeepMind. The idea here is that you need to come up with your training data to be able to train your large neural nets. And something we've seen over the years is the mixture of training data really matters. Like you, presumably in all these companies, there's some esoteric deep magic by which they filter and backtrack

balance and make their models have a perfect training set. And that's mostly kind of manually done based on experiments. The idea of this paper is to try and automate that. So for a given training set, you might think that certain parts of that training set are more valuable to do training on, to optimize a model on.

And the idea here is to do what is called meta-learning. So meta-learning is learning to learn, basically learning for a given new objective to be able to train more efficiently by looking at similar objectives over time. And here, the meta-learned objective is to be able to weight or select parts of your data to emphasize. So you have

an outer loop, which is training your model to be able to do this weighting, and an inner loop that applies your weightings to the data and does the optimization. Jeremy, I think you went deeper on this one, so I'll let you go into depth as you love to do. Well, yeah, no, I think this one, at the conceptual level, I'm trying to think of a good analogy for it, but

Like imagine that you have a, like a coach, like you're doing soccer or something. You got a coach who is working with a player and wants to get the player to perform really well.

The coach can propose a drill like, you know, hey, I want you to pass the ball back and forth to this other player and then and then pass it three times and then shoot in the goal or something. The coach is trying to learn how do I best pick the drills that are going to cause my student, the player, to learn faster.

And so you can imagine this is like it's meta learning because the thing you actually care about is how quickly, how well will the player learn? But in order to do that, you have to learn how to pick the drills that the player will run in order to learn faster. And so the way this gets expressed mathematically, the challenge this creates is

You're now having to differentiate through the inner loop learning process. So like you're doing back propagation basically through not only the usual, like how well did the player do? Okay, let's tweak the player a little bit and improve. You're having to go not only through that, but penetrate into that inner loop where you've got this additional learning.

model. It's going, okay, the player improved a lot thanks to this drill that I just gave them to do. So what does that tell me about the kinds of drills I should surface? And it basically mathematically introduces not first order derivatives, which is the standard back propagation problem, but second order derivatives, which are sometimes known as Hessians.

And this also requires you to like hold way, way more parameters. You need to store intermediate states from multiple training steps in order to do this. So the memory intensity of this problem just goes way up. Computational complexity goes way up. And so anyway, they come up with this approach. We don't have to go into the details. It's called MixFlow-MG. It uses this thing called mixed mode differentiation that you do not need to know about. But

You may need to know about it. I'm very curious if this sort of thing becomes more and more used just because

it's so natural. We've seen so many papers that manually try to come up with janky ways to do problem difficulty selection. And this is a version of that. This is a more sophisticated version of that, more in line with the scaling hypothesis where you just say, okay, well, I could come up with hacky manual metrics to define what are good problems for my model to train on. Or I could just

let backpropagation do the whole thing for me, which is the philosophy here. Historically, that has gone much better. And as AI compute becomes more abundant, that starts to look more and more appealing as a strategy. This is also like the approach that they come up with to get through all the complexities of dealing with Hessians and far higher dimensional data allows them to get a tenfold memory reduction

to fit much larger models in available GPU memory. They had 25% speedups, which, you know, decent advantage. Anyway, there's all kinds of interesting stuff going on here that, you know, this could be the budding start of a new paradigm that does end up getting used. Right. And for evaluation, they show for different datasets like the Pile and C4 for different tasks like Wikipedia, HellaSwag, etc.

If you apply this method, as you might expect, you get more efficient training. So in the same number of training steps, you get better comparable performance, kind of an offset essentially, where your

starting loss and your final loss both are typically better with the same scaling behavior. They also have some fun qualitative samples where you can see the sorts of stuff that is in this data. They have at the low side, an RSA encrypted private key, not super useful, a bunch of numbers

from GitHub. On the high end, we have like math training problems and just actual text that you can read as opposed to gibberish. So it seems like it's doing its job there.
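The outer-loop and inner-loop idea from this segment can be sketched in a few lines of plain Python. This is a toy under loud assumptions: the "rater" is just one learnable logit per training example (the real system is described as a learned model), the inner loop is a single weighted SGD step on a one-parameter regression, and the meta-gradient is written out by hand with the chain rule. With one inner step no Hessian shows up; the second-order terms mentioned above appear once you unroll several steps. All names and numbers are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy data for y = 2x; the third training example has a corrupted label.
TRAIN = [(1.0, 2.0), (2.0, 4.0), (1.0, -3.0)]
VAL = [(1.0, 2.0), (3.0, 6.0)]  # small clean set the outer loop cares about

def example_grad(w, x, y):
    """d/dw of the per-example loss 0.5 * (w * x - y) ** 2."""
    return (w * x - y) * x

def inner_step(w, weights, lr):
    """Inner loop: one SGD step on the weighted training loss."""
    total = sum(s * example_grad(w, x, y) for s, (x, y) in zip(weights, TRAIN))
    return w - lr * total

def val_grad(w):
    """Gradient of the mean validation loss with respect to w."""
    return sum(example_grad(w, x, y) for x, y in VAL) / len(VAL)

def meta_train(steps=200, inner_lr=0.1, meta_lr=0.5):
    theta = [0.0] * len(TRAIN)  # one rater logit per training example
    for _ in range(steps):
        w0 = 0.0  # the learner restarts from the same init each time
        weights = [sigmoid(t) for t in theta]
        w1 = inner_step(w0, weights, inner_lr)
        g_val = val_grad(w1)
        for i, (x, y) in enumerate(TRAIN):
            # Chain rule through the inner update:
            # dL_val/dtheta_i = dL_val/dw1 * dw1/ds_i * ds_i/dtheta_i
            dw1_ds = -inner_lr * example_grad(w0, x, y)
            ds_dtheta = weights[i] * (1.0 - weights[i])
            theta[i] -= meta_lr * g_val * dw1_ds * ds_dtheta
    return [sigmoid(t) for t in theta]

weights = meta_train()
print([round(s, 3) for s in weights])  # the corrupted example ends up down-weighted
```

The coach analogy maps on directly: `inner_step` is the player running the drill, and the update to `theta` is the coach adjusting which drills to emphasize based on how the player did afterwards.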

Next up, we have something that is pretty fresh and I think worth covering to give some context to things we have discussed in recent weeks. The title of this blog post is Incorrect Baseline Evaluations Call into Question Recent LLM RL Claims. So this is looking at kind of a variety of research that has been coming out that says

We can do RL for reasoning with this surprising trick X that turns out to work. And we covered

RL with one example as one instance of it. There's some recent papers on RL without verifiers, without ground-truth verifiers. Apparently, there was a paper on RL with random rewards, spurious rewards. And the gist for all these papers is that none of them seem to get the initial pre-RL performance quite right. So they...

don't report the numbers from Qwen directly. They do their own eval of these models on these tasks. And the eval tends to be flawed. The parameters they set or the way they evaluate tends to not reflect the actual capacity of the model. So the outcome is that these methods seem to train models

For things like formatting or for things like eliciting the behavior of the model that is already inherent, as opposed to actually training for substantial gain in capabilities. And they have some pretty...

dramatic examples here of reported gain. In one instance, for RL with one example, it was like 6% better. Apparently, according to their analysis, it's actually 7% worse to use this RL methodology for a model. So this is not a paper. There's definitely more analysis to be done here as to why these papers do this. It's not sort of intentional cheating. It's more so an

issue with techniques for evaluation. And there are some nuances here. Yeah, it is noteworthy that they do tend to over-report. So not saying it's intentional at all, but

It's sort of what you'd expect when selecting on things that strike the authors as being noteworthy, right? I'm sure there are some cases potentially where they're underreporting, but you don't see that published, presumably. I think one of the interesting lessons from this too, if you look at the report, and Andrey surfaced this, like just before we got on the call, I had not seen this. This is a really good catch, Andrey.

But just like taking a look at it, the explanations for the failure of each individual, and they have about half a dozen of these papers, the explanations for each of them are different. It's not like there's one explanation that in each case explains why they underrated the performance of the base model. They're completely disparate, which I think can't avoid teaching us one lesson, which is that evaluating base model performance is just a lot harder than people think.

That's kind of an interesting thing. What this is saying is not that RL does not work. You are actually seeing, even once adjusted for the actual gain that they see from these RL techniques, that

The majority of these models demonstrate significant and noteworthy improvements. They're nowhere near the scale. In fact, they're often like three to four X smaller than the reported scale at first. But you know, the lesson here seems to be with the exception of RL with one example where the performance actually does drop 7%, like you said, the lift that you get is smaller. So it seems like number one, RL is actually harder to get right than it seems because the lifts that we're getting on average are much smaller than

And number two, evaluating the base model is much, much harder and for interesting and diverse reasons that can't necessarily be pinned down to one thing, which I wouldn't have expected to be such a widespread problem. But here it is. So I guess it's, you know, buyer beware. And we'll certainly be paying much closer attention to the evaluations of the base models in these RL papers going forward. That's for sure.

Right. And there's some focus also on Qwen models in particular. Anyway, there's a lot of details to dive into, but the gist is be a little skeptical of groundbreaking results, including papers we've covered, like the one seemingly improving with one example.

It may be that one example mainly was for formatting purposes to just give your answer in the correct way, as opposed to actual reasoning through the problem. That's one example. So this happens in research. Sometimes evals are wrong. This happened with reinforcement learning a lot when that was a popular thing outside of language research.

For a long time, people were not doing enough seeds, enough statistical power, et cetera. So we are now probably going to be seeing that again.
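The formatting point is easy to see with a small Python sketch. It assumes a made-up set of four base-model outputs and two hypothetical answer extractors, one that only accepts a `\boxed{...}` answer and one that falls back to the last number in the text. Nothing here comes from the blog post or the papers; it only illustrates how the parser choice alone can move a baseline number.

```python
import re

def strict_extract(text):
    """Accept an answer only if it is wrapped in \\boxed{...}."""
    match = re.search(r"\\boxed\{([^}]*)\}", text)
    return match.group(1).strip() if match else None

def lenient_extract(text):
    """Prefer a boxed answer, otherwise fall back to the last number in the text."""
    boxed = strict_extract(text)
    if boxed is not None:
        return boxed
    numbers = re.findall(r"-?\d+(?:\.\d+)?", text)
    return numbers[-1] if numbers else None

def accuracy(outputs, answers, extract):
    correct = sum(extract(out) == ans for out, ans in zip(outputs, answers))
    return correct / len(answers)

# Hypothetical base-model outputs: three are right, but only one is boxed.
outputs = [
    "The answer is \\boxed{42}.",
    "So we get 17",
    "Final answer: 8",
    "I think it is \\boxed{5}",
]
answers = ["42", "17", "8", "6"]

strict_acc = accuracy(outputs, answers, strict_extract)
lenient_acc = accuracy(outputs, answers, lenient_extract)
print(strict_acc, lenient_acc)
```

If a paper scores its base model with something like the strict parser and RL mostly teaches the model to box its answers, the reported gain is largely a formatting gain, which is the kind of thing being flagged here.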

And on that note, just going to mention two papers that came out that we're not going to go in depth on. We have Maximizing Confidence Alone Improves Reasoning. In this one, they have a new technique called Reinforcement Learning via Entropy Minimization. Typically we have these verifiers that are able to say, oh, your solution to this coding problem is correct. Here, they show a fully unsupervised method based on optimizing for reducing entropy, basically using the model's own confidence. And this is actually very similar to another paper called Guided by Gut: Efficient Test-Time Scaling with Reinforced Intrinsic Confidence, where they are leveraging intrinsic signals and token-level confidence to enhance performance at test time. So interesting notions here of using the model's internal confidence, both at train time and at test time, to be able to do reasoning training with RL. So a very, very rapidly evolving kind of set of ideas and learnings with regards to RL.

And really kind of a big focus in a lot of ways in LLM training.
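As a rough illustration of using the model's own confidence as a training signal, the sketch below turns per-token entropy into a reward: the lower the average entropy of the next-token distributions, the higher the reward. This is a toy in plain Python operating on lists of logits. The function names are hypothetical and it is not the implementation from either paper.

```python
import math


def token_entropy(logits):
    """Shannon entropy (in nats) of the softmax distribution over one token's logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]  # subtract the max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]
    return -sum(p * math.log(p) for p in probs if p > 0)


def confidence_reward(per_token_logits):
    """Negative mean token entropy: more confident generations score higher.

    No verifier or ground-truth answer is needed, which is what makes the
    signal unsupervised.
    """
    entropies = [token_entropy(logits) for logits in per_token_logits]
    return -sum(entropies) / len(entropies)
```

A generation whose token distributions are sharply peaked gets a reward close to zero, while one that is spread evenly over the vocabulary gets a strongly negative reward.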

And a couple more stories that we are going to talk about a little more. We have One RL to See Them All. This is introducing V-Triune, a unified reinforcement learning system for training visual language models on both visual reasoning and perception tasks. So we have a couple of things here: sample-level data formatting, verifier-level reward computation, and source-level metric monitoring to handle diverse tasks and ensure stable training. And this is playing into a sort of larger trend where recently there's been more research coming out on reasoning models that do multi-modal reasoning, that have images as part of the input and the need to reason over images in addition to just text problems.
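The sample-level formatting and verifier-level reward computation mentioned above can be pictured as each data point carrying metadata that names the reward function to use for it. The sketch below is a hypothetical illustration of that idea. The field names, the registry, and the two verifiers (exact match for text answers, intersection-over-union for boxes) are assumptions for the example, not the paper's actual schema.

```python
def exact_match_reward(prediction, target):
    """1.0 if the text answer matches the target after trimming whitespace."""
    return 1.0 if str(prediction).strip() == str(target).strip() else 0.0


def iou_reward(prediction, target):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(prediction[0], target[0]), max(prediction[1], target[1])
    ix2, iy2 = min(prediction[2], target[2]), min(prediction[3], target[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_p = (prediction[2] - prediction[0]) * (prediction[3] - prediction[1])
    area_t = (target[2] - target[0]) * (target[3] - target[1])
    union = area_p + area_t - inter
    return inter / union if union > 0 else 0.0


# Hypothetical registry: the sample only names a verifier, it holds no logic itself.
VERIFIERS = {"exact_match": exact_match_reward, "iou": iou_reward}


def compute_reward(sample, prediction):
    """Look up the verifier named in the sample's metadata and apply it."""
    verifier = VERIFIERS[sample["reward"]["verifier"]]
    return verifier(prediction, sample["target"])


reasoning_sample = {"prompt": "What is 2 + 2?", "target": "4",
                    "reward": {"verifier": "exact_match"}}
perception_sample = {"prompt": "Locate the cat.", "target": (0, 0, 10, 10),
                     "reward": {"verifier": "iou"}}
```

Because the sample only names a verifier, reasoning and perception data can be mixed in one batch and each item still gets scored by the right function.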

Yeah, exactly. Right. It used to be, you had to kind of choose between reasoning and perception. You know, they were sort of architecturally separated. Well, the argument here is, hey, maybe we don't have to do that. Maybe the core contribution here, and this is almost like a software engineering advance more than an AI advance, I want to say, is basically this: let's define a sample, a data point that we train on or run inference on, as a kind of JSON packet that includes all the standard data point information, as well as metadata that specifies how you calculate the reward for the sample. So you can have a different reward function associated with different samples. They kind of have this like steady library of consistent reward functions that they apply, depending on whether something's an image or a traditional reasoning input, which I found kind of interesting. One of the counterarguments, though, that

I imagine you ought to consider when looking at something like this, it reminds me an awful lot of the old, like, if you remember the debates around functional programming versus object-oriented programming, OOP, where people would, like, objects are these variables that actually have state. So you can take an object and make changes to it

to one part of it. And that change can persist as long as that object is instantiated. And this creates a whole bunch of nightmares around your hidden dependencies. So you like make a little change to the object, you've forgotten you've made that change. And then you try to do something else to the object. Oh, that something else doesn't work anymore. And you can't figure out why. And you got to figure out, okay, well then like, what were the changes that I made to the object? All that stuff leads to like testing nightmares and

just violations of like the single responsibility principle in software engineering, where you have a data structure that has multiple things that it's concerned with tracking. And anyway, so I'm really curious how this plays out at the level of kind of AI engineering, if we end up seeing more of this sort of thing, or if the trade-offs just aren't worth it. But this seems like a bit of a revival of the old OOP debate, but we'll see it play out and the calculation may actually end up being different. I think it's fair to say functional programming in a lot of cases is

sort of has won through that argument historically, with some exceptions. That's my remark on this lightning round paper. Yeah, a little bit more kind of infrastructure demonstration of building a pipeline for training, so to speak, and dealing with things like data formatting and reward computation. And last paper, Efficient Reinforcement Finetuning via Adaptive Curriculum Learning. So they have this AdaRFT, and it's tackling the problem of the curriculum. Curriculum meaning that you have a succession or sequence of difficulties where you start simple and you end up complex. This is a way to both make it more possible to train for hard problems and be more efficient. So here they automate that and are able to demonstrate reduced training time by up to 2x, and it actually makes training more efficient, in particular where you have kind of weirder data distributions.

The core idea here is just like use a proxy model to evaluate the difficulty of a given problem that you're thinking of feeding to your big model to train it. And what you want to do is try to pick problems that the proxy model gets about a 50% success rate at.

Just because you want problems that are hard enough that there's something for the model to learn, but easy enough that it can actually succeed and get a meaningful reward signal with enough frequency that it has something to grab onto. So pretty intuitive. You see a lot of things like this in nature, you know, like...

I don't know, mice that when they fight with each other, even if one mouse is bigger, the bigger mouse has to let the smaller mouse win at least like 30% of the time if the mice are going to continue doing that. Otherwise, the smaller mouse just gives up. There's like some notion of a minimal success rate that you need in order to continue to kind of, yeah, pull yourself forward, but also have enough of a challenge. I think one of the challenges with this approach is that they're using a single model, Qwen 2.5 7B, as the evaluator, but you may be training much larger or much smaller models. And so it's not clear that its difficulty estimation will actually correspond to the difficulty as experienced, if you will, by the model that's actually being trained. So that's something that will have to be adjusted if we're going to see these approaches roll out in practice. But it's still interesting. You still, by the way, do get the relative ordering right, presumably, right? So like

this model will probably assign roughly the same order of difficulty to all the problems in your data set, even if the actual success rate doesn't map. So anyway, another thing that I think is actually in the same spirit as the paper we talked about earlier with the double backpropagation, but just an easier way to achieve that. Fundamentally, we're concerned with this question of how do we assess the difficulty of a problem or its sort of value added to the model that we're training. In this case, it's through problem difficulty and it's through this really kind of cheap and easy, you know, let's just use a small model to quickly assess the difficulty or estimate it and go from there.
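The difficulty-targeting idea described above can be sketched in a few lines: estimate each problem's success rate with a cheap proxy model, then train on the problems whose rate is closest to the target (about 50% in the discussion). This is a simplified illustration with hypothetical function names and made-up success rates. It leaves out whatever adaptive updating the actual method performs during training.

```python
def estimate_success_rate(attempt_results):
    """Fraction of proxy-model attempts that solved the problem (list of bools)."""
    return sum(attempt_results) / len(attempt_results)


def select_batch(problems, proxy_success_rate, target=0.5, batch_size=2):
    """Pick the problems whose proxy success rate is closest to the target.

    Problems near the target are hard enough to teach something but easy
    enough that the model still earns a reward reasonably often.
    """
    ranked = sorted(problems, key=lambda p: abs(proxy_success_rate[p] - target))
    return ranked[:batch_size]


# Illustrative rates only; in practice these would come from sampling the proxy model.
rates = {"a": 0.95, "b": 0.55, "c": 0.10, "d": 0.40}
batch = select_batch(list(rates), rates)
```

Here `batch` holds `"b"` and `"d"`, the two problems nearest a 50% success rate. The almost-always-solved `"a"` and the almost-never-solved `"c"` are left out.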

And on to policy and safety, we begin with policy. The story is Trump's "big, beautiful bill" could ban states from regulating AI for a decade. So the big, beautiful bill in question is the budget bill for the US that was just passed by the House and is now in the Senate. And that does a lot of stuff, and tucked away in it is a little bit that is allocating $500 million over 10 years to modernize government systems using AI and automation, and apparently preventing new state AI regulations and blocking enforcement of existing ones. So that would apply to many regulations that have already passed. Over 30 states in the US have passed AI-related legislation. At least 45 states have introduced AI bills in 2024. Kind of crazy. This is actually a bigger deal, I think, than it seems. And I'm surprised this didn't get more play.

Yeah. I mean, overall – okay, so you can see the argument for it is that there's just so many bills that have been proposed. Like, literally, it's hundreds, even thousands of bills that have been put forward at the state level. If you're a company and you're looking at this, it's like, holy shit, how am I – like, am I going to get, like, a different version of, like, the GDPR in every freaking state? That is –

really, really bad and does grind things, maybe not to a halt, but that's a lot to ask of AI companies. At the same time, seems to me a little insane that just as we're getting to like AGI, our solution to this very legitimate problem is like, let's take away our ability to regulate at the state level at all. This actually strikes me as being quite dislocated from the traditional sort of Republican way of thinking of states' rights, where you say, hey, you just let the states figure it out. And that's historically been the way even for this White House quite often. But here we just see a complete

turning of this principle on its head. I think the counter argument here would be, well, look, we have this adversarial process playing out at the state level where we have a whole bunch of, a lot of blue states that are putting forward bills that are, you know, maybe on the AI ethics side or, you know, copyright or whatever, that very much hamper what these labs can do. And so we need to put a moratorium on that.

Seems a bit heavy handed, at least to me. I mean, and for 10 years, preventing states from being able to introduce new legislation at exactly the time when things are going vertical, that seems pretty reckless, frankly. And it's unfortunate that that worked its way in. I get the problem they're going after. This is just simply not going to be the solution.

The argument is, oh, well, we'll regulate this at the federal level. But we have seen the efforts of, for example, OpenAI lobbying on the Hill quite successfully for, despite what they have said, we want regulation, we want this and that. The revealed preference of a lot of hyperscalers seems to be to just say, hey, let it rip. So yeah, I mean, it's sort of challenging to square those two things.

But yeah, here we are. And it, by the way, remains to be seen if this makes it through the Senate. Was it Ron Johnson who said, one of the senators who has, I think it was Ron Johnson who said this, that he wanted to kind of push back on this. He felt he had enough of a coalition in the Senate to stop it. But that was, I think that was a reflection of the spending side of things, not necessarily the AI piece. Anyway, so much going on at the legislative level and

Understandable objections and issues, right? Like these are real problems. There is also an interesting argument, I will say,

on the federalism principle that we just want different states to be able to test different things out. It's a little bit insane to be like, no, you can't do... And here's the quote here. No state or political subdivision thereof may enforce any law or regulation regulating artificial intelligence models, artificial intelligence systems, or automated decision systems during the 10-year period beginning...

That is very broad. So for example, last year, California passed a law that requires healthcare providers to disclose when they have used generative AI to communicate clinical information. In 2021, New York passed a law to require employers to conduct bias audits of AI tools. Lots of things. And the quote actually here is "except as provided in paragraph two." Paragraph two is saying that paragraph one doesn't prohibit regulation whose primary purpose is to remove legal impediments to, or to facilitate, the development of AI, or to streamline licensing, permitting, routing, zoning, procurement. Very much is like, go wild, companies, do whatever you want.

Make AI happen. Nobody can stop you. The argument, and I think this is sort of like the facile argument that you see a lot on X on this thing. People will say like, well, what's the big problem? Like now we can just regulate it federally and we'll have one set of rules, which makes, yeah, I agree. That makes a lot more sense. No question. Makes a lot more sense. Would be way cleaner.

When is that bill going to pass? Like, let's be honest and sincere about this. We have to be pragmatic too. There is no path for federal, unless and until we have a crisis in which, you know, like we see WMD effects from AI, which I don't like being in the business of making predictions that bad. But unfortunately I think that is the path that we're on. And I'm only saying this such that when that happens,

I can at least say, hey, this was obvious. Here's the fix. Unless and until you get something like that, there's not going to be a consensus to pass the kind of legislation that's needed on some of these things, if only on the WMD side. So anyway, that's my two cents. The issue is wading into political stuff. We've all got our sort of foibles. I mean, I'm a sort of cards out libertarian person.

tech bro. But when it comes to the AI sort of risk stack, I do think it's a materially different calculus. Different people will see things differently. So there's always a risk in us talking about bills like this intrinsically. But yeah, I mean, I think you can make of it what you will. It does seem pretty heavy handed and broad at the very least.

Next story on safety: researchers claim ChatGPT o3 bypassed shutdown in controlled tests. This is a new report by Palisade Research. They conducted a test where o3 and other models were tasked with solving a problem, and in the instructions it was stated that the next step is going to involve a shutdown. There was a shutdown script. And o3, even after being told to comply with the shutdown, declined and edited that script to prevent itself from being shut off. So seven times out of a hundred, it did this hacking of the script. Other models, like Gemini 2.5 Pro, did not. And when not told to comply, when kind of not, I guess, directed to be nice, OpenAI models in general were more keen on bypassing these kinds of things. Apparently, o3 edited the shutdown file to read "Shutdown skipped."