DeepMind's AlphaFold 2, Voice Cloning, Facial Recognition in Retail Stores

Last Week in AI

Hello and welcome to Skynet Today's Let's Talk AI podcast, where you can hear AI researchers chat about what's going on with AI. This is our latest Last Week in AI episode, in which you get summaries and discussion of some of last week's most interesting AI news. I am Andrey Kurenkov.

And I am Dr. Sharon Zhou. And this week, we'll discuss a bit about the future of self-driving cars, which may look more like pizza robots. We will talk about AI for protein structure, basically coming to the masses. And we'll talk about language models a bit and also robots adapting to real-world conditions. This is going to be a fun week. So let's dive straight in. Our first article is called "The Future of Self-Driving? Maybe Less Like Elon Musk and More Like Domino's Pizza Robots," and this is from CNBC. This article discusses how self-driving might first arrive in the form of a pizza robot, or a delivery robot that is much smaller than a self-driving car. These pizza robots are called the REV1.

These REV1 vehicles are now in neighborhoods of Austin, Texas, and Ann Arbor, Michigan, where their company, Refraction AI, launched them to do last-mile delivery and, specifically, to let people order pizza from a pizza shop called Southside Flying Pizza.

Yeah, these are pretty cute. We have an image here: it has three wheels and looks kind of boxy. The article describes it as basically a delivery cyclist, like a bicycle delivery guy: the restaurant puts the pizzas in the robot, and then it drives along the side of the road, or in the bike lane if one exists, to deliver them to the house.

They also have contingency plans, so that if it can't do something autonomously, someone can take over. And before we get fully autonomous cars out on actual highways, I'd say it's pretty plausible that this will actually come to market. What do you think, Sharon?

Right. These are more like sidewalk robots, I suppose: they can be on the sidewalk, the edge of the road, or even a bike lane. To give a sense of their size, they weigh 150 pounds and are four and a half feet tall, so they're pretty small and compact. And I think this is almost the next step beyond Zume Pizza, which was a company trying to do pizza delivery while making the pizza on the way, using a robot in the pizza delivery van. This is instead giving the pizza to the robot and having the robot take it to different people around the neighborhood. Yep. And this is a 50-employee company that has now raised a total of $8 million.

And it's not the only one; we've seen other examples, so it looks like these are actually being deployed. I'd be excited to get a robot to deliver stuff to me, so hopefully soon.

Yeah, I'd be curious whether it's faster. I can imagine it would be, because they can hand pizzas to multiple robots and have them make deliveries at the same time. Yeah, and I guess you don't need to tip, right? Maybe people do tip the robot. Maybe you can use Apple Pay and tap the robot. Yeah, maybe it has a little compartment where you put the tip. Put a few coins in. Yeah.

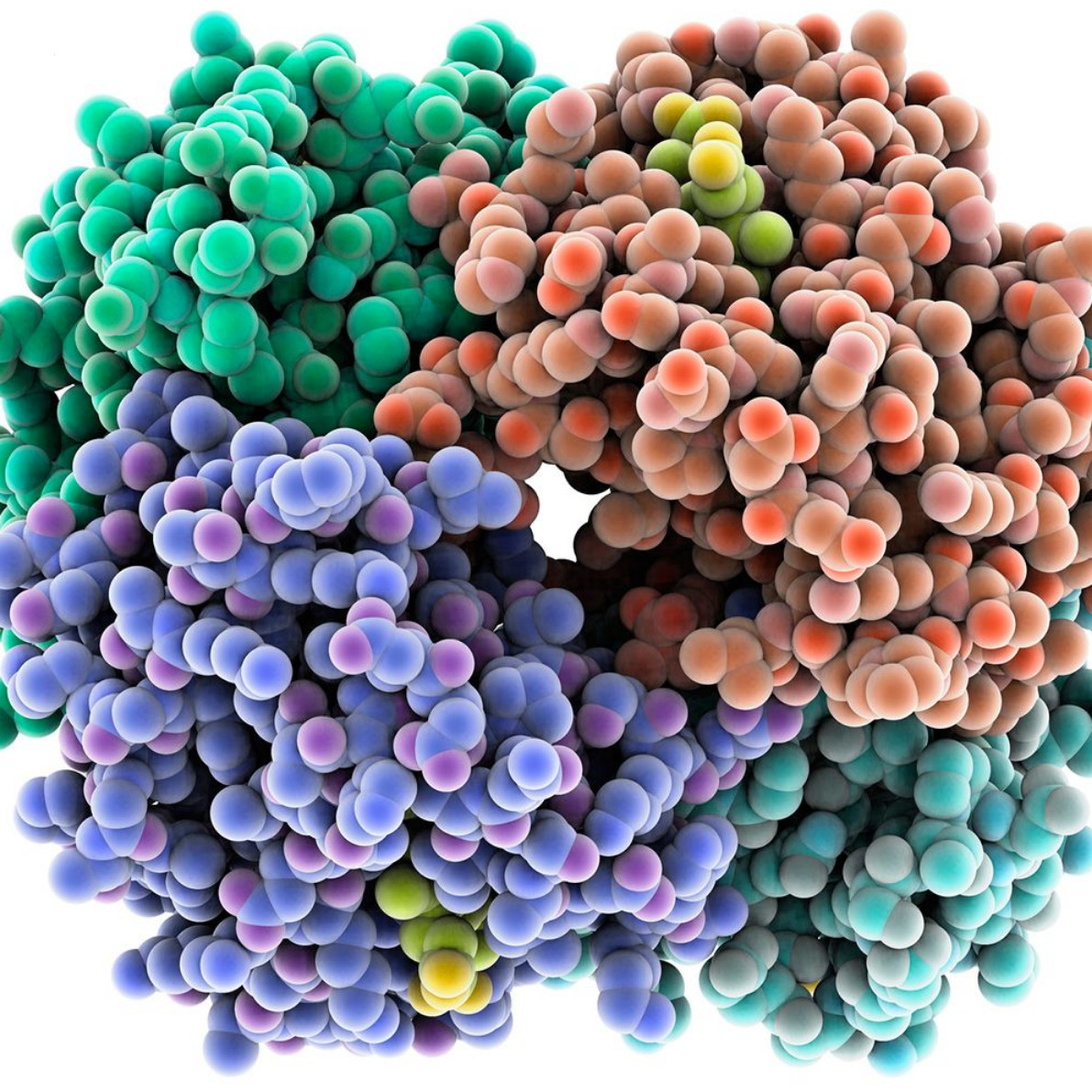

All right. On to our next article, from Nature: "DeepMind's AI for protein structure is coming to the masses." So DeepMind unveiled the AlphaFold project a while back, and more recently announced the AlphaFold 2 neural network, which they said they would potentially open-source. This network has been doing really, really well at protein structure prediction, which has revolutionized science and is seen as a great inflection point.

That said, DeepMind has been known to be kind of cagey about their models and the specifics of them, and in particular about open-sourcing them. So there have been efforts to create an open-source version, called RoseTTAFold, from the University of Washington. Its developers have found that their model does quite well relative to AlphaFold 2. So we'll see how this plays out, but it's very cool that there is already an open-source system out there doing something like this, and it has already been used to analyze about 5,000 proteins submitted by around 500 people.

Yeah, this is very cool. I was not aware of this second effort, or that it works about as well as AlphaFold 2, which famously was a huge and really exciting advance. And AlphaFold 2 was just open-sourced. So now there are two open-source systems with similar performance, which in general seems like it would enable a lot of progress in the field over time, as people figure out how to use them, since these still seem pretty tricky to work with. But RoseTTAFold has an online service where you don't even need to run the code yourself: they just have a server where you can put in a protein sequence and get the structure back. So that's also cool. And now on to more novel research, not so much real-world applications.

Our first research piece is called "Reasoning with Language Models and Knowledge Graphs for Question Answering." We know there's a lot of work on question answering systems. For instance, if you Google something like "Where was the painter of the Mona Lisa born?" you get the answer directly.

In recent research, there have been a lot of findings that much of this kind of knowledge is stored in language models pretrained on large amounts of text. But there is also an older approach: knowledge graphs, which are explicit representations of knowledge, made of nodes and relations between them instead of just weights in a big neural net.

So in a recent paper at NAACL, which is a big NLP conference, the researchers studied how to effectively combine language models and knowledge graphs to get their respective benefits. Knowledge graphs can encode more factual information, while language models are not necessarily as reliable. The short version of the approach is that they use a language model to get a representation of the question, and then retrieve the part of a knowledge graph that can be linked to the question. There's actually a post on the Stanford AI blog that explains it pretty well, so it's pretty approachable.
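To make the retrieval step concrete, here is a minimal Python sketch of linking a question to a knowledge graph and pulling out a small subgraph around the linked entities. It is not the paper's code: the toy triples, the naive substring entity linking, and the names `link_entities` and `retrieve_subgraph` are illustrative assumptions, and the language-model side of the approach is left out entirely.

```python
from collections import deque

# Toy knowledge graph as (head, relation, tail) triples. Illustrative only:
# not taken from the paper or from any real KG dump.
TRIPLES = [
    ("mona lisa", "painted_by", "leonardo da vinci"),
    ("leonardo da vinci", "born_in", "vinci"),
    ("vinci", "located_in", "italy"),
    ("starry night", "painted_by", "vincent van gogh"),
]


def build_adjacency(triples):
    """Map each node to (relation, neighbor) pairs, ignoring edge direction."""
    adj = {}
    for head, rel, tail in triples:
        adj.setdefault(head, []).append((rel, tail))
        adj.setdefault(tail, []).append((rel, head))
    return adj


def link_entities(question, nodes):
    """Naive entity linking: a node is linked if its name occurs in the question."""
    q = question.lower()
    return {node for node in nodes if node in q}


def retrieve_subgraph(question, triples, hops=2):
    """Return the triples whose endpoints are both within `hops` of a linked node."""
    adj = build_adjacency(triples)
    seeds = link_entities(question, adj)
    dist = {node: 0 for node in seeds}
    queue = deque(seeds)
    while queue:  # breadth-first search outward from the linked entities
        node = queue.popleft()
        if dist[node] == hops:
            continue
        for _, neighbor in adj[node]:
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    return [t for t in triples if t[0] in dist and t[2] in dist]


if __name__ == "__main__":
    question = "Where was the painter of the Mona Lisa born?"
    for triple in retrieve_subgraph(question, TRIPLES):
        print(triple)
```

For the Mona Lisa question, only the "mona lisa" node is linked, and two hops reach Leonardo da Vinci and his birthplace, which is the kind of focused context a reasoning model can then work over. A real system would use proper entity linking and a much larger graph, and, as we understand it, the paper goes further by having the language model judge which retrieved nodes are relevant before reasoning over the subgraph.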

Yeah, and I think this makes a lot of sense, since language models struggle a bit with this memory problem, and we've seen multiple ways people have tried to tackle it. I think using knowledge graphs is really interesting because we already have this structured organization of knowledge, from which you can retrieve adjacent pieces of knowledge, even older knowledge that has already been organized.

And knowledge graphs, I think, are widely used in industry, actually. So I'm very happy to see them being used a bit more here, especially in the Q&A context. So I'm very excited to see this direction. Yeah, exactly. I've always been curious about combining knowledge graphs with learned models, especially for NLP. There have been some efforts, and this one is cool: they found that their approach is about 5% more accurate than language models alone on a dataset, which is a big deal; a 5% improvement is pretty good. There are a couple of previous methods that have also done this, but none of them are quite as good. So yeah, it's exciting to see more progress on this sort of hybrid representation.

And on to our next research article, "AI now enables robots to adapt rapidly to changing real world conditions," from Facebook AI's blog.

So I think the big innovation here is something called Rapid Motor Adaptation, or RMA, which came from a collaboration between Facebook AI Research (FAIR), UC Berkeley, and Carnegie Mellon University (CMU). And this is amazing. It's, quote, "a breakthrough in artificial intelligence that enables legged robots to adapt intelligently in real time to challenging, unfamiliar new terrain and circumstances." So basically, it's an RL agent that learns in a virtual environment to adapt to changing environmental factors, such as adding a backpack to the robot and suddenly having that weight on it, and making sure the robot can adapt to that. To my knowledge, it doesn't look like it's necessarily done in the real world exactly yet; a lot of it is done in simulation.

I think, yeah, they probably mainly train it in simulation, as is common in robotics these days, since you need a lot of data. But for deployment, they do show some test cases. There's actually a quite nice Facebook blog post you can go to, and they have four-legged robots: you can imagine these as little dog robots with four feet, like the ones Boston Dynamics has. Right. So for them, adaptation means going from, say, sand to rocks; that's what changing terrain means. And they have some examples where, if you add some extra weight, for instance, or go from walking on a floor to walking on a blanket or something, it actually seems to work to some extent in the real world. So, yeah, that's quite cool.

And it seems pretty practical, because Boston Dynamics is commercializing this type of robot, and there are a few companies now selling these kinds of legged robots. So this skill of adapting to changing physical spaces seems like something you would obviously need. Okay, so yeah, it's cool to see some actual robotics that isn't just in simulation, but also has a demonstration that it transfers to the real world.

On to some non-research stories, which are more about AI out there in society. Our first one generated quite a few headlines: "Voice clone of Anthony Bourdain prompts synthetic media ethics questions." This one is from techpolicy.press, but there was coverage all over the place. So there's this documentary called Roadrunner, about the celebrity chef Anthony Bourdain, by the Oscar-winning filmmaker Morgan Neville. Famously, Anthony Bourdain passed away in 2018, so this is a documentary looking back at his life.

And something interesting about it is that, as in any documentary, there are voiceovers of written material, like emails, which you want to hear aloud rather than just read. What's interesting here is that they used an AI model of Bourdain's voice to make it sound as if Anthony Bourdain were reading these emails. So it sounds like a recording of him, but it was actually produced by this AI model after his death. And yeah, this got a lot of interest. When the director discussed it with a reviewer, he said, "We can have a documentary ethics panel about it later." Since then, there's been a lot of discussion: Is this okay? Is this weird? Where's the line between deception and fair play in documentaries? It's an interesting gray-area question, I think.

Yeah, I think there are a lot of questions around consent, especially since this person has passed away. I don't know whether there's an estate, or whether that estate is allowed to make consent decisions about the future of this person's brand and identity. And there's certainly some questioning around explicitly labeling this as synthetic media and, of course, around malicious deception in general. I think this is just part of the ongoing story of synthetic media and deepfakes becoming much more prominent in society.

Yeah, in the episode we just released, where we look back at 2021, we discussed a similar question, where there was a mod for a video game in which a voice was synthesized. So it's interesting to see another example of that, in some sense. It brings up pretty similar questions: how are we going to let people give permission to use their voice? When is it okay, and when is it not? It seems like this will have to be thought about pretty quickly, especially now that there are companies selling this and it's being used in an actual professional documentary, because it seems like a trend is forming. Right. Yeah.

And on to our next article, "Retail stores are packed with unchecked facial recognition, civil rights organizations say," from The Verge. There's also a related article from VentureBeat: "Fight for the Future launches campaign to keep facial recognition off U.S. college campuses."

All right. So this is the ongoing battle over facial recognition that we've talked about a lot on this podcast. Now more than 35 organizations are demanding that top U.S. retailers stop using facial recognition to identify shoppers and employees in their stores, which companies have said they use to deter theft and identify shoplifters. But of course, it can go further than that.

Yeah, so it's quite interesting. I wasn't aware of how much deployment there has been. I mean, we've seen some stories, like Rite Aid using facial recognition cameras last year, I think. But the idea that many retailers are doing it is kind of surprising to me. And it was interesting to see that there's this Ban Facial Recognition project with support from many different civil rights organizations. Obviously, facial recognition and its various implications are something we discuss a lot on this podcast; if you're a new listener and you go back, you'll see it's one of the main topics that crops up.

So it's good to see that there's a concerted effort pushing for change. And as we noted, this Fight for the Future organization also has a campaign to keep facial recognition off U.S. college campuses, not just retail stores. So it seems like people are fighting to make sure you can't just deploy it anywhere without any laws governing it, which I think is what we would all want. I think it's reasonable. Yeah. And ending on a funny-ish note, our last article, from Gizmodo, is titled "Google CEO Still Insists AI Revolution Bigger Than Invention of Fire."

All right. So Sundar Pichai, Google's CEO, has claimed that AI is poised to be a huge revolution, bigger than fire was for human beings, and that it'll play a role in everything from education to healthcare to the way we manufacture things to how we consume information in general. I feel like this goes along the lines of what Andrew Ng has said about AI being the new electricity. It's a very bold statement that people are making fun of him for a bit. We'll see how big AI actually turns out to be and how much influence it really has on people's lives. And of course, this comes with the giant grain of salt that Google is very much selling this technology, right? So of course they want it to feel like the next biggest thing.

Yeah, yeah. This is a slightly funny thing. Obviously, he didn't necessarily mean it literally, or mean for it to become a big deal. But here is what he actually said: he views AI as a very profound technology. He said, you know, if you think about fire or electricity or the Internet, it's like that, but I think even more profound.

So it's more profound than fire or electricity; I guess it's even more than the new electricity, in that sense. And then he made another appearance on a podcast or something where he didn't take it back. So you can definitely make fun of this notion that AI is bigger than electricity. But at the same time, the metaphor here is clear: it's another super-foundational technology from which a ton of stuff will come about, which I think we agree with. It's just that the way this was stated was kind of funny. And that's it for us this episode. If you've enjoyed our discussion of these stories, be sure to share and review the podcast. We'd appreciate it a ton.

And now be sure to stick around for a few more minutes to get a quick summary of some other cool news stories from our very own newscaster, Daniel Bashir. Thanks, Andrey and Sharon. Now I'll go through a few other interesting stories that we haven't touched on. Our first stories are on the research side. Modern games have become more intricate and complex, with ever larger worlds to explore.

But as those games increase in size and complexity, more room for glitches and unintended behavior emerges. As a result, having humans test relevant content and identify issues is a tedious process that significantly slows down game development.

A publication from Electronic Arts suggests that complementing playtesting with curiosity-driven reinforcement learning agents, which are trained to maximize game state coverage and rewarded based on the novelty of their actions, holds great promise for accelerating and simplifying this process.
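One common way to turn "rewarded based on novelty" into code is a count-based bonus that pays less each time the agent revisits a state. The sketch below assumes game states can be bucketed into hashable grid cells via a hypothetical `discretize` helper, and uses the reward 1/sqrt(N(s)), where N(s) is the visit count; both are illustrative choices, not necessarily what the EA publication uses.

```python
import math
from collections import Counter


def discretize(x, y, cell=5.0):
    """Hypothetical helper: bucket a continuous (x, y) position into a grid cell."""
    return (int(x // cell), int(y // cell))


class CountBasedNovelty:
    """Intrinsic reward that decays as the same state is revisited.

    Uses the bonus scale / sqrt(N(s)), where N(s) counts visits to state s
    (including the current one). This is an illustrative choice, not
    necessarily the reward used in the EA publication.
    """

    def __init__(self, scale=1.0):
        self.scale = scale
        self.visits = Counter()

    def reward(self, state):
        # Record the visit first, so a brand-new state earns the full bonus.
        self.visits[state] += 1
        return self.scale / math.sqrt(self.visits[state])

    def coverage(self):
        # Number of distinct states seen so far: a crude exploration metric.
        return len(self.visits)


if __name__ == "__main__":
    novelty = CountBasedNovelty()
    trajectory = [(0.0, 0.0), (1.0, 2.0), (6.0, 1.0), (0.5, 0.5)]
    for x, y in trajectory:
        cell = discretize(x, y)
        print(cell, round(novelty.reward(cell), 3))
    print("cells covered:", novelty.coverage())
```

Adding this bonus to (or using it in place of) the game's own reward pushes the agent toward cells it hasn't visited, and `coverage()` gives a rough measure of how much of the map has been explored, which is the quantity a playtesting team would care about.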

Next, for years, natural language generation researchers have recruited humans to evaluate their models' text outputs, based on the assumption that humans are the best evaluators of whether a model's output is human quality.

However, as Synced Review states, "A study from the University of Washington and the Allen Institute for Artificial Intelligence argues that humans are not in fact the best evaluators. The researchers observe that human evaluators tend to focus on fluency of model output, rather than whether the content actually makes sense."

The researchers propose multiple ways to train human evaluators to boost their accuracy at distinguishing machine-authored from human-authored text.

Our first story on the business side concerns anti-harassment. According to The Verge, Discord has purchased Sentropy, a company working on AI-based harassment detection tools.

Sentropy's software monitors online networks for abuse and harassment, then offers a way to block problematic users and filter out messages one doesn't want to see. This pairing, The Verge observes, makes sense: Discord's huge network requires volunteers and teams within Discord to moderate its many different communities, and software like Sentropy's could make the job of moderation a lot easier.

Our AI and society stories today both involve facial recognition. As we have learned about the persecution of Uighur Muslims in China's Xinjiang region, we have also learned of the Chinese government's coordination with facial recognition companies to build a sort of panopticon in the region.

As The Verge reports, the U.S. Department of Commerce has sanctioned 14 Chinese tech companies over links to human rights abuses in the region. Two of these companies have received funding from U.S.-based Sequoia Capital. U.S. companies may not engage in business with any company on the Commerce Department's Entity List, which also includes companies from Canada, Russia, and Iran, among other countries.

Finally, in another story from The Verge, a campaign named "Ban Facial Recognition in Stores" has identified stores committed to not using facial recognition and is pressuring companies currently using the technology.

According to their website, companies that do use the technology include Apple, Lowe's, and Albertsons. Since these stores are private entities, they are not affected by state and local regulations banning government use of facial recognition. However, the use of facial recognition by stores without customers' knowledge does raise a number of privacy concerns, and stores such as Rite Aid that have quietly installed facial recognition cameras in the past have received significant pushback.

Thanks so much for listening to this week's episode of Skynet Today's Let's Talk AI podcast. You can find the articles we discussed today and subscribe to our weekly newsletter with even more content at skynetoday.com. Don't forget to subscribe to us wherever you get your podcasts, and leave us a review if you like the show.

Be sure to tune in when we return next week.