Malware in AI models, Algorithmic Bias Bounty, Robot Beach-Cleaners and Beehives

Last Week in AI

Hello and welcome to Skynet Today's Let's Talk AI podcast, where you can hear AI researchers chat about what's going on with AI. This is our latest Last Week in AI episode, in which you get summaries and discussion of some of last week's most interesting AI news.

I'm Dr. Sharon Zhou. And I am Andrey Kurenkov. And this week, we'll discuss some robots for cleaning up beaches and keeping up beehives, some research on malware in AI and translation in different languages, and some more on facial recognition and bias, as we often do.

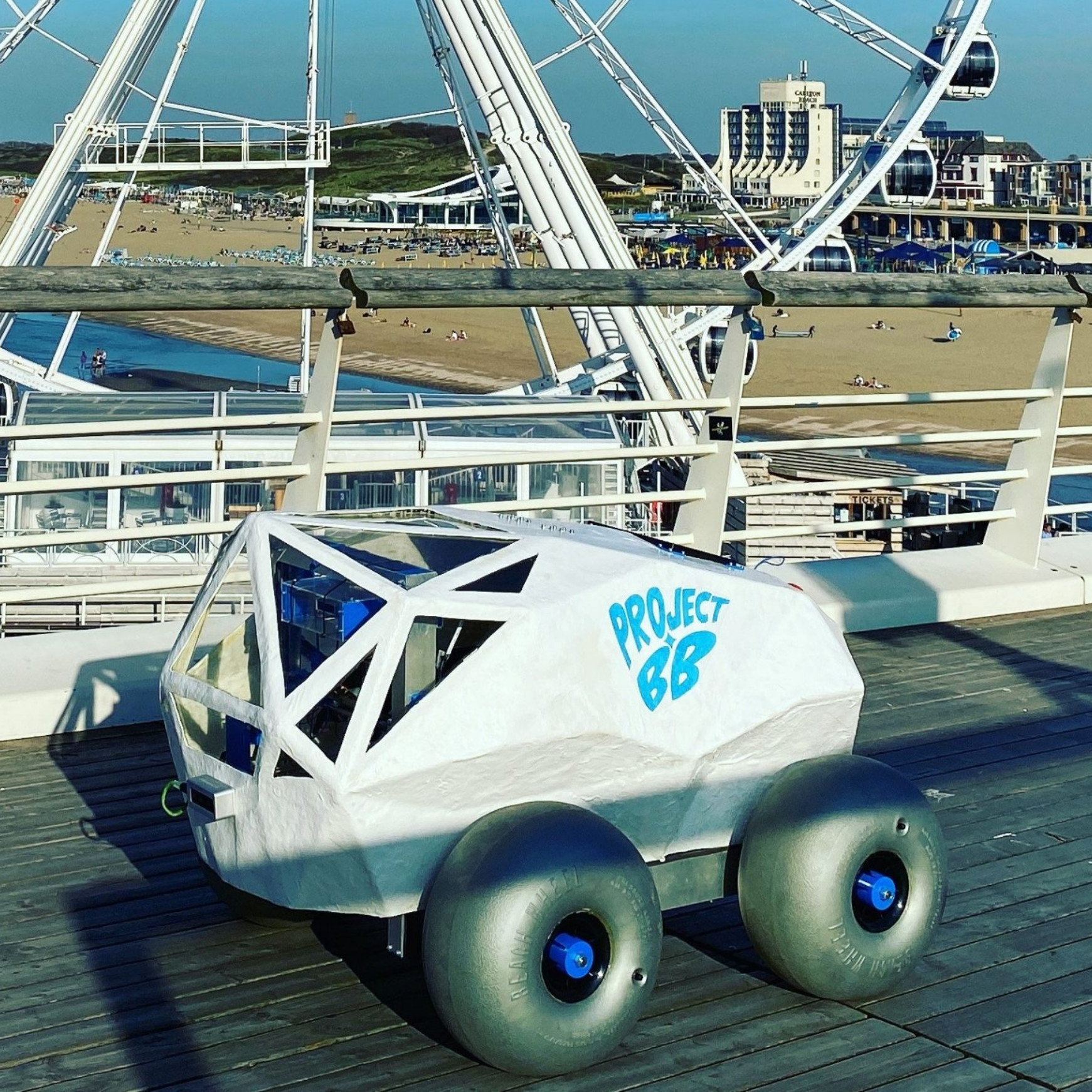

Let's dive straight in. First up, on the application side, we have our first news story, titled "Meet BeachBot, a beach rover that uses AI to remove cigarette butts from beaches." So pretty much as the title says, there's a cute little robot. It's about a meter wide and about a meter tall and has these big bumpy eyes, and it drives around and uses computer vision to detect cigarette butts and pick them up and move them away from the beach.

So it's a fairly simple robot. It just has these two cameras and was built by two entrepreneurs, Edwin Bos and Martijn Lukaart. It has been demonstrated once and is going to be demonstrated again. So, you know, not a huge deal, not going to change the world, but it's a neat project and certainly a useful application for cleaning up beaches.

I think it's very cute. There's a lot of beach pollution and beach trash, and this is kind of the start, grabbing some cigarette butts. I imagine they're going to start cleaning more fully. The BeachBots are very small, and they're pretty cute, kind of roaming around the beach. And I can see beach cleaning being the type of task we're open to automating, especially since a lot of beaches are not that clean.

Yeah, exactly. I found it interesting that right after this news story about BeachBot, a few days to a week later, there was a second news story about another beach-cleaning robot, BeBot, which is a bit bigger and is more like a tractor that trails this thing behind it and isn't autonomous. It's remote controlled, but it has a similar function and is a bit more bulky and industrial looking. So it's interesting to see how you can engineer different solutions for the same problem and have different types of automation that can attack this problem.

Yeah, definitely. And to be clear, the BeBot is remotely controlled by a human operator. What's cool is that it is partially powered by solar, which makes sense because it's on a beach. Yeah, so trying to keep everything clean, including the energy.

And onto our next article, from Forbes: "Here is a fully autonomous AI-powered beehive that could save bee colonies." All right. So beehives are pretty hard to maintain. And so Beewise is an agtech startup that was created to build this fully autonomous beehive that is AI-powered and basically is a beekeeping robot, and it can help maintain the bees all by itself. It's raised $38.7 million in funding to date, which is pretty exciting.

And this is just such an important area. Bees have not been doing super well worldwide, but we need them very much. And so this is super exciting. And again, this is entirely solar powered. Yeah, exactly. It's pretty interesting. If you look at it, it looks like a really big storage cabinet of sorts, with a little door on it.

And it can manage 24 hives at a time. Each hive has 30 honeycombs, so that's 720 honeycombs in each device, which is one to two million bees in total for one of these. It also has a variety of different AI things going on, so it collects a lot of information on how well the bees are doing.

And it has some robotic components that allow intervention to improve things. They say it reduces bee mortality by 80%, which increases yields a bunch, and it cuts manual labor by 90%.

So beekeepers can use this to augment their work and basically do a better job to make up for, well, obviously there's this big issue of like 35% mortality rate. So it does seem like a really, really useful application of AI.

Yeah, I think it's extremely exciting. I've visited a bee farm before, and it is a lot of manual labor. Yeah, especially for these industrial ones. Individual beekeepers maybe are fine, but when you get to this large scale, it can get pretty tricky, and you need tens of beekeepers and a lot of labor. So it definitely seems like a useful problem to address. And they seem to be doing a smart job of it, not just marketing themselves as AI when it's something simple.

And on to the research side, we have "Researchers demonstrate that malware can be hidden inside AI models." So there's this fun-titled paper called "EvilModel: Hiding Malware Inside of Neural Network Models." It was published recently, and it shows that you can do a form of steganography, which is a way of hiding messages inside of different materials. You can do this with photographs, and now you can do this with neural networks by basically using some of the weights in the network to encode the malware. They demonstrated this with one of the classic models, AlexNet.

You can have it still perform its function while altering it to encode malware in its content. So out of 180 megabytes of a model, you can put in 40 megabytes of malware and it still does its job about the same.
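To make the idea of hiding bytes in network weights a bit more concrete, here is a minimal sketch. It is not the paper's actual scheme: it assumes 32-bit float weights and overwrites only the lowest mantissa byte of each one, and the names `embed` and `extract` are hypothetical helpers made up for illustration. The payload here is just a harmless byte string.

```python
import struct

def embed(weights, payload):
    """Hide payload bytes in the lowest mantissa byte of float32 weights.

    Illustrative assumption: one payload byte per weight. Weights are
    treated as little-endian float32 values.
    """
    if len(payload) > len(weights):
        raise ValueError("payload is larger than the number of weights")
    out = list(weights)
    for i, byte in enumerate(payload):
        raw = bytearray(struct.pack("<f", out[i]))
        raw[0] = byte  # little-endian: index 0 is the least significant byte
        out[i] = struct.unpack("<f", bytes(raw))[0]
    return out

def extract(weights, n):
    """Read back the first n hidden bytes."""
    return bytes(struct.pack("<f", w)[0] for w in weights[:n])

weights = [0.5, -1.25, 3.0, 0.001, 2.5, -0.75]
stego = embed(weights, b"hi!")
recovered = extract(stego, 3)
```

Because only the least significant bits of each weight change, every value moves by a tiny relative amount, which is roughly why a model altered this way can keep doing its job about the same.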

This is obviously a big issue if we can hide a lot of problems inside our neural networks, especially as more and more neural networks are being deployed. So not great. And hopefully we find ways to beat this in the cat-and-mouse game of security. Yeah. And it did show that antivirus programs couldn't detect the virus, which I guess isn't surprising. The good thing is this only shows a way to encode the virus. It doesn't execute the virus until you decode it. So not the most threatening kind of demonstration, but it does show, I guess, how you can hide things inside neural nets. And I could see stuff like this being built upon in interesting ways.

Right, right. It could make it easier to just get the malware there, and then something else could trigger an executable. And onto our next article: "Facebook AI releases VoxPopuli, a large-scale open multilingual speech corpus for AI translations in NLP systems." This is from MarkTechPost.

So basically Facebook released this dataset called VoxPopuli, which means "voice of the people" in Latin. It's a collection of audio recordings in 23 different languages, which amounts to 400,000 hours of speech. This is to help with the development of new NLP systems, specifically in speech and audio, which is, I think, pretty big in the sense that we don't have as many nice audio and speech datasets. All of this data was taken from publicly available European Parliament event recordings. And they basically built out the pipeline to segment the recordings by speaker and by silences and clean up that data into a nice dataset.
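As a rough illustration of what segmenting a recording by silences can look like, here is a minimal sketch. It is not Facebook's pipeline: the function name `split_on_silence`, the amplitude threshold, and the minimum silence length are all assumptions chosen for the example, and the "audio" is just a short list of amplitude values.

```python
def split_on_silence(samples, threshold=0.02, min_silence=3):
    """Return (start, end) index pairs of non-silent runs; end is exclusive.

    A run ends once at least `min_silence` consecutive samples fall
    below `threshold` in absolute value. Shorter pauses stay inside
    the segment.
    """
    segments = []
    start = None  # start index of the segment currently being built
    quiet = 0     # length of the current run of quiet samples
    for i, s in enumerate(samples):
        if abs(s) >= threshold:
            if start is None:
                start = i
            quiet = 0
        elif start is not None:
            quiet += 1
            if quiet >= min_silence:
                # Close the segment just after its last loud sample.
                segments.append((start, i - quiet + 1))
                start, quiet = None, 0
    if start is not None:
        # Audio ended mid-segment; trim any trailing quiet samples.
        segments.append((start, len(samples) - quiet))
    return segments

audio = [0, 0, 0.5, 0.4, 0, 0.3, 0, 0, 0, 0.6, 0.7, 0]
segments = split_on_silence(audio)
```

A real pipeline would work on windows of audio energy rather than single samples and would combine this with speaker information, but the basic idea of cutting wherever a long enough quiet stretch appears is the same.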

Yeah, exactly. This is cool because speech databases in particular are pretty rare still and mostly are for English. So here there's 23 different languages. And it's interesting, they actually used all available speeches between 2009 and 2020 of the parliament discussions. So a lot of data, as you can imagine.

And they also demonstrated that this isn't just useful for targeting new languages: using self-supervised training on the unlabeled data, you can pre-train a model and then fine-tune it for better results. So, yeah, it's really neat to have this dataset in a space where there aren't that many public datasets yet.

And onto some more society-facing AI problems, or ethical problems, we have "China built the world's largest facial recognition system. Now it's getting camera-shy."

So this is from the Washington Post, and it's a slightly misleading title. This is generally about how there's been a recent crackdown on the use of facial recognition by private businesses in China, not so much by the government itself. This came about because Guo Bing, a law professor in the Chinese city of Hangzhou, paid for an annual pass to a zoo, and then later the zoo told him that he would be required to have his face scanned in order to enter. This seemed unreasonable, so the professor sued the zoo in 2019, and the legal process took some time. But just this last week, there was a ruling that basically cracks down on the use of facial recognition by private businesses such as the zoo, as well as hotels, shopping malls, and so on. That's pretty interesting given that the perception, and to some extent the reality, is that facial recognition is pretty commonplace in China.

Yeah. So basically it's not the government's facial recognition going away. It's commercial companies' facial recognition that now requires consent from customers before the companies can acquire that information for themselves. And I think this is still aligned with how the Chinese government really works: it wants to make sure that while it retains the power over facial recognition and uses that for both security and surveillance purposes, companies can't really. And they are worried, actually, that companies who do get that information could leak it overseas, or that something would happen with that data as well. And this is just one of many things that have been part of this kind of big tech takedown, or not quite a takedown, but chipping away at different things around tech, in Beijing in particular. Yeah.

So that includes things with Ant Group, with Didi, and with the for-profit tutoring services that they've had. They have probably been rethinking how they want tech to be part of their whole system and country. Right. Yeah. So it builds upon this trend, or series of events, that happened over the past few months, I think, of the government displaying its power. And this continues that trend, but in particular with respect to facial recognition. So yeah, the ruling was set to go into effect on August 1st, so I guess it already has. And basically now all these private businesses must get consent as opposed to imposing it, and the use of the technology cannot exceed what is necessary.

So it seems pretty sensible to us here in the U.S., where we don't have that sort of regulation yet, interestingly. But yeah, interesting development for sure. I think what's interesting is that, on the commercial side, it feels like consumers are almost aligned and operate very similarly. When it comes to interfacing with the government, it is very, very different, but with commercial businesses, it's totally the same. Like this professor who said this was unreasonable, I could see that happening here. Exactly. Yeah. And our next article is titled "Twitter announces first algorithmic bias bounty challenge," from ZDNet.

So last week, Twitter actually announced a bounty: cash prizes, five of them, ranging from $500 to $3,500. And this is open for just a couple of days, between August 6th and 8th, for people to demonstrate potential harms an algorithm could have. Specifically, the algorithm that they're talking about here is one that we've already touched on, which is the cropping of an image: figuring out what the salient parts of an image are and where we should automatically crop things. People have already crowdsourced a little bit of what kind of bias there was. Twitter themselves already did a deep dive on the statistics of how different breakdowns of race and gender come out from the cropping algorithm. But now they are opening this up a bit more to have people actually find those potential harms and get prizes for them.

Yeah, and this is interesting because this is a bias bounty challenge, which is similar to bug bounties, which have been common in computer science for a long time. And this is being done at the DEF CON AI Village.

So DEF CON is the world's longest-running and largest underground hacking conference, and the AI Village is the part of it that works to educate the world on the use and abuse of AI in security and privacy.

So this idea of having a bounty for finding bias in algorithms is pretty interesting and does draw on this analogy of finding bugs in software, where paying people to find bugs and disclose them has been pretty successful. So you can see kind of a parallel with finding bias in models and disclosing it.

So yeah, it's an interesting idea and it'll be an interesting experiment and possibly could be a model for dealing with bias and finding bias in the future.

And onto our last hilarious article, "Say hello to the Tokyo Olympic robots" from NPR. So if you've been tuning into the Olympics, you might have noticed a couple of cute little mascot robots that are greeting people. And you might have also seen a robot that is helping pick up the different balls on the field.

Yeah, so this is just kind of cute and fun. So the mascots themselves, I have been watching the Olympics, so I was pretty happy to see that they're little robots.

And they actually have animated versions of them that can do different movements and have these anime eyes, which are pretty great. And then on top of that, they have some more useful things. So for instance, they have this little, I don't know what you call it, like a cart-ish thing that can drive around a field and pick up things that are thrown, like javelins, which helps the humans around it do that. And yeah, so it's pretty nifty and obviously also a display of Japan's prowess with robotics. And, you know, as a roboticist, I found it pretty delightful and pretty fun. It's also their love of robots. They very much love robots there and welcome this type of technology more so than any other culture, I believe.

Yeah, for sure. And actually, the leader of the Tokyo 2020 robot project said that there's a unique opportunity to display Japanese robot technology. And they have some other things as well. So they have human and delivery support robots, and they have some telepresence robots with large screens. So not all AI-based. Some of it is more just remote control. But in general, there's a pretty substantial presence, which is interesting. Yeah, I love it. I think it's very cute and very Japanese. Yeah. Cool.

And that's it for us this episode. If you've enjoyed our discussion of these stories, be sure to share and review the podcast. We'd appreciate it a ton.

And now be sure to stick around for a few more minutes to get a quick summary of some other cool news stories from our very own newscaster, Daniel Bashir.

Thanks, Andrey and Sharon. Now I'll go through a few other interesting stories that haven't been touched on. Our first story comes from the research side. Given the increasing deployment of AI systems in high-stakes domains, explainability has become an issue of public concern.

While it is generally agreed that the black box needs to be opened, the question of who should open that box has been underexplored. As Synced Review reports, a team from the Georgia Institute of Technology, Cornell University, and IBM Research conducted a study on how people with and without expert knowledge of AI perceived different types of AI explanations.

The group with AI knowledge included students enrolled in CS programs and taking AI courses, while the non-AI group was recruited from Amazon Mechanical Turk. The researchers had three main findings. First, both groups had unwarranted faith in numbers, but the AI group had a higher propensity to overtrust numerical representations and be misled by them.

Second, both groups found different explanatory values beyond the usage the explanations were designed for. Finally, the two groups had different requirements concerning what counts as a human-like explanation.

For our business story, transformer-based deep learning models like GPT-3 have been getting a great deal of attention in the machine learning world. And, as MarkTechPost points out, their understanding of semantic relationships has helped improve products like Microsoft Bing's search experience. But these models can fail to capture more nuanced relationships beyond semantics. A Microsoft team developed a neural network called Make Every Feature Binary, or MEB, with 135 billion parameters, nearly the size of GPT-3, to analyze queries that Bing users enter. MEB then helps identify the most relevant pages from the web alongside a set of other machine learning algorithms. The Bing team found that the addition of MEB to its search engine yielded a 2% increase in click-through rates and a more than 1% reduction in users rewriting queries because they didn't find any relevant results.

And finally, our story on society this week goes into a different domain.

As we develop AI systems that make decisions in contexts like medicine and criminal justice, many have pointed out that we'd like to avoid letting them make decisions based off of protected characteristics like race or gender.

As radiologist and PhD candidate Luke Oakden-Rayner and his colleagues found in a recent paper, AI systems can learn to identify the self-reported racial identity of patients to a very high degree of accuracy. Worryingly, AI systems do this when trained for clinical tasks, and this ability to identify race generalizes.

Even more concerningly, the authors couldn't work out exactly what the system was learning or how it did so. This work is very concerning and points to the vital need for work on interpretability for AI systems.

Thanks so much for listening to this week's episode of Skynet Today's Let's Talk AI podcast.

You can find the articles we discussed today and subscribe to our weekly newsletter with even more content at skynettoday.com. Don't forget to subscribe to us wherever you get your podcasts and leave us a review if you like the show. Be sure to tune in when we return next week.