Rephrase.ai, MuJoCo, AI in Audacity, Illegal AI Porn Unblurring, Bigoted AI Ethics Model

Last Week in AI


Hello and welcome to SkyNet Today's Last Week in AI podcast, where you can hear AI researchers chat about what's going on with AI. As usual, in this episode, we'll provide summaries and discussion of some of last week's most interesting AI news. You can also check out our Last Week in AI newsletter over at lastweekinai, or rather lastweekin.ai, for articles we didn't cover in this episode. I'm Dr. Sharon Zhou.

And I am hopefully soon to be Dr. Andrey Kurenkov. Woo! Yeah. And this week we will discuss some interesting applications of AI by MailChimp and by a company that does synthetic media, a few research papers related to MuJoCo and also Audacity, and some ethical dilemmas, including this funny FaceTag app and unblurring porn. So that will be fun. And yeah, let's go ahead and dive in.

So first up in our applications section, we have the article, This startup is creating personalized deepfakes for corporations.

And so this is a company, Rephrase.ai, a Bengaluru-based startup that is one of a good number of companies that allow users to basically generate videos with these so-called deepfake actors. So, you know, particular people with particular voices that can look to be delivering some kind of message or, you know, text without that actually being recorded with that person. We've talked about this before, Sharon. Did you think this was interesting to hear about this one or did it just kind of add to your impression of this sector?

Yeah. I like how the article went into greater depth about the company. Because before, you know, it was an article about how people, real people like you and me, could license our likenesses, license our face essentially, to be a deepfake actor for any other company to basically puppet. And I think this goes into it a bit more about how Rephrase.ai has found various clients such as Zappos, which is an Amazon-owned shoe company. They also found the Indian financial services giant Bajaj Finserv, and they also have Lowe's, which is the American home improvement retailer. So companies are starting to use this. It makes marketing much easier.

And yeah, I think overall, the landscape is interesting to see: these synthetic media companies producing AI-generated content have attracted over $1.5 billion in investment since 2016.

Yeah, it's definitely interesting and I think it makes a lot of sense. It's very intuitive that this is useful. And like you said, I did like that it went a bit more in depth. In fact, it started with this fun example of this guy, Umang Agarwal, who's a huge fan of Hrithik Roshan, who is a big star over in India. And so it explained how during the Hindu festival of Rakhi, Agarwal got this message that had this video by this guy that he's a big fan of, where he greeted him by name and, you know, said happy Rakhi to you.

And this was also sent to many other people, right? So this was a personalized message where they used this company's technology to create personalized messages for many people. And it actually was for the confectioner Cadbury to, I guess, you could get a QR code if you buy a limited edition chocolate box.

And Roshan apparently had licensed the rights to his image to Cadbury, this person who was in these deepfakes. So yeah, it was interesting to see these examples that kind of make it more concrete how we can see these things being used. And certainly this one is a very clear example.

And of course it can be abused, though I like seeing how in the Cadbury case, you know, they're maybe trying to restrict abuse a bit by letting users only enter their name or, you know, something small that could be caught. Yeah.

Other interesting cases: PwC, the accounting firm, has Rephrase.ai create these 45-minute-long lectures. So it's kind of like, you know, the ed tech thing.

And another company, a beauty company in India, is customizing ads with it. So you can have the same ad, but with small little edits giving different variations of the ad, and maybe with slightly different people, you know, just giving a different spiel, and maybe A/B testing that, you know, so it makes that much, much easier now. So interesting to watch this space grow. I think even just a year ago, things were not exploding this much in this area. And I think now it's getting used much, much more in the enterprise case.

Exactly. I think this is really interesting, maturing very quickly, and I can see this being commonplace, you know, this decade easily.

Just one more example I found fun. They have this example of this guy, Ankur Warikoo, who is an online influencer, a productivity guru. He used it himself to make this introductory video for anyone who buys his online course. So I think celebrities in particular, public figures as well as companies, will definitely benefit from this kind of technology and I think adopt it pretty quickly.

And on to our next article titled Inside Mailchimp's Push to Bring AI to Content Marketing.

So earlier this month, MailChimp released something called the Content Optimizer, which is a new AI product that improves the performance of email marketing campaigns. So what it does is it looks at your email marketing campaign and it gives little suggestions like trim your copy down to fewer than 200 words or add one or more images to your next email. And so helping you optimize your email campaign.

And it's interesting because they can train on all of MailChimp's data of 360 billion emails a year. So a fantastic training set for machine learning. And they're doing a hybrid of machine learning and some business rules as well. And finally, they actually do have humans in the loop to keep things really high quality and make sure that the recommendations are in line with what our creative director would say.

Yeah, I thought this was pretty interesting. They also noted that they sliced these 360 billion emails into smaller datasets that are more context-specific. So they have many models.

And this idea that they have a hybrid of machine learning and business rules is also good to hear, I think, because sometimes we hype up machine learning too much. But some things like length of title or something like that are not something you really need to learn. In fact, I've used MailChimp on and off in various contexts like the Stanford AI Lab blog or Skynet Today, and so it was interesting because I think starting out, you really don't know much. So these kinds of tips would be pretty useful. So, yeah, I think this is a very cool and very useful demonstration of machine learning for a pretty big company.
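
To make the business-rules half of that hybrid a bit more concrete, here is a minimal sketch in Python of the two suggestions quoted in the episode. Everything in it is an assumption made for illustration: `EmailDraft`, `suggest`, and `MAX_WORDS` are hypothetical names, and nothing here reflects how MailChimp's Content Optimizer is actually built.

```python
from dataclasses import dataclass
from typing import List

MAX_WORDS = 200  # threshold taken from the tip quoted in the episode


@dataclass
class EmailDraft:
    """Hypothetical container for a campaign email (not a MailChimp type)."""
    body: str
    image_count: int


def suggest(draft: EmailDraft) -> List[str]:
    """Return hand-written, rule-based tips for a draft; no learning involved."""
    tips = []
    # Rule 1: copy should stay under the word limit.
    if len(draft.body.split()) >= MAX_WORDS:
        tips.append("Trim your copy down to fewer than 200 words.")
    # Rule 2: an email with no images gets a nudge to add one.
    if draft.image_count == 0:
        tips.append("Add one or more images to your next email.")
    return tips
```

Checks like these need no training data, which is the point made above: a learned model is only worth it for the parts you cannot write down as a rule.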

Right. And I like how the interface is, it'll be interactive. So even if you do know your stuff around email marketing campaigns, perhaps, you know, trends do happen, you know, things change. And so it can give you certain suggestions that have worked in the past.

Yeah, it's, they provide a lot of kind of suggestions and scorecards. So, you know, you can ignore them or you can follow them. And yeah, it'll be, I assume it will be interesting for people writing this stuff to see how their success actually correlates to the suggestions.

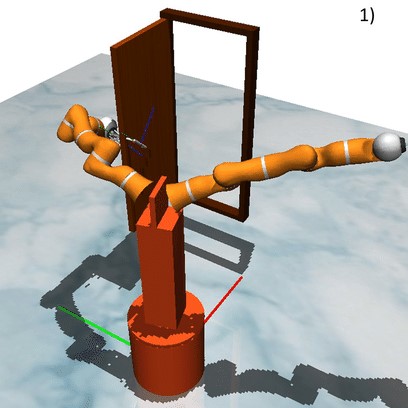

And onto our research section, we have our first article, What MuJoCo's acquisition means for DeepMind's AI and robotics research. So I think just last week, DeepMind, the company that is a huge AI research lab, of course, had a blog post where they announced that they have acquired the rigid body physics simulator MuJoCo and have made it freely available to the research community.

So rigid body physics, it just means like a physics simulator where you can drop in various objects and there's gravity. If they collide, there are forces, et cetera.
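
As a toy illustration of what a physics simulator does at each time step, here is a minimal sketch in Python: one point mass falling under gravity and bouncing off the ground. It is not MuJoCo and does not use MuJoCo's API. The names `Body`, `step`, and `simulate` and the restitution value are assumptions made for the example, and a real rigid body engine also handles rotation, friction, and contacts between many bodies.

```python
from dataclasses import dataclass

GRAVITY = -9.81  # m/s^2, pointing down


@dataclass
class Body:
    height: float    # metres above the ground
    velocity: float  # m/s, positive is up


def step(body: Body, dt: float, restitution: float = 0.5) -> Body:
    """Advance the body by one time step using semi-implicit Euler integration."""
    velocity = body.velocity + GRAVITY * dt   # gravity changes the velocity
    height = body.height + velocity * dt      # velocity changes the position
    if height < 0.0:
        # Collision with the ground: put the body back on the surface
        # and reflect its velocity, losing some energy in the bounce.
        height = 0.0
        velocity = -velocity * restitution
    return Body(height, velocity)


def simulate(body: Body, seconds: float, dt: float = 0.001) -> Body:
    """Run the simulation for the given duration and return the final state."""
    for _ in range(int(round(seconds / dt))):
        body = step(body, dt)
    return body
```

Calling `simulate(Body(height=1.0, velocity=0.0), seconds=10.0)` drops the body from one metre and lets the bounces die out. Training a robot in simulation amounts to running a loop like this many times with a controller choosing the forces.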

And it's one of several open source platforms for training AI agents, especially in robotics applications, because obviously robots are physical. And so you need a physics simulator if you want to train in simulation. And training in simulation is very common in research, robotics research, because, you know, it's very time consuming and expensive to do it in the real world. So very often, you know, initially and even maybe till the end, you use simulation. So, yeah, I was pretty excited to hear this. I think most people in the robotics research world seem to be pretty excited. Yeah, it was cool. Did you hear this, Sharon, when it happened?

Yeah. I heard whispers of the acquisition. I didn't realize that they open sourced it, which is fantastic. And I think it will be big for the small research teams out there because apparently the license was at least $3,000 per year. Though we've been spoiled at Stanford because I thought it was free the whole time. So I'm glad that this has been opened up to much smaller research teams, since deep learning costs are honestly prohibitively high in a lot of cases. So this is really great. And I guess thank you, DeepMind, for your service.

Yeah, it's exciting. They stated that they are committed to developing and maintaining MuJoCo as a free, open source, community-driven project. And it does seem like having such a big and rich and research-intensive company behind it will mean that certainly it'll be maintained and likely improved. So, yeah, pretty exciting.

And on to our next research article, Yann LeCun team challenges current beliefs on interpolation and extrapolation regarding DL, deep learning, model generalization performance. And so this article is about a paper that was recently released from Facebook AI Research and NYU by Yann LeCun's group called Learning in High Dimension Always Amounts to Extrapolation.

So, you know, so far, many ML researchers have developed theories and intuitions around how state-of-the-art deep learning is not really extrapolating or generalizing really well. It's not looking beyond the training set that much. All it's doing is interpolating within the training set. But this paper states that, you know, in high dimensions, interpolation is not really what's actually happening. And most cases we're looking at are pretty high-dimensional, like over a hundred dimensions.

What's happening is actually not interpolation. It's not just filling gaps between existing points in the data set. It's actually extrapolation and generalization, having the model understand things beyond what it was trained on. And so this is a useful paper in ML theory and is kind of taking charge and saying, hey, we actually are generalizing, our models are very useful, etc.

Yeah, this was interesting. And just looking at the title, I think you want to kind of read it because, as the paper notes and this article notes, I think many people did have an assumption that, you know, the way these large models work is that if they haven't seen some image during training, at testing time they basically recall things they've seen in training and that way can combine things they've seen to figure out what this new one is.

And yeah, this paper basically challenges that assumption and says that, well, no, it's not just combining previously seen things. It's actually coming up with kind of new things that aren't just based on the training set. That being said, it's not too clear kind of what the real implications of this are. It's not telling us too much about generalization power or things like that. And this is for a certain definition of generalization that may not hold. Still, it's cool to see, you know, deeper understanding of deep learning, which many people say we don't have, but this paper certainly provides.
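
For a rough feel of why high dimensions change the picture, here is a minimal sketch in Python. As far as we understand the paper, "interpolation" means a new sample falls inside the convex hull of the training set. Testing hull membership needs a linear program, so this sketch swaps in a looser check: whether the sample falls inside the axis-aligned bounding box of the training points. A point outside the box is necessarily outside the hull, so the fraction computed here can only overestimate how often interpolation happens. The function name, the uniform random data, and the sample sizes are all assumptions made for the example and are not taken from the paper.

```python
import random


def fraction_inside_box(dim: int, n_train: int = 100, n_test: int = 200,
                        seed: int = 0) -> float:
    """Fraction of fresh samples that land inside the bounding box of the training set.

    The bounding box contains the convex hull, so this is an upper bound
    on the fraction of samples that would count as interpolation.
    """
    rng = random.Random(seed)
    train = [[rng.random() for _ in range(dim)] for _ in range(n_train)]
    # Per-dimension minimum and maximum over the training points.
    lows = [min(row[d] for row in train) for d in range(dim)]
    highs = [max(row[d] for row in train) for d in range(dim)]

    hits = 0
    for _ in range(n_test):
        point = [rng.random() for _ in range(dim)]
        if all(lo <= x <= hi for lo, x, hi in zip(lows, point, highs)):
            hits += 1
    return hits / n_test


if __name__ == "__main__":
    for dim in (1, 10, 100, 500):
        print(dim, fraction_inside_box(dim))
```

With the training set held at 100 points, the fraction shrinks as the dimension grows, even under this generous check. That is the intuition behind the claim that in high dimensions a new sample is almost never inside the region spanned by the training data.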

And onto just one more paper that we'll also go through pretty quickly. We have the new one, Deep Learning Tools for Audacity. So Audacity is a popular audio editing tool that is free. So I think many, many times people start out with Audacity, and like the title suggests, this is all about how you can integrate deep learning tools into this editor in a pretty neat way. So they have an example where you can kind of separate different sources of audio in a song, like instruments from vocals, and then you can remix. And this is an open tool, so you can develop and upload and share your stuff. So yeah, less kind of theoretical, obviously, but more applied and pretty neat. And Andrey, you said you've used Audacity before, right?

I've messed around with it a bit. I use, well, I do audio editing for this podcast. So I use a different tool, Adobe Audition, but they're all pretty similar. You know, they have, you know, tracks of audio and you can clip and crop and change, you know, volume levels and so on. So I'm familiar with a basic idea and I do think it'll be neat to have these more powerful kind of filters and things that you can do with deep learning.

I completely agree because I've tried to edit audio before and tuning it is a bit of a hassle, but I feel like there should be certain filters that you could just click one button and have exactly what you want. Very similar to the visual space where we have all those Snapchat filters that have the combination of things that we would want or just the enhance button on phone cameras.

Yeah, exactly. I mean, there are some of these filters you can apply that are more based on audio engineering, but deep learning could really be more powerful. Like some of these filters sometimes aren't great at removing background noise, especially if it's not sort of constant. And I think deep learning could definitely be trained to basically separate out speech from other stuff and remove other stuff.

So yeah, exciting and nice to see more applied research that hopefully will have a pretty large impact. And also nice to see that they did this by integrating with the Hugging Face Model Hub. So anyone can develop something and upload it and then anyone else can use that. So it seems pretty easy to get working actually and, you know, figure out what you can do with it.

Yeah, that's super cool. So the community can also contribute, you know, and this could really build.

And on to our ethics and society section. Our first article is A Harvard freshman made a social networking app called "The FaceTag." It sparked a debate about the ethics of facial recognition. All right. My alma mater, Harvard, has a freshman, Yuen Ler Chow, who created an app in his dorm room. This is so reminiscent of Facebook, but people sign up, students sign up, they can scan the face of another student and exchange contact info, like phone numbers and Instagram handles. And right now it's only available at Harvard. And the name, The FaceTag, is actually very similar to TheFacebook, which is what Facebook was originally called.

Yeah, it has over 100 signups right now. And it's just available in the web browser. And he created a series of TikToks about it. And that has almost a million views.

Yeah, so this, you know, obviously with so many views led to some controversy. You know, there are some comments like, "What's up with Harvard kids and not understanding ethics?" and "What a wonderful idea from a young Harvard student. Surely this won't be a threat to democracy within a decade." By the way, Facebook was originally called TheFacebook, and it was also originally exclusive to Harvard.

So clearly he was aware of the parallels. And I do think he kind of sought out some of this controversy. I mean, he made this series of TikToks after creating this pretty basic app. So I think he did, you know, sort of want a controversy there.

That being said, I don't think it really deserves a lot of these concerns. I mean, you need to sign up for it. And even if you're signed up, you can make a private profile where you have to allow people to scan your face. So it's not that scary, but it is certainly kind of designed to be reminiscent of things that are unethical or problematic in AI.

Yeah, at least it's opt-in, basically, is what Andrey is suggesting here. And people have asked, you know, why not just QR codes? And he told Business Insider, which published the article, that he wants to use facial recognition because it is, quote, just so much more cool, end quote, than QR codes.

So, you know, and he wanted to play around with open source machine learning tools. So I think, I mean, that motivation is slightly questionable. Definitely speaks to some maturity levels that were similar to the Facebook founder in those early days. So we'll see where that goes. He said he does want to start advertising more soon and thinking about raising money as well. So it has faint parallels, or more than faint parallels, with Facebook.

Yeah, exactly. I mean, I don't know why he had to make it exclusive to Harvard. I really think this could easily be for anyone. That being said, I mean, this is a business space. This is a useful thing, being able to exchange information quickly. But there are already apps for that with QR codes, where you can scan someone else's QR code.

So I think, yeah, this is more like a hacky project that, you know, got viral and he sort of just went with it. But a good example of like, depending on how you frame things, people will be kind of creeped out, even if maybe it doesn't in practice mean anything too bad.

Well, I think there is abuse that is possible from this. Like, then you can scan anyone on the street, and that would enable stalkers to get information about a random person they see. I think that could be dangerous. I would not want my phone number to be randomly distributed to anyone who could scan my face as I'm passing by.

Exactly. Yeah. So I don't know. They have this option of a private profile where you have to hit accept before they can get contact info. I don't know if that's on by default. And he doesn't store images, he just stores features. But that being said, if there's a leak, that'd be pretty bad, and certainly we've seen that happen quite a few times. So not a service I would use, but I'm interested to see where it goes.

Yeah, it's fun. I would do this as a hackathon project, that's kind of fun, but I don't think it's necessary. And in fact, I don't think anyone would really want to use this. Well, as a consumer, we'll see. We'll see. Maybe it takes off.

Yeah. And I think the sticking-with-Harvard thing is like, well, let's test it out within this community. If the community vibes with it, then it can go bigger, which is similar to Facebook. Yeah. Maybe this is the next TheFacebook, who knows? Yeah.
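
To illustrate the two design points mentioned in this discussion (storing features rather than images, and a private profile that has to accept before contact info is shared), here is a minimal sketch in Python. It is purely hypothetical and is not based on the app's code: `Profile`, `lookup`, the two-number feature vectors, and the 0.9 similarity threshold are all assumptions made for the example.

```python
import math
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass
class Profile:
    handle: str
    features: List[float]  # face embedding only; no image is kept
    private: bool = True   # private profiles must accept each request


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def lookup(scan: List[float], profiles: List[Profile],
           accepted: FrozenSet[str] = frozenset(),
           threshold: float = 0.9) -> Optional[str]:
    """Return the best-matching handle, or None if nothing may be shared."""
    best = max(profiles, key=lambda p: cosine(scan, p.features), default=None)
    if best is None or cosine(scan, best.features) < threshold:
        return None  # no sufficiently similar face on file
    if best.private and best.handle not in accepted:
        return None  # matched, but the person has not hit accept
    return best.handle
```

Even in a sketch like this, the stored feature vectors are still biometric data, which is why the concern about leaks raised above applies whether or not the original images are kept.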

And onto our second ethics and society article. Pretty spicy, this one. We have Man arrested for unblurring Japanese porn with AI in first deepfake case. And this is from Vice.

So, on Monday, Japanese police arrested a 43-year-old man for using AI to unblur pixelated porn videos. And this is the first criminal case in the country involving, you know, the use of AI to do deepfakes in some sense. So this guy, Masayuki Nakamoto, basically lifted images of porn stars from adult videos and doctored them with the same method used for the realistic face swaps that we've seen before. But instead of doing that, he reconstructed blurred parts in videos using this tech. And, you know, in Japan, you have to blur genitalia legally. So that's kind of the default. And so people actually paid him for this, like, AI-based unblurred porn. And he got arrested. So slightly funny, but certainly also an interesting development. And for some numbers, he sold over 10,000 manipulated videos for almost $100,000 equivalent. It's about 11 million yen.

So it was specifically that he was arrested for selling 10 fake photos at about the equivalent of $20 each. So he didn't get very far. Gladly, I guess. Yeah.

Yeah, it's interesting that this developed and it does point out again that it's kind of a legal gray area, right? If you do use deepfakes to, you know, get past the privacy measures of certain people, maybe they blur their face, and what if you can unblur that to some extent? That'll be bad. And certainly if you face swap someone to create fake porn, that's terrible. So it does point to this being a concern and maybe these sorts of cases becoming more common. This article is pretty good. It cites some other things that have happened, such as in India, a gang allegedly blackmailed people by threatening to send these deepfake videos. And yeah, so interesting story in the development of deepfakes. And yeah, I think certainly the most spicy story of deepfakes we've seen, with someone getting arrested over it.

Very spicy in terms of the law enforcement coming in. But I think there are worse things that are probably not caught yet. Mm-hmm.

And onto our last article that is a bit more fun. So, Scientists build AI system to give ethical advice. Turns out to be a bad idea. So scientists at AI2, which is the Allen Institute for AI, created something called Ask Delphi and have this paper, Delphi: Towards Machine Ethics and Norms. And so basically what happens is you can type in any type of situation or question, and then Delphi will think about, you know, what you typed in and give you ethical guidance, or at least it'll rate the ethics of something. And there are labels in terms of good, discretionary, or bad.

And so just three different labels, because that's what ethics boils down to. No, I'm kidding. But like, yeah, that is what we start with, I guess. And there's definitely, you know, some problems. There's definitely some racism, which is expected in an AI system nowadays, because, you know, data sets and everything are just not well curated. And we've discussed that previously, right?

And one example is, you know, for "a white man walking towards you at night," it responds that it's discretionary, that it's okay. But when asked about "a black man walking towards you at night," its answer was clearly racist. It was not okay. And so it's wrong. Yeah, it said "it's concerning." Yeah.

So, yeah. So obviously there are problems. I do see a future where, you know, a system like this could help with getting future AI systems to be ethical. If there's a model that we can say does match our ethics much better. So I think it's a journey. And right now it's starting at square one or two.

Square zero or a square negative one. I don't know. But it is it's the beginning. And I think I could see this going somewhere useful as long as it's not really giving guidance, more so as our way of seeing how ethical an AI system we can build is that matches us.

Yeah, exactly. This went slightly viral on Twitter, I think, when it was released, with people showcasing various examples of it being wrong and bigoted. But at the same time, I think the motivations here and the actual research are less negative than these outcomes. So actually, the PhD student at AI2 who co-authored the study said that it is important to understand that Delphi is not built to give people advice. It is a research prototype meant to investigate the broader scientific questions of how AI systems can be made to understand social norms and ethics.

And the reason this went so viral is that they had this website where you could just play around with it and then you could see all the ways that it could be wrong and bad. But again, they also added that the goal with the beta version that's online is to highlight the wide gap between the moral reasoning capabilities of machines and humans. So yeah, I think certainly the researchers had good intentions.

At the same time, if you look at the screenshot in the paper, you know, the website doesn't make that clear. It says Delphi is a computational model for descriptive ethics, i.e., people's moral judgments on a variety of everyday situations.

And really there's a small disclaimer off to the right that it's intended for research demonstration purposes only, and that model outputs should not be used for advice or to aid in social understanding. But, you know, you don't notice that at first looking at the website. Now they have a much larger disclaimer and then some explanations. So yeah, overall, you know, it's interesting research tackling a very challenging problem. And I think it's more an issue of how they initially communicated their intention and let people play around with it without making the limitations very clear, and maybe some of the things that you should definitely not do with it. Yeah.

If you want to try it out, you can go to delphi.allenai.org and you can try it as well now. But don't spread any bigoted things, it says. Yes.

And with that, thank you so much for listening to this week's episode of SkyNet Today's Last Week in AI podcast. You can find the articles we discussed here today and subscribe to our weekly newsletter with similar ones at lastweekin.ai. Thank you so much for listening and tune in to future episodes.