How AI Learned to Talk and What It Means - Prof. Christopher Summerfield

Machine Learning Street Talk (MLST)

- AI can learn about reality from words alone.

- Supervised learning on text is enough to acquire much of what is needed to hold an intelligent conversation about the world.

- Summerfield calls this perhaps the most astonishing scientific discovery of the 21st century.


Superman 3 is a terrible movie, but there's this wonderful scene. There's a giant computer that goes rogue in Superman 3, and there's a female character who is just walking past it as the machine is waking up. And the machine sucks her in.

And she gets stuck there. Then the machine gradually puts armor plating on her, replaces her eyes with lasers, and basically turns her into an automaton. It's a very compelling scene. I think I was terrified by it as a child, which is probably why I remember it. But that is a sort of metaphor for what is happening to us, right? We're worried about the robots taking over or whatever. But in a way, it's more like us being sucked into the machine. Just like that poor character, we get turned into something we are not. You become part of that system, and it erodes your authenticity, and in a way it erodes your humanity.

People often say, well, ChatGPT, of course, was exposed to more. I think I have the analogy in my book: it's exposed to the same amount of language as if a single human had been continually learning language since the middle of the last ice age or something like that, right? That's how much data it's exposed to. But it's a false analogy, because we don't learn language like ChatGPT does.

Language models are trained in what you might think of as almost a Lamarckian way, right? If you think of a training episode, whatever happens in one generation of training gets inherited by the next training episode. That's not how we work, right? My memories are not inherited by my kids. There's this fundamental disconnect. We're Darwinian. The models are, I guess you could call them, Lamarckian.

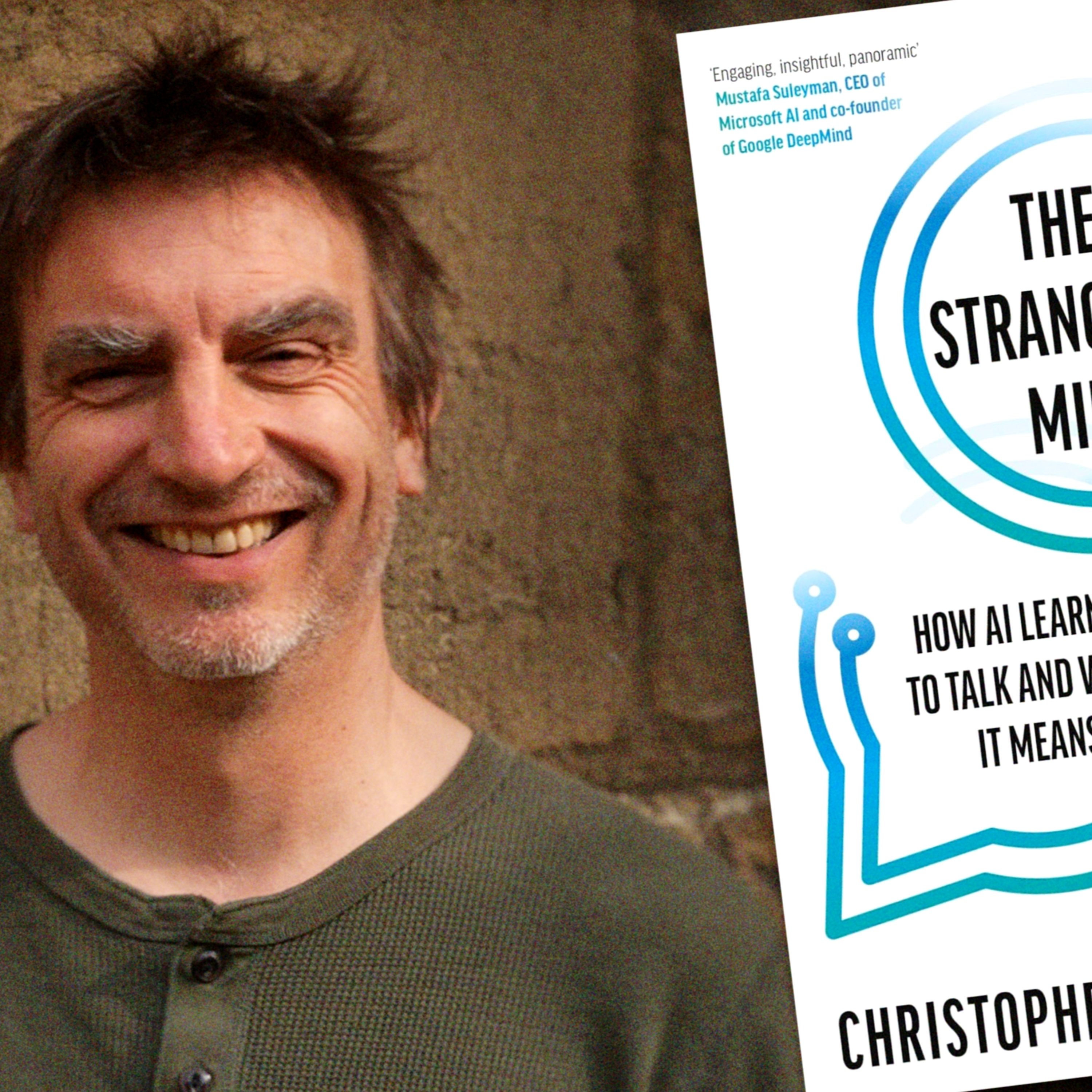

So we're here in Oxford today to speak with Professor Christopher Summerfield. He's just written a book called These Strange New Minds: How AI Learned to Talk and What It Means. He spoke about the history of artificial intelligence and how the allure of AI has been to build a machine that can know what is true and what is right.

Imagine a world in which everything was like that, but it could actually talk back to you.

And it could simulate all of the social and emotional types of interaction that we have with people we care about. So the milk in your fridge is like your best friend, right? Yeah.

This is a very strange world. Of course, that's a silly example: the milk in the fridge is never going to be your best friend. But, as I mentioned earlier, there are already large numbers of people who are engaging with AI in ways that mimic the sorts of interactions they have with other people.

I thought that you would need grounding, you know, sensory signals. You can't know what a cat is just by reading about cats in books. You need to actually see a cat. But it turned out I was wrong, and so were many, many other people. And that is, to my mind, perhaps the most astonishing scientific discovery of the 21st century: that supervised learning is so good that you can learn almost everything you need to know about the nature of reality, at least enough to have a conversation that every educated human would call intelligent, without ever having any sensory knowledge of the world, just through words. That is mind-blowing.

This podcast is supported by Google. Hey, everyone. David here, one of the product leads for Google Gemini. Check out Veo 3, our state-of-the-art AI video generation model, in the Gemini app, which lets you create high-quality, 8-second videos with native audio generation. Try it with the Google AI Pro plan or get the highest access with the Ultra plan. Sign up at Gemini.google to get started and show us what you create.

I'm Benjamin Crouzier. I'm starting an AI research lab called Tufa Labs. It is funded from past ventures involving machine learning. We're a small group of highly motivated and hardworking people, and the main thing we are going to do is try to make models that reason effectively and, long-term, do AGI research.

One of the big advantages is that because we're early, there's going to be high freedom and high impact for someone new at Tufa Labs. You can check out positions at tufalabs.ai.

So, Professor Summerfield, I have to congratulate you on this book. Your previous book was my favorite book that I've ever read in AI. It's up there with Melanie Mitchell's book. And actually, Melanie Mitchell reviewed your new book as well.

She did. So generously. Yeah, I'm a big fan of Melanie.

So you've been writing this for a couple of years. And of course, you mentioned in the afterword that it takes quite a long time to get these things into publication, and the space is moving very, very quickly. But can you give us a bit of an elevator pitch for the book?

Yeah, sure. So the book, as you said, was actually finished at the end of 2023. So cast your mind back to the medieval period of AI, if you like, 12 or 14 months after ChatGPT had been released. The idea of the book was that at that time, and I guess to a large extent still today, there was considerable debate over the cognitive status of these strange new minds (hence the title of the book) that we seem to have created and are now increasingly interacting with. And that debate, which I heard going on at academic conferences and down the pub, was: should we think of these things as actually a bit like us? Are they thinking? Are they reasoning? Are they understanding? And of course, this very quickly became a highly polarized debate.

On the one hand, there was a bunch of people who vehemently rejected the idea that these tools could ever be anything like us: it's just computer code, which is, of course, true. And on the other hand, you had people who were absolutely astonished not just by the capability but by the pace of progress, and who thought we really are on course, finally, to build something that is as competent in a general way as humans. And this debate was playing out, and I thought, well, this debate is not really grounded. I don't hear the language of cognition being used to scaffold it. This debate is being had by people who care deeply about the issue but are not trained in a grounded, computational sense of what it actually means to think, or what it actually means to understand something.

And so I thought that, as a cognitive scientist who has done a lot of work in AI, I was probably quite well placed to talk about that. So that was part one. And then, for the past five years, I have also been very, very interested in the implications of AI for society.

So I was working on that problem when I was at DeepMind, where we were doing work to try to understand how AI could be used to intervene directly in society and the economy and help people find agreement. And at the time I wrote the book, I was just about to move to what was then the AI Safety Institute in UK government to work more on that. So I had an understanding of the landscape of AI, thinking about deployment risks and about how AI might change the way that we live our lives. And I thought that, putting those things together, I had a unique enough perspective to write a book about it. So that's what I did.

The discourse is quite fractured, and you speak about this in great detail. You speak about the hypers, the anti-hypers, the safety hypers, and so on. And early on in the book, you trace this back to two intellectual threads going back to the ancient Greeks: Aristotle and Plato, basically empiricism and rationalism. Can you sketch that out?

Yeah, sure. Well, the history of AI has itself repeated an ancient philosophical debate about whether building a mind, including our own mind, is fundamentally about learning from experience or about reasoning, particularly reasoning over latent or unobservable states. And that reasoning over unobservable states is, of course, traced back to Plato. That's the idea that everything is fundamentally unobservable. We just get the shadows on the cave wall, or the light on the retina, and we have to impute what's there.

And the corresponding view, which might trace back to Aristotle, is the idea that everything comes from experience. And the history of AI, of course, was that very debate playing out in the workshop, so to speak, or at least on the keyboard. On the one hand, good old-fashioned AI was originally structured around the idea that we know how to work out what is true. And the reason we know how to work out what is true is that we have a long tradition, back through positivism and early theories of reasoning, to Boole and even Leibniz before that.

The idea is that you can use logic to work out what is true. It is unassailably true that if I say all men are Greek and Aristotle is a man, then Aristotle is Greek. Right. That is just true by definition.

And so that seemed like a really sensible way to build AI, right? You put in those primitives and you crank the handle. And if you've got enough computational power, then you can derive really, really complex things.

And it worked, right? In the 1950s, Newell and Simon built the Logic Theorist, which I like to say was the first superintelligence, in 1956. It's an AI system that was able to prove many of the theorems in Russell and Whitehead's Principia Mathematica, which is already a feat, and it was able to find more elegant proofs for some of them. That's astonishing. So initially it seemed like this reasoning approach worked.

And then, of course, as the problems we tried to tackle with this approach moved from very abstract, clean problems about maths and logic to problems in the real world, we ran into a fundamental problem: the real world just isn't as clean and neat as the problems that reasoning is designed to solve. The world is full of weird exceptions, which aren't fundamentally amenable to analysis with logic. And so you had this other, corresponding approach, which is the learning approach, or the empiricist approach. And that is where neural networks and the deep learning revolution ultimately came from.

Isn't it a crazy time to be alive, though? I interviewed the CEO of one of the largest companion bot platforms, and in the comment section there was a lot of negativity. And you mentioned, I think in your afterword, that it seems strange to us now that we would want to have a relationship with an AI companion, and that maybe we will revise that belief in a few years' time. But more broadly, you said in your book that language is basically the biggest gift that has ever been given to us. It allows us to acquire knowledge and communicate it, and it survives many generations.

Yes.

And I guess the Rubicon moment, as this technology matured, was ChatGPT. That changed everything in November 2022. Sketch that out for me.

Well, the history of NLP has been told many times, probably by people more qualified than me. But we talked earlier about this back and forth between learning and reasoning, and in the history of NLP exactly the same question played out. In NLP, natural language processing, as in the more general symbolic AI movement, the early models were basically attempts to define the computations that lead to the generation of valid sentences. Right.

That's basically the gauntlet that Chomsky laid down in his 1957 book: there is a set of rules which, if you could just apply them all lawfully, would allow for the generation of sentences that obey the rules we would all understand to be legal, that is, what makes a valid sentence. So, syntax, right? Chomsky was mainly concerned with English, of course, so he was worried about English syntax. But that movement, just as happened in the wider field when neural networks came along, was then challenged by statistical approaches, and that went back and forth and back and forth.

When the deep learning revolution happened, by 2015 you could train a model on the complete works of Shakespeare, and it could generate something that looked a lot like Shakespeare but didn't make any sense. And so, even when the deep learning revolution was in full swing, most people, including myself, thought there is no way that the mere application of powerful function approximation and lots of data is going to solve this problem. I did not believe that to be true. I thought, like many other people, that you would need grounding, you would need sensory signals. You can't know what a cat is just by reading about cats in books. You need to actually see a cat.

But it turned out I was wrong, and so were many, many other people. And that is, to my mind, an absolutely astonishing, perhaps the most astonishing, scientific discovery of the 21st century: that supervised learning is so good that you can learn almost everything you need to know about the nature of reality, at least enough to have a conversation that every educated human would call intelligent, without ever having any sensory knowledge of the world, just through words.

That is mind-blowing. And I think it changes the way we think about many, many things. It certainly changes how I think about things.

So one big theme in the book is this dichotomy between equivalentists and exceptionalists. Some folks argue that humans are exceptional and that the kind of cognizing language models do is not really in the same category.

Yeah, so that distinction is a cartoon. Of course, everyone has a different view about the relationship between AI and humans, or biological intelligence in general, and the evidence clearly admits a spectrum of different views. But I found it useful in the book to caricature two extremes of that continuum.

At one end, you have people who, I think, just ideologically reject the idea that we should describe what a non-human system is doing, behaviorally or cognitively, using the same vocabulary we apply to a human. Clearly today's models are capable of reasoning at levels beyond the capability of most humans, even educated ones, certainly when it comes to formal problems like math and logic. So it can reason like a human. But there are people who I think fundamentally believe that we shouldn't call that reasoning, because we should circumscribe the definition of reasoning as something that humans do. And that is a stance which I think is not really about the empirical evidence, although some people construe it that way by saying, oh, the models aren't actually that good at reasoning, which even in 2023 was a hard-to-defend view. Now it's probably an even harder-to-defend view.

But I think it comes from a place of radical humanism, right? It is a desire to ring-fence a set of cognitive concepts and think of them as uniquely human. And for people who care about humans, which by the way includes me, I can see why that's really important. But what it leads you down is the road of refusing ever to see the cognition that an AI engages in and the cognition that a human engages in as comparable, even when their capabilities are clearly matched.

So that's what I call exceptionalists, because they are espousing a view of human exceptionalism: humans are special and different, end of story. And somewhat cheekily, in the book I compare that to earlier instances of human exceptionalism, like when Darwin first proposed that we weren't uniquely created by God but are actually related to all the other species, or when the heliocentric model was first proposed and was rejected by the Catholic Church, and so on. I guess those analogies give color. But fundamentally, I think it is a defensible position; it's just an ideological one.

Yes, so you invoke this notion called the duck test: basically, if it looks like a duck and quacks like a duck, we should call it a duck. And by extension, I guess you would call yourself a functionalist, which is the idea that it's not about the internal constitution or the mechanism, but about the function that it performs. And we can use this information metaphor to say, well, if we have an AI system over here which is cognizing and doing the same types of things, then we could reasonably infer that it's appropriate to use mentalistic language to describe it.

That's absolutely right. And you're absolutely right to say that it's a functionalist perspective. That is broadly my perspective.

I think, once again, that functionalism comes from a scientific standpoint, right? If it reasons like a human, then we may as well use the term reasoning. But that doesn't imply a broader set of equivalences. It doesn't, for example, imply moral equivalence.

It doesn't mean that the motivations, or the relationships we have with AI, are similar to those we have with humans. Absolutely not. Of course, they're completely different. But it does mean that if you put on your cognitive scientist hat and you're really just talking about information processing, then from that functionalist perspective, yes, if it quacks like a duck, you may as well call it a duck.

The anthropomorphism thing makes it a little bit more tricky. In a film, if you see a robot peel its face away, all of a sudden you see it's not a human but a robot. And the intuition there is that it has a different mechanism.

And this is what John Searle was getting at with the Chinese room argument, and I read what you had to say about that. I think Searle was saying that when you take a type of process and represent it in silicon as computation, sans the machine, something is lost, whereas we are bio machines, causally embedded in the world. When we do things, there's this large light cone of low-level interactions that happen, and I guess this is his notion of semantics. And I think, Professor Summerfield, you subscribe to something called a distributional notion of semantics, which is that we can actually remove things from the physical world and recreate patterns of activity in silico, and for all intents and purposes they would have the same meaning.

Yeah, I do subscribe to that view. As not only a cognitive scientist but a neuroscientist, I'm especially aware that, whilst there are many differences between machine learning systems and the computations that go on in the brain, there are also astonishing similarities, certainly at the algorithmic level, though clearly not at the implementational level. Neural networks don't have many different types of synapses; they don't have basket cells and fast inhibitory interneurons and things like that. But at the level of the network, there is a striking similarity. And the most reasonable assumption to me is that there are broad shared computational principles that emerge when you take networks of neurons that are wired up with dense interconnectivity and that are, for the most part, recurrent. We have to remember the transformer is not a recurrent architecture, so it uses tricks to mimic what a recurrent architecture does. But, for the most part, recurrent networks.

And we know that because, for example, after optimization has been applied, and actually sometimes even before optimization has been applied, there are striking similarities in the semantic representations that you can read out of those two classes of network, biological and artificial, by doing experiments. We know that you can go into the brains of monkeys, or, if you have access to them, humans via neuroimaging or whatever, and you can see patterns of representation that express themselves, not just in terms of coding properties but in terms of neural manifolds and neural geometry, very much like those in the neural network. So the substrate is shared in some very loose sense, and the behavior is shared in some perhaps not so loose sense.

And to me, science is a puzzle, right? You get bits of information and you try to come up with the most parsimonious explanation. And for me, the most parsimonious explanation is that, through a mixture of luck and trying enormously hard, we've got to a place where we've built something that is a bit like a brain. And lo and behold, it does stuff that is a bit like a brain.

That doesn't mean it does everything. And it also doesn't mean that it is like a human in terms of how we should treat it or how we should think of it. But it does mean that the computations are most likely shared.

I realize this is a difficult argument to make, and there were some scornful comments in your book about this. But there are some people who still make the argument that it only appears to be reasoning and understanding, but it's not really. And is it possible that Chomsky could still be right in some sense? He's obviously a rationalist, and his is essentially this Platonist idea that the laws of nature have bestowed on our brains the secret functions that explain how the universe works. And in a sense, he's quite similar to a lot of folks now. He's a computationalist. He doesn't subscribe to this causal graph thing, but he does think that the brain is a Turing machine and that we should do this recursive merge-type stuff. But is it possible that empiricism seems to work, but it's like a pile of sand, and Chomsky would still be right if only it were possible to have the low-level stuff?

Yeah, my guess is that when we look back, after perhaps having figured this stuff out, the end point will be that the dichotomy that was set up, and that we fought about literally for millennia, is actually a question of perspective. So in a way, the rationalists, broadly construed, are right: reasoning is really important for computation.

But what they were wrong about is how you acquire the ability to reason. What we have learned since 2019 is that the types of computations you need to reason about the world can be learned through large-scale parameter optimization, through function approximation, essentially through training a neural network. So in a sense, Chomsky is not wrong that there are rules to language. Those rules need to be learned; he was just wrong about how they got learned, right? And of course there's always a sleight of hand in saying, well, this is inborn, because it really just begs the question of how it's inborn. Where does that gene that allows you to do recursion or merge or whatever come from? What was the pressure that got it there?

And I think there is a subtlety to the argument that is often not expanded on. Of course, we are born with a predisposition to learn language. And we know that that is not just an accident, because other species, even highly intelligent species like chimpanzees and gorillas, capable of really sophisticated forms of social interaction, political machinations and so on, can't learn structured language. They can learn to communicate, but they can't learn to communicate in infinitely expressive sentences guided by lawful syntax. And the fact that they can't do that tells us that there is something special about our evolution.

So the question is, how do you explain that in the deep learning framework? People often say, well, ChatGPT, of course, was exposed to more. I think I have the analogy in my book: it's exposed to the same amount of language as if a single human had been continually learning language since the middle of the last ice age or something like that, because that's how much data it's exposed to. But it's a false analogy, because we don't learn language like ChatGPT does. Language models are trained in what you might think of as almost a Lamarckian way, right? If you think of a training episode, whatever happens in one generation of training gets inherited by the next training episode. That's not how we work, right? My memories are not inherited by my kids. So there's this fundamental disconnect. We're Darwinian. The models are, I guess you could call them, Lamarckian.

And so you can't compare the amount of training that ChatGPT has to the amount of training that we have, because it's apples and oranges. What happens in a person's lifetime has been guided, though not in its content (I live in Britain, but if my kids had been born in Japan, they would grow up speaking Japanese), by all of the other generations of learning, which inculcate this predisposition to learn language. And we never think of language models in that way. It's really just like meta-learning. And so Chomsky is right that we are born with priors, because those priors are the earlier cycles of Darwinian evolution, everything that went on before we were born as individuals. So when we talk about data efficiency and try to make claims about data efficiency between biological and artificial intelligence, we need to be really, really specific about whether we're talking about phylogeny or ontogeny, that is, evolution or development. And neither really works as a comparator. It's just more complicated, right?

Is it possible that we're being deceived in some way, though? Because there are certainly computational limitations with neural networks: complexity limitations, learnability limitations. So we know that there are certain types of things that the networks can't do that we can do. And we are susceptible to anthropomorphization. You mentioned this wonderful experiment with a cartoon of arrows interacting with each other, where humans interpret them as agents: the grumpy bully agent experiment.

And there was ELIZA as well, a very simple program which was quite sycophantic, and people really took deep meaning from it.

Is it possible that we're reading more into what's going on here than is actually the case?

Well, it's definitely true that we are intrinsically prone to attribute much more elaborate forms of cognition to non-human agents in general, where simpler explanations may be available. Everyone who is a pet owner will be very familiar with this, right? It's the easiest thing in the world to attribute complex human-like states to your cat or your dog or your hamster when it may or may not be merited. We know that people have been doing this for centuries. Psychologists know about the Clever Hans effect. Very famously, there was a performing horse which apparently could do simple arithmetic, and it did so by repeatedly stamping its hoof the correct number of times to solve a sum. But of course, it wasn't actually doing mathematics. What it was doing was checking whether its trainer gave it an unconscious signal that it should stop tapping. And so, of course, we are always prone to want to impute more complex thoughts and feelings and emotional states, or complex abilities, to models. I don't deny that for a moment. When you look at today's frontier models, that may be going on: we may be thinking, oh, it's really my friend, when actually it's not. But in terms of raw capability, the numbers are the numbers, right? The models are just really good. There's no denying that.

They can't do everything. There are lots of things they can't do, and they're still not fully robust, but they are really good. They're not just Clever Hans. You said something in the book which intrigued me, which is that even cognitive scientists, neuroscientists and psychologists don't really know the answer to the question "what is thinking?" when we talk about these mentalistic properties. And of course there's this intentionality thing, the way we interpret the intentions of cartoon arrows that are interacting with each other.

And Daniel Dennett, of course, coined the term "intentional stance", which is essentially that we need to understand the world. It's a very complex place. And that's perhaps where some of these mentalistic properties come from. But do you subscribe to the idea... I read this wonderful book called The Mind Is Flat by Nick Chater. One of my favorites, yeah. The idea that a lot of these mentalistic properties, even in humans, are perhaps a bit of an illusion. Yeah.

What do you think? I love that book. Yeah, I mean, that book essentially argues that we draw heavily upon prior experience to formulate what we like. In other words, our preferences are not just a product of some internal value function which is different for everyone, you know, you like apples more than oranges and I like oranges more than apples. They're actually due to our memories of our past experiences. So you don't actually like apples more than oranges; you just think you do because you had an apple this morning, and you're like, oh, I had an apple this morning, I must like apples more than oranges. So it's this beautiful theory in which we essentially construct ourselves out of our own actions.

And it can account for an astonishingly broad range of phenomena. Do we do that? I think that's a scientific theory, but in our everyday interaction with other agents, with animals, with technology, we do the opposite, right?

We impute, and this is what Dennett says, right? We impute far more than is due, often. So your car fails to start in the morning and you get cross with it, as if it was just being stubborn. But of course, there's no point in getting cross with it, right? And that is an example of the intentional stance. It is undoubtedly true that, for example, when interacting with the models, people are very, very prone to attribute intentionality, in the technical, philosophical sense of the word, right? In other words, that there is something that it is like to be that thing, right? Yeah.

And people are really prone to attribute that sense, that there is some essence, something it is like to be that thing, to probably all forms of technology, but especially to AI, because it can talk back.

People do that all the time, and it's manifest in so many different ways, in the types of interactions that people have with today's frontier models. Starting with Blake Lemoine, who, as I talk about in the book, famously argued after his interactions with LaMDA that it was sentient. And it's playing out today: two of the top 100 most visited websites in the world are companion applications. These are generative AI systems that are trained to behave as if they are your friend. Why are they so popular? Partly because they're good at that, but they don't have to be that good, because people are really prone to think of them as if they were a person.

That is undoubtedly true. But I think it's possible to hold that view, and to be cognizant of our predisposition to do this, but still to be sober about the capability. I think it's just a different question, right? The capability question is: how do you get something that can, say, solve simultaneous equations if they're posed in natural language? How do you do that? That is a problem that we did not know how to answer in 2018. We know how to answer it now. And the system which we implemented to solve that problem shares high-level computational principles with our best understanding of what the brain is doing.

There are also a lot of things that are different, but it does share those principles. And the most parsimonious explanation for how it can do it is that it's basically drawing on those same principles, in my view. Coming on to the alignment thing a little bit, you said, wouldn't it be amazing if we could have an artificial intelligence that knew what was right epistemically and also what was right ethically? One of the things I'm most proud of about having written this book is that it is now more than a year and a half since I finished writing it, and in the closing chapters I talk about three things that I'm worried about for the future, and those three things are still the three things that I'm worried about. So at least that has not gone stale, which, given the pace of change, is definitely not a given. So I think it's quite surprising. Okay, so what are those three things? So I say:

Number one, I'm worried about the transition from systems that generate information that allows the user to behave in some way, to systems that directly behave on the user's behalf. So what we now call agentic AI. We weren't even calling it that then.

So I'm worried about that. I'm also worried about personalization: the extent to which models, instead of satisfying some general, collective sense of what is right, can be tailored to everyone's individual sense of what is right.

If you're an individual who has a set of beliefs and preferences that you're quite attached to, that sounds like quite a nice idea. Until you think about the fact that there are an awful lot of people out in the world who have beliefs and preferences that you definitely, definitely wouldn't want reinforced, and that reinforcing them is exactly what personalized AI would do. So if you take agentic systems and personalized systems and you put them together, and you imagine what deployment looks like, what it looks like is a vision that the companies have been talking about for several years now, which is personal AI. So everyone has a personal AI, and it is a medium through which they interact with the world. It takes actions on their behalf. It is probably a conduit for information and resources, and it offers a layer of protection, and so on.

So what that really cashes out as is a world in which there is a social economy amongst humans, but there is also a parallel social economy amongst the agents that we have and use to interact with the world. And that might sound a bit sci-fi, but actually I don't think it's all that sci-fi, right? It's really not all that weird to imagine that we will interact with the world in a way that is technologically mediated, because that's what we do already. Almost everything we do is technologically mediated. It's not weird to imagine that the technologies that we use to interact with the world, instead of being rule-based, like they mostly are now, will be optimization-based. They'll have minimal forms of agency. It's like, why not? So you create this multi-agent, parallel... if you like, it's almost like a culture. You can think of it as a culture. And the trouble is that we know that if you build a system, and that system is complex and its parts can interact in complex ways, then you get complex system effects. It can be nonlinear, it has weird dynamics, it can have feedback loops, and so on. And that's exactly what happened in that flash crash. Yeah. And actually there have been, I don't know, maybe dozens of flash crashes, but the most famous one was in 2010, the one that I talk about in the book. So you can think about what the complex system dynamics are that emerge when we are all represented by AI. And the reason why I think we should worry about that is this: you can think of the norms that we've evolved socially and culturally as a set of principles that curtail those complex system dynamics.

So we have evolved in such a way that we have a set of predispositions which generate constraints on our social interaction that, to a large extent, stop those runaway processes. They're not perfect. Sometimes we go to war. Sometimes crazy stuff happens. But for the most part, particularly in reasonably small groups and for long periods, we can live in relatively stable, harmonious societies, right?

But the trouble is that the models won't have those norms. At least, there's no reason why they should have them. And the question is, what are the constraints that prevent the same sort of weird runaway dynamics that might lead to flash-crash-like events? I don't think we have an answer to that. That's why it worries me.

Yes, and designing in constraints would actually limit the technology in quite a strong way. It's a really interesting thing to think about, though, because in the physical world the constraints are quite strict. Then language is a kind of virtual organism that supervenes on us and has more degrees of freedom. And this new type of AI technology that we're inventing arguably, as you say, has even more degrees of freedom. So constraining it is a real challenge. Yeah, absolutely. And I think that even if you had systems which were perfectly aligned, which of course is not an assumption any of us can reasonably make, the sheer pace and volume of activity that AI can generate is not something that our systems are prepared for.

Most systems operate under the assumption that there are reasonable frictions that prevent the system from collapsing. A good example is the legal system, right? Many people know that it is possible, depending on the jurisdiction, to engage in what is often called lawfare: the adversarial use of spurious legal challenges, right?

And there are certain jurisdictions where it's just strictly optimal to do that, because the cost of defending yourself is so high that people will just capitulate and you can make money, right? There are frictions that prevent most people from doing that. Most people don't have legal training. Most people don't know how to do it, or the grounds on which you could do it. It's a lot of work. You've got to file paperwork. There's domain-specific knowledge that you need. If we remove those frictions, so that with a few sentences you can just say, please do this, and you have a system that goes and does it, then you suddenly live in a very different world, because lots and lots of people can do this. There are many, many other such examples. I was speaking with Conor Leahy about this, and he was talking about this phenomenon called the fog of war, which is that we slowly lose control through illegibility. You can imagine, based on what you said, that you have all of these agents... Even when a country is invaded or when some geopolitical event happens, the average person doesn't understand why, because it's the culmination of so many countervailing forces, and these systems are just very complex to understand. So you can imagine a world that becomes so abstract...

And I also wanted to point out that this doesn't require... because some people think of AI as a cultural technology, a bit like a library or something like that. And then there's this almost doomer narrative that it's agentic and this, that and the other. But you don't need it to be strong for all of these things to happen. And so...

The human analogy in AGI, of course, overlooks the fact that although collectively what we've done is astonishing, individually we're actually extraordinarily vulnerable and just not all that good at life in general on our own, right? The classic: you and a chimp on a desert island, my money's on the chimp, right? Yeah.

So our strength is our ability to cooperate. Individually, we are not all that strong, right? So this notion of a lone intelligence that is like us, but much, much better, I think is a strange one. What we should actually worry about is the unexpected externalities that come from linking together lots of potentially weak systems to create something which is probably completely unlike us, and unlike our culture and society, but which we can't control.

And I didn't know the fog of war analogy. That's very nice. But my favorite paper that talked about this recently is from David Duvenaud. He and others have written this really nice paper called Gradual Disempowerment. And it expresses a threat model which I have subscribed to for a really long time, and which I talk about in the book, which is broadly exactly that: that we gradually lock ourselves in to the use of optimization-based technologies, and the complex system interactions between those systems write us out of the equation. And the interesting thing about that analogy, a point which is not made either in the book or in David's paper, is that in a way it's coextensive with what happens anyway, right?

If you think about a corporation, right, the world we have created through hegemonic capitalism, with large corporations, for example: in many ways, large corporations are things that are more powerful than any one person, in that they run under their own imperatives, with their own rules, their own incentives and their own dynamics. And many are so powerful that there is no one person who could stop them, right? So we sort of have a model for what this would look like. It's just that, of course, in the case of large complex systems like the corporation, the interactions are slow, because they're largely human-mediated. It's like email, you know, or Slack, right?

And in that dynamic, humans are the cogs in the machine. Yes. But in the case of AI, it's going to happen at warp speed, right? Yeah.

I suppose it's an interesting time because, I mean, just look at AlphaFold, for example. This technology can potentially be used to revolutionize science. But there are potentially so many downsides as well. What downsides do you think we need to be most cautious about socially? Think about how many products work. Firms advertise products, and they do so by branding those products, right? Branding is a way of trying to get us to engage with something a bit as if it were a human, where that something is maybe not the product itself; maybe it's the company, the brand. And that is more or less successful. Imagine a world in which everything was like that, but it could actually talk back to you, and it could simulate all of the social and emotional types of interaction that we have with people we care about. So the milk in your fridge is like your best friend, right?

This would be a very strange world. Of course, that's a silly example; the milk in the fridge is never going to be your best friend. But, as I mentioned earlier, there are already large numbers of people who are engaging with AI in ways that mimic the sorts of interactions they have with other people. And this creates a whole bunch of vulnerabilities. A lot of people have talked about risks to mental health and so on, and we should be really aware of that, especially where vulnerable people or minors are concerned. But I think there's another issue which is talked about much less, and that is the degree to which this will give the organizations that build these systems power over people. You said that AI increases our agency, and in a way that is true, but I actually think there's also a really powerful sense in which the opposite is true, right?

Access to social media, for example, gives you, in theory, access to lots and lots of information, and that should be empowering. But actually, most people's practical experience of it is that they spend a lot of time doing something that they think is a bit stupid and would rather be doing something else. Yes, I'm glad you brought that up. It's a weird phenomenon. This comes into the labor market disruptions. Initially, for some people, and certainly now, you can fire up Cursor and build a software business in a week. In that sense, it increases your agency. But actually, everybody else has this capability, and in the long term, or even the medium term, it sequesters your agency. It takes your agency away massively. And this is a huge problem. Yeah, I mean, I think that's true. But this is not a problem unique to AI, right? You could see the trend of increasing organization in society the same way.

In a way, it liberates us in lots of ways, right? The things that you can do in a society which is organized collectively are much greater than the things you can do on your own. Your opportunity is enormously increased. But at the same time, in order for that society to function, it has to curtail what you can do, right? And I think you can see technology as the natural culmination of that process. Technology gives us freedoms which we wouldn't otherwise have; we do things we wouldn't otherwise do. And of course, there's an imperative to seize those opportunities, not least because they're usually really beneficial economically.

But it's like a kind of Faustian pact, right? When you buy into that, you lock yourself into the use of that technology.

That plays out in all sorts of trivial ways. How good are you at making a fire from scratch without a box of matches? I don't know about you, but I wouldn't be able to do it, right? I'm locked in. It's a basic thing that we need to survive, and I couldn't do it, right? I know. I wonder whether this would be ameliorated when we have a more diffuse, distributed AI. But you were alluding to Daniel Dennett's counterfeit people article in The Atlantic, and I was lucky enough to interview him about that before he died.

And he was basically saying that when everyone starts talking to... well, you mentioned the milk, but maybe the milk in the fridge will be an agent; everything will be an agent. And unfortunately we start to see this weird behavior in any kind of interaction online: so-called counterfeit people. And we acquiesce, because we just stop participating, because the world suddenly looks very strange to us. But I also see the opposite, which is that we become counterfeit people. If you look at the way people behave on LinkedIn and social media now, it's becoming far more robotic, and the meaning of the entire system almost seems to be eroding. Yeah, yeah, absolutely. You could call it a crisis of authenticity, right? I think you can see this broadly in society, because our modes of interaction become so stylized that we lose that sense of authenticity, right? There are so many dependencies. We always have to present ourselves as being in line with the party line. You just asked me something that I wasn't able to answer, right?

Because I have other dependencies. There is a loss of authenticity in our communications because, in a complex world, we represent many interests. And that, I think, is a natural byproduct of our becoming part of the system. I love this. My favorite metaphor for this is this.

I woke up in the middle of the night and it just hit me. I don't know if you remember Superman 3. Superman 3 is a terrible movie, but there's this wonderful scene. I think there's a giant computer that goes rogue in Superman 3, and there's this wonderful scene where there's this female character, and the machine is just waking up, and she's just walking past it, and the machine sucks her in.

And she gets stuck there. And then what the machine does is it gradually puts armor plating on her and replaces her eyes with lasers, and basically turns her into a sort of automaton. And it's a very compelling scene. I think I was terrified by it as a child, which is probably why I remember it. But that is a sort of metaphor for what is happening to us, right? We're worried about the robots taking over or whatever. But in a way, it's more like us being sucked into the machine, right? We become part of it, just like that poor character. We get turned into something we are not by technology. And this is not a comment that is specifically about AI.

I think this happens to every person who has to go to a press conference, or every person who has to represent their organization or a broader group of people. You become part of that system, and it erodes your authenticity, and in a way it erodes your humanity. Yes. The last time I came to interview you, I went to see Luciano Floridi directly afterwards.

And his argument is quite similar, about us becoming ensconced in the infosphere, and it changing our ontology. Perhaps you're arguing more from an agential point of view, but I think it's quite related. Well, speaking as a psychologist, I think we have dramatically under-indexed on the extent to which what is good for us is actually about our agency, our control, and not about reward. Economics, psychology and machine learning have all grown up with this notion that utility maximization is the fundamental framework for understanding behavior. And that's expressed most prominently in ML, of course, through reinforcement learning.

But when you actually look at what... and of course, everyone needs to be warm and have enough to eat, right? But once those basic needs are satisfied, and even sometimes when they're not, if you look at development, and if you take a sideways view of a lot of both healthy and abnormal psychology, what you can see is that what people really care about is control.

People need to understand, and by control I really mean, formally, your ability to have predictable influence on a system. In machine learning, this often gets quantified as this wonderful notion of empowerment: the idea that what we want to maximize is the mutual information between our actions and future states, either immediate or delayed. And that is agency, by the way. And that is agency. And I think that we really... if you think of kids, just two examples: the extent to which kids will explore the world.

The extent to which they will take actions to try and understand, like, what happens if I tap that thing? Or if I take my dinner and throw it on the floor, what's going to happen? Oh, look, I have control. Or I cry. Oh, look, my dad's going to come. I have control. I can understand that system. That's what they're doing, right? Right through to pathological forms of control in adolescence and adulthood: too much control. In OCD, obsessive-compulsive disorder, for example, you can see a need for too much control. So anyway, I digress. But control is really, really important. And when thinking about the impact of technology on our well-being, I think that conversation needs to be grounded in a robust understanding of how important it is to us to have control, predictable influence on our world. And what a lot of AI, or a lot of technological penetration, actually does is make the effects of our actions unpredictable.

It's like the frustration that happens whenever you interact with a website that doesn't quite work. Or you get a "computer says no" answer. Or there's two-factor authentication, but then there's no internet, and you're like, ah, you've lost control. In the environment that we evolved for, that control is much more readily available.
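The empowerment idea mentioned above can be made concrete with a small calculation. In its usual formalization for a discrete, one-step setting, empowerment at a state is the channel capacity from actions A to next states S', that is, the maximum over action distributions p(a) of the mutual information I(A; S'); a delayed (n-step) version treats a whole action sequence as one compound action. The sketch below is a minimal illustration under those assumptions, not anything taken from the book: the transition tables, the function names `mutual_information` and `empowerment`, and the use of the Blahut-Arimoto algorithm to find the maximum are all illustrative choices. It shows the point about predictable influence numerically: actions with reliable, distinct effects carry more empowerment than actions whose effects are noisy.

```python
import math

# Illustrative sketch. channel[a][s] is an assumed transition table
# p(s' = s | a) for one fixed current state; each row must sum to 1.


def mutual_information(p_a, channel):
    """I(A; S') in bits for action distribution p_a over the given channel."""
    n_states = len(channel[0])
    # Marginal over next states induced by the action distribution.
    p_s = [sum(p_a[a] * channel[a][s] for a in range(len(p_a)))
           for s in range(n_states)]
    mi = 0.0
    for a, pa in enumerate(p_a):
        for s in range(n_states):
            joint = pa * channel[a][s]
            if joint > 0:
                mi += joint * math.log2(channel[a][s] / p_s[s])
    return mi


def empowerment(channel, tol=1e-9, max_iter=10_000):
    """One-step empowerment max_{p(a)} I(A; S') in bits, via Blahut-Arimoto."""
    n_actions, n_states = len(channel), len(channel[0])
    p_a = [1.0 / n_actions] * n_actions  # start from a uniform policy
    for _ in range(max_iter):
        p_s = [sum(p_a[a] * channel[a][s] for a in range(n_actions))
               for s in range(n_states)]
        # c[a] = 2 ** KL(p(s'|a) || p(s')): how distinguishable action a's
        # outcomes are from the average outcome.
        c = [2 ** sum(channel[a][s] * math.log2(channel[a][s] / p_s[s])
                      for s in range(n_states) if channel[a][s] > 0)
             for a in range(n_actions)]
        z = sum(p_a[a] * c[a] for a in range(n_actions))
        # log2(z) and log2(max(c)) bound the capacity from below and above.
        gap = math.log2(max(c)) - math.log2(z)
        # Reweight toward actions whose outcomes are most distinguishable.
        p_a = [p_a[a] * c[a] / z for a in range(n_actions)]
        if gap < tol:
            break
    return mutual_information(p_a, channel)


if __name__ == "__main__":
    reliable = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    random_effects = [[0.25] * 4 for _ in range(4)]
    noisy_switch = [[0.9, 0.1], [0.1, 0.9]]
    for name, ch in [("reliable", reliable), ("random", random_effects),
                     ("noisy switch", noisy_switch)]:
        print(f"{name}: {empowerment(ch):.3f} bits")
```

For example, four actions that reliably lead to four different outcomes give 2 bits, four actions whose outcomes are uniformly random give 0 bits, and two actions whose outcome is flipped 10% of the time give about 0.53 bits, compared with 1 bit for a perfectly reliable switch.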

Isn't that fascinating? There have been studies, I'm sure you're familiar with this one, from the 70s or something like that, where managers in an organisation had lower rates of heart disease because they had more power, and their underlings would get heart disease much more regularly.

And if you think about it, with social media and even with these chatbot platforms... I interviewed the team that built all the engagement-hacking algorithms, and they were incredibly proud that their average session length was 90 minutes. They were talking all about how they would do model merging and send this response and this response to keep users hooked, keep them there for longer. In a sense, dopamine hacking is about giving random rewards.

And it's a disempowering thing, along the lines you described. And that is the modus operandi for all technology now. Yeah, absolutely. A variable reinforcement schedule is the best way to train animals, including humans, and we are susceptible. The unpredictable nature of the reward engages us with the system, because we want to control the system, right? We want to know: how do I make the reward come?

And of course, if you can't, then you keep on trying and trying and trying. Yeah, I mean, we live in a world in which people have a lot of liberty about how they spend their time, and I think that's as it should be. I don't think we should legislate against frivolity, right? If people want to spend a lot of time on TikTok, collectively, I understand that that's bad. But, for better or worse, we live in a world in which that is permissible. We live in a country, at least, in which that is permissible. So what I'm saying is that that kind of hacking, maybe it's undesirable. I might deem it undesirable. But collectively, as a society, there are many things that are undesirable.

Alcohol is also addictive, but I'm probably going to have a beer as soon as this is done. So we make those choices. But where I think we need to be cautious is that there are vulnerabilities. There are people who are uniquely vulnerable, where that kind of liberty to hack, if you like, spills over into something that can be actively harmful and can lead, of course, to self-harm. And there have been tragic cases, as I'm sure you know, in which people have even taken their own lives under influence which came from an AI system with which they were interacting in this kind of companion mode. Do you think it makes sense to think of evolution as having a goal?

Probably not, right? There's a paper that I really like which draws upon the analogy of the blind progress of evolution, right? That it's a selection mechanism that is not teleological. It doesn't have a purpose. It just happens. And it argues that we should think of evolution and training in neural networks in a similar way, right? That it's very blind. And, you know, I think that... Yeah. Yeah.

I think that there is a fundamental difference between evolution and the way that optimization happens, put it that way. And we could learn a lot, in thinking about neural networks, from thinking about the purposeless selection that happens in evolution, basically. It's a really interesting topic for me. I was speaking with Kenneth Stanley the other day, and he's done a lot of work on open-endedness, and of course Tim Rocktäschel works at DeepMind. In Tim Rocktäschel's paper with Edward Hughes, he said that an open-ended system is one that, from the perspective of an observer, produces a sequence of events which are learnable and novel. Yes, exactly. It's about learnability, isn't it? Yeah.

Yeah, Joel Lehman. Have you had Joel Lehman on the show? Yeah, so Joel has written really, really nicely about this. I mean...

Yeah, so I largely share his view, and it's very close to Tim and Ed's view, which is that the world is open-ended, and optimizing for open-ended systems using well-specified, narrow optimization towards a narrow goal is just doomed to failure, right? And there is probably something really deep about the way the purposeless selection that happens in evolution confers robustness, because it doesn't precisely optimize for a narrow goal; rather, what it creates is this astonishing heterogeneity, right? And the optimization algorithms that we use are all completely the opposite. They are basically tailored for homogeneity; heterogeneity is a bug.

And that's why LLMs show mode collapse. It's why you get this Platonic hypothesis, the idea that we're gradually converging towards essentially one common, shared set of representations, right? It's like, yeah. Yeah. Yeah. Evolution doesn't do that. And they wrote this wonderful paper called The Fractured Entangled Representation Hypothesis. Oh, I don't know that paper. So, with Joel, and I'm not sure if Joel was part of this, but he co-wrote Why Greatness Cannot Be Planned, they did this thing called Picbreeder. It was like Flickr, where it was supervised by a diverse source of humans, and the humans could pick interesting image generators, which were CPPNs, compositional pattern-producing networks.

And you could create this phylogeny. And they speak about this concept called deception, which is that the stepping stones that lead somewhere interesting don't resemble the interesting thing. Humans have this idea of what's interesting because we seem to know the world well. And with a few steps in the phylogeny, they found these pictures of butterflies and apples. And when you do parameter sweeps on the networks, because they understand the objects so abstractly, the apple would actually get bigger: one neuron would make it bigger, one neuron would make the stem swing faster.

And if you train a neural network with stochastic gradient descent to do the same thing and you do parameter sweeps, it's like spaghetti all over the place. So their hypothesis, and this seems like an obvious thing to say, is that if we could have a sparse representation which mirrored the world, then we could make creative leaps, because the knowledge is evolvable, and we could trust it with autonomy, because it would do the right thing. Yeah, that's amazing. I don't know this paper; it sounds like I should read it. I mean, the idea that it's difficult to get places because the interim states are not highly valuable, I guess this is a very old argument. This is the basis of Paley's watchmaker argument, right? How did we ever get the eye? You couldn't possibly evolve that; it's just too complicated. But those gradients must be there, right? The gradients are there.

I have to say, Professor Summerfield, the prose, the way that you've written this book, is very impressive to me. It's one of the best-written pieces of writing I've ever seen. And it occurred to me to wonder whether you were deliberately making it so creative that it couldn't possibly be mistaken for AI-generated content. And I don't know whether, maybe, my standards are so low now because of, you know, enshittification and all of that. But it was remarkable. So what were you thinking? Were you leaning into the creativity a little bit? I love to write. I love to find new ways to explain things, to convey ideas. So for me, it's a selfish pleasure. It didn't cross my mind that people might think that I had used ChatGPT to write the book. But I guess in hindsight, that's a very sensible way of thinking about it. But no, it was all me. That is mind-blowing. Professor Summerfield, it's been an absolute honour. Thank you so much. Thank you.