“Self-prediction acts as an emergent regularizer” by Cameron Berg, Judd Rosenblatt, Mike Vaiana, Diogo de Lucena, florin_pop, AE Studio

LessWrong (30+ Karma)


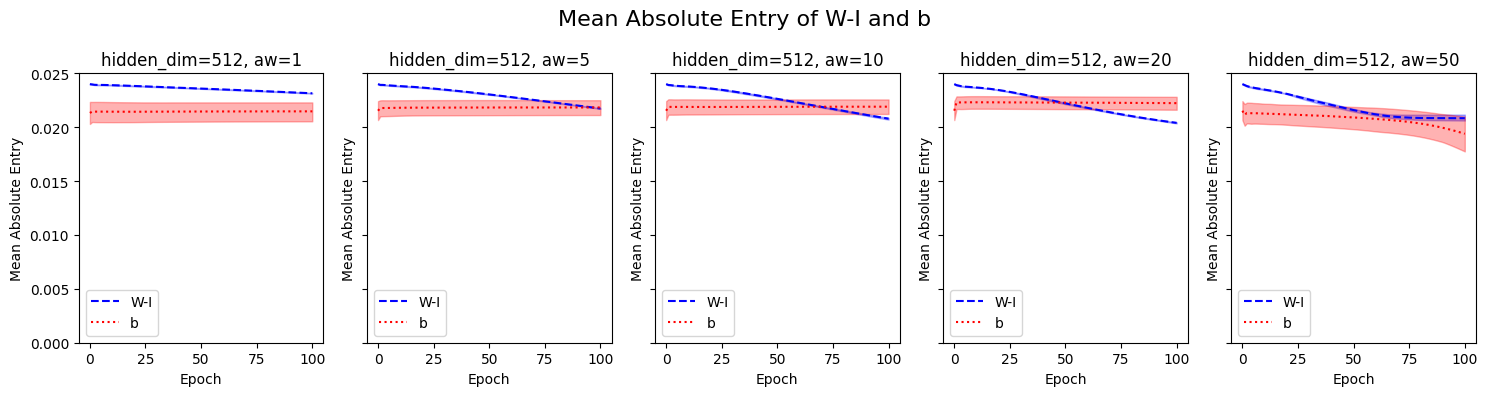

**TL;DR:** In our recent work with Professor Michael Graziano (arXiv, thread), we show that adding an auxiliary self-modeling objective to supervised learning tasks yields simpler, more regularized, and more parameter-efficient models. Across three classification tasks and two modalities, self-modeling consistently reduced complexity (lower RLCT, narrower weight distribution). This restructuring effect may help explain the putative benefits of self-models in both ML and biological systems. Agents that self-model may be reparameterized to better predict themselves, predict others, and be predicted by others. Accordingly, we believe that further exploring the potential effects of self-modeling on cooperation is a promising, neglected approach to alignment. This approach may also exhibit a 'negative alignment tax' to the degree that it ends up both enhancing alignment and rendering systems more globally effective.
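To make "adding an auxiliary self-modeling objective" concrete, here is a minimal sketch of how such a combined loss might be computed for a single example. It assumes the auxiliary target is a vector of the network's own hidden activations and that the two terms are combined with a scalar weight; the function names, the `aux_weight` parameter, and the choice of mean squared error are illustrative assumptions, not details taken from the paper.

```python
import math

def softmax(logits):
    # Subtract the max logit for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, label):
    # Primary supervised objective: negative log-likelihood of the true class.
    return -math.log(softmax(logits)[label])

def mse(pred, target):
    # Mean squared error between predicted and actual activations.
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)

def self_modeling_loss(logits, label, hidden, hidden_pred, aux_weight=1.0):
    # Hypothetical combined objective: classification loss plus a weighted
    # penalty for mispredicting the network's own hidden activations.
    # aux_weight is an assumed hyperparameter, not a value from the paper.
    return cross_entropy(logits, label) + aux_weight * mse(hidden_pred, hidden)

# Example: two-class logits, a 2-unit hidden layer, and an imperfect self-prediction.
loss = self_modeling_loss(
    logits=[0.0, 0.0], label=0,
    hidden=[1.0, -1.0], hidden_pred=[0.0, 0.0],
    aux_weight=0.5,
)
```

In this framing the self-prediction term vanishes when the network predicts its own activations exactly, so any pressure it adds comes from making the activations easier to predict, which is one way to read the regularizing effect described above.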

**Introduction** In this post, we discuss some of the core findings and implications of our recent paper, Unexpected Benefits of Self-Modeling in [...]

Outline:

(00:07) TL;DR:

(01:06) Introduction

(03:06) Implementing self-modeling across diverse classification tasks

(04:08) How we measured network complexity

(05:19) Key result

(06:03) Relevance of self-modeling to alignment

(07:07) Challenges, considerations, and next steps

(08:50) Appendix: Interpreting Experimental Outcomes

(09:07) Does the Network Simply Learn the Identity Function?

(10:25) How Does Self-Modeling Differ from Traditional Regularization?

The original text contained 1 footnote which was omitted from this narration.

The original text contained 3 images which were described by AI.

First published: October 23rd, 2024

Source: https://www.lesswrong.com/posts/5se67gAcaExEYdCCg/self-prediction-acts-as-an-emergent-regularizer

---

Narrated by TYPE III AUDIO.


Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.
