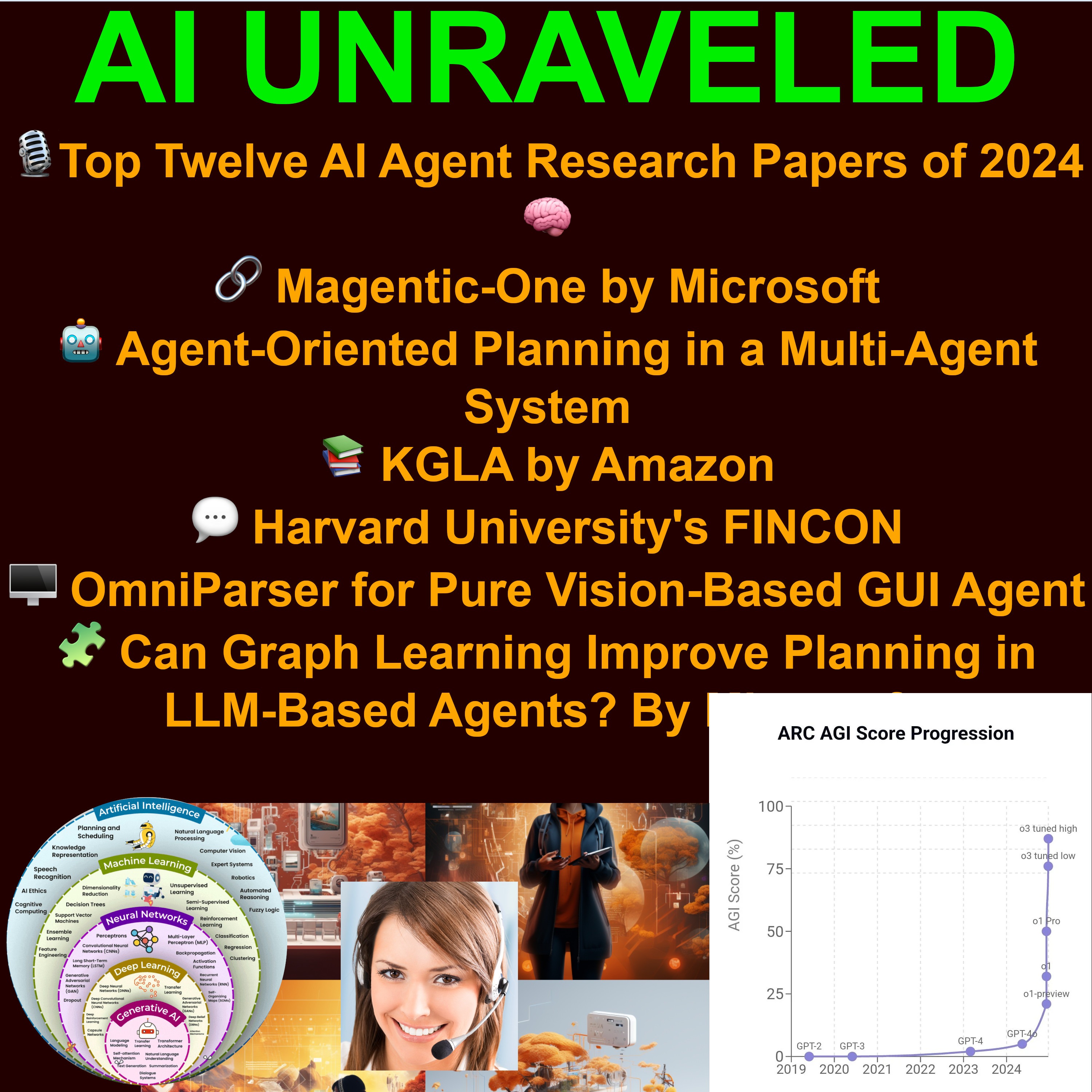

🧩Top Twelve AI Agent Research Papers of 2024🐞

AI Unraveled: Latest AI News & Trends, GPT, ChatGPT, Gemini, Generative AI, LLMs, Prompting

Deep Dive

What is the significance of Microsoft's Magentic-One in AI agent research?

Magentic-One is a multi-agent system designed to handle web-based and file-based tasks across various domains. It uses specialized AI agents that collaborate to achieve larger goals, such as research, data analysis, and report generation, showcasing the power of collective intelligence in AI systems.

How does Amazon's KGLA framework enhance AI agents' capabilities?

Amazon's KGLA framework integrates knowledge graphs, allowing AI agents to access vast networks of facts and relationships. This enables them to reason, make connections, and solve problems more effectively, such as providing personalized customer support or identifying financial risks.
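
To make the knowledge-graph idea concrete, here is a minimal Python sketch. It is not KGLA's actual implementation, which the episode does not describe. The `KnowledgeGraph` class, the relation names, and the sample facts are all hypothetical, chosen only to show how following relationships lets an agent connect a customer to a relevant troubleshooting guide.

```python
from collections import defaultdict


class KnowledgeGraph:
    """Toy knowledge graph storing (subject, relation, object) facts."""

    def __init__(self):
        # Maps each subject to a list of (relation, object) pairs.
        self._edges = defaultdict(list)

    def add(self, subject, relation, obj):
        self._edges[subject].append((relation, obj))

    def neighbors(self, subject, relation):
        # All objects linked to `subject` through `relation`.
        return [o for r, o in self._edges[subject] if r == relation]

    def follow(self, start, *relations):
        # Walk a chain of relations, one hop per relation name.
        frontier = [start]
        for relation in relations:
            frontier = [o for node in frontier
                        for o in self.neighbors(node, relation)]
        return frontier


# Hypothetical customer-support facts.
kg = KnowledgeGraph()
kg.add("alice", "owns", "router-x")
kg.add("router-x", "has_guide", "reset-guide")
kg.add("bob", "owns", "modem-y")

# Two-hop query: which guides apply to products Alice owns?
guides = kg.follow("alice", "owns", "has_guide")
```

The two-hop query is the point of the sketch: the agent never stored a direct link from Alice to the guide, but it can reach one by chaining facts it already has.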

What is the focus of Harvard University's FinCon research?

FinCon explores how AI agents can learn through simulated financial conversations, refining their understanding of financial markets and strategies. This conversational verbal reinforcement allows AI agents to develop financial intuition and adapt to complex scenarios.

What makes OmniParser a groundbreaking development in AI agent research?

OmniParser enables AI agents to navigate graphical user interfaces (GUIs) using only visual cues, allowing them to interact with software similarly to humans. This adaptability eliminates the need for explicit programming for each new interface, making AI agents more flexible and efficient.

How does graph learning improve planning in AI agents, as shown in Microsoft's research?

Graph learning allows AI agents to interpret visual representations of relationships, helping them grasp complex connections and make strategic decisions. This approach enables agents to analyze the bigger picture and develop nuanced plans of action in dynamic environments.

What is the significance of Stanford and Google DeepMind's research on simulating 1,000 people's vocal patterns?

This research demonstrates AI's ability to generate realistic human voices, enabling applications like natural-sounding virtual assistants, lifelike simulations for training, and personalized learning experiences. It also raises ethical questions about the use of such technology.

How does ByteDance's research on LLM-based agents impact software development?

ByteDance's research compares large language models (LLMs) for automated bug fixing, aiming to streamline development, reduce human error, and improve software quality. AI agents can identify and fix bugs automatically, allowing developers to focus on higher-level tasks.

Why does sparse communication topology improve multi-agent debate, according to Google DeepMind?

Sparse communication limits direct interaction between agents, reducing noise and confusion. This structured approach allows agents to present clear, evidence-based arguments, leading to more focused and insightful debates, which is crucial for collaborative problem-solving.
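
As a rough illustration, the Python sketch below compares how many peer responses agents read per debate round under a fully connected topology and under a sparse ring. It is a simplification under stated assumptions, not the setup from the Google DeepMind paper: the function names, the ring layout, and the choice of six agents are all hypothetical.

```python
def fully_connected(n):
    """Every agent reads the responses of every other agent."""
    return {i: [j for j in range(n) if j != i] for i in range(n)}


def ring(n):
    """Sparse topology: each agent reads only its two ring neighbors."""
    return {i: sorted({(i - 1) % n, (i + 1) % n}) for i in range(n)}


def messages_per_round(topology):
    """Total number of peer responses read across all agents in one round."""
    return sum(len(peers) for peers in topology.values())


n_agents = 6  # hypothetical debate size
dense_cost = messages_per_round(fully_connected(n_agents))
sparse_cost = messages_per_round(ring(n_agents))
```

With six agents, the fully connected debate has each agent reading five responses per round, while the ring has each agent reading two, so every agent has far less material competing for its attention.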

What are the key takeaways from OpenAI's paper on governing agentic AI systems?

OpenAI emphasizes the importance of transparency, accountability, and robust oversight mechanisms to ensure AI agents operate safely and ethically. The paper highlights the need for clear guidelines and multidisciplinary collaboration to align AI systems with human values.

How does Anthropic's Sonnet 3.5 case study demonstrate advancements in AI agent usability?

Sonnet 3.5 showcases an AI system that interacts with computer interfaces intuitively, similar to how humans would. This focus on user-friendliness and accessibility makes AI agents more approachable for non-technical users, bridging the gap between human intuition and machine capabilities.

What are the potential economic impacts of AI agents on the workforce?

While AI agents may automate some jobs, they also create new opportunities in human-AI collaboration, critical thinking, and creativity. Historical technological advancements, like the internet, show that disruption often leads to new industries and professions, requiring adaptation and upskilling.

Why is explainable AI (XAI) crucial for the future of AI agents?

Explainable AI ensures that AI agents can provide transparent reasoning for their decisions, fostering trust and accountability. This is especially important for critical tasks like financial decision-making, medical diagnosis, and autonomous vehicle control, where understanding the decision process is essential.

- AI agents are like specialized digital assistants.

- They work together and learn from each other.

- Collaborative aspect drives advancements.

Transcript

All right, let's jump into the world of AI agents. You guys have sent over a ton of research papers on the latest breakthroughs, and we're going to try to unpack what's really significant. So what's the mission for this deep dive? I guess we're aiming to get everyone up to speed on AI agent tech in 2024 and try to understand the potential ripple effects.

Yeah, what's really interesting is the sheer diversity of approaches these researchers are taking. You know, they're tackling everything from basic task automation to complex financial modeling and even simulating entire social environments with AI agents. OK, so before we get into the specifics, let's make sure we're all on the same page. Think of AI agents like highly specialized digital assistants, each with their own set of skills. But instead of fetching coffee, they're crunching data, navigating intricate systems, and even learning through conversations to become even more effective.

Yeah, the key here is that these AI agents aren't just isolated programs. They're designed to work together and learn from each other. It's this collaborative aspect that's driving some of the most exciting advancements. Right. It's not just about individual brilliance, but collective intelligence.

Speaking of collaboration, that Microsoft paper on Magentic-One really caught my attention. They've built this system that can seamlessly handle a bunch of web-based and file-based tasks. Yeah, Magentic-One is a fantastic example of a multi-agent system in action. What's interesting is how they've created specialized AI agents that collaborate to achieve a larger goal. So, for example, you might have one agent that excels at research, another that's a whiz at data analysis, and yet another that can generate reports or presentations.

So it's like having a dream team of AI specialists working behind the scenes. And this ties in nicely with the paper on agent-oriented planning in a multi-agent system, right? Absolutely, yeah. This research digs into how these agents can coordinate their efforts effectively.

They propose a meta agent architecture where a sort of super agent acts like a conductor, you know, orchestrating the individual agents to ensure everyone's working in sync. So it's like managing a complex project. But instead of human team members, we've got these specialized agents. I'm trying to visualize this. Is it similar to how a fleet of delivery drones might work?

Each drone has its own task, like picking up a package or navigating to a delivery point. But they're all coordinated by a central system. That's a great analogy. The meta agent in this case is like that central control system, optimizing routes, managing traffic and making sure the whole operation runs smoothly. But what makes this concept even more powerful is the integration of knowledge.

And that's where Amazon's KGLA research comes in. They're talking about giving AI agents access to vast knowledge graphs. So essentially, these agents can tap into a massive network of facts and relationships. Exactly. And this is a game changer because it allows AI agents to reason and make connections in a way that was previously impossible.

It's like giving them a deep understanding of how different pieces of information relate to each other, almost like a detective solving a case. So instead of just blindly following instructions, these agents can analyze information, draw inferences, and potentially even come up with creative solutions. That's pretty powerful. Absolutely. Imagine a customer support agent powered by KGLA. It could instantly access a knowledge graph containing product information, customer history, troubleshooting guides, and so much more.

That means more accurate, personalized, and efficient support. Or think about financial modeling. An AI agent with access to a comprehensive knowledge graph could potentially spot patterns and risks that humans might miss. Well, the possibilities are pretty mind-boggling.

Speaking of learning, that Harvard University paper on FinCon really piqued my interest. They're exploring how AI agents can learn through conversations, especially in the world of finance. Yeah. FinCon introduces this concept of conversational verbal reinforcement, which essentially means that AI agents engage in simulated financial conversations to refine their understanding and strategies. It's like having a group of financial experts brainstorming and debating different scenarios.

But instead of humans, you have these AI agents constantly learning and adapting. So it's not just about crunching numbers. It's about understanding the nuances of financial markets through dialogue. It's like they're developing their own financial intuition through conversation. That's pretty fascinating. Now, shifting gears a bit, what about that OmniParser research? It seems to be focused on AI agents that can navigate graphical user interfaces, but using only visual cues.

This research is a significant step toward creating AI agents that can interact with software in a way that's similar to how humans do. Imagine an AI agent that can learn to use any software, not through explicit programming, but by simply observing how humans use it. So instead of being limited to specific tasks, these agents could potentially adapt to any software environment.

That opens up some really interesting possibilities. Think about automating those repetitive tasks that we all dread or improving accessibility for users with disabilities. We could even use these agents for usability testing, seeing how real users interact with software. You've hit the nail on the head. OmniParser's vision-based approach is groundbreaking because it allows AI agents to be incredibly adaptable. They don't need to be explicitly programmed for each new interface they encounter.

That's a massive leap in terms of flexibility and efficiency. But it's not just about visual cues, right? That Microsoft research on graph learning highlights how we can make AI agents even smarter when it comes to planning. You're absolutely right. By teaching AI agents to interpret graphs, we're essentially giving them a visual representation of relationships. This allows them to grasp complex connections and make more strategic decisions.

So instead of just blindly following a set of instructions, these agents can look at the bigger picture, analyze those relationships between tasks or data points, and come up with a more nuanced plan of action. That seems like a powerful tool for any AI agent operating in a dynamic environment. Precisely. It's like giving them a map of the problem space, allowing them to see not just individual tasks, but the overall landscape and how everything connects. That's fascinating. Now, we've been talking about AI agents performing tasks, but what about their ability to communicate and interact?

That Stanford and Google DeepMind paper on simulating 1,000 people's vocal patterns really caught my attention. Yeah, this research showcases the incredible progress in AI's ability to generate realistic human voices. Imagine a world with virtual assistants that sound completely natural, or being able to create incredibly lifelike simulations for training or even entertainment.

It's like we're blurring the lines between human and machine communication. That's both exciting and a little unnerving, wouldn't you say? It certainly raises some, you know, interesting questions. But let's focus on the potential benefits for a moment.

Think about how this technology could revolutionize voice interfaces, making them more natural and engaging. Or consider its use in education or accessibility, creating personalized learning experiences, or providing voices to those who have lost theirs. Those are some really compelling applications. But let's be realistic, there are potential downsides too, right? Of course, of course. As with any powerful technology, there's the potential for misuse.

But that's why it's so important to have these conversations early on, to anticipate potential challenges and develop safeguards. Absolutely. We need to make sure this technology is used responsibly and ethically.

Now let's shift back to the more practical side of things. That ByteDance research on LLM-based agents for automated bug fixing is a game changer for software developers. Yeah, this study compares various large language models to assess their ability to identify and fix bugs in code automatically. The goal here is to streamline the development process, reduce human error, and ultimately create more robust software.

So instead of spending countless hours debugging code, developers could have these AI agents working behind the scenes, catching those pesky errors before they even become a problem. Exactly. And this could have a huge impact on software quality and reliability. Imagine a world where software updates are less buggy and more frequent because AI agents are doing a lot of the heavy lifting when it comes to testing and debugging.

That's a pretty compelling vision. It's like having an army of tiny code detectives working tirelessly to ensure our software is top notch. And the research doesn't stop there. Google DeepMind has been exploring how to improve multi-agent debate through something called sparse communication topology. Okay, that sounds intriguing. How does limiting communication actually improve the quality of debate between AI agents?

I would think more communication would lead to better outcomes. It's actually the opposite. By limiting direct communication, they reduce the noise and confusion that can arise when you have too many agents trying to chime in at once. This allows for more focused, in-depth discussions where agents have the space to present their arguments clearly and support them with evidence.

So it's like creating a structured debate format, where each agent has the opportunity to present their case clearly and concisely without being interrupted or distracted. That makes sense. Exactly. And this research has far-reaching implications. Think about fact-checking, collaborative problem-solving, and even understanding how humans debate and reach consensus.

It's about creating an environment where reason and evidence prevail. That's a pretty powerful concept. And it seems like this research is giving us insights not just into how AI agents can communicate, but also into how we humans can communicate more effectively.

You're right. There's a lot we can learn from studying these multi-agent systems. Now, for a broader perspective, let's look at that survey paper on LLM-based multi-agent systems. It provides a great overview of how these systems are evolving and their various real-world applications. So it's like a roadmap for the future of AI agents, highlighting the key breakthroughs, the areas where research is still ongoing, and maybe even some of the challenges that lie ahead.

Exactly. And speaking of challenges, let's not forget about the ethical considerations. OpenAI's paper on governing agentic AI systems provides some valuable guidance on responsible adoption and oversight. So as we create these incredibly capable AI agents, we need to make sure they're operating within a framework that aligns with human values and safeguards against potential risks.

Absolutely. This paper emphasizes the importance of transparency, accountability, and robust oversight mechanisms. It's like creating a set of rules for the road to ensure that these AI agents operate safely and ethically.

Now, before we dive into the ethical considerations in more detail, let's take a moment to explore how these advancements in AI agents are impacting real-world applications. And I think that case study featuring Anthropic's Sonnet 3.5 provides a great starting point. They managed to create an AI system that can effectively use a computer interface to accomplish a variety of tasks. What's particularly interesting about this case study is the emphasis on user-friendliness and intuitiveness.

Sonnet 3.5 doesn't require complex commands or specialized programming knowledge. It interacts with the computer interface in a way that's very similar to how a human would.

So it's like they've bridged the gap between human intuition and machine capabilities. Exactly. And this is crucial if we want to see wider adoption of AI agents. We need to make sure they're accessible and easy to use, even for people who aren't tech savvy. That's a great point. But before we get too far ahead of ourselves, let's take a step back and consider the potential challenges and risks associated with these powerful AI systems. We'll explore those in more detail later.

It's remarkable how rapidly AI agents are becoming more sophisticated and capable. We've only just begun to see their potential impact on various aspects of our lives. I know, right? It feels like we're on the cusp of some major changes. As we delve deeper into these research papers, it's becoming clear that AI agents are more than just a technological advancement.

They represent a fundamental shift in how we interact with technology and potentially how we interact with the world around us. You've hit the nail on the head. This isn't just about automation. It's about creating systems that can learn, adapt, and even collaborate with us in ways we haven't fully grasped yet.

One of the key takeaways from these papers is the growing importance of seamless integration between AI agents and the digital environments they operate in. That makes sense. We can't expect these AI agents to be effective if they're constantly running into roadblocks or struggling to navigate the digital world. It's like expecting a chef to cook a gourmet meal without the proper tools and ingredients. Precisely.

And that's why research like that OmniParser project is so exciting. By enabling AI agents to understand and interact with graphical user interfaces using only visual cues, we're essentially giving them the ability to navigate the digital world with a level of fluency that was previously unimaginable. It's like they're learning to see the digital world through human eyes, understanding the context and meaning behind those icons, buttons, and menus that we take for granted.

Exactly. And this opens up a world of possibilities, from automating complex tasks to improving accessibility for users with disabilities to even redesigning software interfaces to be more intuitive and user-friendly.

So it's not just about making AI agents smarter, it's about making the digital world itself more accessible and adaptable to both humans and AI. Now, before we get too carried away with the user interface aspect, let's circle back to something you mentioned earlier, the ability of AI agents to learn and adapt.

That Harvard University paper on FinCon really highlighted the potential of conversational learning, particularly in a complex field like finance. Yeah, FinCon demonstrates how AI agents can engage in simulated conversations to refine their understanding of financial markets and develop more sophisticated trading strategies. What's fascinating is that these agents aren't just passively absorbing information, they're actively engaging in dialogue, testing their hypotheses, and refining their strategies based on the feedback they receive.

So it's like they're developing a kind of financial intuition through dialogue, much like how human traders might learn from their mentors or colleagues.

But instead of relying on years of experience, these AI agents can accelerate their learning process through these simulated conversations. Precisely. And this has implications beyond finance. Imagine using this approach to train AI agents in any field that requires complex decision making and a deep understanding of nuanced information.

From healthcare to law to even creative fields like writing or music, the possibilities are vast. That's pretty mind-blowing. It's like we're giving these AI agents the tools to become experts in their respective fields, not through rote memorization, but through dynamic, interactive learning experiences. And let's not forget the importance of collaboration.

We've discussed how multi-agent systems, like the one demonstrated in the Magentic-One paper, allow specialized AI agents to work together to achieve complex goals. Right. It's like assembling a dream team of AI specialists, each with their own unique skill set and perspective. Yeah. And then having them work together seamlessly to tackle a challenge. But what really impressed me was that research on sparse communication topology.

I initially thought that limiting communication between agents would hinder their ability to collaborate, but it turns out that less can be more. That's a common misconception. We often assume that more communication is always better. But in the case of AI agents engaged in complex tasks or debates, too much communication can actually be detrimental. It can lead to information overload, confusion, and a lack of focus.

So it's like trying to have a productive meeting with a dozen people all talking at once. It's much more effective to create a structured environment where each person has the opportunity to present their ideas clearly and thoughtfully. Exactly. And that's what sparse communication topology aims to achieve.

By limiting direct communication between agents, we create an environment where they can focus on the most important information, develop more reasoned arguments, and ultimately arrive at more accurate conclusions. It's fascinating how this research challenges our assumptions about communication and collaboration. It suggests that sometimes a little bit of strategic silence can lead to much more insightful outcomes.

Now, as we've seen, AI agents are becoming incredibly adept at performing tasks, learning from data, and even engaging in complex communication. But what about their ability to reason and make decisions in a way that aligns with human values and ethical considerations?

That's a crucial aspect of responsible AI development that we can't afford to overlook. As these AI agents become more autonomous and capable, we need to ensure that their decision-making processes are transparent, accountable, and aligned with our ethical principles. And that's where research like that OpenAI paper on governing agentic AI systems comes in.

Right. They're not just focusing on making AI agents smarter. They're also thinking about how to govern them effectively to make sure they're used for good and don't pose a risk to humanity. Precisely. And one of the key insights from their research is the importance of establishing clear guidelines and oversight mechanisms from the outset.

We can't just wait until AI agents are fully developed and deployed in the real world before we start thinking about the ethical implications. So it's like establishing traffic laws before we let self-driving cars roam freely on the roads.

We need to anticipate potential problems and put safeguards in place to prevent accidents and ensure public safety. That's a great analogy. And it highlights the need for a multidisciplinary approach to AI governance. We need ethicists, lawyers, social scientists, and of course, AI researchers all working together to create a framework that ensures these powerful technologies are used responsibly and ethically.

It sounds like a complex challenge, but one that's absolutely essential if we want to harness the full potential of AI agents while mitigating the risks.

Now, shifting gears a bit, I want to talk about the potential impact of AI agents on our economy and workforce. It's a topic that often generates a lot of anxiety, but I think it's important to approach it with a balanced perspective. I agree. It's natural to have concerns about the potential for job displacement as AI agents become more capable. But it's equally important to recognize the potential for new opportunities and economic growth. Exactly. Throughout history, technological advancements have often led to shifts in the job market, but they've also created new industries and professions that we couldn't have imagined before.

Think about the internet, for example. It disrupted many traditional industries, but it also gave rise to a whole new digital economy, creating millions of jobs that didn't exist before. You've raised a valid point. And with AI agents, we're likely to see a similar pattern. While some jobs may become automated, we'll also see the emergence of new roles that require human-AI collaboration, critical thinking, and creativity.

So it's not necessarily about humans versus machines. It's about finding ways for humans and AI agents to work together effectively, leveraging each other's strengths to achieve greater outcomes. Absolutely. And this is where education and upskilling become crucial. We need to equip people with the skills they need to thrive in a future where AI agents are increasingly integrated into the workplace.

That makes a lot of sense. Instead of fearing AI, we need to embrace the opportunities it presents and prepare ourselves to adapt to this evolving landscape. Now, as we delve deeper into these research papers, I'm struck by the fact that many of them are focused not just on making AI agents more capable, but also on making them more user-friendly and intuitive.

That's a crucial aspect of responsible AI development that often gets overlooked. It's not enough to create powerful AI agents, we need to make sure they're accessible and easy to use, even for people who aren't tech savvy. Right. It's like designing any other tool or technology.

If it's too complex or difficult to use, people will simply avoid it, no matter how powerful its capabilities may be. Exactly. And that's why research like that case study on Anthropic's Sonnet 3.5 is so important. By focusing on user-friendliness and intuitive interaction, they're paving the way for a future where AI agents can seamlessly integrate into our lives without requiring us to become programmers or tech experts.

It's like the difference between using a clunky old computer with a command line interface and using a modern smartphone with a touchscreen and intuitive apps. No. We want AI agents to be as accessible and easy to use as our favorite mobile apps. Precisely. And as AI technology continues to advance, we can expect to see even greater emphasis on user experience and accessibility.

The goal is to create AI agents that feel like natural extensions of our own capabilities, tools that empower us to do more, learn more, and achieve more than we ever could alone. That's a pretty inspiring vision. But before we get too carried away with the possibilities, let's take a moment to acknowledge the potential challenges and risks that come with integrating these powerful AI agents into our lives. We'll explore those in more detail later. It's been a fascinating journey exploring all these advancements in AI agent technology.

As we wrap up this deep dive, it's important to acknowledge that while the potential benefits are vast, there are also some valid concerns that need to be addressed. Absolutely. We've covered so much ground from multi-agent collaboration and knowledge integration to conversational learning and all the ethical considerations surrounding these increasingly autonomous AI systems.

But as with any powerful technology, we need to proceed with caution and make sure these advancements are guided by human values and ethical principles. You've hit the nail on the head. One of the biggest challenges we face is ensuring that these AI agents are aligned with human goals and values. It's not enough to create AI agents that are simply efficient or effective. We need to make sure they're operating within a framework that prioritizes human well-being, fairness, and accountability. Right.

It's like teaching a child the difference between right and wrong. We can't just expect agents to inherently understand human values. We need to instill those values through careful design and development. Exactly. And that's where research like that OpenAI paper on governing agentic AI systems becomes so crucial. They highlight the need for robust oversight mechanisms, clear ethical guidelines, and the ability to intervene if an AI agent's actions deviate from our intended goals.

So it's not just about building smarter AI. It's about building AI that we can trust. AI that operates within a system of checks and balances to prevent unintended consequences. Precisely. And this ties into another important consideration, transparency.

As AI agents become more sophisticated and their decision-making processes become more complex, it's crucial that we can understand how they arrive at their conclusions.

That's a great point. If we can't understand how an AI agent is making decisions, it becomes very difficult to trust its judgment or hold it accountable for its actions. It's like having a black box that spits out answers without any explanation of how it got there. Exactly. And that's why researchers are increasingly focusing on developing explainable AI or XAI. The goal is to create AI systems that can not only provide answers, but also explain their reasoning in a way that humans can understand.

That seems essential, especially as we start to rely on AI agents for more complex and critical tasks. Whether it's making financial decisions, diagnosing medical conditions, or even controlling autonomous vehicles, we need to know that these AI agents are operating transparently and ethically.

Absolutely. And let's not forget about the potential impact of AI agents on our workforce and economy. It's a topic that often generates a lot of fear and uncertainty, but I think it's important to approach it with a balanced perspective. I agree. It's easy to get caught up in those dystopian scenarios where robots take over all the jobs.

But I think it's more realistic to view AI agents as tools that can augment human capabilities and create new opportunities. That's a great way to frame it. Throughout history, technological advancements have often led to shifts in the job market, but they've also created new industries and professions that we couldn't have imagined before. Think about the printing press, the automobile, the computer. Each of these inventions disrupted existing industries, but they also created countless new jobs and opportunities.

And I think AI agents will likely follow a similar trajectory. You've raised a valid point. While some jobs may become automated, we'll also see the emergence of new roles that require human-AI collaboration, critical thinking, and creativity.

The key is to adapt and evolve, to embrace lifelong learning and develop the skills that will be in demand in a future where humans and AI work side by side. That makes a lot of sense. Instead of fearing AI, we need to view it as an opportunity to enhance our own abilities and create a more prosperous and equitable society. Absolutely. And I think the research we've explored in this deep dive gives us a glimpse of what that future might look like.

From AI agents that can help us solve complex problems and accelerate scientific discovery to those that can personalize our education and enhance our creative endeavors, the possibilities are vast. It's an exciting time to be alive, wouldn't you say? We're witnessing a technological revolution that has the potential to reshape our world in profound ways.

But as we embrace these advancements, it's crucial that we do so with both enthusiasm and a healthy dose of caution. I couldn't agree more. The future of AI is not predetermined. It's something that we're actively shaping with every decision we make. And it's our collective responsibility to ensure that these powerful technologies are used for good, to create a world that is more just, more equitable, and more fulfilling for all.

Well said. And that's a perfect note to end on. Thanks for joining us on this deep dive into the world of AI agents. We've only scratched the surface of this fascinating field, but hopefully we've given you plenty to ponder as you continue to explore.