Fabricated AI Research, AI Phishing Emails, AI Package Theft Detection

Last Week in AI

Hello and welcome to Skynet Today's Let's Talk AI podcast, where you can hear AI researchers chat about what's actually going on with AI. This is our latest Last Week in AI episode, in which you get summaries and discussion of some of last week's most interesting AI news. I'm Dr. Sharon Zhou. And I am Andrey Kurenkov. And this week we'll discuss new robotics for warehouse automation, AI that helped identify ketamine as a potential treatment for a rare disease, papers with tortured phrases, and a couple of fun and interesting news stories. So let's dive straight into our applications of AI articles. The first one is "A new generation of AI-powered robots is taking over warehouses," and this is coming from MIT Technology Review.

This article gives an overview of many of the new startups that are creating AI-powered robots for factories and warehouses. These robots can manipulate objects of different shapes and sizes, which opens up a whole new set of tasks for automation. Yeah, I enjoyed this article a lot because, as a robotics researcher, I've been seeing this trend. There have probably been a dozen startups doing this over the last two years.

And it also mentions that there are already about 2,000 of these robots deployed. So yeah, I think it's an interesting thing to be aware of, and this article does it justice.

Yeah, the article mentions that the robots can do simple tasks like picking up objects and putting them in boxes, which is itself non-trivial. It needs perception; it needs computer vision. But I guess it's still TBD how well they handle flimsier materials, or even transparent ones, where it's not clear where the item actually is or whether it's in a box or not. Yeah.

And how much hype is there in terms of dexterity in robots? At some point in the article, someone says we can replace all of this and that there can be general dexterity, but that still feels far away.

Yeah, yeah. I think this article is pretty well written. It doesn't overclaim very much. I do think that if you're not in robotics, you might be surprised by how little we can actually do with robots. Most of these startups are working with two-finger grippers, so you just clamp down to pick something up.

Or you have vacuum suction, basically a vacuum to pick things up. So you're not using five fingers, and you're not using two hands. You're essentially using a single hand to pick things up and move them from one box to another. But even doing that goes way beyond what is currently in factories, which is mostly robots doing the same movement over and over without any adaptation to their surroundings or any sensing. So these are a lot more AI-based than existing robotics in terms of manipulating objects. Navigational robotics is a bit more advanced. So yeah, I think this is a good article as far as the dexterity aspect goes.

Yeah, it's exciting to see where this heads. Of course, there are concerns with respect to employment, right? But, you know, some of the companies have spoken out to say that they have more jobs for humans to work alongside these robots. And the robots are mainly doing certain specific tasks, not everything, not yet at least.

Yeah, I think it'll be a gradual shift, because beyond just building robots, maybe an even more complicated part is retrofitting or adapting the warehouses and factories themselves, since I imagine that's pretty complex.

And I think this is a good area for it to be in, because robotics is famously best suited to dangerous, dull, or repetitive jobs. These warehouse jobs are kind of unhealthy: not necessarily dangerous, but bad for your back and muscles. And they're dull, since you're doing a lot of mindless work. So on the whole, I think this should be a good use of automation, assuming these companies can buffer the transition.

And on to a very different area where AI can be used. We're talking about "With a nudge from AI, ketamine emerges as a potential rare disease treatment," from STAT News.

This article covers how a tool named mediKanren scanned millions of biomedical abstracts, looked for relationships between existing compounds and the gene involved in a disease called ADNP syndrome, and pinpointed ketamine as a potential treatment. I think this is really exciting, since I actually had my doubts about whether the various AI drug discovery efforts were going to surface anything useful. While this is still in trials and hasn't really been proven out yet, it's still a great candidate to examine for this disease.

Yeah, this is pretty interesting. Some of the details here are pretty cool. For instance, the tool found research that was years old, but nobody had advocated for it until the tool spotted it and sent it over to a neurosurgeon named Matt Davis. And the tool was created by the director of UAB's Hugh Kaul Precision Medicine Institute, so it comes from someone who is primarily in medicine as opposed to AI.

So yeah, it's interesting to see how these AI tools can help sift through a massive amount of information, so that drug interactions that have been reported but remain buried in all the data can be surfaced.

And on to our research section. Our first article is "'Tortured phrases' give away fabricated research papers," and this is from Nature. So there's been a paper, actually published in the journal Science, on how a lot of submitted and published papers were probably AI-generated. This came from the researchers not understanding why the authors of those fake papers would use terms like "counterfeit consciousness," "profound neural organization," and "colossal information," among others, instead of just "artificial intelligence" or "deep neural network." That prompted the researchers at the University of Toulouse in France to look into this more closely, and they found more than 860 publications that used at least one of these phrases. One journal alone had published 31 of them, which is very, very concerning.

Yeah, so this is pretty interesting to me as a researcher. It seems to be fairly common that people are taking, in some cases, copied phrases or text and just translating it to get these tortured phrases. So things like "counterfeit consciousness" standing in for "artificial intelligence" are pretty common.
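Screening for known tortured phrases can be approximated with a simple dictionary lookup. The sketch below is a hedged illustration, not the Toulouse team's actual method: the phrase mappings are the ones mentioned in this episode (plus "big data" as the usual term behind "colossal information"), and `find_tortured_phrases` is a hypothetical helper name.

```python
import re

# Hypothetical, hand-picked dictionary: tortured phrase -> the standard term it likely replaces.
TORTURED_PHRASES = {
    "counterfeit consciousness": "artificial intelligence",
    "profound neural organization": "deep neural network",
    "colossal information": "big data",
}


def find_tortured_phrases(text: str) -> list[tuple[str, str]]:
    """Return (tortured phrase, expected term) pairs that appear in `text`, ignoring case."""
    lowered = text.lower()
    hits = []
    for phrase, expected in TORTURED_PHRASES.items():
        # Word boundaries stop the pattern from matching inside longer words.
        if re.search(r"\b" + re.escape(phrase) + r"\b", lowered):
            hits.append((phrase, expected))
    return hits


abstract = ("We apply Counterfeit Consciousness and a profound neural "
            "organization to colossal information.")
for phrase, expected in find_tortured_phrases(abstract):
    print(f"'{phrase}' found; the usual term is '{expected}'")
```

A real screener would need a much larger, curated phrase list plus human review, since a single match is a flag to check rather than proof of fabrication.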

And the fact that these papers get published is really pretty troubling. I'm kind of surprised that this is so common and has only recently come to light. Yeah, I mean, people have been talking about fake news, but now this is fake science, which is interesting.

Really concerning. People have joked about this, but seeing it in a much more serious light is concerning and makes me worried about the future of science if there's that much more noise in the system.

Yeah, I do like that the paper looked into and analyzed a bit how this was happening. They found that for one journal, Microprocessors and Microsystems, the review process got a lot shorter over time: apparently, in early 2021, it took just 42 days on average to review a paper. So hopefully this is specific to that particular publication, but it's good to know that these sorts of things exist, so that publications and journals can look out for them and have systems in place to make sure these papers don't get through. Right. Absolutely.

And on to our next research story: "Fast, efficient neural networks copy dragonfly brains." This is by Frances Chance in IEEE Spectrum magazine. Frances Chance is a computational neuroscientist by training and a principal member of the technical staff at Sandia National Laboratories.

I don't think this is quite research; it's more an article she has written based on some ad hoc experimentation. In summary, it goes into how dragonflies are pretty interesting animals: despite being quite simple, they can do some amazing things, like most animals can. And it goes into how she experimented with making an analog of their biological brains in an artificial neural network, and some of the implications of that and her experience. So yeah, I think it's a fun read. It's not very technical, but it's kind of interesting to see what we know about dragonflies and what you can replicate with a neural network.

There's a bit of an asterisk here, though: I think the title is maybe a little hyped up. It's just a neural network for simulating insect interaction, and it's still in simulation. It's not deployed in some way where a robot dragonfly can suddenly interact with other insects or other dragonflies, and it's not actually copying the dragonfly brain exactly in every way.

Yeah, that's a very good point. This actually only has a simple three-layer fully connected network, and it's only trained to do the same thing as a dragonfly: track head movement, more or less, and decide on movements. So it's not really copying the brain at all; it's only copying some of the superficial behavior. But still, it's a pretty fun read.

There are some fun, interesting details here, like the fact that when dragonflies hunt, they need to aim ahead of the prey's current location, and they respond to a prey maneuver in just 50 milliseconds.
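The "aim ahead of the prey" idea can be made concrete with a little geometry. This is a hedged sketch assuming a 2D world, prey moving at constant velocity, and a hunter starting at the origin with a fixed speed; it is not the network from the IEEE Spectrum article, and `intercept` is a hypothetical name. It solves |p + v·t| = s·t for the earliest positive time t, which expands to the quadratic (v·v - s²)t² + 2(p·v)t + p·p = 0.

```python
import math
from typing import Optional, Tuple

Vec = Tuple[float, float]


def intercept(prey_pos: Vec, prey_vel: Vec, hunter_speed: float) -> Optional[Tuple[Vec, float]]:
    """Earliest point where a hunter starting at the origin can meet constant-velocity prey.

    Solves |p + v*t| = s*t, i.e. (v.v - s^2) t^2 + 2 (p.v) t + p.p = 0, for the
    smallest t > 0. Returns (aim_point, t), or None if the prey cannot be caught.
    """
    px, py = prey_pos
    vx, vy = prey_vel
    a = vx * vx + vy * vy - hunter_speed ** 2
    b = 2.0 * (px * vx + py * vy)
    c = px * px + py * py

    if abs(a) < 1e-12:
        # Hunter and prey have equal speed: the equation degenerates to b*t + c = 0.
        times = [-c / b] if abs(b) > 1e-12 else []
    else:
        disc = b * b - 4.0 * a * c
        if disc < 0:
            return None
        root = math.sqrt(disc)
        times = [(-b - root) / (2.0 * a), (-b + root) / (2.0 * a)]

    future = [t for t in times if t > 0]
    if not future:
        return None
    t = min(future)
    # Aim at where the prey will be, not where it is now.
    return (px + vx * t, py + vy * t), t


# Prey 10 m to the right, flying "up" at 1 m/s; the hunter flies at 2 m/s.
print(intercept((10.0, 0.0), (0.0, 1.0), 2.0))
```

The aim point lies above the prey's current position, which is exactly the "lead" a hunter has to build in when its prey keeps moving during the chase.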

And if you factor in everything else that has to happen in that time, the processing of information needs to happen in maybe three or four layers of neurons in sequence, and that's it before motor commands are sent out. So I found that interesting, along with how it tied into the experiment. That is pretty cool, especially since it's such a small network. Maybe it can be interpretable, then.

And on to our ethics and society articles. The first one is from Wired, titled "AI wrote better phishing emails than humans in a recent test." Basically, researchers found that using GPT-3, the large language model from OpenAI, as well as other AI-as-a-service platforms, they could write better phishing emails than humans. That is very concerning, because anyone can tap into these services to launch phishing messages at scale to get information, and of course to get money from people, spread viruses, and that type of stuff. This makes me think this is going to be a growing problem where provenance and detection will be important, but so will education, to help people detect this and be aware of it.

And it does make me think that the internet is not fair to all ages and groups, because some people are definitely going to be much more susceptible to this. I think more people would be susceptible to phishing than before. It's less of a joke like "avoid the Nigerian prince asking for money"; it's pretty realistic stuff coming your way.

Yeah, I found it interesting that they differentiate between plain phishing, which is already commonplace with sort of templated messages, and what they call spear phishing, which is more targeted and more labor-intensive. That's where AI can come in and, as you said, be worrying in terms of being much more effective.

In the past, it would take a lot of work to train an AI model to do this sort of stuff, so maybe it wasn't worth it. But now this team from Singapore's Government Technology Agency showed that you could do it with just a few of these AI-as-a-service platforms and GPT-3. So just by using available APIs, without writing code or training any models, they could hack this thing together, which is more than I would have expected, to be honest. And they show that they got more clicks in their tests than with human-composed messages. It's a small experiment, not a full research paper, but definitely something to keep an eye on, and as language models keep getting bigger, maybe something we'll see happening more.

I think one interesting note in the article is that while some APIs, like OpenAI's GPT-3 API, have pretty strict and stringent rules, there are other AI-as-a-service companies that give free trials, don't ask for a credit card, and don't verify your email address, making it very easy to turn out content without meeting any identity verification. Even email verification isn't quite identity verification, but these services are very, very easy to get started with, and I think that's what's concerning. Yeah, exactly. So I thought this was quite interesting.

We've talked about deepfakes a lot, and so far there hasn't been much of an issue, and this is sort of related to that. But as it gets easier and cheaper to do, it seems inevitable that phishing will become harder to spot, which is a bit sad to think about.

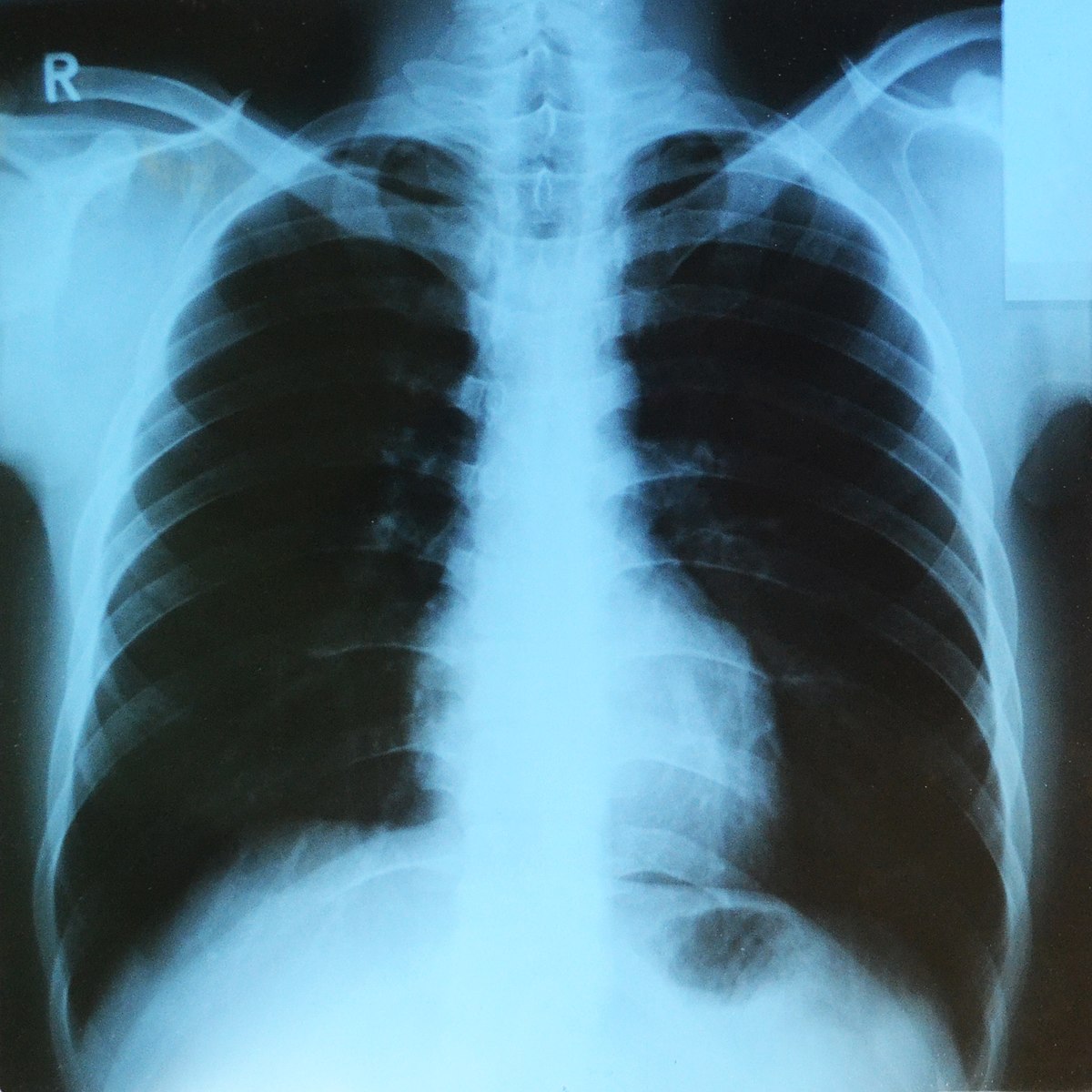

Well, on to another ethically troubling story that's also a bit of a downer, but maybe less so. We have this article from Wired called "These Algorithms Look at X-Rays and Somehow Detect Your Race."

This covers the paper "Reading Race: AI Recognizes Patient's Racial Identity in Medical Images," which was done by about 20 authors from a lot of different affiliations, 15 different groups. The summary is basically what the title says: these trained AI systems can predict a patient's self-reported racial identity, even though, as far as we know, they shouldn't be able to. The authors, who are medical experts, could not tell why or how these algorithms predicted a person's race. That means it could be a case of bias, in which case this is problematic and could mean that these models work worse for some races than others.

And I believe this is the first time this has been seen from pure medical images in this way. To go one level deeper than the article and pull some things from the paper: if your task is to detect race, you can do it with a very high AUC. You can really tune the algorithm to detect race, and it reached something like 0.99. For a lot of diagnoses, reaching that level is not easy, so doing it for race means the model can see race if we train it for it.

Of course, it's going to be lower if you train your algorithm for another task and then check whether it implicitly learned race there too. That was lower, at something more like 0.8, and in some cases up to around 0.9.
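For listeners less familiar with the metric: AUC (area under the ROC curve) can be read as the probability that a randomly chosen positive example gets a higher score than a randomly chosen negative one, so 0.5 is chance and 1.0 is perfect separation. Below is a minimal pairwise sketch for the binary case; the scores and labels are made-up toy values, not anything from the paper, and `auc` is a hypothetical helper name.

```python
from typing import Sequence


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Binary AUC as the fraction of (positive, negative) pairs ranked correctly.

    A pair counts 1 if the positive scores higher, 0.5 on a tie, 0 otherwise.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy example: one negative (0.6) outranks one positive (0.4), so 3 of 4 pairs are correct.
print(auc([0.9, 0.4, 0.6, 0.1], [1, 1, 0, 0]))
```

The pairwise loop is quadratic in the number of examples, which is fine for illustration; library implementations compute the same quantity more efficiently by sorting.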

Yeah, I'm not surprised by that, but I'm actually quite surprised by how high the numbers can be in general, especially since detecting cancer, or in this case pneumonia, nodules, or all sorts of things in an X-ray, can be very difficult. Here it's a simple task, but humans can't see it and algorithms can. It's definitely something to reflect on.

Yeah, exactly. And interestingly, we know, for instance, that there have been cases where trained models just learned to pick up on text on X-ray images and stuff like that, but I don't believe that's the case here. The authors further tested it under various conditions. For instance, they degraded the quality of the X-rays until they were unreadable even to a trained eye, and the algorithms could still predict race. So it may or may not be troubling, depending on why or how they do this, but it does imply that there might be some inappropriate associations and downstream problems if they can do this and humans can't.

I think it raises the question of fairness and whether we can remove those biases. We can't easily remove them with some kind of simple blurring or downsampling, and we will need to think hard about that when deploying these types of models. Yeah, and in this case it's also interesting because, as far as I know, they haven't demonstrated that this leads to worse performance in terms of correctly identifying cancer or these sorts of things. So that raises another question: is it good by default to remove a bias if it's there and we don't understand why it's there? Or does this need to be studied more before the tools can even be deployed? It seems like an interesting direction to explore following this research.

Right, definitely. I've seen work where, if you remove the variable that is most correlated with bias, say race itself, you get worse accuracy overall, and the drop falls disproportionately on the disadvantaged group. So it's just very hard to disentangle a lot of these things and know what to optimize for. Yeah.

And to lift things up a little, on to our fun articles. The first one is about Tably, an AI-powered camera app to give you a deeper understanding of your cat's health and mood, and that's from MarkTechPost. Tably, Sylvester.ai's new AI-powered software, can help you understand your cat's feelings better, because this is definitely what you need. I actually know someone very close to me who very much needs this, or wants it, rather. So if you want a deeper awareness of your cat's moods, feelings, or health, like exactly how grumpy your cat is (if you have Grumpy Cat, maybe it's just always grumpy), this is based on an actual scale called the Feline Grimace Scale to detect how upset your cat is. It's pretty cute.

Yeah, this is pretty interesting. You point your camera at your cat, take a photo of its face, and apparently it can give you predictions like whether your cat enjoys the way you pet it. Cats can be hard to read, so honestly, as someone who lives with a cat, it could be useful. So yeah, it's a cute story, and if this actually works, then another win for AI, I guess. Yeah.

And we have another fun story this week to wrap things up: a man defending against package thieves using machine learning, flour, and a very loud siren. This is from PC Gamer. It's a little write-up about a video by a YouTuber who makes different hacks and technology, basically fun little creations. Here, he had a camera that watched the front porch, hooked it up with TensorFlow, and recognized when a package was on the porch or not. It also recognized, when the package was taken, whether it was taken by someone other than him, using a small list of people who are allowed. If it was taken by someone else, it set off a siren and also this flour gun.

So it's just a fun little hack, but maybe a fun watch if you want to see how to hack something together in a month using machine learning. It's an example of what you can do with relatively little expertise.
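The porch system's decision logic, as described, can be sketched as a tiny state machine over camera frames. This is a hedged illustration: the `Frame` fields stand in for whatever a TensorFlow detector and recognizer would output, and the allowlist, identity labels, and `alarm_frames` function are all hypothetical, not the YouTuber's actual code.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Set


@dataclass
class Frame:
    package_present: bool   # hypothetical output of a package detector
    person: Optional[str]   # hypothetical identity label, or None if nobody is visible


def alarm_frames(frames: Iterable[Frame], allowed: Set[str]) -> List[int]:
    """Return indices of frames where the siren (and flour gun) should fire.

    The alarm fires when a package that was on the porch disappears and the most
    recently seen person is not on the allowlist. If nobody was ever seen, the
    last person is None, which is also treated as not allowed.
    """
    alarms = []
    had_package = False
    last_person: Optional[str] = None
    for i, frame in enumerate(frames):
        if frame.person is not None:
            last_person = frame.person
        if had_package and not frame.package_present and last_person not in allowed:
            alarms.append(i)
        had_package = frame.package_present
    return alarms


allowed = {"owner"}
theft = [Frame(False, None), Frame(True, "courier"), Frame(True, None),
         Frame(True, "unknown"), Frame(False, None)]
pickup = [Frame(True, None), Frame(True, "owner"), Frame(False, None)]
print(alarm_frames(theft, allowed), alarm_frames(pickup, allowed))
```

Remembering only the last person seen is a deliberate simplification; a real system would probably use a time window so a stale identification doesn't decide the outcome.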

I hope he sells the system on Amazon. I feel like a lot of people would want this. I think my record is a package stolen within two minutes, as spotted by the Nest Cam. So yeah, package thieves. This would have been great early in the pandemic. You know what? Yeah, I think you're right. I actually think this could make a real product. I don't know. It's pretty funny. Yeah. So while it's funny, I would have been super down to buy it. Yeah.

Yeah, and this guy, whose YouTube channel is Ryder Calm Down, has also made some other fun hacks. For instance, he has a video called the dog detector to make me feel better, which detects when dogs walk outside his house so he can look at them.

This was during quarantine. That's really cute. Yeah, it's nice to see some just fun hacks with AI as opposed to a lot of serious stuff we cover. That's really fun. All right, and that's it for us this episode. If you've enjoyed our discussion of these stories, be sure to share and review our podcast. We'd appreciate it a ton. And now be sure to stick around for a few more minutes to get a quick summary of some of the cool news stories from our very own newscaster, Daniel Bashir.

Thanks, Andrey and Sharon. Now I'll go through a few other interesting stories we haven't touched on. First off, on the research side: as VentureBeat reports, a paradigm shift has occurred in natural language processing toward an approach called prompt-based learning.

The pre-training and fine-tuning paradigm that preceded this approach involved training a model like BERT to complete a range of different language tasks, which would allow it to learn general-purpose language features. Those pre-trained models could then be adapted with task-specific optimizations.

Prompt-based learning allows researchers to manipulate a pre-trained language model's behavior to predict a desired output, sometimes without task-specific training. For example, we might want to classify the sentiment of the movie review "Don't watch this movie." We could append a prompt to the sentence to get "Don't watch this movie. It was _____," and the language model would likely assign a higher probability to the word "terrible" than "great" for the next word in that sentence. Prompts are not easy to create in all cases, and a number of limitations exist, but studies suggest prompt-based learning is a promising area of study and will be for years to come.
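The cloze-style example above can be sketched in a few lines: a template turns the review into a prompt, a small "verbalizer" maps candidate next words to labels, and the label whose word the model rates most likely wins. This is a hedged sketch; `next_word_prob` stands in for a real pre-trained language model, and `toy_lm` is a hypothetical hand-written scorer used only so the example runs.

```python
from typing import Callable, Dict

# Verbalizer: candidate next words and the sentiment label each one signals.
VERBALIZER: Dict[str, str] = {"terrible": "negative", "great": "positive"}


def build_prompt(review: str) -> str:
    """Append the cloze-style template from the example; the blank is the next word."""
    return f"{review} It was"


def classify(review: str, next_word_prob: Callable[[str, str], float]) -> str:
    """Return the label whose verbalizer word the language model finds most likely next.

    No task-specific training happens here: the model is only queried, never updated.
    """
    prompt = build_prompt(review)
    best_word = max(VERBALIZER, key=lambda word: next_word_prob(prompt, word))
    return VERBALIZER[best_word]


def toy_lm(prompt: str, word: str) -> float:
    """Hypothetical stand-in for a real model's next-word probability (NOT a real LM)."""
    if "don't" in prompt.lower():
        return {"terrible": 0.6, "great": 0.1}.get(word, 0.0)
    return {"terrible": 0.1, "great": 0.5}.get(word, 0.0)


print(classify("Don't watch this movie.", toy_lm))
```

With a real model, `next_word_prob` would read the model's output distribution at the blank position; choosing the template and verbalizer words is the part the story notes is not always easy.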

Our second story turns to the business side. Seoul-based edtech startup Mathpresso wants to disrupt the tutoring industry. It operates the mobile app QANDA, where students take photos of math problems and the app's AI system searches for answers. According to Forbes, the startup raised a $50 million investment round led by SoftBank earlier this month.

After the round, the company's total funds amount to $105 million at a valuation under $500 million. According to Mathpresso's website, the QANDA app has solved 2.5 billion math problems from nearly 10 million users who come from more than 50 countries. In Korea, two-thirds of the student population uses QANDA.

Mathpresso plans to use the new funding to develop algorithms to create personalized learning content, which will recommend questions similar to those students might be struggling with. According to their CEO, Mathpresso has plans to go public in the near future. And finally, a story about AI and society.

In an opinion piece for the New York Times, law professors Frank Pasquale and Gianclaudio Malgieri state that Americans have good reason to be skeptical of AI. They give as examples a number of unfortunate incidents involving AI systems, as well as the fact that even when AI seems to be an unqualified good, datasets might not be racially representative.

The professors consider the EU draft Artificial Intelligence Act and claim that some parts do not go far enough.

For example, the list of prohibited AI systems should include applications like ethnicity attribution and lie detection. While, as the professors acknowledge, the EU Act has its issues, they call attention to the fact that the problems posed by unsafe or discriminatory AI do not seem to be a high-level priority for the Biden administration.

While states like California have started discussing legislation, there does seem to be a lack of national leadership in this area. Pasquale and Malgieri think that the United States should follow the EU's lead and learn from its approach, prioritizing respect for fundamental rights, ensuring safety, and banning unacceptable uses of AI.

Thanks so much for listening to this week's episode of Skynet Today's Let's Talk AI podcast. You can find the articles we discussed today and subscribe to our weekly newsletter with even more content at skynetoday.com. Don't forget to subscribe to us wherever you get your podcasts and leave us a review if you like the show. Be sure to tune in when we return next week.