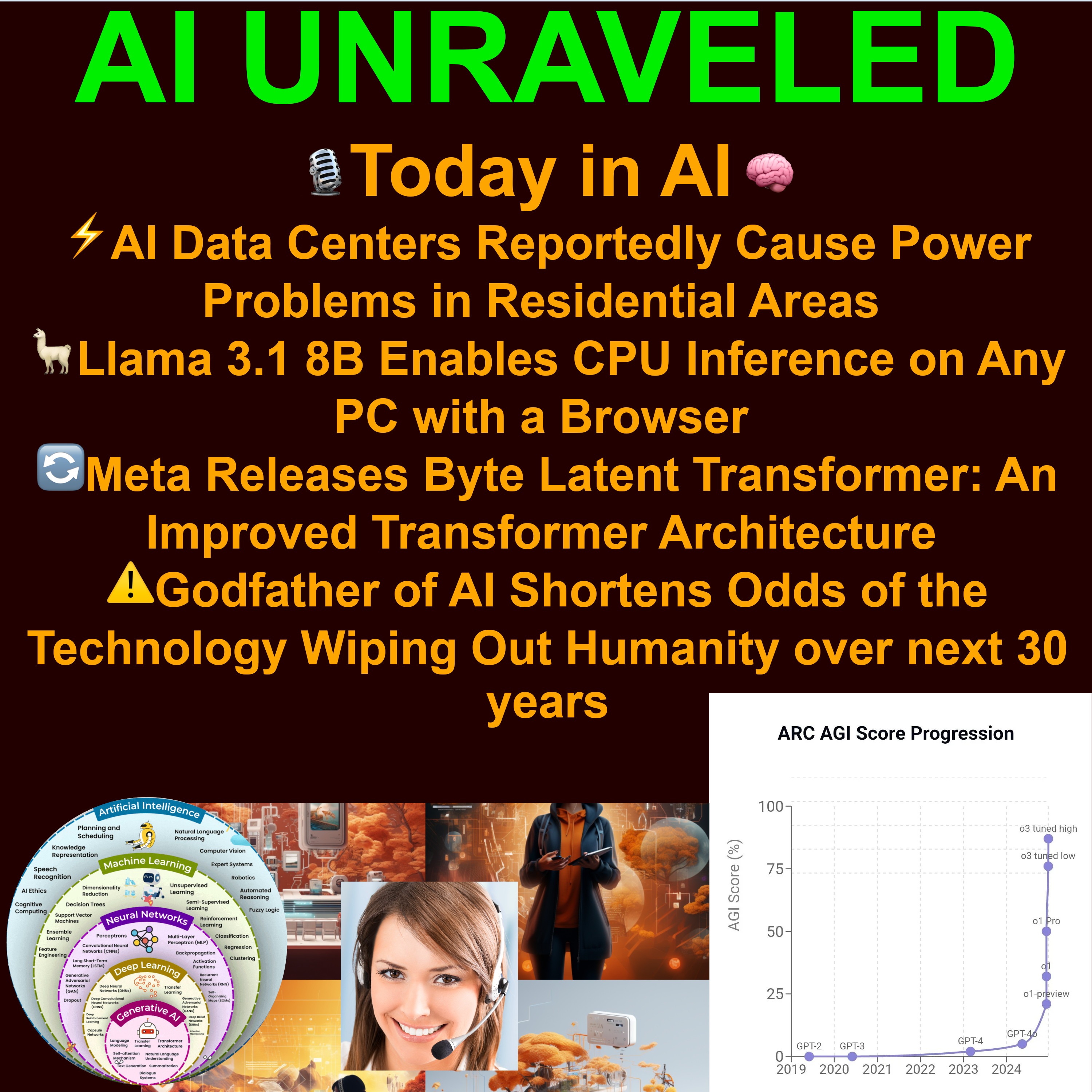

Today in AI: ⚡AI Data Centers Reportedly Cause Power Problems in Residential Areas 🦙Llama 3.1 8B Enables CPU Inference on Any PC with a Browser ⚠️Godfather of AI Shortens Odds of the Technology Wiping Out Humanity

AI Unraveled: Latest AI News & Trends, GPT, ChatGPT, Gemini, Generative AI, LLMs, Prompting

Deep Dive

Why are AI data centers causing power problems in residential areas?

AI data centers are reportedly shortening the lifespans of nearby residents' appliances and putting strain on the local power grid because of their massive energy consumption.

What is Llama 3.1 8B, and why is it significant?

Llama 3.1 8B is a language model from Meta that can now run on any PC via a browser using PV tuning compression, making high-performance AI accessible without requiring supercomputers.
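
A rough back-of-the-envelope estimate shows why compression matters for running an 8B-parameter model in a browser. The sketch below counts only the memory needed to store the weights (parameters × bits per parameter). It ignores activations, the KV cache, and any scales or codebooks a real compression scheme also stores. The 8-billion figure is rounded, and the 2-bit setting is an illustrative assumption for an extreme compression regime, not a published figure for the browser demo.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate storage for model weights in gigabytes (1 GB = 1e9 bytes)."""
    total_bits = num_params * bits_per_param
    return total_bits / 8 / 1e9  # bits -> bytes -> gigabytes

LLAMA_8B_PARAMS = 8e9  # rounded parameter count for Llama 3.1 8B

# Compare weight storage at several bit widths; the 2-bit row is an assumption.
for label, bits in [("16-bit (fp16/bf16)", 16), ("8-bit", 8), ("4-bit", 4), ("2-bit (assumed)", 2)]:
    print(f"{label:>20}: {weight_memory_gb(LLAMA_8B_PARAMS, bits):5.1f} GB")
```

At 16 bits the weights alone need about 16 GB; at roughly 2 bits, about 2 GB, which fits in an ordinary PC's memory. For comparison, the same arithmetic for a 671-billion-parameter model like DeepSeek v3 at 16 bits gives roughly 1,342 GB.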

What warning did Geoffrey Hinton, the 'Godfather of AI,' issue about AI?

Geoffrey Hinton warned that AI could potentially wipe out humanity within the next 30 years, highlighting existential risks associated with advanced AI development.

How is AI being used in NASCAR, and why?

AI is being used to revamp NASCAR's playoff format to address fan criticism about predictability, aiming to inject more excitement and unpredictability into races.

What is Semicong, and how is it revolutionizing chip design?

Semicong is the world's first open-source semiconductor-focused language model, trained on chip design and manufacturing data. It aims to make chip design faster, more efficient, and accessible, potentially accelerating innovation across the tech industry.

What is the Byte Latent Transformer, and how does it improve AI processing?

The Byte Latent Transformer is a new architecture from Meta that processes raw bytes of data instead of tokenized text, allowing for more holistic and efficient information processing, capturing nuances often missed by traditional models.
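
The difference between token-based and byte-based input is easy to see in Python. The sketch below contrasts a toy whitespace "tokenizer" (a hypothetical stand-in; real models use learned subword vocabularies such as BPE) with the raw UTF-8 bytes a byte-level model reads. The `fixed_patches` helper is also hypothetical: Meta describes the Byte Latent Transformer as grouping bytes into dynamically sized patches, roughly where the next byte is harder to predict, so fixed-size grouping is only a simplification.

```python
def toy_word_tokens(text: str) -> list[str]:
    """Hypothetical stand-in for a tokenizer: split on whitespace."""
    return text.split()

def utf8_bytes(text: str) -> list[int]:
    """The raw byte values (0-255) a byte-level model would read."""
    return list(text.encode("utf-8"))

def fixed_patches(data: list[int], size: int) -> list[list[int]]:
    """Group bytes into fixed-size patches (simplification; BLT sizes patches dynamically)."""
    return [data[i:i + size] for i in range(0, len(data), size)]

text = "naïve AI"
print(toy_word_tokens(text))   # ['naïve', 'AI']
raw = utf8_bytes(text)
print(len(text), len(raw))     # 8 9  ('ï' is one character but two UTF-8 bytes)
print(fixed_patches(raw, 4))   # [[110, 97, 195, 175], [118, 101, 32, 65], [73]]
```

The byte view needs no vocabulary entry for "ï" or any other character, which is one of the arguments made for byte-level models handling unusual spellings and text more robustly.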

What is OpenAI's definition of AGI, and why is it controversial?

OpenAI defines AGI as an AI system capable of generating $100 billion in profits, which has sparked debate over whether AI development is overly focused on corporate gains rather than broader societal benefits.

What are the key differences between OpenAI's O3 and DeepSeek v3?

O3 excels in creative text generation, while DeepSeek v3 is stronger in natural language understanding, semantic search, and knowledge representation. DeepSeek v3 is also more computationally efficient, making it suitable for real-world applications.

What is Google's Gemini 2.0, and how does it differ from other AI models?

Gemini 2.0 is Google's multimodal AI model capable of processing text, images, audio, and video. Its versatility makes it suitable for applications like video analysis, medical imaging, and more, setting it apart from primarily language-focused models.

What are some potential future applications of AI in healthcare and climate modeling?

AI could revolutionize personalized medicine by tailoring treatments to individual genetic and lifestyle factors. In climate modeling, AI can analyze vast datasets to improve predictions and develop strategies for mitigating and adapting to climate change.

- AI data centers causing power problems in residential areas

- Geoffrey Hinton's warning about AI wiping out humanity

- Llama 3.1 8B enabling CPU inference on any PC

- Accessibility of powerful AI tools and potential for misuse

Transcript

Buckle up, everyone. We're diving headfirst into the latest and greatest AI developments that dropped in late December 2024. Yeah, we've got a lot to cover. We're talking daily reports, technical papers, even a model comparison overview. It's been a busy few days in the world of AI. That's for sure. So consider this your crash course on all things cutting-edge AI. Let's get started. All right. First up, a bit of a reality check from the Daily Chronicle. Apparently those massive AI data centers everyone's been raving about might be causing some problems for the folks who live nearby. Oh, really? What kind of problems? We're talking shorter lifespans for appliances and potential strain on the power grid. Hmm. That's definitely something to consider.

It seems like we need to think about the bigger picture when it comes to these advancements. Exactly. Progress shouldn't come at the expense of people's everyday lives, right? Absolutely. And speaking of potential downsides, we can't ignore the warnings from Geoffrey Hinton, the so-called godfather of AI.

Yeah, he's been pretty vocal lately. He's saying there's a real chance that AI could wipe out humanity within the next 30 years. That's a pretty stark warning. It is. So on one hand, we've got AI potentially causing issues at the local level. And on the other hand, we're talking about existential threats. It's a lot to take in. Definitely a lot to think about. But let's move on to some more positive news.

Remember Llama 3.1, that impressive language model from Meta? Yeah, I remember that one. Well, now you can run it on any PC right in your browser thanks to something called PV tuning compression. Oh, wow. That's a game changer in terms of accessibility. It is. Before, you needed a supercomputer to even touch this kind of AI power. PV tuning is a really clever way to compress these massive language models without sacrificing too much performance. So now it's literally at everyone's fingertips.
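
The core idea behind this kind of compression, storing each weight in fewer bits, can be illustrated with a far simpler technique than PV tuning, which additionally fine-tunes the compressed weights to recover accuracy. The sketch below applies plain uniform round-to-nearest quantization to a few made-up weights. It is a swapped-in illustration, not the PV tuning method, and `quantize`/`dequantize` are hypothetical helper names.

```python
def quantize(weights: list[float], bits: int) -> tuple[list[int], float, float]:
    """Map each weight to one of 2**bits evenly spaced levels between min and max."""
    levels = 2 ** bits - 1
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / levels if hi > lo else 1.0  # step size between levels
    codes = [round((w - lo) / scale) for w in weights]  # small integers 0..levels
    return codes, lo, scale

def dequantize(codes: list[int], lo: float, scale: float) -> list[float]:
    """Reconstruct approximate weights from the stored integer codes."""
    return [lo + c * scale for c in codes]

weights = [-0.82, -0.31, 0.05, 0.47, 0.93]  # made-up example weights
codes, lo, scale = quantize(weights, bits=2)
approx = dequantize(codes, lo, scale)
print(codes)
print([round(a, 3) for a in approx])
print(max(abs(w - a) for w, a in zip(weights, approx)))  # worst-case rounding error
```

With `bits=2`, each weight is stored as one of four integer codes plus a shared offset and scale, and the reconstruction error is bounded by half the step size. Methods like PV tuning aim to preserve model quality at such low bit widths, which naive rounding on its own typically struggles to do.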

Exactly. And it's not just limited to Llama 3.1. We're seeing this trend of democratization across the AI landscape. Like with DeepSeek v3, right? Exactly. DeepSeek v3 has a staggering 671 billion parameters. Hold on, wait a minute. What exactly are parameters for those of us who aren't AI experts? Ah, good question.

Think of parameters as the knobs and dials that control how an AI model learns and processes information. More parameters generally mean a more powerful AI. Okay, so more parameters, more power. Got it. Yeah. But this whole idea of incredibly powerful AI tools becoming widely available...

It's a bit of a double-edged sword, isn't it? It is. On one hand, it could unleash a wave of creativity and innovation, giving individuals and small teams the power to develop solutions that were previously only possible for large corporations. Like giving superpowers to the masses. Exactly. But of course, there are risks.

The potential for misuse is a real concern. Things like the spread of misinformation, deepfakes, autonomous weapons systems. It's a lot to consider. It is. We need to be asking the tough questions about how to manage this new reality. Absolutely. Speaking of shaking things up, AI has even found its way into the world of NASCAR. NASCAR? Really? Yeah. Apparently they're using AI to revamp their playoff format.

Interesting. Why is that? They've been getting some criticism from fans who felt like the old system was too predictable. Ah, I see. So they're hoping AI can inject some excitement back into the races. Exactly. It's fascinating to see how AI is being applied in these unexpected places. It is. And in another surprising twist, we have Semicong, the world's first open-source, semiconductor-focused language model. Now that's interesting. Tell me more.

It's essentially an AI that's been trained on a massive amount of data related to chip design and manufacturing. So instead of writing columns or composing music, this AI is helping to design the chips that power our devices. Precisely. The goal is to make the design process faster, more efficient, and more accessible. That could be revolutionary.

The semiconductor industry is incredibly complex. And resource-intensive. Yes. Making chip design more accessible could really accelerate innovation across the entire tech industry. So Semicong is like a force multiplier for the whole tech world. I like that analogy. But let's shift gears for a moment and talk about advancements in AI's core capabilities. Meta has been making waves with something called the Byte Latent Transformer. Oh, yes. I've heard about that. It's a new type of architecture that could significantly improve the efficiency of AI processing. So how does it work? Well, traditional language models rely on tokenization, which means breaking down text into individual words or parts of words.

But this can be computationally expensive and sometimes lose important context. OK, I'm following so far. The Byte Latent Transformer works directly with bytes of data, which are essentially the raw building blocks of digital information. Ah, so it's like seeing the forest instead of just the individual trees. Exactly.

This allows the model to process information more holistically, capturing nuances that might be missed by token-based models. So it's faster, more accurate, and more adaptable. Potentially, yes. And to really drive home how capable AI is becoming, we have to talk about the robot that's been making headlines for sinking those impossible basketball shots. Oh yeah, the one that's using AI to analyze the physics of the shot in real time. It's incredible. It's like having a pro basketball player's brain inside a robot's body.

It really highlights the potential of AI in robotics and other fields that require precise control and real-time adaptation. It's both exciting and a little bit terrifying to think about where this could all lead. I agree. But speaking of potential downsides, there's been a lot of buzz around some leaked OpenAI documents. The ones about how they define artificial general intelligence. Exactly. Apparently, they define AGI as an AI system capable of generating $100 billion in profits. That's an interesting way to define it.

Some might say it's a bit narrow-minded. It certainly raises some questions about the motivations behind AI development. Are we primarily focused on creating AI that can boost corporate profits or are we aiming for something more ambitious, something that can truly benefit humanity as a whole? Those are the questions we need to be asking. And on that note, we'll pause here for now. But don't go anywhere, because in part two, we'll be diving into a comprehensive overview of the leading AI models that are shaping the landscape.

Looking forward to it.

Welcome back, folks. Ready for round two of our AI deep dive? Absolutely. Let's pick up where we left off. All right. So last time we were talking about some of the potential downsides of AI, like those leaked OpenAI documents and their definition of AGI. Right. The whole profit-driven approach to defining AGI. Exactly. It sparked quite a debate. But let's shift gears for now and zoom out to look at the broader AI landscape. Sounds good. What do you have for us? Well, we've got a great overview from Jamutech that breaks down the leading AI models as of late 2024. Perfect timing. I'm actually really curious to hear which models are at the top of the leaderboard right now. Well, OpenAI is still a major force in the field.

Their latest model, O3, is really pushing the boundaries of what's possible with large language models. O3, that rings a bell. Didn't we talk about that earlier in relation to the leaked OpenAI document? That's right.

They're the ones who define AGI as an AI system capable of generating $100 billion in profit. Right, which raised some eyebrows, to say the least. But putting those concerns aside for a moment, O3 is undeniably a powerful and influential model. Absolutely. It's being used in a wide range of applications, from chatbots and virtual assistants to content creation and scientific research. So it's a pretty versatile tool. It is. One of its most impressive capabilities is its ability to generate human-quality text. It can write stories, poems, articles, even code with remarkable fluency and coherence. So if I need an AI to help me write a screenplay or compose a song, O3 is a good option. It's definitely a strong contender in those areas.

But what's really fascinating is that we're seeing a number of other models emerge that are challenging OpenAI's dominance. Like DeepSeek v3, for example. Exactly. DeepSeek v3 is gaining a lot of traction, and its accessibility is a key factor in its growing popularity. Right.

Because of PV tuning, anyone can now run DeepSeek v3 on their PC. Exactly. And that's opening up a world of possibilities for researchers, developers, and even hobbyists who want to experiment with cutting-edge AI without needing access to massive computing resources. Okay. But what are the key differences between O3 and DeepSeek v3? Are they designed for different purposes, or is it more about performance and capabilities? It's a bit of both.

While both models are capable of handling a wide range of tasks, they do have some distinct strengths and weaknesses. O3, as we discussed, is known for its impressive language generation abilities. It's great at producing creative text, but DeepSeek v3 excels in tasks that require a deeper understanding of context and relationships.

Interesting. So if I need an AI to help me research a complex topic or analyze a large data set, DeepSeek v3 might be the better choice. That's a good way to put it. It's particularly strong in areas like natural language understanding, semantic search, and knowledge representation. It's also designed with efficiency in mind.

It can process information quickly and with relatively low computational requirements, which is a major advantage for many real-world applications. So we've got OpenAI's O3 and DeepSeek v3, both incredibly powerful AI models with their own unique strengths. What about Google? Where do they fit into all of this? Ah, Google. They've been relatively quiet on the AI front for much of the year, but they recently unveiled Gemini 2.0, their latest and most advanced AI model. Gemini 2.0, the name sounds pretty impressive. What can you tell us about it?

Well, Gemini 2.0 is Google's answer to the growing competition in the AI space. It's a multimodal model, which means it's capable of processing and understanding different types of data, including text, images, audio, and even video. Whoa, multimodal. That sounds like a major leap forward. Most of the models we've talked about so far have been focused primarily on language. That's right. But Gemini 2.0 is designed to be much more versatile.

It can analyze and interpret information from a variety of sources, making it suitable for a wider range of applications. So what are some of the potential use cases for a multimodal AI like Gemini 2.0? The possibilities are truly vast. Imagine an AI that can understand the context of a video, generate captions and summaries, and even answer questions about the content. Or an AI that can analyze medical images, identify potential abnormalities, and assist doctors in making diagnoses. Wow, that's incredible. It sounds like Gemini 2.0 could have a huge impact on fields like healthcare, education, and even entertainment. Absolutely. And it's likely just the tip of the iceberg. As AI models become more sophisticated and capable of handling multiple modalities, we can expect to see a wave of new and innovative applications emerge.

So to recap, we have OpenAI's O3, DeepSeek v3, and Google's Gemini 2.0: three incredibly powerful AI models, all vying for dominance. What's the takeaway message here? What does this all mean for the future of AI? The key takeaway is that the field of AI is evolving at an unprecedented pace.

We're seeing a rapid increase in model complexity, performance, and accessibility. And as we've discussed throughout this deep dive, that comes with both incredible opportunities and some very real challenges. Exactly. We need to be mindful of the potential risks and ensure that AI is developed and deployed responsibly. But we also need to embrace the incredible potential of these technologies to solve some of the world's most pressing problems. Absolutely. From climate change and disease to poverty and inequality, AI has the potential to make a real difference in the world.

It's a powerful tool that we need to learn to use wisely. Agreed. Speaking of using AI wisely, remember that story about NASCAR using AI to redesign its playoff format? Yeah, that was a fascinating example of how AI is being applied in unexpected ways. It really highlights AI's versatility and its potential to optimize and improve systems across a wide range of industries.

From finance and healthcare to transportation and entertainment, we're seeing AI being applied in ways that were unimaginable just a few years ago. It's like AI is becoming the ultimate Swiss Army knife of technology. I like that analogy. But let's shift gears for a moment and revisit a development that has some potentially profound implications.

Semicong, the open-source, semiconductor-focused large language model. Right. Semicong, the AI that's helping to revolutionize chip design. Exactly. By making chip design more accessible and efficient, Semicong has the potential to accelerate innovation in key technological fields. And as we discussed earlier, semiconductors are the building blocks of modern technology. So advancements in chip design could have ripple effects across countless industries.

Absolutely. Imagine faster computers, more powerful smartphones, and even new breakthroughs in areas like artificial intelligence and renewable energy.

Semicong could play a key role in driving those advancements. It's really exciting to think about the possibilities. But as we marvel at these advancements, it's crucial to remember that AI is a tool. And like any tool, it can be used for good or for ill. That's an important point to remember. We need to be having thoughtful conversations about the potential impact of AI on society and work together to ensure that it's used in a way that benefits all of humanity.

All right, we're back for the final stretch of our AI deep dive. It's been a whirlwind tour of cutting-edge advancements, but all good things must come to an end, right? Yeah, time flies when you're talking about AI. It really does. So before we wrap things up, I have to ask the big question. What's next for AI? What does the future hold?

Well, that's the million dollar question, isn't it? The field is evolving so rapidly, it's hard to say for sure, but there are definitely some trends that are worth watching. Okay, so put on your future predicting hat. Yeah. What do you see on the horizon? Well, one area that I'm particularly excited about is personalized medicine.

We're already seeing AI being used to develop tailored treatments based on an individual's unique genetic makeup, lifestyle, and environmental factors. So instead of a one-size-fits-all approach to healthcare, we're moving towards treatments that are specifically designed for each patient. Exactly. It's like having a custom-made suit, but for your health. I like that analogy. And what about other fields? Are there any other areas where you see AI having a major impact in the near future? Absolutely.

Another area that's ripe for disruption is climate modeling. AI is already being used to analyze vast amounts of data about the Earth's climate and to develop more accurate predictions about future climate change. So AI could help us get a better handle on this whole climate change thing. Exactly. It could help us to understand the complex dynamics of the climate system and to develop more effective strategies for mitigation and adaptation. That's a really important application. And those are just two examples. The potential applications of AI are truly limitless. It's going to touch every aspect of our lives in the years to come. I completely agree. It's hard to overstate the transformative potential of AI. It's going to change the way we work, the way we learn, the way we interact with the world around us. It's both exciting and a little bit daunting to think about. It is. There are definitely challenges that we need to address, but I'm optimistic about the future.

I think AI has the potential to make the world a better place if we use it wisely. And that's the key, right? Using it wisely. We've talked a lot about the potential downsides of AI, the risks of misuse, the ethical considerations. We have. It's important to be aware of those risks and to develop safeguards. But we also need to be mindful of the potential benefits, the potential to solve some of the world's most pressing problems. Like climate change, disease, poverty, inequality.

Exactly. AI is a powerful tool. And like any tool, it can be used for good or for evil. It's up to us to make sure that it's used for the benefit of all humankind. Well said. So as we wrap up this deep dive into the world of AI, what's the one message you want our listeners to take away? Hmm.

That's a tough one. But I think the most important thing to remember is that the future of AI is not predetermined. It's something that we're actively shaping each and every day. Through our choices, our investments, and our engagement in these critical conversations, we can help to steer AI in a direction that benefits all of humanity. I love that. It's a powerful reminder that we're not just passive observers in this technological revolution; we have a role to play in shaping the future. Absolutely. So to our listeners out there, stay informed, stay engaged, and keep asking the tough questions. The future of AI is in our hands. Couldn't have said it better myself. And with that, we'll bring this episode of The Deep Dive to a close. Thanks for joining us on this journey into the fascinating world of AI. It's been a pleasure. Until next time, keep exploring, stay curious, and never stop learning.