#91 - DeepMind Mafia, DishBrain, PRIME, ZooKeeper AI, Instant NeRF

Last Week in AI


Hello and welcome to SkyNet Today's Last Week in AI podcast, where you can hear AI researchers chat about what's going on with AI. As usual, in this episode, we will provide summaries and discussion of last week's most interesting AI news. You can also check out our Last Week in AI newsletter at lastweekin.ai for articles we did not cover in this episode. I am one of your hosts, Andrey Kurenkov.

And I am not Dr. Sharon Zhou, but filling in, I am Daniel Bashir.

This week, we'll be discussing a few different articles. On the applications and business side, we'll be looking at the new startups that have been created by the DeepMind mafia. We'll also look at some new technology from NVIDIA. On the research and advancement side, we'll be looking at a neural network with biological neurons and a new deep learning approach for generating AI chip architectures.

On the society and ethics side, we'll look at US-China collaboration on AI papers, as well as the vulnerability of banks using AI to Russian sabotage. Finally, we'll take a look at Ubisoft's new machine learning tool for modeling animals and an NVIDIA AI model that can turn 2D snapshots into a 3D rendered scene.

All right, so a fun episode, and it's fun to have Daniel filling in. He's been involved with the podcast since its inception, I think. So, yeah. And before we start, just for fun, we have a couple of new reviews on Apple Podcasts. So I just want to shout them out.

Yeah, yeah. And they're quite, quite nice. One of them, the title is "Perfect, quick, clear, wide-ranging insights." So pretty positive. And the review says that although this is not my field, I felt AI is something I should know a little bit about. And then it goes on to say that we go through a wide-ranging handful of real examples of AI work

and make it possible to get a little handle on what's going on. So yeah, that's great. That's what we aim to do for anyone, especially outside of AI, to get a glimpse of what's going on and also for people in sort of the weeds of it to get a perspective outside of just research.

And yes, let's go ahead and dive in. First up in applications and business, we have Meet the DeepMind Mafia. These 18 alumni from Google AI Research Lab are raising millions for their startups from climate to crypto.

So as the title says, this is an article surveying a bunch of companies being started by people who used to work at DeepMind, often prominent researchers there. And this is on Business Insider. So just to give you a few details from there, we won't go through all of them, but DeepMind is quite big now. They have 1,200 employees now.

And it's, you know, pretty old now, or at least has been around since about 2014. So a lot of people have joined and worked there. And so with these people that are starting new companies, there's kind of a variety that includes the original co-founder of DeepMind, whose last name is Suleyman,

and he's starting this startup, Inflection, with another co-founder, which is pretty new. There aren't many details about it, but that just got reported. Then there's also ex-DeepMinder Jack Kelly starting Open Climate Fix. There are also engineers Miljan Martic and Peter Toth starting a Web3 venture, Kosen Labs.

And there's a few more. So there is the personal assistant company Saiga. There is actually a nonprofit called Africa I Know and another one called Inductiva AI from a founding member of the AlphaFold team. So really a whole bunch of different companies with different applications of AI. And yeah, pretty cool to see all of these people who have done a lot of exciting work go on to

develop new companies that will spin out and do a whole bunch of useful things. Yeah, it's great to see and I guess not even that much of a surprise given just the massive concentration of AI talent at DeepMind. But it's nice in the sense that, in terms of looking at the impacts in general, it feels like the different areas that could benefit from AI

are almost better served by having that concentrated talent disperse out a bit and start all of these new ventures. So I'm excited to see where this all goes. Yeah, I agree. I think DeepMind has been doing more and more sort of applied work in the vein of AlphaFold, where they address a particular task. But they're still sort of a general AI research lab focused on basically advancing AI on a whole bunch of fronts.

So these people, like the founding member of the AlphaFold team, Hugo Penedones, obviously have a lot of expertise in these areas and having them move on and then really focus in on these problems, I think is really exciting. We'll just have to watch and see where this all goes.

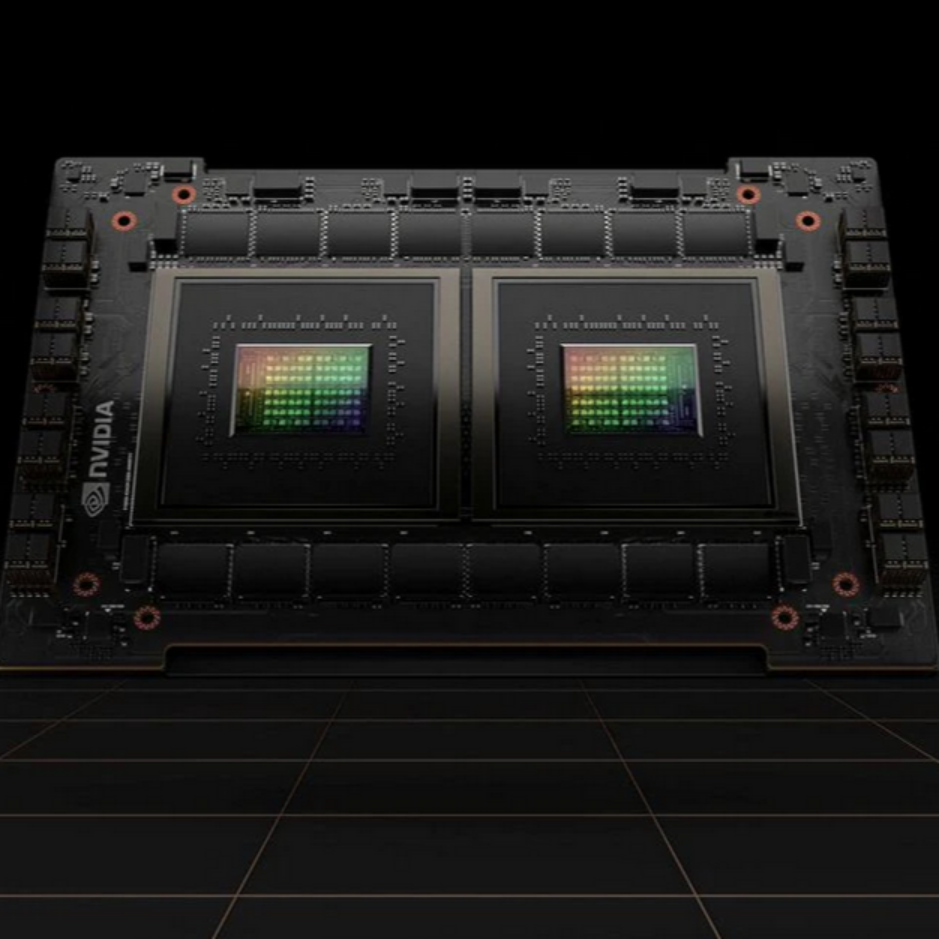

So for our second piece on this front, we're going to look at some new technology that was recently unveiled by NVIDIA. And really for all these innovations, the big focus

is, as you'd expect, on speeding up AI. So the first one is the new H100 chip. This is superseding NVIDIA's previous top-of-the-line A100 chip. It's named after Grace Hopper and is actually the first ARM-based chip to be released from NVIDIA since its deal to buy ARM fell apart. It also unveiled a new supercomputer called EOS, which it claims will be the world's fastest AI system.

But I think for a lot of folks, the H100 chip is a really big deal right now.

Jensen Huang, the CEO, called H100 the engine of AI infrastructure. Another really interesting thing is that the H100 actually hasn't been released yet despite the fact that it's been announced. So a lot of others in the ecosystem are actually kind of wondering what sort of game NVIDIA is playing here. Perhaps they're trying to lock up the market, hard to say. But

In terms of what the H100 is actually offering over its predecessor, there's a couple of interesting things I just wanted to call out because I think that they confirm and sort of comment on a lot of the development that's come in the AI space recently, both on the software and hardware side.

One particularly interesting bit is that they introduced something called a transformer engine, really a specific part of the hardware that is literally just focused on optimizing transformers. So they claim up to nine times transformer model speed up for training on a cluster. They also have a sparsity feature,

But with the transformer engine, another cool thing there is how it manages operations between different data types. So again, we said they claim nine times faster AI training, as well as 30 times faster AI inference speedups over the A100.

There's a lot of really interesting projections in terms of the H100's performance. Time will tell us what things look like, but it really does come across as a validation and I guess indicative of the huge bets that the hardware industry is making on AI right now. So I thought this was a really interesting announcement.

Yeah, same. I think we've obviously been seeing a trend of gigantic models being more and more common. I mean, generally there's been a trend of increasing model size, but lately even more so. So obviously I think there will be a need for this sort of advanced hardware. And the fact that they're adding these sort of optimizations specifically for AI is

makes a lot of sense, right? Because now with companies like Google and Facebook and OpenAI, they really are devoting a huge amount of their cloud infrastructure and computing infrastructure specifically to AI. And, you know, I mean, obviously Google already does this with their TPU chips or systems, which are, you know, tensor something, something, right?

Tensor processing unit, I think. Yeah, yeah, yeah. So they already are on this train of creating their own hardware. I think Meta or Facebook also did this sort of thing. So clearly there's a need and it's cool to see NVIDIA, whose GPUs, in case anyone doesn't know, power AI for, I guess, most companies, most researchers, etc.,

It's exciting to see them going more in this sort of optimized route. Definitely. I think it's a pretty interesting development and it really does seem like pretty much every large company at this point, you know, Amazon included, is really throwing a lot of its chips into these acceleration architectures. Mm hmm. For sure.

And now on to our lightning round where we just quickly go over some other stories we did not have time to summarize. First up, we have robotic exoskeleton using machine learning to help users with mobility impairments. So here they're using machine learning to basically let the exoskeleton guess the intentions of a user and thereby be more reactive and easy to control. Next up, we have

Kroger and NVIDIA partnered to reinvent the shopping experience with AI and digital twin simulations. Not many details here, but basically there is some sort of strategic collaboration. And Kroger is a huge shopping chain, in case you don't know, in much of the US. So it is kind of interesting to see and it'll be cool to see what they actually develop.

Absolutely. Next up, we have top AI execs, including Richard Socher, are launching a $50 million AI-focused venture fund called AIX Ventures, which should be really interesting to see, I think, especially as so much venture money is flowing into AI these days. But I'm curious to see what it'll look like as maybe folks who really spent a lot of time in the AI research space are starting to create their own funds and where that goes.

And the final one is that Mayo is launching an AI startup program with assists from Epic and Google. This is called the Mayo Clinic Platform Accelerate. It's a 20 week initiative aimed at helping four companies in its initial cohort become market ready. Yeah, so a lot of activity in the business front as usual.

And now moving on to our stories in research and advancements for AI. First up, we have researchers from Cortical Labs develop DishBrain, a neural network with biological neurons. So this Australian company, Cortical Labs,

is integrating neurons into digital systems, basically trying to use biological neurons' processing capability to build synthetic biological intelligence.

So they looked at neural networks grown from mouse and human cells. And then from there, they developed DishBrain, which is a system that demonstrates natural intelligence by using those neurons' intrinsic adaptive computation in a structured context. So specifically, they made this biological neural net

play a game similar to Pong. A sequence of electrodes in this biological neural network, which they call a BNN, was triggered based on the game state to deliver sensory input, and then other electrodes controlled the up and down motion of the paddle. So basically they, you know, grew a little brain, sounds like, to play Pong.

And yeah, I think this is quite interesting. I think, you know, I do think there might be limits to what we can do with traditional computing paradigms. Even as we kind of scale up, there might be a limit. So if we can actually do the sort of biological neural network training, that could be a big deal. Although this is obviously very early on.

Yeah, this is definitely pretty early stages, but...

I am also glad that people are still exploring other approaches to synthetic intelligence besides just the software-based neural network paradigm. I think that seems to right now just be leading us down this road of let's create larger and larger models and throw even more stupid amounts of data and compute at them, which it's really interesting to watch and see what kinds of new capabilities come out of those

scaling laws. But at the same time, it's a little bit tiring and you really wonder, OK, is this the path we want to go down if we're thinking back to like when the field of AI was started, what people were trying to get at back then? So I'm glad that people are still pushing on different approaches here. And I hope we'll see more coming out of that.

Yeah, I think there's been also a lot of excitement or a lot of work in the past decades on so-called neuromorphic chips, which kind of do a combination of what this is doing and what we have in AI right now, which are chip designs that are meant to emulate

neuron activity and take more direct inspiration from how the human brain works or how animal brains work, but still in sort of more traditional silicon-based activations, you know, with transistors and so on. And yeah, that's still being worked on by many groups. So far GPUs have

been much more kind of successful, in part due to them being general purpose and easy to program and easy to work with. But I could see neuromorphic architectures becoming more of a big deal in the coming decades. As we saw, NVIDIA has already created this more AI-optimized sort of

chip and it may well be that neuromorphic architectures will ultimately enable better progress over the long term. That makes a lot of sense. I think it's really going to be this interplay between the software and the hardware, right? Because in some ways, the software, the models, the new developments that are going on in the space and computing in general are going to have a big impact on

on the hardware that gets developed as we are seeing in the development of AI and just domain specific accelerators today. But then at the same time, you see the reverse trend of how the hardware that is available does limit what sorts of algorithms actually get built, right? Just because certain sorts of computations like parallel computations on GPUs, for example, are going to work better on those. So

I think it's really going to be this game of back and forth going on in the future. But I'm definitely curious as well to see where, if, and how neuromorphic architectures start to enter the game. So our next story on this front actually brings us back to the old AI chip scene. And this is about a new deep learning approach called PRIME, developed by some Google AI and UC Berkeley researchers.

This actually generates AI chip architectures by drawing from existing blueprints and performance figures. Now, this is actually following on some recent work that I think also comes out of places like Google AI, where they were actually using reinforcement learning for things like chip planning. So this definitely isn't the first instance where people are trying to figure out, okay, how can we use AI in order to design hardware better?

Now, this is looking, of course, specifically at hardware accelerators. And when you want to find this balance between computing and memory resources and communication bandwidth, it's a little bit hard to meet design limitations. So PRIME offers this data-driven optimization approach that generates AI chip architectures by using logged data without having to do further hardware simulation, which actually also saves a lot of time.

And what's really cool about this too is they claim that this allows data from previous experiments to be reused in a zero-shot fashion, even when you're targeting a different set of applications. They also claimed a couple of interesting results. In particular, although they didn't train PRIME to reduce chip area, it actually improved latency over EdgeTPU, a previous approach, by 2.69 times and lowered chip area by 1.5 times. So...

I guess a lot of what we're seeing here is the introduction of machine learning techniques, not just in using hardware, but into the creation of hardware itself. And the fact that we are seeing results that indicate improvement on existing hardware is really interesting. And I think that we're going to start seeing a lot more work on this front in the future and not just in the planning of chips,

but also in the actual machine learning compilers that get used for AI accelerators and so on.

Yeah, exactly. I think when we discussed that story about using reinforcement learning for chip design, it was already very exciting. And I think at that point, Google had said that they actually incorporated some of these design findings into their TPU and that improved their performance.

And yeah, these results are again very striking. I mean, reducing chip area by about a third here, or reducing latency by more than a factor of two. These are major changes, major improvements.

Almost, you know, maybe it's a little bit too good to be true. It's not too clear how directly usable these are. But

even if it's not quite as effective as in these results, I think it obviously could still be very impactful. And it seems to make sense that in the future, you know, professionals, electrical engineers who do chip design would use these sorts of AI tools from the outset to, you know, really optimize things. And,

you know, our computers will be faster, hopefully. So that'll be good. Yeah, I think definitely in addition to the points you made about performance improvements, like reducing latency, also just the fact that, you know, you can get a sense of, um,

generating these AI chip architectures without further hardware simulation and just the fact that these approaches save time in the actual development as well. So, you know, hopefully we won't just have better hardware for training AI models, but we'll be able to iterate on and get it faster, which is really exciting. Yeah, yeah, I agree. So very cool new results in this area.
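To make the "design from logged data, no new simulation" idea a bit more concrete, here is a minimal Python sketch. It is not PRIME's actual method: the logged designs, the latency numbers, the area formula, and the nearest-neighbor surrogate with a distance penalty are all hypothetical stand-ins, chosen only to show the general shape of offline, data-driven optimization.

```python
# Hypothetical logged experiments: (compute_units, memory_kb) -> latency in ms.
# All numbers are invented for illustration.
LOGGED = [
    ((2, 64), 9.0),
    ((4, 64), 6.5),
    ((4, 128), 5.0),
    ((8, 128), 4.2),
    ((8, 256), 4.0),
]


def distance(a, b):
    """Euclidean distance between two design tuples (toy metric, mixed units)."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def predict_latency(design, data, penalty=0.01):
    """Surrogate model: latency of the nearest logged design, plus a
    penalty that grows with distance from the data. The penalty keeps the
    optimizer from trusting predictions far away from anything measured."""
    nearest_design, nearest_latency = min(
        data, key=lambda row: distance(design, row[0])
    )
    return nearest_latency + penalty * distance(design, nearest_design)


def area(design):
    """Hypothetical chip-area model in arbitrary units."""
    compute_units, memory_kb = design
    return 10 * compute_units + 0.25 * memory_kb


def pick_design(candidates, data, area_budget):
    """Choose the feasible candidate with the lowest predicted latency.
    No simulator is called here; only the logged data is consulted."""
    feasible = [c for c in candidates if area(c) <= area_budget]
    return min(feasible, key=lambda c: predict_latency(c, data))


CANDIDATES = [(2, 64), (4, 128), (6, 128), (8, 256), (16, 512)]
best = pick_design(CANDIDATES, LOGGED, area_budget=100)
print(best, predict_latency(best, LOGGED))
```

The point of the sketch is only that the search loop reads from a table of past measurements instead of running a new hardware simulation for every candidate, which is where the time savings discussed above would come from.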

Onto our lightning round, we see the first story here is seeing an elusive magnetic effect through the lens of machine learning. So an MIT team incorporates AI to facilitate the detection of an intriguing material phenomenon that can lead to electronics without energy dissipation.

pretty cool. Then we got ML researchers from Oxford propose a forward mode method to compute gradients without back propagation. A lot of jargon there for people not so aware, but basically this sort of thing could help optimize neural networks faster potentially. So it could be a big deal if, you know,

this turns out to be a better way to optimize neural networks. Exciting. So for our next few stories, first off, machine learning can predict wind energy efficiency. An article recently published in the journal Energies presented this comparative study of efficient wind power predictions using machine learning methods, which is definitely an area that I hadn't quite thought about machine learning being applied to.

Another area is on machine learning techniques speeding up glacier modeling by a thousand times. And these sorts of models can be a pretty valuable tool to assess potential future contributions of glaciers to sea level rise.
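For listeners curious about the "gradients without backpropagation" item in this lightning round, here is a small Python sketch of the general forward-mode idea as we understand it: pick a random direction, compute the directional derivative in a single forward pass, and scale the direction by that number. The `Dual` class, the toy function `f`, and the sample counts are assumptions made for illustration, not code from the paper.

```python
import random


class Dual:
    """A value paired with its derivative along one chosen direction."""

    def __init__(self, value, tangent=0.0):
        self.value = value
        self.tangent = tangent

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.value + other.value, self.tangent + other.tangent)

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule carried along with the forward computation.
        return Dual(
            self.value * other.value,
            self.value * other.tangent + self.tangent * other.value,
        )

    __rmul__ = __mul__


def f(x):
    """Toy objective: f(x0, x1) = x0^2 + 3 * x0 * x1."""
    return x[0] * x[0] + 3 * x[0] * x[1]


def forward_gradient(func, point, rng):
    """One forward pass: directional derivative along random v, times v."""
    v = [rng.gauss(0.0, 1.0) for _ in point]
    out = func([Dual(p, t) for p, t in zip(point, v)])
    return [out.tangent * vi for vi in v]


def averaged_forward_gradient(func, point, samples, seed=0):
    """Average many one-pass estimates; the average approaches the gradient."""
    rng = random.Random(seed)
    total = [0.0] * len(point)
    for _ in range(samples):
        g = forward_gradient(func, point, rng)
        total = [t + gi for t, gi in zip(total, g)]
    return [t / samples for t in total]


estimate = averaged_forward_gradient(f, [1.0, 2.0], samples=20000)
print(estimate)  # should land near the true gradient (8, 3)
```

A single estimate is noisy, so the sketch averages many of them just to show that they centre on the true gradient; in an optimizer one would typically use each noisy estimate directly as a descent direction.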

Yeah, so as always, lots going on. Moving on to our society and ethics stories. First up, we have US-China collaboration in AI papers drops amid ongoing tech war, according to a Stanford report. And this is from the South China Morning Post.

So the basis of this article is recently the AI index report got released, which we talked about a bit in prior episodes. And this was released by the Stanford Institute for Human-Centered AI. And in that report, it was shown that

Last year, there were approximately 9,600 papers that were co-authored by researchers affiliated with both U.S. and Chinese institutions, which was down from more than 10,000 the year before that. So this article basically infers that this collaboration drop happened as a result of this ongoing tech war situation,

where some US-based Chinese researchers have been accused of intellectual property theft and some American schools, including MIT, have cut research ties with Chinese tech firms like Huawei.

So these claims are, I don't know, pretty interesting. It seems like there have been a lot of tensions in the past few years with these sorts of controversies, I guess, about

tech companies from China and how it relates to the US. At the same time, it's worth noting that Stanford HAI, their own article is called China and the United States unlikely partners in AI. And it highlights that AI cooperation between China and the US leads the world. It has increased five times since 2010.

And they produced 2.7 times more AI papers between them than the next most collaborative pair, the United Kingdom and China. So still a lot of collaboration. And China and the US are some of the biggest publishers of AI conference papers. So...

there is a lot of collaboration, perhaps unsurprisingly. So I guess some mixed signals, but something that probably is worth being aware of. Yeah, this is definitely a bit unsurprising, but still really interesting. Just the level of AI collaboration between the US and China amidst what many are saying are, of course, you know, souring relations,

some pretty virulent rhetoric at times. And I think also just a lot of rhetoric around trying to decouple ourselves from China in particular. I think that this just speaks to how closely tied our ecosystems are technologically. And even if we really did want to decouple from China, I think that

it's really difficult for a number of reasons. And, you know, this isn't just commenting on things in general, but when you look to the AI space, for example, you look at how much talent the United States attracts from China. You look at the cooperation between researchers from institutions and the fact that so many U.S.-based companies also have pretty large research centers over in China. So I think that this hybridization, this

high level of cooperation is something I expect to continue. What I'm really interested in is what that's going to look like in the midst of tensions potentially rising even higher than they are already. Yeah, yeah, we'll have to keep an eye on it. It's quite interesting.

It's probably likely, I would imagine, that this drop in collaboration is specific to these big tech companies that have been controversial. So Huawei, for instance, has been kind of accused of enabling information gathering and basically spying with their networking technologies.

And as we know now, a lot of AI research comes from industry in addition to academia. So I would imagine some of the collaboration drop may be specific to industry and R&D that comes from industry as opposed to collaboration within academia. I think for academia generally, you know, these kind of tech war kind of things don't matter too much.

So I'm not too worried, but definitely interesting to see these sorts of socio-political or economic and political tensions leading to impacts on research collaboration.

Sure. Yeah. And I guess another thought there is just that it's always good to see international collaborations in, you know, AI research. And I guess you mentioned that this is maybe more likely to affect industry. But I do think that, you know, this sort of collaboration does always tend to push the field forward. You know, people can bring a diversity of perspectives to the table.

Of course, that's just very broad strokes thoughts on this. But I do wonder about how things will differ in the future if, especially in industry, people actually are able to decouple a little bit and do less collaboration.

Yeah. Yeah. Also on that note, another thing worth noting is often in media, there's been a discussion of sort of this race between China and the U.S. to win in AI research or development or something.

which is a simple narrative that, you know, really casts the US and China as opponents where one can win in sort of AI dominance. And that's oversimplistic. And there's a lot of reasons why this framing is basically wrong. And as we see here, this large amount of collaboration is one instance where you can see it's not sort of a versus relationship necessarily.

Definitely. I'm glad you called that out. I do think that a lot of the coverage tends to be fairly simplistic and does paint us as bitter enemies, but really the picture is a lot more complicated. So I guess moving on to our next story here, this concerns another international actor that has been in the news quite a lot lately.

So experts have started to warn that banks and other financial institutions that are utilizing artificial intelligence could be uniquely susceptible to retaliatory Russian cyber attacks.

Now, the reason people are worried about this is that banks and global financial institutions have played a pretty integral role in the whole sanctions regime against Russia. So they've blocked money flows from Russian banks, denied them access to international markets, even frozen the assets of Putin.

And what's interesting here is that at the same time as banks have started to use AI systems more themselves, there are a lot of vulnerabilities in those AI systems that are significant and pretty widely overlooked at many of these financial institutions.

Now, this comes in a lot of ways. So AI systems can be subject to, for example, data poisoning attacks where you can sort of screw with the model's predictions by basically infecting the data that is put into it. You can do a lot of different things there as well. There's worries about the privacy of data that is fed into AI models and

What's worrying about that, though, is that this is a little bit of a harder problem than software vulnerabilities in certain ways. Machine learning vulnerabilities can't really be patched in the same way that you patch other software. So a potential attack could actually last a lot longer when it's been done against an AI system.

And I think these security weaknesses are being called out by a lot of folks. So government leaders like President Biden worry Russia may start using cyber attacks to lash out against these financial institutions as sanctions take a continued toll. So it'll be interesting to watch what, if anything, Russia does.

in terms of attacking these financial institutions. But I think there's a lot of urgency there, especially as they start trying to deploy these applications. Yeah, I think this seems fairly speculative at this point. I'm not sure to what extent banks do use AI. Certainly, they're not using it in core security aspects where, you know, AI makes any sort of decisions.

But it is a pretty notable topic, at least, as more and more companies integrate AI neural networks in different things, like spam detection or network monitoring or things like that.

You probably couldn't breach them to get passwords or, you know, steal money, but you could probably mess with and sort of interrupt operations, I would imagine. And yeah, I think it's a kind of very fresh area, and the types of cyber attacks and the kinds of impacts that result are not very well known. So yeah,

Certainly for the state actors like Russia that invest a lot in their cyber war capabilities, this will be an area where they do a lot of R&D and try to find these vulnerabilities before maybe they're known and find basically new ways to attack. So...

Certainly, I think not too worrying for me at the moment, but does bring up a topic that I think is important to be aware of and especially for these companies, obviously, to be mindful of in this coming decade. That's a good call out that this is a pretty speculative worry. I do think, though,

As you also kind of affirmed, it's definitely valid that in the future, this sort of thing could become more of a problem. And I think that there is a lot of demonstrated interest by banks and financial institutions to start to use AI models in sort of their day-to-day operations. A lot of them are even interested in, you know, larger models like GPT-3 and using those for different things. So

I guess even if this is a pretty early worry, hopefully, you know, as banks are starting to consider using these AI systems for, you know, more and more integral operations, they'll be really careful about how they do so and how they integrate them and the security practices around them as well. Mm hmm.

Yeah, and I suppose it's worth noting on the research side, there has been a lot of work over the past maybe half decade focused on security of AI systems and exploits. And there's been a lot of sort of weird exploits that have been found where

One notable example is if you take an image of some object or some animal like a panda and just change one pixel very slightly or a bunch of pixels very slightly such that it still looks exactly the same to the human eye, the neural network gets fooled. And yeah, there's these different kinds of vulnerabilities

and exploits already found in academia. And so certainly I think there are kind of unexpected fronts on which AI will lead to new ways that could be attacked. And already there's research on how to counteract some of the ones we know, but there have been examples of these forms of attack and

Certainly, there is some basis to believe these concerns based on that. Absolutely.
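The panda example mentioned above can be illustrated with a toy Python sketch. This is a deliberately tiny stand-in, assuming a made-up linear "classifier" over 100 pixel values rather than a real image model; the weights, pixel values, labels, and `epsilon` are all hypothetical. It shows the core trick behind this family of attacks: nudge every input value by a tiny amount in whichever direction pushes the model's score the wrong way.

```python
def score(weights, bias, pixels):
    """Linear score; positive means the toy model says 'panda'."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def predict(weights, bias, pixels):
    return "panda" if score(weights, bias, pixels) > 0 else "gibbon"


def sign(x):
    """Return -1, 0, or 1 depending on the sign of x."""
    return (x > 0) - (x < 0)


def adversarial(weights, pixels, epsilon):
    """For a linear model, the gradient of the score with respect to the
    input is just the weight vector, so moving each pixel by epsilon
    against the sign of its weight lowers the score as fast as possible
    for a given per-pixel change."""
    return [p - epsilon * sign(w) for w, p in zip(weights, pixels)]


# Hypothetical model and "image": 100 pixels, alternating weights.
WEIGHTS = [1.0, -1.0] * 50
BIAS = 0.0
IMAGE = [0.52, 0.50] * 50

ATTACKED = adversarial(WEIGHTS, IMAGE, epsilon=0.02)
largest_change = max(abs(a - b) for a, b in zip(ATTACKED, IMAGE))

print(predict(WEIGHTS, BIAS, IMAGE))     # original prediction
print(predict(WEIGHTS, BIAS, ATTACKED))  # prediction after the perturbation
print(largest_change)                    # no pixel moved by more than epsilon
```

Each pixel moves by at most 0.02 on a 0-to-1 scale, yet the many small changes add up across the input and flip the label, which is roughly why high-dimensional inputs like images are so exposed to this kind of manipulation.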

And onto our lightning round. First up, kind of following up on what we just discussed, there's a story called Invading Ukraine Has Upended Russia's AI Ambitions and Not Even China May Be Able to Help. This is more of an editorial article that says, you know, likely this will be very bad for the country's ambitions to be a leader in AI.

Pretty speculative, you know, maybe not, but interesting read given the situation. Then next up we have automation will erase knowledge jobs before most blue-collar jobs, according to the Future Today Institute CEO. So

this is basically citing the Future Today Institute CEO Amy Webb saying that high-skilled professions may be more susceptible to replacement by AI, which is also the conclusion of prior kind of research and reports on this. So I think many people expect it to be lower-skilled, lower-paid workers. But in fact, current predictions are more sort of middle class or

kind of mid-range jobs being susceptible to replacement? Yeah, I think the earlier MIT Future of Work report or one of those task forces came to a pretty similar conclusion. For our next story, we're seeing that Ukraine has actually been using facial recognition software to identify the bodies of Russian soldiers killed in combat.

And they then trace their families to inform them of their deaths, which I guess is an interesting, possibly humane way of using the technology. Our last story in this lightning round is that software vendors are pushing explainable AI that often isn't. And this really kind of comes on the tail of a lot of concerns about explainable AI. What's really going on here is

As more and more people using AI systems, both within companies but then also buyers outside of companies, have started to question, "Hey, how do these systems actually work?" We have an entire research field that's really been dedicated to figuring out, "Okay, how do you explain the way these systems work to people?"

What's kind of unfortunate about it is, you know, there's a lack of standards in that explainability. And also a lot of the real-life tools that have been developed for these explainability purposes don't actually seem to achieve what they set out to. There have been a number of studies that have shown

that different explanation methods actually really just convince people that they understand the AI systems they're looking at when they actually don't understand them at all. So this really isn't new news to us, but it's good to see that there's more coverage of this problem and the hope

I think for me is that some of these vendors that are pushing explainable AI will maybe come to their senses a little bit, will start to look at these practices and start pushing something that's a little bit more reasonable and really trying to achieve what they set out to, making sure that people actually understand these AI systems and not just using explainable AI as a marketing tool.

Yeah, exactly. This is pretty interesting. It's covering an older paper that was published in a Lancet publication about medical research. And the title of this paper is pretty fun: "The false hope of current approaches to explainable AI in healthcare."

So as you said, I think it's good that we have researchers looking at and analyzing these situations. Actually, this article also cites a more recent paper, "The Disagreement Problem in Explainable Machine Learning." So it's really doing an overview of this kind of research. And we've seen before research also pointing out bias in these deployed sort of models for facial recognition and so on. So good to see a lot of this sort of critical examination.

And on to our fun and neat stories. First up, we have Ubisoft shows off machine learning tool for modeling animals. So Ubisoft is a video game company, in case you don't know, and they showed off the ZooBuilder AI tool in a video that was posted in a tweet.

So it's kind of like a mini documentary. In three minutes, it goes over how they built this tool that is meant to help with animating animal motion.

Animation these days in video games for humans relies a lot on mocap, on having actual human actors move around, because that generates much more realistic motion than hand animation. But for animals, you know, mocap isn't very easy to do, especially for wild animals. So they are hand animated.

And this AI tool basically promises to take videos of animals moving around in their environment and then use AI to convert that to animation. And it does this by sort of tracking the joint states of the animal and then converting those joint states to some sort of animated model that can replicate that motion.
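
As a rough illustration of the "joint states" idea described here, the sketch below turns tracked 2D keypoints from video frames into a per-frame joint angle, which is the kind of value an animation rig could consume. This is a minimal sketch under assumptions, not Ubisoft's method: the keypoint names (`hip`, `knee`, `ankle`), the frame format, and both function names are hypothetical.

```python
import math

def joint_angle(parent, joint, child):
    """Angle in degrees at `joint` between the segments to `parent` and `child`.

    Each argument is an (x, y) keypoint, e.g. from a pose tracker (assumed input).
    """
    v1 = (parent[0] - joint[0], parent[1] - joint[1])
    v2 = (child[0] - joint[0], child[1] - joint[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        return 0.0  # degenerate case: two keypoints coincide
    # Clamp to guard against floating-point drift outside [-1, 1].
    cos = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos))

def keypoints_to_angles(frames):
    """Convert a list of per-frame keypoint dicts into knee angles over time."""
    return [joint_angle(f["hip"], f["knee"], f["ankle"]) for f in frames]

# Hypothetical tracked keypoints for two video frames.
frames = [
    {"hip": (0.0, 1.0), "knee": (0.0, 0.0), "ankle": (1.0, 0.0)},
    {"hip": (0.0, 1.0), "knee": (0.0, 0.0), "ankle": (0.0, -1.0)},
]
angles = keypoints_to_angles(frames)
```

A real pipeline would track many joints in 3D and retarget them to a skeleton, but the core step of going from tracked points to joint values looks roughly like this.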

So yeah, check out this article. They embed the tweet in there so you can actually watch the video. And it's quite fun to see, you know, this video of, I don't know, a cheetah or a lion moving around, and then you can use that for a digital animal. This is still a prototype, so it's not being used for game development, but it's very easy to see how it would be down the line.

It's definitely pretty neat to see this work that could advance, I guess, the realistic nature of the things we see in games. Our next story actually shares quite a bit in common with this one that we just read. So NVIDIA released an AI demo pretty recently, and it has this tool called Instant NeRF that quickly turns a few dozen 2D snapshots into a 3D rendered scene.

And the way this method works is by looking at the color and light intensity of different 2D shots and then generating data to connect these images from different vantage points. From there, it renders a finished 3D scene. And what's really cool about this is it can be used to create avatars or scenes for virtual worlds, or to train robots and self-driving cars to actually understand the size and shape of real-world objects by capturing 2D images.
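
To make the "color and light intensity" description a bit more concrete, here is a minimal sketch of the volume rendering step used in the original NeRF formulation: samples along a camera ray each have a density and a color, and they are blended front to back into a single pixel. This is not NVIDIA's Instant NeRF code and says nothing about how their speedup works; the function name `composite_ray` and the toy inputs are assumptions for illustration. In a real system the densities and colors would come from a trained neural network.

```python
import math

def composite_ray(densities, colors, step):
    """Blend samples along one ray into an RGB pixel (front-to-back compositing).

    densities: volume density at each sample along the ray
    colors:    (r, g, b) at each sample
    step:      distance between consecutive samples (assumed uniform here)
    Returns the pixel color and the light still unabsorbed past the last sample.
    """
    transmittance = 1.0  # fraction of light that has not been absorbed yet
    pixel = [0.0, 0.0, 0.0]
    for sigma, rgb in zip(densities, colors):
        # Opacity contributed by this sample over one step.
        alpha = 1.0 - math.exp(-sigma * step)
        weight = transmittance * alpha
        for k in range(3):
            pixel[k] += weight * rgb[k]
        transmittance *= 1.0 - alpha
    return pixel, transmittance

# Toy ray: empty space first, then a dense red surface, then green behind it.
pixel, remaining = composite_ray(
    densities=[0.0, 1000.0, 1.0],
    colors=[(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    step=1.0,
)
```

In the toy ray, the dense red sample blocks nearly everything behind it, so the green sample contributes almost nothing to the pixel. Training adjusts the network so that pixels rendered this way match the input photos from every vantage point.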

It's also kind of mentioned that you could capture video conference participants and their environments in 3D, which I'm not quite as excited about. But it's a pretty impressive tool. The NVIDIA researchers said they were able to export scenes at a resolution of 1920 by 1080 in just tens of milliseconds. They also released the source code for the project. So if you want to play around with it, re-implement their methods, it's all out there for you.

Yeah, yeah, this is actually really exciting. Again, if you go to our article, you can check out a little video, which really showcases the potential here. Or you can actually go to lastweekin.ai, where in our text summary of the news, we also embed it there.

So for a bit more context, this article also covers how this thing, NeRF, Neural Radiance Fields, was first introduced in 2020 and has since been really picked up on and developed a lot within AI. It was probably one of the biggest or most exciting developments lately in AI.

And one of the limitations of this NeRF thing is actually generating these 3D scenes fast, because you need to optimize basically per scene. I won't get into the technical stuff, but this is really addressing one of the major limitations pretty impressively.

And yeah, I think a lot of people predict that NeRF-like techniques will have a huge impact on a lot of things. And seeing this progress is pretty mind-blowing. Early on, if you look back at 2020, there were initial results that were pretty cool, but there were various kinds of limitations. And now in just two years, there has been a huge amount of progress, and it really showcases the ability of the AI research community to push things forward incredibly fast.

It is truly impressive. Whenever I guess the AI community gets really excited about a problem, you can see this pretty amazing progress. And it is also worth noting how exciting it is that there is this culture in AI where, even in industry, industry labs many times operate similarly to academia.

They publish these papers publicly and they open source their code. And that's in large part why the AI community can move so fast: people share code, they share trained models, they keep papers free, not locked behind paywalls, all of those sort of things. So great to see NVIDIA also doing that in this case.

It's a great trend. Yeah, I think we've seen, I guess, a lot of instances in the past where people have looked at certain studies that were released in, you know, journals like Nature and realized, hey, this is actually just like marketing for Google or something like that, because they didn't really release anything that could help people reproduce their experiments.

So I do think, you know, there are ways in which the AI field is still not perfect in terms of allowing for this reproducibility. But I do think that the culture has definitely shifted quite a bit. And this is something that people seem to care a lot more about now.

Yeah, agreed. So again, you can check out the associated videos with the stories at lastweekin.ai, where we have a newsletter, which is also where this podcast was derived from. So a little bit of history for you.

And that's it. Thank you so much for listening to this week's episode of SkyNet Today's Last Week in AI podcast. Once again, you can find articles similar to what we discussed in our text newsletter at lastweekin.ai. As always, we would appreciate positive reviews. As you've seen, we have been really enjoying reading the sort of reviews that we talked about at the beginning of the episode.

It's just great to hear your feedback and really makes this effort worthwhile. So we do appreciate it and we do, you know, get a lot of excitement from it. So if you enjoy the podcast, you know, just drop a quick review and we'd appreciate it.

And thanks, Daniel, for filling in. It was a lot of fun having you on. Sharon, I think, will be back soon, but it's great that we could do this episode and you could fill in.

Yeah, thanks for having me co-host today, Andrey. It was really great to do this.

All righty. And that's it. Be sure to tune in to next week's Last Week in AI.