AI for climate modeling, Deepfakes are here, Boston Dynamics in a fight

Last Week in AI

Hello and welcome to Skynet Today's Last Week in AI podcast, where you can hear AI researchers chat about what's going on with AI. As usual, in this episode we will provide summaries and discussion of some of last week's most interesting AI news. I'm Dr. Sharon Zhou.

And I am Andrey Kurenkov, and this week we'll discuss AI for Alzheimer's prediction and for climate modeling, some research into detecting phishing and into benchmarking everyday life activities, stuff about deepfakes and bias bounties, and a few fun stories about robots. Let's dive in.

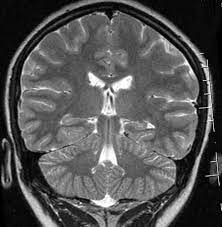

So let's dive straight in with our first story on applications, which is titled Algorithm developed by Lithuanian researchers can predict possible Alzheimer's with nearly 100% accuracy. This is from Kaunas University of Technology in Lithuania. And as the title implies, in this paper, Analysis of Features of Alzheimer's Disease: Detection of blah, blah, blah, these researchers share that they apparently could predict a possible onset of Alzheimer's disease with an accuracy of over 99%, which seems like a big deal if true, but also seems a little surprising given they only worked with 138 subjects, or rather the data they got was from those 138 subjects. So yeah, what was your impression, Sharon?

Having worked in this area-ish, medical imaging, I'm highly suspicious that this result can actually generalize. I very much suspect that this is basically overfitting to those 138 subjects, even if that means tens of thousands of images from those hundred or so subjects. It's still probably overfitting to that, given what we know about these models. So I'd also be curious, you know, if we were to dive into the paper a bit more: was this a prospective study? How is the data split? Obviously, it sounds like all these patients are from the same institution. So it's really hard to say.

That said, I'm really glad people are continuing to push on this and think about this as a problem, because Alzheimer's as a disease is just such an important part of later-life health. There are approximately 24 million people affected worldwide, and this is expected to double every 20 years. So that's huge.

Yeah, yeah. That's also something I like about this article. It doesn't just talk about the accomplishment. It also contextualizes it with a discussion of how big a deal this disease is and how, as populations age, as is the case in a lot of the world now, it'll be even more important.

And I agree that it seems likely that there is some flaw here. I mean, they had 50,000 images for training and 25,000 for testing, so they do at least split that. But, you know, they categorize into six different categories, and particularly because it was only 138 people, it does seem like there's a good chance it won't work as well when it's expanded to more people. But they're looking into that now, so hopefully they follow up on it, expand on this, and see how it goes.
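
One way to probe the overfitting concern raised in this discussion is to split the data by subject rather than by image, so that no person's scans appear in both training and testing. The following is only a minimal Python sketch under stated assumptions: the `(subject_id, image_id)` records, the `split_by_subject` helper, and the one-third test fraction are all hypothetical, and the article does not say how the authors actually split their data.

```python
import random
from collections import defaultdict


def split_by_subject(records, test_fraction=1 / 3, seed=0):
    """Split (subject_id, image_id) records so each subject lands in exactly one set.

    The record format and the default test fraction are illustrative
    assumptions, not details taken from the paper.
    """
    # Group every image under the subject it came from.
    by_subject = defaultdict(list)
    for subject_id, image_id in records:
        by_subject[subject_id].append((subject_id, image_id))

    # Shuffle subjects (not images) with a fixed seed for repeatability.
    subjects = sorted(by_subject)
    random.Random(seed).shuffle(subjects)

    # Reserve a fraction of the subjects, with all their images, for testing.
    n_test = max(1, round(len(subjects) * test_fraction))
    test_subjects = set(subjects[:n_test])

    train = [r for s in subjects if s not in test_subjects for r in by_subject[s]]
    test = [r for s in subjects if s in test_subjects for r in by_subject[s]]
    return train, test


# Toy data: 138 hypothetical subjects with 5 images each.
records = [(f"subj{s:03d}", f"img{s:03d}_{i}") for s in range(138) for i in range(5)]
train, test = split_by_subject(records)
```

If accuracy drops sharply under a subject-level split compared with an image-level split, that would be a hint the model is memorizing individuals rather than learning disease features.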

And onto our next article, titled Columbia to launch $25 million AI-based climate modeling center. This is Columbia University, and it is the National Science Foundation, the NSF, that's chosen Columbia University to lead the creation of this climate modeling center. It's called Learning the Earth with Artificial Intelligence and Physics, or LEAP.

So this is a really important collaboration with both NCAR, the National Center for Atmospheric Research, and GISS, NASA's Goddard Institute for Space Studies. And this will very much be pushing the next generation of data-driven, physics-based climate models. So bringing in the physics, but also the deep learning side, the neural networks and AI in general that have been making these big leaps, and putting them all together to try to help with climate science. So this is a very important collaboration and just the seed of what will be something big. And I think this is also very big because the next generation of students going into Columbia University and other universities right now care a lot about climate change. And so I think this will be very relevant and be a very popular center.

Yeah, yeah, I completely agree. As you said, this article notes that the center will train a new wave of students who will be fluent both in climate science and with big data sets and modern machine learning, which I presume is somewhat rare. At least, you know, a lot of people who are in AI definitely aren't as aware of the physics of climate science. And it looks like, also from the article, the center will do some other things, like create infrastructure for it. So it says that in collaboration with Google Cloud and Microsoft, they will create a platform to allow researchers to share and analyze data. So that's also seemingly kind of a big deal.

And so, yeah, I think it's nice to see this climate modeling center, given that's really a central task. And it's nice to see the NSF enabling this sort of initiative through this big investment.

And on to our research articles. The first is titled Machine Learning Technique Detects Phishing Sites Based on Markup Visualization. And this is about a paper that's come out using machine learning models trained on the website code, the raw HTML, to improve detection of phishing sites. So one thing that's really cool is they use something called binary visualization. And what that means is they look at all the markup and they basically encode different things as different colors. So, for example, printable ASCII characters are assigned a blue color, and nulls and spaces are colored black and white. And so they create this visualization of what the HTML markup looks like, and they feed those visualizations into a neural network or machine learning model to be able to see the difference between a phishing attack and the actual website. And this is, I think, a really interesting approach to representing and pre-processing that website data. That's where a lot of the innovation is coming from.
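
To make the binary visualization idea a bit more concrete, here is a rough Python sketch that turns markup into a grid of colored pixels. It is not the paper's actual pipeline: the helper names, the row-major layout, the gray fallback color, and the exact assignment of null to black and space to white are all assumptions layered on top of the coloring described in the discussion (printable ASCII in blue, nulls and spaces in black and white).

```python
def byte_to_color(b):
    """Map one byte to an RGB tuple (color scheme partly assumed)."""
    if b == 0x00:
        return (0, 0, 0)        # null -> black
    if b == 0x20:
        return (255, 255, 255)  # space -> white (assumed assignment)
    if 0x21 <= b <= 0x7E:
        return (0, 0, 255)      # printable ASCII -> blue
    return (128, 128, 128)      # anything else -> gray (placeholder assumption)


def html_to_image(html, width=16):
    """Turn markup into rows of RGB pixels, padding the last row with nulls."""
    data = html.encode("utf-8")
    padding = (-len(data)) % width  # bytes needed to fill the final row
    data += b"\x00" * padding
    pixels = [byte_to_color(b) for b in data]
    # Row-major layout is a simplification chosen for this sketch.
    return [pixels[i:i + width] for i in range(0, len(pixels), width)]


image = html_to_image("<a href='x'>log in</a>", width=8)
```

A grid like `image` could then be resized and passed to an image classifier, which is the general shape of the approach the hosts describe.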

Yeah, I also found it very neat that they used this visualization technique of just taking the HTML text and making an image out of it, this kind of rectangular image, because, of course, convolutional neural nets can process images pretty easily. And they have examples in this article of what a legitimate web page looks like versus a phishing web page. And at least for this example, the difference is pretty stark. And I suppose for some kinds of fake websites, you would expect the code to maybe have that hint, even if the page doesn't look that different from the legitimate website. So this is a neat concept. And it's kind of interesting why they did not go with some sort of text processing approach and instead converted it to an image and went that way.

But yeah, cool to see that this approach works and that, you know, this is another application where I wasn't aware of machine learning being usable, you know, detecting phishing sites. And now I know it appears to work pretty well.

I definitely think a text-based solution would work. And just directly looking at raw HTML, I think would work for a text-based model. So I think this is just one way of representing that data. And yeah, I can imagine that a text-based solution is forthcoming or perhaps is already out there as well.

And I think just one other note about this that's interesting. There is this, you know, cat-and-mouse game of phishing attacks and then ways to detect them. Something that I find really interesting about this approach, and just looking at the raw HTML, is that I don't think a lot of people doing phishing would want to put in the effort to fool this algorithm. Like, this actually, I think, could eliminate a lot of different phishing attacks, unless there's some phishing creation platform or site that can help you format it so that it looks exactly like a non-phishing website. But I think this is a great example of something that, you know, in practice could actually be very useful, either the text-based solution or the visual representation. Just using the raw HTML, I think, is a good way to go at it.

Yeah, I also think that. And just browsing the paper a bit, as far as I can tell, there's not a huge amount of prior work they're citing on using machine learning for phishing detection. Of course, this has been done to some extent for emails, where phishing is another big problem. So there, presumably, they use text-based approaches. But as you said, it's a cat-and-mouse game. Presumably the hackers will improve their HTML to look more legitimate. But at least for now, you know, we are a bit ahead. So that's good.

And on to our next research story, we have Stanford's Behavior Benchmarks 100 Activities from Everyday Life for Embodied AI. So this is about a paper titled Behavior: Benchmark for Everyday Household Activities in Virtual, Interactive, and Ecological Environments.

So as the title suggests, the idea is to create a simulated virtual benchmark where AI agents could interact with the environment in a kind of household setup to perform everyday activities. And the ecological part means that, you know, it looks kind of like a normal apartment. It's not some really weird simulated world; it's based on real apartments and how they are laid out and what they have in them. So yeah, personally, I think this is pretty cool because so far in robotics and in reinforcement learning, we have different benchmarks, but they are really somewhat removed from what people do. There's block stacking, there's using a hammer, but nothing that approaches what we could say is a long-term goal for embodied AI agents. And it seems to make sense to say that these everyday household activities are a good measure of how useful AI agents are getting to be. And a hundred of them is pretty impressive. So, yeah. What did you think of it, Sharon?

I think this is a great set of benchmarks to come out, especially since I think we've already been doing this empirically in a lot of pieces of work. I mean, just the act of watching the output of our robots, you know, just with my own eyes: oh, yes, this looks about right. But now codifying it and being able to quantify it in a way is really, really useful. And so I thought this was a great way to establish a benchmark for essentially what's normal behavior and whether a robot or some other agent could perform normal behavior. And so, you know, just to give a few examples of what that means, they had different things like bringing in wood, collecting misplaced items, moving boxes to storage, organizing a file cabinet, throwing away leftovers, putting dishes away, just things that we as humans do all the time.

And they're such normal, easy everyday behaviors, but they are actually quite difficult for robots right now. So just being able to say, hey, we're close to this or we're not close, for each of these things, is very useful, and so is being able to quantify what exactly that means. Like, what are all the actions I take for putting dishes away? What do I look like?

Yeah, yeah, exactly. I think it's a nice formalization of this very broad question of how do we benchmark AI agents' ability to do the sort of stuff we do every day.

And there are a hundred activities, so it's kind of interesting to browse, as you said, different examples. A lot of them involve cleaning, cleaning your closet, cleaning your garage, cleaning the oven. And these were actually chosen based on results of the American Time Use Survey.

So they actually did look into what people spend their time doing and then chose a subset of household chores from everyday life that is possible to simulate. So they simulate this with a physics simulator, and they have this virtual reality platform to collect demonstrations. And what's interesting is that this paper is purely about the benchmark. So there are some results as far as trying to replicate what people are capable of in virtual reality, but those are quite limited. So it's really just setting up a set of tasks that is really, really difficult by present-day standards for reinforcement learning and robotics and then hoping that in the future we can tackle this challenge, which is not usually what we see, and which hopefully will lead to a lot of work trying to tackle these challenges.

Right. And on to our ethics and society articles. The first is Deepfakes in cyber attacks aren't coming. They're already here.

And so this is about, you know, in March, the FBI released a report declaring that malicious actors will use synthetic content, so deepfakes, for cyber and foreign influence operations in the next year or year and a half. And this, of course, includes deepfakes, both video and audio, created by AI. And this article is by Rick McElroy at VMware, whose role is in crisis and incident response. He works closely with these teams, and he spoke with several CISOs of prominent companies about the rise of deepfake technology that they've seen. And they're concerned right now. They're already seeing, you know, ransomware as a service, RaaS. And I think we've covered that a bit in our podcast before. And it's already here. It's not some kind of forecasted thing. It's already impacting different incident response teams.

Yeah, so this is kind of a take from the perspective of this Rick McElroy, who's the author. And he shares some interesting details from his conversations with these people from prominent global companies. For instance, he shares an example about Recorded Future, which is an incident response firm, which said that threat actors are, you know, on the dark web and are offering services and tutorials on how to use visual and audio deepfakes. So there are now these secondary markets where hackers don't have to be experts or don't have to already know how to use these deepfakes, but can sort of be guided through it. And yeah, it seems like there is kind of a tool set being built up for malicious actors.

And in this article, he also says that he spoke with people whose security teams have observed deepfakes being used in phishing attempts or to compromise business email. I didn't realize deepfakes were already being used in cyber attacks. My impression has been, you know, there's a lot of worry about deepfakes, but we haven't seen any examples of them really causing harm. So personally, I found this quite interesting.

Did this align with your perception of the state of deepfakes, Sharon?

I thought there were kind of unsophisticated attacks happening. And I think people have been more and more, I think yourself included, Andrey, using deepfakes as profile pictures or whatever, both benignly, obviously, and maliciously in different ways. So I know that there's this undercurrent. I didn't know how rampant it is and how much there have to be defense systems right now.

Yeah. So to your note, actually, this is kind of a fun story. Earlier, I think like a month ago or so, I got bored of my profile picture on Twitter and Facebook, and I thought about what to change it to. And I actually uploaded my picture to Artbreeder, and then this generative model created a version of it, and then I messed around with it. And then I uploaded it as my profile picture on Facebook. And I suppose the good news is people immediately saw that something was off.

And they were actually quite creeped out and called me out on it. So it was kind of funny. So yeah, I guess the good news is so far they aren't really quite photorealistic. And voice especially, I think, is something that could conceivably be used in really bad ways. But they're not quite there yet.

But it seems like we are moving in that direction. And yeah, interesting to read this article that shares those details.

I think it really depends, since I had actually used one of those a couple of years ago. And at the time, people didn't think about deepfakes as much, since I guess I was one of the earlier users. And it was for a Slack profile picture, so it was really small. And because it was small, people couldn't tell. And when they clicked in, they were like, Sharon, that doesn't look like you. Why'd you upload someone else's photo? Like, okay, I can see how this is awkward.

Yeah. Yeah. I do think, as you said, probably going forward, you know, hackers will have to think of how to use these best. And, you know, we already know that social engineering is one of the best ways to do hacking, so to speak, right? Just dealing with humans and fooling humans. So I could imagine that deepfakes could definitely help when people aren't expecting them, at least not yet.

And onto our next ethics and society story, we have Sharing learnings from the first algorithmic bias bounty challenge, from Twitter.

So we discussed this a couple of times. We covered that in August of this year, there was the first algorithmic bias bounty challenge, where Twitter invited the ethical AI hacker community to basically inspect its cropping algorithm, which caught some heat, to try and detect issues with it. So bias and other potential harms.

And we've also covered results from that, where seemingly it was kind of successful. The winning team did identify some pretty novel things. And in this post, basically the team at Twitter goes into some more details on what they took away from the experience and shares a bit more about it, which I found pretty interesting. It's interesting to see some of their playbook and some of the details that they had to deal with while organizing this. And as they say here in this post, it does seem like they want others to take up this practice and then build on it. So nice of them to write an article where they share their learnings.

I think it was a great article. And I also really commend all the winners, as well as the most innovative prize, which I thought was really interesting. It was looking at emojis and the skin color of emojis and how the algorithm preferred lighter-skinned emojis, in addition to just images of people. And there are some pretty strong analyses in the submissions and in the winners. So, yeah, I think it was great to speak about the learnings and showcase the winners once more.

Yeah, I also found that emoji prize quite innovative. And I also found it interesting that the third-place submission analyzed linguistic bias for English over Arabic script in memes, which is another thing I wouldn't have thought was part of what people are looking at. And that's kind of the main takeaway that they talk about as far as specifics.

They really highlight the difficulty they had with figuring out a rubric to judge the participants' submissions. So, making a rubric that is broad enough to allow teams to look at a variety of potential harms, even things that the team didn't expect.

And they also encourage qualitative analysis as opposed to just quantitative, so as not to restrict the range of things you could explore. And I believe they actually shared this rubric. So again, it seems like they are really making it easier for others to do this sort of algorithmic bias bounty in the future, which I'm quite excited about. I do think this is pretty promising.

And on to our fun articles. The first is Could robots from Boston Dynamics beat me in a fight? And this is kind of a reflective article about the Boston Dynamics videos that had come out. You know, the dancing robots, the parkour robots, and also the companion video that goes with the original video, showing the human side of the people who built these robots, and that these robots are not perfect. And I just thought it was an interesting reflection. I too, as I was watching the videos, definitely had concerns. You know, there was this undercurrent of, they're going to take over the world. But I think the article is also trying to bring people to that level of consciousness and reflection: it's not just a fun video. This is also serious, about where robots are going.

Yeah. I think it's kind of interesting. It kind of, you know, shares what I guess a lot of people feel, in a big medium. So this is, I think, an opinion piece in the New York Times.

And it goes into that emotional response for sure. And it also talks about an earlier video from Boston Dynamics showing off their dance moves, right? Like, Do You Love Me? And this article posits that these sorts of videos make us used to robots and distract us from potential negative outcomes. Right.

We kind of, I don't know, humanize them, I suppose, and maybe feel more okay with them, even though they might be dangerous. But yeah, I mean, it's a short read. It's really just this kind of reflection. I would have liked to see more discussion of the actual details of how these robots work and how they are limited in various ways. They don't really have general AI and so on.

And I think it would have been nice if this article worked a bit more to dispel some of the fear that may not be really justified. But yeah, I do think it was an interesting sort of reflection on this author's feelings about it. I don't know. What do you feel when you see these Boston Dynamics parkour videos?

I mean, like I said, it's fun stuff, but also just thinking about, you know, reflecting on how powerful they are.

Yeah, they are really big and bulky. So you could really imagine it being hard to fight them. Although this article also notes that any decent runner could easily, you know, get away, push them from behind, and so on. So yeah.

I think it's funny how, you know, objectively, there's not much to worry about from these robots, but the fact that they're doing more than you might expect from robots is already nerve-wracking in a sense. But onto our next and last article, we have iRobot's newest Roomba uses AI to avoid dog poop. So apparently this is a big problem I wasn't aware of, with people's Roombas, which are AI-enabled vacuum cleaners, little circular things.

Apparently people have an issue where their Roombas catch some dirt, and in some instances some dog poop, and then spread it around, right? And that's a whole big mess. And it's kind of funny. This article actually is all about how, for the latest model, the Roomba J7 Plus, you know, they really worked hard on this problem specifically. You know, this new Roomba has a camera and a computer vision model, and apparently they collected a data set with these sorts of objects and then worked very hard to guarantee that this won't happen with this $850 vacuum cleaner. So, yeah, I guess AI has really come a long way.

It's such a gross application, because you know exactly what happens if it catches the dog poop.

Yeah. Yeah. It's not a fun thing to imagine. So, you know, maybe they'll get people to pay more than $800 for it. Honestly, I'm a little surprised it took this long for them to be able to do it, but they seem very, very sure in this article that this feature will work 100% of the time.

Well, even if it doesn't work 100% of the time, I feel like it'd be helpful, right? Because I think if there's actually poop on the ground, you don't know it's there. So...

Yeah, even if it only sometimes works. That's true. And to be fair, they also say that it uses the camera to identify other obstacles like socks, shoes and headphones. So in general, it does represent this pretty mature AI and robotics application. These vacuum cleaners have been around for like 20 years, getting better and better over time. So now they map your house, they intelligently move around, and now they can avoid small obstacles like socks and shoes and headphones. So one day we'll get the perfect robot vacuum cleaner and never have to vacuum ourselves, hopefully.

Absolutely. That would be fantastic.

Yeah, personally, I've had a cheap one myself for quite a while when I lived in an apartment and I liked it a lot. Just like nothing fancy, $200 robot, leave it on while I go out. It was very handy.

I think it's very useful, since we recently upgraded a little bit and got a slightly better robot, and it needed significantly less organization beforehand. Because before, we had a really cheap one, and we had to organize and almost clean the whole house for the robot to start cleaning, to prep the whole house for the robot.

That's true, actually. That reminds me, when I let it loose in the kitchen, I used to put chairs down sideways. Yeah, that was funny. And yeah, now I think you can get fancy, like tell it, you know, which parts of the house to move around in and stuff like that. And this one apparently talks to you through your phone and asks, you know, what is an obstacle. So yeah, cool to see this advance and, you know, I guess to see computer vision really getting deployed to edge devices and, I don't know, kind of becoming ubiquitous, I suppose.

And that's a wrap for this episode. If you've enjoyed our discussion of these stories, be sure to share and review our podcast. We'd appreciate it a ton. And now be sure to stick around for a few more minutes to get a quick summary of some other cool news stories from our very own newscaster, Daniel Bashir.

Thanks, Andrey and Sharon. Now we'll go through a few other interesting stories we haven't touched on. Our first is on the research side. If deep learning is to live up to its promise, it will need to become far more capable than it is now. According to VentureBeat, DeepMind is working on bridging the worlds of deep learning and classical algorithms.

Researchers at DeepMind are interested in problems like getting a neural network to divulge what it doesn't know or to learn more quickly. Their collaborations have brought forth a new line of research called Neural Algorithmic Reasoning, or NAR. The thesis of NAR is that if deep learning methods could better mimic algorithms, then they would possess the generalization capabilities that algorithms have.

It turns out that this is surprisingly hard, even for very simple algorithms. Asking a deep learning algorithm to simply copy its input by training it on the numbers one through 10 will not result in it knowing what to do with numbers close to a thousand.

There are a number of differences between the two paradigms, and the way to marry them is not entirely clear, but it seems like a promising and important research direction. Our second story is about business.

Enterprise AI startup Databricks secured $1.6 billion in its Series H funding round. According to Ben Dickson of TechTalks, this round of investment follows on the heels of a recent $1 billion round, giving Databricks a valuation of $38 billion.

The company offers products and services for unifying, processing, and analyzing data stored in different ways and locations. Enterprise AI companies, which include Databricks, as well as Snowflake and C3.ai, are addressing some of the biggest challenges in the way of companies trying to launch machine learning products to cut down operations costs, improve products, and increase revenue.

Thanks to cloud services like AWS and Azure, these companies have been able to collect massive amounts of data. But putting that data to use is another problem entirely. Data might be spread around different systems and under different standards, using a variety of schemas. This makes it difficult to consolidate data and prepare it for consumption by machine learning models.

Databricks' main cloud service, Lakehouse, uses its founders' previous work to bring different sources of data together and enable data scientists and analysts to run workloads from a single platform. Their services have been used by AstraZeneca and HSBC, among others.

The market is competitive, and it remains to be seen whether the valuations of these companies are justified. Big tech players are also entering the market. Dickson, for his part, would be unsurprised if Databricks' present partnership with Microsoft turns into an acquisition. Finally, our society story concerns a personal project that used OpenAI's GPT-3.

According to Gadgets360, AI researcher and game designer Jason Rohrer used the language model to create a chatbot named Samantha during the pandemic. He had programmed Samantha to be friendly, warm, and curious. He also allowed others to customize the chatbot, and one man turned it into a proxy of his dead fiancée. When OpenAI learned about the project, it asked him to dilute it to prevent possible misuse or shut it down.

He was also asked to add an automated monitoring tool. When he refused, OpenAI finally told Rohrer he was no longer allowed to use its technology. He responded by asking others to stop using OpenAI's technology, accusing the company of callousness and of destroying people's life's work. It's fair to be concerned about possible misuses of such capable technologies, but it is worth considering who should be policing their use.

Thanks so much for listening to this week's episode of Skynet Today's Let's Talk AI podcast. You can find the articles we discussed today and subscribe to our weekly newsletter with even more content at skynettoday.com. Don't forget to subscribe to us wherever you get your podcasts and leave us a review if you like the show. Be sure to tune in when we return next week.