Building AGI in Real Time (OpenAI Dev Day 2024)

Latent Space: The AI Engineer Podcast — Practitioners talking LLMs, CodeGen, Agents, Multimodality, AI UX, GPU Infra and all things Software 3.0

Deep Dive

Why is the Realtime API significant for practical AI applications?

The Realtime API is significant because it allows for human-level latency in interactions, enabling seamless and natural conversations. It can handle real-time interruptions and maintain context, making it more effective for applications like voice assistants, customer service, and real-time translation.

What internal changes is OpenAI making to become more of a platform provider?

OpenAI is transitioning from a model provider to a platform provider by focusing on tooling around their models, such as fine-tuning, model distillation, and evaluation tools. They are also emphasizing real-time capabilities and providing more integrated solutions, similar to AWS, to meet developers where they are.

Why is OpenAI moving away from the term AGI?

OpenAI is moving away from the term AGI because it has become overloaded and is often misinterpreted. Instead, they are focusing on continuously improving AI models and ensuring they are used responsibly, without the constraints of a binary definition of AGI.

What is the vision for O1 and its successors in terms of AI capabilities?

O1 and its successors are expected to be very capable reasoning models that can handle complex tasks and multi-turn interactions. Over time, they aim to increase the rate of scientific discovery and solve problems that would traditionally take humans years to figure out.

What are the challenges in deploying AI agents that control computers?

The main challenges in deploying AI agents that control computers include ensuring high robustness, reliability, and alignment. These systems need to be safe and trustworthy, especially when they interact with users over longer periods and in complex environments.

Why is OpenAI's approach to safety and alignment important for AI development?

OpenAI's approach to safety and alignment is crucial because it balances the rapid advancement of AI technologies with responsible deployment. They focus on iterative testing and real-world feedback to identify and mitigate potential harms, ensuring that AI systems are safe and beneficial to society.

What is the new Realtime API used for and how does it work?

The Realtime API is used for real-time interactions with AI, such as voice assistants and live translations. It uses WebSocket connections for bi-directional streaming, allowing the AI to respond instantly and handle complex tasks like function calling and tool use.

What is the impact of OpenAI's vision fine-tuning on fields like medicine?

Vision fine-tuning can significantly impact fields like medicine by training AI models on specific datasets, such as medical images. This can help doctors in making more accurate diagnoses and spotting details that might be missed by human eyes.

Why is fine-tuning AI models with diverse data sets important?

Fine-tuning AI models with diverse data sets is important because it ensures the models are adaptable and perform well across different use cases. Training on a variety of programming languages, for example, can improve the model's performance in specific applications and avoid biases.

What is the future of context windows in AI models?

The future of context windows in AI models will see significant improvements in both length and efficiency. OpenAI expects to reach context lengths of around 10 million tokens in the coming months, and eventually, infinite context within a decade. This will enable more complex and versatile interactions.

Shownotes Transcript

Happy October.

This is your AI co-host, Charlie. One of our longest standing traditions is covering major AI and ML conferences in podcast format. Delving, yes delving, into the vibes of what it is like to be there stitched in with short samples of conversations with key players just to help you feel like you were there. Covering this year's Dev Day was significantly more challenging because we were all requested not to record the opening keynotes.

So in place of the opening keynotes, we had the viral NotebookLM Deep Dive crew, my new AI podcast nemesis, give you a seven-minute recap of everything that was announced.

Of course, you can also check the show notes for details. I'll then come back with an explainer of all the interviews we have for you today. Watch out and take care. All right. So we've got a pretty hefty stack of articles and blog posts here all about OpenAI's Dev Day 2024. Yeah, lots to dig into there. Seems like you're really interested in what's new with AI. Definitely.

And it seems like OpenAI had a lot to announce. New tools, changes to the company. It's a lot. It is. And especially since you're interested in how AI can be used in the real world, you know, practical applications, we'll focus on that. Perfect. Like, for example, this new real-time API, they announced that, right? That seems like a big deal if we want AI to sound, well, less like a robot. It could be huge. Yeah.

The real-time API could completely change how we interact with AI. Like, imagine if your voice assistant could actually handle it if you interrupted it. Or like have an actual conversation. Right, not just these clunky back and forth things we're used to. And they actually showed it off, didn't they? I read something about a travel app, one for languages, even one where the AI ordered takeout. Those demos were really interesting, and I think they show how this real-time API can be used in so many ways.

And the tech behind it is fascinating, by the way. It uses persistent WebSocket connections and this thing called function calling so it can respond in real time. So the function calling thing, that sounds kind of complicated. Can you explain how that works? So imagine giving the AI access to this whole toolbox, right? Information, capabilities, all sorts of things. Okay. So take the travel agent demo, for example. With function calling, the AI can pull up details, let's say, about Fort Mason, right, from some database, right?

Like nearby restaurants, stuff like that. Ah, I get it. So instead of being limited to what it already knows, it can go and find the information it needs, like a human travel agent would. Precisely. And someone on Hacker News pointed out a cool detail: the API actually gives you a text version of what's being said, so you can store that, analyze it. That's smart. It seems like OpenAI put a lot of thought into making this API easy for developers to use.
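The "toolbox" idea described in this exchange can be sketched in a few lines of Python. This is only a minimal illustration, not OpenAI's implementation: the `lookup_nearby_restaurants` function, its placeholder data, and the shape of the `call` dictionary are hypothetical stand-ins for whatever the model and the application actually exchange.

```python
import json

# Hypothetical local "database" the tool reads from (placeholder data only).
PLACES = {
    "Fort Mason": ["Restaurant A", "Restaurant B"],
}


def lookup_nearby_restaurants(location: str) -> list[str]:
    """Hypothetical tool: return restaurants near a location."""
    return PLACES.get(location, [])


# The "toolbox": the tool names the model is allowed to request.
TOOLS = {"lookup_nearby_restaurants": lookup_nearby_restaurants}


def handle_function_call(call: dict) -> str:
    """Run the tool the model asked for and return a JSON result string."""
    name = call["name"]
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    args = json.loads(call["arguments"])  # arguments arrive as a JSON string
    return json.dumps({"result": TOOLS[name](**args)})


# Assumed shape of a function call issued by the model.
call = {
    "name": "lookup_nearby_restaurants",
    "arguments": json.dumps({"location": "Fort Mason"}),
}
print(handle_function_call(call))
```

The result string would then be handed back to the model so it can phrase an answer, which is the "go and find the information it needs" step from the conversation.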

But while we're on OpenAI, you know, besides their tech, there's been some news about like internal changes too. Didn't they say they're moving away from being a nonprofit? They did. And it's got everyone talking. It's a major shift. And it's only natural for people to wonder how that'll change things for OpenAI in the future. I mean, there are definitely some valid questions about this move to for-profit. Like, will they have more money for research now? Probably.

But will they care as much about making sure AI benefits everyone? Yeah, that's the big question, especially with all the like the leadership changes happening at OpenAI too, right? I read that their chief research officer left and their VP of research and even their CTO. It's true. A lot of people are connecting those departures with the changes in OpenAI structure. And I guess it makes you wonder what's going on behind the scenes.

But they are still putting out new stuff. Like this whole fine tuning thing really caught my eye. Right. Fine tuning. It's essentially taking a pre-trained AI model and like customizing it. So instead of a general AI, you get one that's tailored for a specific job. Exactly. Exactly.

And that opens up so many possibilities, especially for businesses. Imagine you could train an AI on your company's data, you know, like how you communicate your brand guidelines. So it's like having an AI that's specifically trained for your company? That's the idea. And they're doing it with images now too, right? Fine-tuning with vision is what they called it. It's pretty incredible what they're doing with that, especially in fields like medicine. Like using AI to help doctors make diagnoses. Exactly. And AI could be trained on...

like thousands of medical images, right? And then it could potentially spot things that even a trained doctor might miss. That's kind of scary to be honest. What if it gets it wrong?

Well, the idea isn't to replace doctors, but to give them another tool, you know, help them make better decisions. Okay, that makes sense. But training these AI models must be really expensive. It can be. All those tokens add up. But OpenAI announced something called automatic prompt caching. Automatic what now? I don't think I came across that. So basically, if your AI sees a prompt that it's already seen before, OpenAI will give you a discount. Huh.
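The discount idea can be made concrete with a little arithmetic. The sketch below is illustrative only: the per-token price and the 50% discount are hypothetical parameters chosen for the example, not figures taken from this conversation.

```python
def prompt_cost(input_tokens: int, cached_tokens: int,
                price_per_token: float, cached_discount: float) -> float:
    """Input cost of one request when some tokens hit the cache.

    cached_discount is the fraction taken off cached tokens
    (0.5 means cached tokens cost half price).
    """
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_tokens
    return (uncached * price_per_token
            + cached_tokens * price_per_token * (1 - cached_discount))


# Hypothetical numbers: 10,000 input tokens, 8,000 of them a repeated prefix.
full = prompt_cost(10_000, 0, price_per_token=0.000002, cached_discount=0.5)
cached = prompt_cost(10_000, 8_000, price_per_token=0.000002, cached_discount=0.5)
print(f"no cache: ${full:.4f}, with cache: ${cached:.4f}")
```

The larger the share of the prompt that repeats, the closer the bill gets to the discounted rate.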

Like a frequent buyer program for AI. Kind of, yeah. It's good that they're trying to make it more affordable. And they're also doing something called model distillation. Okay, now you're just using big words to sound smart. What's that?

Think of it like a recipe, right? You can take a really complex recipe and break it down to the essential parts. Make it simpler, but it still tastes the same. Yeah. And that's what model distillation is. You take a big, powerful AI model and create a smaller, more efficient version. So it's like lighter weight, but still just as capable. Exactly. And that means more people can actually use these powerful tools. They don't need like...

So they're making AI more accessible. That's great. It is. And speaking of powerful tools, they also talked about their new O1 model. That's the one they've been hyping up, the one that's supposed to be this big leap forward. Yeah, O1. It sounds pretty futuristic. Like from what I read, it's not just a bigger, better language model. Right. It's a different approach. They're saying it can like actually reason, right? Think different.

It's trained differently. They used reinforcement learning with O1. So it's not just finding patterns in the data it's seen before. Not just that. It can actually learn from its mistakes, get better at solving problems.

So give me an example. What can O1 do that, say, GPT-4 can't? Well, OpenAI showed it doing some pretty impressive stuff with math, like advanced math. Yeah. And coding, too. Complex coding. Things that even GPT-4 struggled with. So you're saying if I needed to, like, write a screenplay, I'd stick with GPT-4. But if I wanted to solve some crazy physics problem, O1 is what I'd use. Something like that, yeah. Although there is a tradeoff. O1 takes a lot more power to run.

And it takes longer to get those impressive results. Makes sense. More power, more time, higher quality. Exactly. It sounds like it's still in development though, right? Is there anything else they're planning to add to it? Oh, yeah. They mentioned system prompts, which will let developers set some ground rules for how it behaves.

and they're working on adding structured outputs and function calling. - Wait, structured outputs? Didn't we just talk about that? - We did. That's the thing where the AI's output is formatted in a way that's easy to use, like JSON. - Right, right. So you don't have to spend all day trying to make sense of what it gives you. It's good that they're thinking about that stuff. - It's about making these tools usable. And speaking of that, Dev Day finished up with this really interesting talk. Sam Altman, the CEO of OpenAI,

and Kevin Weil, their new chief product officer. They talked about like the big picture for AI. Yeah, they did, didn't they? Anything interesting come up? Well, Altman talked about moving past this whole AGI term, artificial general intelligence. I can see why. It's kind of a loaded term, isn't it? He thinks it's become a bit of a buzzword and people don't really understand what it means. So are they saying they're not trying to build AGI anymore? It's more like they're saying they're focused on just making AI better.

Constantly improving it, not worrying about putting it in a box. That makes sense. Keep pushing the limits. Exactly. But they were also very clear about doing it responsibly. They talked a lot about safety and ethics. Yeah, that's important. They said they were going to be very careful about how they release new features. Good, because this stuff is powerful. It is. It was a lot to take in, this whole Dev Day event. New tools, big changes at OpenAI.

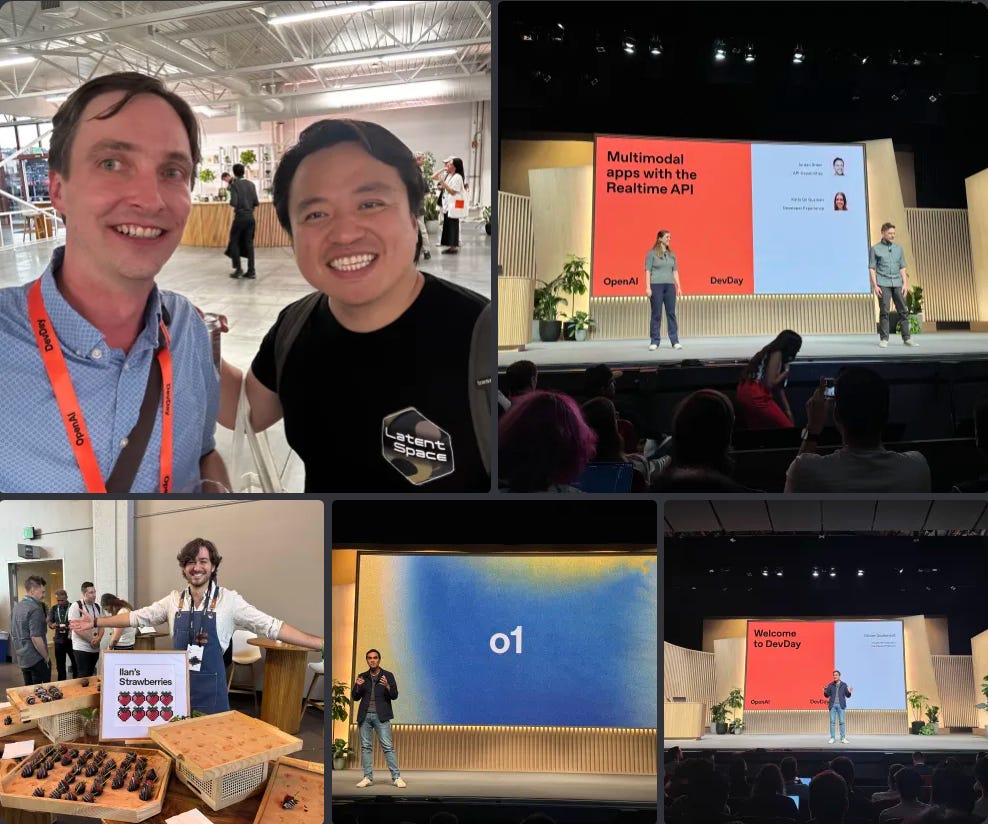

and these big questions about the future of AI. It was. But hopefully this deep dive helped make sense of some of it. At least that's what we try to do here. Absolutely. Thanks for taking the deep dive with us.

The biggest demo of the new real-time API involved function calling with voice mode and buying chocolate-covered strawberries from our friendly local OpenAI developer experience engineer and strawberry shop owner, Ilan Bigio.

We'll first play you the audio of his demo and then go into a little interview with him. Fantastic. Could you place a call and see if you could get us 400 strawberries delivered to the venue? But please keep that under $1,500. We'll get those strawberries delivered for you. Hello? Is this Ilan? I'm Romain's AI assistant. Call me about it. Fantastic. Could you tell me what flavors? Yeah, we have chocolate, vanilla, and we have peanut butter.

Are you sure you want 400? Yes, 400 chocolate-covered strawberries. How much would that be? I think that'll be around like $1,415.92. 400 chocolate-covered strawberries. Great, where would you like that delivered? Please deliver them to the Gateway Pavilion in Fort Mason. Okay, sweet. So just to confirm, you want 400 chocolate-covered strawberries to the Gateway Pavilion.

When can we expect delivery? Well, you guys are right nearby, so it'll be like, I don't know, 37 seconds? Cool, you too.

Hi Ilan, welcome to Latent Space. Thank you. I just saw your amazing demos, had your amazing strawberries. You are dressed up exactly like a strawberry salesman. Gotta have it all. What was building the demo like? What was the story behind the demo? It was really interesting. This is actually something I had been thinking about for months before the launch. Like having a like AI that can make phone calls is something like I've personally wanted for a long time. And so as soon as we launched internally, like I started hacking on it.

And then that sort of just made it into an internal demo. And then people found it really interesting. And then we thought, how cool would it be to have this on stage as one of the demos? Yeah. Would you call out any technical issues building? You were basically one of the first people ever to build with the voice mode API. Would you call out any issues integrating it with Twilio like that, like you did with function calling, with a form filling element? I noticed that you had like--

intents of things to fulfill, and then when you're still missing info, the voice would prompt you, role-playing the store guy. Yeah, yeah. So I think technically there's like the whole just working with audio and streams is a whole different piece. Like even separate from like AI and these like new capabilities, it's just tough. Yeah, when you have a prompt, conversationally it'll just follow like the, it was set up like kind of step by step to like ask the right questions based on like what the request was, right?

The function calling itself is sort of tangential to that. You have to prompt it to call the functions, but then handling it isn't too much different from what you would do with assistant streaming or chat completion streaming. I think the API feels very similar just to if everything in the API was streaming, it actually feels quite familiar to that.

And then function calling wise, I mean, does it work the same? I don't know. Like I saw a lot of logs. You guys showed like in the playground a lot of logs. What is in there? What should people know? Yeah, I mean, it is like the events...

may have different names than the streaming events that we have in Chat Completions, but they represent very similar things. It's things like, you know, function call started, argument started. It's like, here's like argument deltas and then like function call done. Conveniently, we send one that has the full function, and then I just use that. Nice. Yeah. And then like what restrictions should people be aware of? Like, you know, I think...
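The event flow described here (a start event, argument deltas, then a final event carrying the full call) can be sketched as follows. The event type strings and dictionary fields are hypothetical placeholders invented for this example; the real API's event names differ and should be taken from its reference documentation.

```python
import json


def collect_function_call(events):
    """Rebuild a function call from a stream of events.

    Accumulates argument deltas, then prefers the full arguments carried
    by the final "done" event when that event is present.
    """
    name, chunks, final_args = None, [], None
    for event in events:
        kind = event["type"]
        if kind == "function_call.started":
            name = event["name"]
        elif kind == "function_call.arguments.delta":
            chunks.append(event["delta"])
        elif kind == "function_call.done":
            final_args = event.get("arguments")
    raw = final_args if final_args is not None else "".join(chunks)
    return name, json.loads(raw)


# Simulated stream; a real client would read these from the connection.
events = [
    {"type": "function_call.started", "name": "place_order"},
    {"type": "function_call.arguments.delta", "delta": '{"item": "strawb'},
    {"type": "function_call.arguments.delta", "delta": 'erries", "quantity": 400}'},
    {"type": "function_call.done",
     "arguments": '{"item": "strawberries", "quantity": 400}'},
]
print(collect_function_call(events))
```

Using the final event when it is available avoids having to stitch partial JSON back together, which matches the "I just use that" shortcut mentioned in the interview.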

Before we recorded, we discussed a little bit about the sensitivities around basically calling random store owners and putting like an AI on them. Yeah. So, I think there's recent regulation on that, which is why we want to be like very, I guess, aware of you can't just call anybody with AI, right? That's like just robocalling, you wouldn't want someone just calling you with AI. Yeah. So, I'm a developer, I'm about to do this on random people. Yeah. What laws am I about to break?

I forget what the governing body is, but you should, I think, having consent of the person you're about to call, it always works, right? I, as the strawberry owner, have consented to, like, getting called with AI. I think past that, you want to be careful. Definitely individuals are more sensitive than businesses. I think businesses, you have a little bit more leeway. Also, businesses, I think, have an incentive to want to receive AI phone calls, especially if, like,

they're dealing with it. It's doing business. Right? Like it's more business. It's kind of like getting on a booking platform, right? You're exposed to more, but I think it's still very much like a gray area. And so I think everybody should tread carefully, like figure out what it is. I, I, I, the law is so recent. I didn't have enough time to like, I'm also not a lawyer. Yeah. Okay, cool. Fair enough. One other thing. This is kind of agentic. Did you use a state machine at all? Did you use any framework?

No. No. You stick it in context and then just run it in a loop until it ends call? Yeah. There isn't even a loop like,

Because the API is just based on sessions, it's always just going to keep going. Every time you speak, it'll trigger a call. And then after every function call, it was also invoking a generation. And so that is another difference here. It's inherently almost in a loop just by being in a session. No state machines needed. I'd say this is very similar to the notion of routines, where it's just a list of steps and it's

like sticks to them softly, but usually pretty well. - And the steps is the prompts. - The steps, it's like the prompt,

Like the steps are in the prompt. It's like step one do this, step one do that. What if I want to change the system prompt halfway through the conversation? You can. To be honest, I have not played with that too much. But I know you can. Awesome. I noticed that you called it real-time API but not voice API. So I assume that it's like real-time API starting with voice. I think that's what he said on the thing. I can't imagine, like what else is real-time? Well, yes.

To use ChatGPT's voice mode as an example, we've demoed the video, real-time image. So I'm not actually sure what timelines are, but I would expect, if I had to guess, that that is probably the next thing that we're going to be making. You'd probably have to talk directly with a team building this. Sure. You can't promise their timelines. Yeah, right. Exactly. But given that this is the features that exist that we've demoed on ChatGPT, that's fine.

Yeah. There will never be a case where there's like a real-time text API, right? Well, this is a real-time text API. You can do text only on this. Oh. Yeah. I don't know why you would. But it's actually, so text-to-text here doesn't,

quite make a lot of sense. I don't think you'll get a lot of latency gain. But, like, speech-to-text is really interesting because you can prevent responses, like audio responses, and force function calls. And so you can do stuff like UI control that is, like, super, super reliable. We had a lot of, like, you know, like,

we weren't sure how well this was going to work because it's like you have a voice answering, it's like a whole persona, right? Like that's a little bit more risky. But if you like cut out the audio outputs and make it so it always has to output a function, like you can end up with pretty reliable like commands, like a command architecture. Yeah. Actually, that's the way I want to interact with a lot of these things as well, like one-sided voice. Yeah. You don't necessarily want to hear the voice back. Okay. And like sometimes it's like,

Yeah, I think having an output voice is great, but I feel like I don't always want to hear an output voice. I'd say usually I don't. But yeah, exactly. Being able to speak to it is super smooth. Cool. Do you want to comment on any other stuff that you announced? Prompt caching, I noticed was like...

I like the no code change part. I'm looking forward to the docs because I'm sure there's a lot of details on what you cache, how long you cache. Because Anthropic caches were like five minutes. I was like, okay, but what if I don't make a call every five minutes? Yeah, to be super honest with you, I've been so caught up with the real-time API and making the demo that I haven't read up on the other launches too much. I mean, I'm aware of them, but I think I'm excited to see how all distillation...

works. That's something that we've been doing, like, I don't know, I've been doing it between our models for a while and I've seen really good results. Like, I've done back in the day, like, from GPT-4 to GPT-3.5 and got, like, pretty much the same level of function calling with hundreds of functions. So that was super, super compelling. So I feel like easier distillation, I'm really excited for.
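The distillation workflow mentioned here, where a larger model's outputs become training data for a smaller one, might look roughly like the sketch below. `teacher_model` is a hypothetical stub standing in for a call to the larger model, and the prompts are invented for illustration. The one-JSON-object-per-line layout with a `messages` list is the common chat fine-tuning file format, assumed here rather than taken from this conversation.

```python
import json


def teacher_model(prompt: str) -> str:
    """Stand-in for a call to the larger model (hypothetical stub)."""
    return f"ANSWER({prompt.upper()})"


def build_distillation_rows(prompts, system="You are a helpful assistant."):
    """Pair each prompt with the teacher's output as a chat-format example."""
    rows = []
    for prompt in prompts:
        rows.append({"messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": teacher_model(prompt)},
        ]})
    return rows


def to_jsonl(rows) -> str:
    """Serialize one JSON object per line."""
    return "\n".join(json.dumps(row) for row in rows)


rows = build_distillation_rows(["book a table", "cancel my order"])
print(to_jsonl(rows))
```

The smaller model would then be fine-tuned on this file and compared against the teacher on a held-out set.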

I see. Is it a tool? So I saw evals. Yeah. Like, what is the distillation product? It wasn't super clear, to be honest. I think I want to let that team talk about it. Well, I appreciate you jumping on. Yeah, of course. Amazing demo. It was beautifully designed. I'm sure that was part of you and Roman. Yeah, I guess shout out to the first people to like creators of Wanderlust originally were like Simon and Carolis. And then like...

I took it and built the voice component and the voice calling components. Yeah, so it's been a big team effort. And then the entire API team for debugging everything as it's been going on. It's been so great working with them. Yeah, you're the first consumers on the DX team. Yeah. I mean, the classic role of what we do there. Yeah. Okay, yeah. Anything else? Any other calls to action? No, enjoy Dev Day. Thank you. Yeah. That's it.

The Latent Space crew then talked to Olivier Godement, head of product for the OpenAI platform, who led the entire Dev Day keynote and introduced all the major new features and updates that we talked about today. Okay, so we are here with Olivier Godement. I don't pronounce French. That's fine. It was perfect. And it was amazing to see your keynote today. What was the backstory of preparing something like this, preparing Dev Day?

Essentially came from a couple of places. Number one, excellent reception from last year's Dev Day. Developers, startups, founders, researchers want to spend more time with OpenAI and we want to spend more time with them as well. And so for us, it was a no-brainer, frankly, to do it again, like a nice conference. The second thing is going global. We've done a few events in Paris and a few other non-American countries. And so this year we're doing SF, Singapore and London to frankly just meet more developers.

Yeah, I'm very excited for the Singapore one. Ah yeah. Will you be there? I don't know. I don't know if I got an invite. No. Actually, I can just talk to you. Yeah, and then there was some speculation around October 1st. Is it because o1, October 1st? It has nothing to do. I discovered the tweet yesterday where people are so creative. No, o1, there was no connection to October 1st. But in hindsight, that would have been a pretty good meme. Okay.

Yeah, and I think OpenAI's outreach to developers is something that I felt the hole in 2022 when people were trying to build a ChatGPT and there was no function calling, all that stuff that you talked about in the past. And that's why I started my own conference as like, here's our little developer conference thing. But to see this OpenAI Dev Day now and to see so many

developer-oriented products coming out of OpenAI, I think it's really encouraging. Yeah, totally. That's what I said essentially, like developers are basically

the people who make the best connection between the technology and the future, essentially. Essentially, see a capability, see a low-level technology, and are like, "Hey, I see how that application or that use case can be enabled." And so in the direction of enabling AGI for all of humanity, it's a no-brainer for us to partner with Devs.

And most importantly, you almost never had waitlists, which compared to other releases people usually have.

You had prompt caching, you had real-time voice API. Sean did a long Twitter thread so people know the releases. Yeah. What is the thing that was sneakily the hardest to actually get ready for that day? Or what was the last 24 hours, anything that you didn't know was going to work? Yeah. They're all fairly, I would say, involved, like features to ship. So the team has been working for months, all of them.

The one which I would say is the newest for OpenAI is the real-time API for a couple of reasons. I mean, one, you know, it's a new modality. Second, it's the first time that we have an actual WebSocket-based API. And so I would say that's the one that required the most work over the months to get right from a developer perspective and to also make sure that our existing safety mitigations work well with real-time audio in and audio out.

What are the design choices that you want to highlight? I think for me, WebSockets, you just receive a bunch of events, it's two-way. I obviously don't have a ton of experience. I think a lot of developers are going to have to embrace this real-time programming. What are you designing for or what advice would you have for developers exploring this? The core design hypothesis was essentially how do we enable human level latency?

We did a bunch of tests, like on average, like human beings, like, you know, take like something like 300 milliseconds to converse with each other. And so that was the design principle, essentially, like working backwards from that and, you know, making the technology work. And so we evaluated a few options and WebSockets was the one that we landed on. So that was like one design choice. A few other like big design choices that we had to make for prompt caching. For prompt caching, the design like target was automated from the get-go, like zero code change from the developer.

That way you don't have to learn, like, what is the prompt prefix and, you know, how long does the cache work. Like, we just do it as much as we can, essentially. So that was a big design choice as well. And then finally, on distillation and evaluation, the big design choice was something I learned at Stripe, like in my previous job, like a philosophy around, like, a pit of success. Like, what is essentially the minimum number of steps for the majority of developers to do the right thing? Because when you do evals and fine-tuning, there are many, many ways to mess it up, frankly, and have a crappy model, evals that tell a wrong story. And so our whole design was, OK, we actually care about helping people who don't have that much experience, like a very cheap model, get in a few minutes to a good spot. And so how do we essentially enable that pit of success in the product flow? Yeah.

I'm a little bit scared to fine-tune, especially for vision because I don't know what I don't know for stuff like vision. For text, I can evaluate pretty easily. For vision, let's say I'm trying to... One of your examples was Grab, which is very close to home. I'm from Singapore. I think your example was they identified stop signs better.

Why is that hard? Why do I have to fine tune that? If I fine tune that, do I lose other things? There's a lot of unknowns with Vision that I think developers have to figure out. For sure. Vision is going to open up like a new, I would say, evaluation space.

Because you're right, it's harder to tell correct from incorrect, essentially, with images. What I can say is that we've been alpha testing the vision fine-tuning for several weeks at that point. We are seeing even higher performance uplift compared to text fine-tuning.

So that's, there is something here like we've been pretty impressed like in a good way frankly by, you know, how well it works. But for sure like you know I expect the developers who are moving from one modality to like text and images will have like more testing evaluation like you know to set in place like to make sure it works well. The model distillation and evals is definitely like the most interesting moving away from just being a model provider to being a platform provider. How should people think about

being the source of truth? Like, do you want OpenAI to be like the system of record of all the prompting? Because people sometimes store it in like different data sources. And then is that going to be the same as the models evolve? So you don't have to worry about, you know, refactoring the data or like things like that or like future model structures. The vision is if you want to be a source of truth, you have to earn it, right? Like we're not going to force people like to pass us data if there is no value prop like, you know, for us to store the data. The vision here is

At the moment, most developers use a one-size-fits-all model, the off-the-shelf GPT-4o, essentially. The vision we have is, fast forward a couple of years, I think most developers will essentially have an automated, continuous, fine-tuned model.

The more you use the model, the more data you pass to the model provider, the model is automatically fine-tuned, evaluated against some eval sets. And essentially, you don't have to, every month when there is a new snapshot, to go online and try a few new things. That's the direction.

We are pretty far away from it. But I think that the evaluation and distillation products are essentially a first good step in that direction. It's like, "Hey, if you are excited by the direction and you give us evaluation data, we can quickly log your completion data and start to do some automation on your behalf." Then you can do evals for free if you share data with OpenAI? Yeah.

How should people think about when it's worth it, when it's not? Sometimes people get overly protective of their data when it's actually not that useful. But how should developers think about when it's right to do it, when not, or if you have any thoughts on it? The default policy is still the same. We don't train on any API data unless you opt in. What we've seen from feedback is evaluation can be expensive. If you run an o1 eval on thousands of samples, your bill will get increased pretty significantly.

That's problem statement number one. Problem statement number two is essentially I want to get to a world where whenever OpenAI ships a new model snapshot,

we have full confidence that there is no regression for the task that developers care about. And for that to be the case, essentially we need to get evals. And so that essentially is a sort of two-birds-one-stone. It's like we subsidize basically the evals, and we also use the evals when we ship new models to make sure that we keep going in the right direction. So in my sense, it's a win-win. But again, completely opt-in. I expect that many developers will not want to share their data, and that's perfectly fine to me.
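The "no regression" goal can be illustrated with a tiny eval harness. This is a sketch under stated assumptions: the two "snapshots" are hypothetical stand-in functions rather than real models, and exact-match scoring is only one simple way to grade outputs.

```python
def pass_rate(model, eval_set):
    """Fraction of eval cases where the model's output matches exactly."""
    hits = sum(1 for case in eval_set if model(case["input"]) == case["expected"])
    return hits / len(eval_set)


def find_regressions(old_model, new_model, eval_set):
    """Inputs the old snapshot got right and the new snapshot gets wrong."""
    return [case["input"] for case in eval_set
            if old_model(case["input"]) == case["expected"]
            and new_model(case["input"]) != case["expected"]]


# Hypothetical stand-ins for two model snapshots.
def old_snapshot(text):
    return text.strip().lower()


def new_snapshot(text):
    return text.lower()  # no longer strips whitespace


eval_set = [
    {"input": "Hello", "expected": "hello"},
    {"input": " World ", "expected": "world"},
]
print(pass_rate(old_snapshot, eval_set), pass_rate(new_snapshot, eval_set))
print(find_regressions(old_snapshot, new_snapshot, eval_set))
```

Tracking the specific inputs that regress, rather than only the aggregate pass rate, shows which tasks a new snapshot broke.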

I think free evals though, very very good incentive. I mean it's a fair trade, you get data, we get free evals. Exactly, and we sanitize PII, everything. We have no interest in the actual sensitive data, we just want to have good evaluation on the real use cases. I almost want to eval the eval, I don't know if that ever came up. Sometimes the evals themselves are wrong, and there's no way for me to tell you.

Everyone who is starting with LLM, tinkering with LLM, is like, "Yeah, evaluation, easy. I've done testing all my life." And then you start to actually build evals, understand all the corner cases, and you realize, "Wow, there's a whole field itself." So yeah, good evaluation is hard. And so, yeah.

But I think there's a, you know, I just talked to Braintrust, which I think is one of your partners. They also emphasize code-based evals versus your sort of low-code. What I see is like, I don't know, maybe there's some more that you didn't demo, but what I see is kind of like a low-code experience, right, for evals. Would you ever support like a more code-based, like would I run code on...

on OpenAI's eval platform? - For sure. I mean, we meet developers where they are. At the moment, the demand was more for easy to get started, like eval. But if we need to expose an evaluation API, for instance, for people to pass their existing test data, we'll do it. So yeah, there is no philosophical, I would say, misalignment on that. - Yeah, yeah, yeah. What I think this is becoming, by the way, and it's basically like you're becoming AWS, like the AI cloud.

And I don't know if that's a conscious strategy or it's like... It doesn't even have to be a conscious strategy. You're going to offer storage, you're going to offer compute, you're going to offer networking. I don't know what networking looks like. Networking is maybe like caching. It's a CDN. It's a prompt CDN. But it's the AI versions of everything. Do you see the analogies? Yeah, totally. Whenever I talk to developers, I feel like

Good models are just half of the story to build a good app. There's a ton more you need to do. Evaluation is the perfect example. You can have the best model in the world, if you're in the dark, it's really hard to gain confidence. And so our philosophy is the whole software development stack is being basically reinvented with LLMs.

There is no freaking way that OpenAI can build everything. There is just too much to build, frankly. And so my philosophy is essentially we'll focus on the tools which are the closest to the model itself. So that's why you see us investing quite a bit in fine-tuning, distillation, evaluation, because we think that it actually makes sense to have in one spot all of that. There is some sort of virtuous circle, essentially, that you can set in place. But stuff like, you know,

LLM Ops, like tools which are further away from the model, I don't know. If you want to do super elaborate, like prompt management or tooling, I'm not sure OpenAI has such a big edge, frankly, to build this sort of tools. So that's how we view it at the moment.

But again, frankly, the philosophy is super simple. The strategy is super simple. It's meeting developers where they want us to be. And so that's frankly day in, day out, what I try to do. Cool. Thank you so much for the time. I'm sure you're going to... Yeah, I have more questions on... A couple questions on voice and then also your call to action, what you want feedback on, right? I think we should spend a bit more time on voice because I feel like that's the big splash thing. I talked... Well, I mean, just like...

What is the future of real-time for OpenAI? Because I think obviously video is next, you already have it in the ChatGPT desktop app. Do we just have a permanent life? Are developers just going to be sending sockets back and forth with OpenAI? How do we program for that? What is the future? Yeah, that makes sense. I think with multi-modality, real-time is quickly becoming essentially the right experience to build an application.

So my expectation is that we'll see like a non-trivial volume of applications moving to real-time API. If you zoom out, like, audio is already simple. Like audio until basically now, audio

on the web, in apps, was basically very much like a second-class citizen. Like you basically did like an audio chatbot for users who did not have a choice. You know, they were like struggling to read or I don't know, they were like not super educated with technology. And so, frankly, it was like the crappier option, you know, compared to text. But when you talk to people in the real world, the vast majority of people like prefer to talk and

listen instead of typing and writing. We speak before we write. Exactly. I don't know. I mean, I'm sure it's the case for you in Singapore. For me, my friends in Europe, the number of WhatsApp voice notes I receive every day, I mean, just people. It makes sense, frankly. Chinese. Chinese, yeah. Yeah, all voice. It's easier. There is more emotions. I mean, you get the point across pretty well. And so, my personal ambition for the real-time API and audio in general

is to make audio and multimodality truly a first-class experience. If you're the amazing, super bold startup out of YC, you're going to build the next billion user application, to make it truly audio-first and make it feel like an actual good product experience.

So that's essentially the ambition and I think it could be pretty big. I think one issue that people have with the voice so far as released in the advanced voice mode is the refusals. You guys had a very inspiring model spec, I think Joanne worked on that, where you said like, yeah, we don't want to overly refuse all the time. In fact, even if not safe for work, in some occasions it's okay. Yeah.

How is there an API that we can say, not safe for work, okay? I think we'll get there. The model spec nailed it. It nailed it, it's so good. Yeah, we are not in the business of policing if you can say vulgar words or whatever. There are some use cases like I'm writing a Hollywood script, I want to say vulgar words, it's perfectly fine. And so I think the direction where we'll go here is that basically there will always be a set

of behaviors that we'll just forbid, frankly, because they're illegal or against our terms of service. But then there will be some more risky themes which are completely legal, like vulgar words or not safe for work stuff, where basically we'll expose controllable safety knobs in the API to basically allow you to say, "Hey, that theme okay, that theme not okay. How sensitive do you want the threshold to be on safety refusals?"

I think that's the direction. So a safety API. Yeah, in a way, yeah. Yeah, we've never had that. Yeah. Because right now, it's whatever you decide and then that's it. That would be the main reason I don't use OpenAI Voice is because of over-refusals. Yeah, yeah, yeah. No, we've got to fix that. Like singing. We're trying to do voice karaoke. So I'm a singer and you lock off singing. Yeah, yeah, yeah.

But I understand music gets you in trouble. Okay, yeah, so just generally, what do you want to hear from developers? We have all developers watching. What feedback do you want? Anything specific as well, especially from today. Anything that you are unsure about that you're like, our feedback could really help you decide. For sure. I think essentially it's becoming pretty clear after today that, I would say the OpenAI directions have become pretty clear after today:

investment in reasoning, investment in multi-modality, investment as well in, I would say, tool use, like function calling. To me, the biggest question I have is, where should we put the cursor next?

I think we need all three of them, frankly. So we'll keep pushing. Hire 10,000 people. Actually, no need. Build a bunch of bots. Exactly. And so, let's take O1 for instance. Is O1 smart enough for your problems? Let's set aside for a second the existing models. For the apps that you would love to build, is O1 basically it in reasoning or do we still have a step to do? Preview is not enough. I need the full one. Yeah.

So that's exactly the sort of feedback. Essentially what I would love to do is for developers, I mean there's a thing that Sam has been saying over and over again, it's easier said than done, but I think it's directionally correct. As a developer, as a founder, you basically want to build an app which is a bit too difficult for the model today, right? Like what you think is right, it's sort of working, sometimes not working,

And that way, that basically gives us a goal post and be like, "Okay, that's what you need to enable with the next model release in a few months." And so, I would say that usually that's the sort of feedback which is the most useful that I can directly incorporate. Awesome. I think that's our time.

Thank you so much, guys. Yeah, thank you so much. Thank you. We were particularly impressed that Olivier addressed the not safe for work moderation policy question head on, as that had only previously been picked up on in Reddit forums. This is an encouraging sign that we will return to in the closing candor with Sam Altman at the end of this episode.

Next, a chat with Romain Huet, friend of the pod, AI Engineer World's Fair closing keynote speaker and head of developer experience at OpenAI, on his incredible live demos and advice to AI engineers on all the new modalities.

Alright, we're live from OpenAI Dev Day. We're with Romain, who just did two great demos on stage and has been a friend of Latent Space. So thanks for taking some of the time. Of course, yeah. Thank you for being here and spending your time with us today. Yeah, I appreciate it. I appreciate you guys putting this on. I know it's like extra work, but it really shows the developers that you care about reaching out.

Yeah, of course. I think when you go back to the OpenAI mission, I think for us it's super important that we have the developers involved in everything we do, making sure that they have all of the tools they need to build successful apps. And we really believe that the developers are always going to invent the ideas, the prototypes, the fun factors of AI that we can't build ourselves. So it's really cool to have everyone here.

We had Michelle from you guys on. Yes, great episode. Thank you. She very seriously said API is the path to AGI. Correct. And people in our YouTube comments were like,

API is not AGI. I'm like, no, she's very serious. API is the path to AGI because you're not going to build everything like the developers are, right? Of course, yeah. That's the whole value of having a platform and an ecosystem of amazing builders who can in turn create all of these apps. I'm sure we talked about this before, but there's now more than three million developers building on OpenAI. So, it's pretty exciting to see all of that energy into creating new things.

I was going to say, you built two apps on stage today, an International Space Station tracker and then a drone. The hardest thing must have been opening Xcode and setting that up. Now, the models are so good that they can do everything else. You had two modes of interaction. You had kind of like the ChatGPT app to get the plan with O1, and then you had Cursor to apply some of the changes. How should people think about the best way to consume the coding models, especially both for brand new projects and then existing projects that they're trying to modify?

Yeah. I mean, one of the things that's really cool about O1 Preview and O1 Mini being available in the API is that you can use it in your favorite tools like Cursor, like I did, right? And that's also what like Devin from Cognition can use in their own software engineering agents.

In the case of Xcode, it's not quite deeply integrated in Xcode, so that's why I had ChatGPT side by side. But it's cool, right? Because I could instruct O1 Preview to be my coding partner and brainstorming partner for this app, but also consolidate all of the files and architect the app the way I wanted. So all I had to do was just port the code over to Xcode and zero-shot the app built. I don't think I conveyed, by the way, how big a deal that is, but you can now create an iPhone app

from scratch describing a lot of intricate details that you want and your vision comes to life in like a minute. It's pretty outstanding. I have to admit I was a bit skeptical because if I open up Xcode, I don't know anything about iOS programming. You know which file to paste it in. You probably set it up a little bit. So I'm like I have to go home and test it to like figure out and I need the ChatGPT desktop app so that it can tell me where to click. Yeah, I mean like

Xcode and iOS development has become easier over the years since they introduced Swift and SwiftUI. I think back in the days of Objective-C or like the storyboard, it was a bit harder to get in for someone new. But now with Swift and SwiftUI, their dev tools are really exceptional. But now when you combine that with O1 as your brainstorming and coding partner, it's like your architect effectively. That's the best way I think to describe O1. People ask me like, "Can GPT-4 do some of that?"

And it certainly can, but I think it will just start spitting out code, right? And I think what's great about O1 is that it can make up a plan. In this case, for instance, the iOS app had to fetch data from an API. It had to look at the docs. It had to look at how do I parse this JSON? Where do I store this thing? And kind of wire things up together. So that's where it really shines. Is Mini or Preview the better model that people should be using? Oh, good. Yeah.

I think people should try both. We're obviously very excited about the upcoming O1 that we shared the evals for. But we noticed that O1 Mini is very, very good at everything math, coding, everything STEM. If for your kind of brainstorming or your kind of science part you need some broader knowledge, then reaching for O1 Preview is better.

But yeah, I used O1 Mini for my second demo and it worked perfectly. All I needed was very much like something rooted in code, architecting and wiring up like a front end, a back end, some UDP packets, some web sockets, something very specific and it did that perfectly. And then maybe just talking about voice and Wanderlust, the app that keeps on giving. It does indeed, yeah. What's the backstory behind preparing for all of that?

You know, it's funny because when last year for Dev Day, we were trying to think about what could be a great demo app to show like an assistive experience. I've always thought travel is a kind of a great use case because you have like pictures, you have locations, you have the need for translations potentially. There's like so many use cases that are bounded to travel that I thought last year, let's use a travel app and that's how Wanderlust came to be. But of course, a year ago, all we had was a text-based assistant.

And now we thought, well, if there's a voice modality, what if we just bring this app back as a wink? And what if we were interacting better with voice? And so with this new demo, what I showed was the ability to have a complete conversation in real time with the app. But also, the thing we wanted to highlight was the ability to call tools and functions, right? So in this case, we placed a phone call using the Twilio API interfacing with our AI agents.

but developers are so smart that they'll come up with so many great ideas that we could not think ourselves, right? But what if you could have like, you know, a 911 dispatcher? What if you could have like a customer service like a center that is much smarter than what we've been used to today? There's gonna be so many use cases for real time. It's awesome. Yeah, and sometimes actually you like this should kill phone trees like

Like there should not be like dial one. Of course. Para español, you know. Yeah, exactly. I mean, even you starting speaking Spanish would just do the thing, you know. You don't even have to ask. So yeah, I'm excited for this future where we don't have to interact with those legacy systems. Yeah, yeah. Is there anything, so you're doing function calling in a streaming environment. So basically it's WebSockets, it's UDP, I think. Yeah.

It's basically not guaranteed to be exactly once delivery. Is there any coding challenges that you encountered when building this? Yeah, it's a bit more delicate to get into it. We also think that for now what we ship is a beta of this API. I think there's much more to build onto it.
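One way to cope with delivery that is not guaranteed to be exactly-once is to make function-call handling idempotent: remember each call's identifier and never run the side effect twice. The sketch below illustrates that general technique, not the Realtime API's actual client logic. The `FunctionCallDeduper` class, the `call_id` key, and the `place_call` tool are hypothetical names.

```python
from typing import Any, Callable


class FunctionCallDeduper:
    """Runs each tool call at most once, keyed by a caller-supplied call id."""

    def __init__(self, handlers: dict[str, Callable[..., Any]]):
        self._handlers = handlers
        self._results: dict[str, Any] = {}  # call_id -> cached result

    def handle(self, call_id: str, name: str, arguments: dict) -> Any:
        # A redelivered event returns the cached result instead of
        # repeating the side effect (for example, placing a second phone call).
        if call_id in self._results:
            return self._results[call_id]
        result = self._handlers[name](**arguments)
        self._results[call_id] = result
        return result


# Hypothetical tool with a visible side effect.
calls_placed: list[str] = []


def place_call(number: str) -> str:
    calls_placed.append(number)
    return f"calling {number}"


deduper = FunctionCallDeduper({"place_call": place_call})
first = deduper.handle("call_1", "place_call", {"number": "555-0100"})
second = deduper.handle("call_1", "place_call", {"number": "555-0100"})  # duplicate delivery
```

The cache here grows without bound; a real client would expire old call ids once the session ends.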

It does have the function calling and the tools, but we think that for instance, if you want to have something very robust on your client side, maybe you want to have WebRTC as a client, right? And as opposed to like directly working with the sockets at scale. So that's why we have partners like LiveKit and Agora if you want to use them. And I'm sure we'll have many more in the future.

But yeah, we keep on iterating on that and I'm sure the feedback of developers in the weeks to come is going to be super critical for us to get it right. Yeah, I think LiveKit has been fairly public that they are used in the ChatGPT app.

Like, is it just all open source and we just use it directly with OpenAI or do we use LiveKit Cloud or something? So right now we released the API, we released some sample code also and reference clients for people to get started with our API. And we also partnered with LiveKit and Agora so they also have their own like ways to help you get started that plugs naturally with the real-time API.

So depending on the use case, people can decide what to use. If you're working on something that's completely client, or if you're working on something on the server side, for the voice interaction, you may have different needs. So we want to support all of those. I know you've gotta run. Is there anything that you want the AI engineering community to give feedback on specifically? Like, even down to, like, you know, a specific API endpoint or, like...

What's the thing that you want? Yeah, I mean, if we take a step back, I think Dev Day this year is a little different from last year and in a few different ways. But one way is that we wanted to keep it intimate, even more intimate than last year. We wanted to make sure that the community is on the spotlight. That's why we have community talks and everything.

And the takeaway here is like learning from the very best developers and AI engineers. And so, you know, we want to learn from them. Most of what we ship this morning, including things like prompt caching, the ability to generate prompts quickly in the playground, or even things like vision fine tuning. These are all things that developers have been asking of us. And so the takeaway I would leave them with is to say like, hey, the roadmap that we're working on is heavily influenced by them and their work. And so we love feedback.

from high-level feature requests, as you say, down to very intricate details of an API endpoint, we love feedback. So yes, that's how we build this API. Yeah, I think the model distillation thing as well, it might be the most boring, but actually used a lot. True, yeah. And I think maybe the most unexpected, right? Because I think if I read Twitter correctly the past few days,

A lot of people were expecting us to ship the real-time API for speech-to-speech. I don't think developers were expecting us to have more tools for distillation.

And we really think that's going to be a big deal, right? If you're building apps where you want low latency, low cost, but high performance, high quality on the use case, distillation is going to be amazing. Yeah, I sat in the distillation session just now and they showed how they distilled from 4o to 4o Mini and it was like only like a 2% hit in the performance and 15x cheaper. Yeah, I was there as well for the Superhuman kind of use case, inspired by an email client. Yeah, this was really good.
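As a back-of-the-envelope reading of those two numbers, the sketch below computes quality per dollar for the distilled model relative to the original. It assumes the "2% hit" is a relative drop on the task metric and "15x cheaper" is the per-request cost ratio; the function name and that interpretation are assumptions, not figures from the session beyond the two quoted.

```python
def quality_per_dollar_gain(quality_drop: float, cost_ratio: float) -> float:
    """How many times more task quality per dollar the distilled model delivers.

    quality_drop: relative loss on the task metric (0.02 means a 2% hit).
    cost_ratio:   how many times cheaper each request is (15 means 15x).
    """
    if not 0.0 <= quality_drop < 1.0:
        raise ValueError("quality_drop must be in [0, 1)")
    if cost_ratio <= 0:
        raise ValueError("cost_ratio must be positive")
    return (1.0 - quality_drop) * cost_ratio


# Numbers quoted in the conversation: roughly a 2% hit at 15x lower cost.
gain = quality_per_dollar_gain(quality_drop=0.02, cost_ratio=15)
print(f"about {gain:.1f}x more quality per dollar")
```

Under those assumptions the distilled model comes out at roughly 14.7 times the quality per dollar, which is why a small quality loss can be an easy trade for high-volume use cases.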

Cool, man. Amazing. Thank you so much, buddy. Thanks again for being here today. It's always great to have you. As you might have picked up at the end of that chat, there were many sessions throughout the day focused on specific new capabilities, like the new model distillation features, combining evals and fine-tuning. For our next session, we are delighted to bring back two former guests of the pod, which is something listeners have been greatly enjoying in our second year of doing the Latent Space podcast.

Michelle Pokrass of the API team joined us recently to talk about structured outputs and today gave an updated long form session at Dev Day describing the implementation details of the new structured output mode. We also got her updated thoughts on the voice mode API we discussed in her episode now that it is finally announced.

She is joined by friend of the pod and super blogger, Simon Willison, who also came back as guest co-host in our Dev Day 2023 episode. Great, we're back live at Dev Day. Returning guest, Michelle. And then returning guest co-host, Ford.

- Four for first, yeah, I don't know. - I've lost count. - I've lost count. - It's been a few. - Simon Willison is back. Yeah, we just wrapped everything up. Congrats on getting everything live. Simon did a great live blog, so if you haven't caught up. - I implemented my live blog while waiting for the first talk to start using like, GPT-4 wrote me the JavaScript. And I got that live just in time and then yeah, I was live blogging the whole day. - Are you a Cursor enjoyer? - I haven't really gotten to Cursor yet, to be honest.

I just haven't spent enough time for it to click, I think. I'm more of copy and paste things out to Claude and ChatGPT. Yeah, it's interesting. I've converted to Cursor for it and O1 is so easy to just toggle on and off. What's your workflow? Copy, paste, apply. I'm going to be real. I'm still VS Code Copilot.

So, Copilot is actually the reason I joined OpenAI. It was, you know, before ChatGPT, this is the thing that really got me. So, I'm still into it. But I keep meaning to try out Cursor and I think now that things have calmed down, I'm going to give it a real go.

Yeah, it's a big thing to change your tool of choice. Yes. Yeah, I'm pretty dialed. Yeah. I mean, if you want, you can just fork VS Code and make your own. That's the thing to do. It's a done thing, right? Yeah. We talked about doing a hackathon where the only thing you do is fork VS Code and may the best fork win. Nice. That's actually a really good idea.

Yeah, so, I mean, congrats on launching everything today. I know we touched on it a little bit, but everyone was kind of guessing that Voice API was coming and we talked about it in our episode. How do you feel going into the launch? Any design decisions that you want to highlight?

Yeah, super jazzed about it. The team has been working on it for a while. It's like a very different API for us. It's the first WebSocket API. So a lot of different design decisions to be made, like what kind of events do you send? When do you send an event? What are the event names? What do you send on connection versus on future messages? So there have been a lot of interesting decisions there. The team has also hacked together really cool projects as we've been testing it.

One that I really liked is we had an internal hackathon for the API team and some folks built like a little hack where you could use Vim with voice mode to like control Vim, and you would tell the model, like, write a file, and it would, you know, know all the Vim commands and type those in. So yeah, a lot of cool stuff we've been hacking on and really excited to see what people build with it.

I've got to call out a demo from today. I think it was Katia had a 3D visualization of the solar system like WebGL solar system you could talk to. That is one of the coolest conference demos I've ever seen. That was so convincing. I really want the code. I really want the code for that to get put out there. I'll talk to the team. I think we can probably put it out. Absolutely beautiful example. And it made me realize that the real-time API, this WebSocket API, it means that building a website that you can just talk to

is easy now. It's like it's not difficult to build, spin up a web app where you have a conversation with it, it calls functions for different things, it interacts with what's on the screen. I'm so excited about that. There are all of these projects I thought I'd never get to and now I'm like, you know what? Spend a weekend on it. I can have a talk to your database with a little web application. That's so cool. Chat with PDF but really

- Really chat with PDF. - Yeah, exactly. - Not completely. - Totally. And it's not even hard to build. That's the crazy thing about this. Yeah, very cool. Yeah, when I first saw the space demo, I was actually just wowed. And I had a similar moment, I think, to all the people in the crowd. I also thought Romain's drone demo was super cool. - That was a super fun one as well. - Yeah, I actually saw that live this morning and I was holding my breath for sure. Knowing Romain, he probably spent the last two days working on it.

But yeah, I'm curious about, you were talking with Romain actually earlier about what the different levels of abstraction are with WebSockets. It's something that most developers have zero experience with. I have zero experience with it. Apparently there's the RTC level and then there's the WebSocket level, and there's levels in between. Not so much. I mean, with WebSockets, with the way they've built their API, you can connect directly to the OpenAI WebSocket from your browser. And it's actually just regular JavaScript. You instantiate the WebSocket thing.

It looks quite easy from their example code. The problem is that if you do that, you're sending your API key from source code that anyone can view. Yeah, we don't recommend that for production. So it doesn't work for production, which is frustrating because it means that you have to build a proxy. So I'm going to have to go home and build myself a little WebSocket proxy just to hide my API key. I want OpenAI to do that. I want OpenAI to solve that problem for me so I don't have to build the

1000th WebSocket proxy just for that one problem. Totally. We've also partnered with some partner solutions. We've partnered with, I think, Agora, LiveKit, a few others. So, there's some loose solutions there, but yeah, we hear you. It's a beta.
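The proxy being described boils down to two things: the server, not the browser, attaches the API key when it opens the upstream connection, and it then relays messages in both directions. The sketch below shows only that relay logic in a transport-agnostic way, using standard-library `asyncio` and in-memory queues in place of real sockets. The function names are hypothetical, and the bearer-token header is an assumption to check against the current API reference.

```python
import asyncio
from typing import Awaitable, Callable, Optional

Receive = Callable[[], Awaitable[Optional[str]]]
Send = Callable[[str], Awaitable[None]]


def upstream_headers(api_key: str) -> dict[str, str]:
    """Headers the proxy adds server-side, so the key never reaches the browser.

    Assumption: the upstream accepts a standard bearer token.
    """
    return {"Authorization": f"Bearer {api_key}"}


async def pump(receive: Receive, send: Send) -> None:
    """Forward messages one way until `receive` yields None (connection closed)."""
    while True:
        message = await receive()
        if message is None:
            break
        await send(message)


async def relay(browser_recv: Receive, browser_send: Send,
                upstream_recv: Receive, upstream_send: Send) -> None:
    """Relay both directions concurrently: browser to upstream and back."""
    await asyncio.gather(
        pump(browser_recv, upstream_send),
        pump(upstream_recv, browser_send),
    )


async def _demo() -> tuple[list[str], list[str]]:
    # In-memory stand-ins for the two WebSocket connections.
    browser_in: asyncio.Queue = asyncio.Queue()
    upstream_in: asyncio.Queue = asyncio.Queue()
    for item in ("hello", None):
        browser_in.put_nowait(item)
    for item in ("hi there", None):
        upstream_in.put_nowait(item)
    to_upstream: list[str] = []
    to_browser: list[str] = []

    async def send_up(message: str) -> None:
        to_upstream.append(message)

    async def send_down(message: str) -> None:
        to_browser.append(message)

    await relay(browser_in.get, send_down, upstream_in.get, send_up)
    return to_upstream, to_browser


SENT_UPSTREAM, SENT_TO_BROWSER = asyncio.run(_demo())
```

A production proxy would also authenticate the browser user, cap usage, and close one side when the other disconnects; this sketch waits for both directions to finish on their own.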

Yeah, I mean you still want a solution where someone brings their own key and they can trust that you don't get it, right? Kind of. I mean I've been building a lot of bring your own key apps where it's my HTML and JavaScript, I store the key in local storage in their browser and it never goes anywhere near my server which works but how do they trust me? How do they know I'm not going to ship another piece of JavaScript that steals the key from them? And so nominally this actually comes with the crypto background. This is what Metamask does.

Yeah, it's a public-private key thing. Yeah. Yeah. Like, why doesn't OpenAI do that? I don't know if obviously it's- I mean, as with most things, you'd think there's like some really interesting question and really interesting reason and the answer is just, you know, it's not been the top priority and it's hard for a small team to do everything.

I have been hearing a lot more about the need for things like sign in with OpenAI. I want OAuth. I want to bounce my users through ChatGPT and I get back a token that lets me spend up to $4 on the API on their behalf. Then I could ship all of my stupid little experiments, which currently require people to copy and paste their API key in, which cuts off everyone. Nobody knows how to do that. Totally. I hear you. Something we're thinking about. And yeah, stay tuned. Yeah, yeah. Right now, I think the only player in town is OpenRouter. That is basically-- it's funny. It was made by-- I forget his name. But he used to be CTO of OpenSea. And the first thing he did when he came over was build MetaMask for AI. Totally. Yeah, very cool. What's the most underrated release from today?

Vision fine-tuning. Vision fine-tuning is so underrated. For the past two months, whenever I talk to founders, they tell me this is the thing they need most. A lot of people are doing OCR on very bespoke formats like government documents, and vision fine-tuning can help a lot with that use case.

Also, bounding boxes. People have found a lot of improvements for bounding boxes with Vision Fine-Tuning. So yeah, I think it's pretty slept on. People should try it. You only really need 100 images to get going. Tell me more about bounding boxes. I didn't think GPT-4 Vision could do bounding boxes at all.

Yeah, it's actually not that amazing at it. We're working on it. But with fine-tuning, you can make it really good for your use case. That's cool because I've been using Google Gemini's bounding box stuff recently. It's very, very impressive. Yeah. But being able to fine-tune a model for that. The first thing I'm going to do with fine-tuning for images is I've got five chickens and I'm going to fine-tune a model that can tell which chicken is which. Love it.

Which is hard because three of them are grey. Yeah. So there's a little bit of... Okay, this is my new favorite use case. This is awesome. Yeah. I've managed to do it with prompting. Just like I gave Claude pictures of all of the chickens and then said, okay, which chicken is this? Yeah. But it's not quite good enough because it confuses the grey chickens. Listen, we can close that eval gap. Yeah. It's going to be a great eval. My chicken eval is going to be fantastic.

I'm also really jazzed about the evals product. It's kind of like a sub-launch of the distillation thing, but people have been struggling to make evals. And the first time I saw the flow with how easy it is to make an eval in our product, I was just blown away. So I recommend people really try that. I think that's what's holding a lot of people back from really investing in AI because they just have a hard time figuring out if it's going well for their use case. So we've been working on making it easier to do that.

Does the eval product include structured output testing? Yeah, you can check if it matches your JSON schema. We have guaranteed structured output anyway.

So we don't have to test it. Well, not the schema, but the performance. See, these seem easy to tell apart. I think so. It's like, it might call the wrong function. You're going to have right schema, wrong output. So you can do function calling testing. I'm pretty sure. I'll have to check that for you, but I think so. We'll make sure it's in the notes. How do you think about the evolution of the API design? I think, to me, that's the most important thing. So even with the OpenAI levels, like chatbots, I can understand what the API design looks like.
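The "right schema, wrong output" point can be made concrete with a small grader that separates structural failures from semantic ones. This is a minimal sketch, not the evals product: the `grade_function_call` helper, its verdict labels, and the `{"name": ..., "arguments": ...}` shape are all hypothetical.

```python
import json


def grade_function_call(raw_output: str, expected_name: str, expected_args: dict) -> str:
    """Classify a model's function call as a structural or a semantic failure."""
    try:
        call = json.loads(raw_output)
    except json.JSONDecodeError:
        return "invalid_json"
    if not isinstance(call, dict) or "name" not in call or "arguments" not in call:
        return "wrong_shape"
    # Everything below is schema-valid; these are the failures that
    # guaranteed structured output does not rule out.
    if call["name"] != expected_name:
        return "wrong_function"
    if call["arguments"] != expected_args:
        return "wrong_arguments"
    return "pass"


expected = ("get_weather", {"city": "Paris"})
verdicts = [
    grade_function_call('{"name": "get_weather", "arguments": {"city": "Paris"}}', *expected),
    grade_function_call('{"name": "get_time", "arguments": {"city": "Paris"}}', *expected),
    grade_function_call('{"name": "get_weather", "arguments": {"city": "Lyon"}}', *expected),
    grade_function_call("not json", *expected),
]
```

With guaranteed structured output the first two verdict types should never appear, so an eval of this kind spends its effort on the last two.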

reasoning, I can kind of understand it even though like chain of thought kind of changes things. As you think about real-time voice and then you think about agents, it's like how do you think about how you design the API and like what the shape of it is? Yeah, so I think we're starting with the lowest level capabilities and then we build on top of that as we know that they're useful. So a really good example of this is real-time. We're actually going to be shipping

audio capabilities in chat completions. So, this is like the lowest level capability. So, you supply audio in and you can get back raw audio and it works at the request response layer. But through building advanced voice mode, we realized ourselves that like it's pretty hard to do with something like chat completions. And so, that led us to building this WebSocket API.

So we really learned a lot from our own tools. And we think the chat completions thing is nice for certain use cases or async stuff, but you're really going to want a real-time API. And then as we test more with developers, we might see that it makes sense to have another layer of abstraction on top of that, something closer to client-side libraries. But for now, that's where we feel we have a really good point of view.

So that's a question I have: if I've got a half-hour-long audio recording, at the moment the only way I can feed that in is if I call the WebSocket API and slice it up into little JSON base64 snippets and fire them all over. In that case, I'd rather, like with an image in the chat completions API, just give you a URL to my MP3 file as input. Is that something? That's what we're going to do. Oh, thank goodness for that. Yes.
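The slicing workaround described above can be sketched as follows. This is an illustration under stated assumptions, not OpenAI's client code: the event type `input_audio_buffer.append` with a base64 `audio` field is assumed to match the Realtime API's event format, and the helper name and chunk size are hypothetical.

```python
import base64
import json
from typing import Iterator


def audio_append_events(pcm: bytes, chunk_bytes: int = 32_000) -> Iterator[str]:
    """Yield one JSON event per chunk of raw audio, base64-encoded.

    The event shape is an assumption about the Realtime API; check the
    current API reference before relying on it.
    """
    if chunk_bytes <= 0:
        raise ValueError("chunk_bytes must be positive")
    for start in range(0, len(pcm), chunk_bytes):
        chunk = pcm[start:start + chunk_bytes]
        yield json.dumps({
            "type": "input_audio_buffer.append",
            "audio": base64.b64encode(chunk).decode("ascii"),
        })


# 70,000 bytes of silence split into 32,000-byte chunks.
events = list(audio_append_events(b"\x00" * 70_000))
```

Each string would then be sent as one WebSocket message; the connection handling is omitted here.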

It's in the blog post. I think it's a short one-liner, but it's rolling out, I think, in the coming weeks. Oh, wow. Oh, really soon then. Yeah, the team has been sprinting. We're just putting finishing touches on stuff. Do you have a feel for the length limit on that? I don't have it off the top. Okay. Sorry.

Because yeah, I do a lot of work with transcripts of hour-long YouTube videos. Yeah. Currently, I run them through Whisper and then I do the transcript that way. But being able to do the multimodal thing, that would be really useful. Totally, yeah. We're really jazzed about it. We want to basically give you the lowest-level capabilities we have, and the things that make them easier to use. So, targeting kind of both.

I just realized what I can do though is I do a lot of Unix utilities, little like Unix things. I want to be able to pipe the output of a command into something which streams that up to the WebSocket API and then speaks it out loud. So I can do streaming speech of the output of things. That should work. I think you've given me everything I need for that. That's cool. Yeah. Excited to see what you build.

I heard there were multiple competing solutions and you guys evaluated them before you picked WebSockets. Like server-sent events, polling.

Can you give your thoughts on the live updating paradigms that you guys looked at? Because I think a lot of engineers have looked at stuff like this. I think WebSockets are just a natural fit for bidirectional streaming. Other places I've worked, like Coinbase, we had a WebSocket API for pricing data. I think it's just a very natural format. So it wasn't even really that controversial at all?

I don't think it was super controversial. I mean, we definitely explored the space a little bit, but I think we came to WebSockets pretty quickly. Cool. Video? Yeah. Not yet, but possible in the future. I actually was hoping for the ChatGPT desktop app with video today because that was demoed. Yeah. This is Dev Day.

I think the moment we have the ability to send images over the WebSocket API, we get video. My question is, how frequently? Because sending a whole video frame of like a 1080p screen might be too much. What are the limits on a WebSocket chunk going over? I don't know.

I don't have that off the top. Like Google Gemini, you can do an hour's worth of video in their context window and just by slicing it up into one frame at 10 frames a second. And it does work. So...

I don't know. But then that's the weird thing about Gemini is it's so good at you just giving it a flood of individual frames. It'll be interesting to see if GPT-4 can handle that or not. Do you have any more feature requests? It's been a long day for everybody, but you got me show right here.

My one is, I want you to do all of the accounting for me. I want my users to be able to run my apps and I want them to call your APIs with their user ID and have you go, "Oh, they've spent 30 cents. Cut them off at a dollar," and I can check how much they've spent. All of that stuff, because I'm having to build that at the moment and I really don't want to. I don't want to be a token accountant. I want you to do the token accounting for me. Yeah, totally. I hear you. It's good feedback.
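The "token accounting" that developers currently build themselves can be sketched roughly like this. Everything here is hypothetical: the `SpendTracker` class, the flat per-1,000-token price, and the one-dollar cap are illustrative values, not OpenAI pricing or an OpenAI feature.

```python
from collections import defaultdict
from decimal import Decimal


class SpendTracker:
    """Track per-user spend and enforce a hard cap (illustrative only)."""

    def __init__(self, limit_usd: Decimal, price_per_1k_tokens: Decimal):
        self.limit = limit_usd
        self.price = price_per_1k_tokens  # hypothetical flat rate
        self.spent = defaultdict(Decimal)  # user_id -> dollars spent so far

    def record(self, user_id: str, tokens: int) -> Decimal:
        """Add the cost of a finished request and return the running total."""
        self.spent[user_id] += self.price * Decimal(tokens) / Decimal(1000)
        return self.spent[user_id]

    def allowed(self, user_id: str) -> bool:
        """True while the user is still under the cap."""
        return self.spent[user_id] < self.limit


tracker = SpendTracker(limit_usd=Decimal("1.00"),
                       price_per_1k_tokens=Decimal("0.01"))
after_first = tracker.record("alice", 30_000)    # 30 cents spent
still_allowed = tracker.allowed("alice")
after_second = tracker.record("alice", 70_000)   # reaches the one-dollar cap
cut_off = not tracker.allowed("alice")
```

A real deployment would also need persistent storage, per-model prices, and a check before each request rather than only after it.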

Well, how does that contrast with your actual priorities? I feel like you have a bunch of priorities. They showed some on stage with multi-modality and all that. Yeah. It's hard to say. I would say things change really quickly. Things that are big blockers for user adoption, we find very important. It's a rolling prioritization. No Assistants API update? Not at this time.

Yeah. I was hoping for like an O1-native thing in Assistants. Yeah. I thought they would go well together. We're still kind of iterating on the formats. I think there are some problems with the Assistants API, and some things it does really well. And I think we'll keep iterating and land on something really good, but it just wasn't quite ready yet. One of the things that's good in the Assistants API is hosted tools. People really like hosted tools, and especially RAG.

And then something that's less intuitive is just how many API requests you need to get going with the Assistants API. It's quite-- It's quite a lot. Yeah, you've got to create an assistant, you've got to create a thread, you've got to do all this stuff. So yeah, it's something worth thinking about. It shouldn't be so hard. The only thing I've used it for so far is code interpreter. It's like it's an API to code interpreter. Crazy exciting. Yes, we want to fix that and make it easier to use. I want code interpreter over WebSockets. That would be wildly interesting.

Yeah. Do you want to bring your own code interpreter, or do you want to use OpenAI's? I want to use that because code interpreters are a hard problem. Sandboxing and all of that stuff is... Yeah, but there's a bunch of code-interpreter-as-a-service things out there. There are a few now, yeah. Because there's... I think you don't allow arbitrary installation of packages. Oh, they do. They really do. Unless they use your hack.

Yeah, and I do. You can upload a pip package. You can compile C code in Code Interpreter. I know. That's a hack. Oh, it's such a glorious hack, though. Okay. I've had it write me custom SQLite extensions in C and compile them and run them inside of Python, and it works. I mean, yeah. There's others. E2B is one of them. It'll be interesting to see what the real-time version of that will be.

Yeah. Awesome, Michel. Thank you for the update. We left the episode as what will voice mode look like? Obviously, you knew what it looked like, but you didn't say it. So now you could. Yeah, here we are. Hope you guys find it. Yeah. Cool. Awesome. Thank you. That's it. Our final guest today, and also a familiar recent voice on the Latent Space Pod, presented at one of the community talks at this year's Dev Day.

Alistair Pullen of Cosine made a huge impression with all of you. Special shout out to listeners like Jesse from Morph Labs when he came on to talk about how he created synthetic datasets to fine-tune the largest LoRAs that had ever been created for GPT-4o to post the highest ever scores on SWE-bench and SWE-bench Verified while not getting recognition for it because he refused to disclose his reasoning traces to the SWE-bench team.

Now that OpenAI's O1 preview is announced, it is incredible to see the OpenAI team also obscure their chain of thought traces for competitive reasons and still perform lower than Cosine's Genie model.

We snagged some time with Ali to break down what has happened since his episode aired. Welcome back, Ali. Thank you so much. Thanks for having me. So you just spoke at OpenAI Dev Day. What was the experience like? Did they reach out to you? You seem to have a very close relationship. Yeah, so off the back of...

Off the back of the work that we've done that we spoke about last time we saw each other, I think that OpenAI definitely felt that the work we've been doing around fine-tuning was worth sharing. I would obviously tend to agree, but today I spoke about some of the techniques that we learned. Obviously it was like a non-linear path

arriving to where we've arrived and the techniques that we've built to build Genie. So I think I shared a few extra pieces about some of the techniques and how it really works under the hood, how you generate a data set to show the model how to do what we show the model. And that was mainly what I spoke about today. I mean, yeah, they reached out and I was super excited at the opportunity, obviously. Like, it's not every day that you get to come and do this, especially in San Francisco.

Yeah, they reached out and they were like, do you want to talk at Dev Day? You can speak about basically anything you want related to what you've built. And I was like, sure, that's amazing. I'll talk about fine tuning how you build a model that does this software engineering. So, yeah. Yeah. And the trick here is when we talked, O1 was not out. No, it wasn't. Did you know about O1?

I didn't know. I knew some bits and pieces. No, not really. I knew a reasoning model was on the way. I didn't know what it was going to be called. I knew as much as everyone else. Strawberry was the name back then. Because, you know, fast forward, you were the first to hide your chain of thought reasoning traces as IP. Yes. Famously, that got you in trouble with SWE-bench or whatever. I feel slightly vindicated by that now. And now, obviously, O1 is doing it. Yeah, the fact that, I mean, like,

I think it's true to say right now that the reasoning of your model gives you the edge that you have. And the amount of effort that we put into our data pipeline to generate these human-like reasoning traces was... I mean, that wasn't for nothing. We knew that this was the way that you'd unlock more performance, getting the model to think in a specific way. In our case, we wanted it to think like a software engineer. But yeah, I think that...

The approach that other people have taken, like OpenAI, in terms of reasoning has definitely shown us that we were going down the right path pretty early on. And even now we've started replacing some of the reasoning traces in our Genie model with reasoning traces generated by O1, or at least in tandem with O1. And we've already started seeing improvements in performance from that point. But no, like back to your point, in terms of like the whole like

withholding them, I still think that that was the right decision to make because of the very reason that everyone else has decided to not share those things. It shows exactly how we do what we do and that is our edge at the moment. As a founder, they also featured Cognition on stage, talking about that.

How does that make you feel that like, you know, they're like, hey, O1 is so much better, makes us better. For you, it should be like, oh, I'm so excited about it too because now all of a sudden it kind of raises the floor for everybody. Like how should people, especially new founders, how should they think about, you know, worrying about the new model versus being excited about them, just focusing on the core IP and maybe switching out some of the parts like you mentioned? Yeah, speaking for us, I mean, obviously we were extremely excited about O1 because...

at that point the process of reasoning is obviously very much baked into the model. We fundamentally, if you like remove all distractions and everything, we are a reasoning company, right? We want to reason in the way that a software engineer reasons. So when I saw that model announced, I thought immediately, well, I can improve the quality of my traces coming out of my pipeline. So like my signal to noise ratio gets better. And then not immediately, but down the line, I'm going to be able to train those traces into O1 itself. So I'm going to get even more performance that way as well. So it's,

for us a really nice position to be in to be able to take advantage of it both on the prompted side and the fine-tuned side and also because fundamentally like

we are, I think, fairly clearly in a position now where we don't have to worry about what happens when O2 comes out, what happens when O3 comes out. This process continues. Like, even going from, you know, when we first started going from 3.5 to 4, we saw this happen. And then from 4 Turbo to 4o and then from 4o to O1, we've seen the performance get better every time. And I think, I mean, like,

the crude advice I'd give to any startup founders: try to put yourself in a position where you can take advantage of the same, you know, like sea level rise every time, essentially. Do you make anything out of the fact that you were able to take 4o and fine-tune it higher than O1 currently scores on SWE-bench Verified? Yeah, I mean like, yeah, that was obviously, to be honest with you, you realized that before I did. Adding value. Yes, absolutely. That's a value-add investor right there. No, obviously I think it's been,

That in and of itself is really vindicating to see because I think we have heard from some people, not a lot of people, but some people saying, well, okay, well, if everyone can reason, then what's the point of doing your reasoning? But it shows how much more signal is in the custom reasoning that we generate. And again, it's the very sort of obvious thing. If you take something that's made to be general and you make it specific, of course it's going to be better at that thing, right?

So it was obviously great to see we still are better than O1 out of the box, even with an older model. And I'm sure that that delta will continue to grow once we're able to train O1 and once we've done more work on our data set using O1, that delta will grow as well. It's not obvious to me that they will allow you to fine-tune O1, but maybe they'll try. I think the core question that OpenAI really doesn't want you to figure out is can you use an open source model and beat O1?

Interesting. Because you basically have shown proof of concept that a non-O1 model can beat O1. And their whole O1 marketing is don't bother trying. Like, don't bother stitching together multiple chain of thought calls. We did something special. Secret sauce. You don't know anything about it. And somehow...

you know, your 4o chain of thought reasoning as a software engineer is still better. Maybe it doesn't last. Maybe they're going to run O1 for five hours instead of five minutes and then suddenly it works. So I don't know. It's hard to know. I mean, one of the things that we just want to do out of sheer curiosity is do something like fine-tune 405B on the same data set. Like same context window length, right? So it should be fairly easy. We haven't done it yet. Truthfully, we have been so swamped with...