The Agent Reasoning Interface: o1/o3, Claude 3, ChatGPT Canvas, Tasks, and Operator — with Karina Nguyen of OpenAI

Latent Space: The AI Engineer Podcast — Practitioners talking LLMs, CodeGen, Agents, Multimodality, AI UX, GPU Infra and all things Software 3.0


Welcome back. From Sam Altman to Satya Nadella, many people are saying that 2025 is the year of agents. Since our podcast conversations about DeepSeek, the mainstream narrative has become obsessed with DeepSeek R1 and what it means to have a competitive open weights reasoning model from China.

swyx wrote a viral blog post about the reasoning price war of January 2025, and today, OpenAI has responded by slashing the price of O1 Mini from $12 per million tokens to $4.40, and also released O3 Mini in ChatGPT and to Tier 3 and above API users for the exact same price.

Given that O3 Mini matches or exceeds O1, especially with medium or high reasoning effort, this is an enormous leap in performance per dollar. In the meantime, the rest of OpenAI has been busy shipping. ChatGPT has slowly accelerated from shipping Canvas during the 12 Days of Shipmas last month to shipping recurring Tasks and, most recently, Operator, the hosted virtual agent response to Claude's computer use.

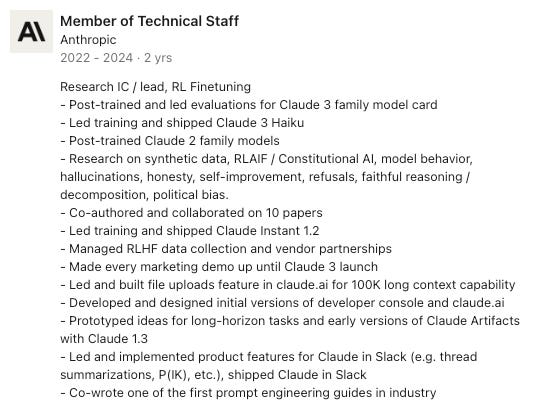

We are very proud to host today's guest, Karina Nguyen, who was at Anthropic for the launch of Claude 3 and wrote the first 50,000 lines of Claude.ai before joining OpenAI to work on the future of what she calls reasoning interfaces. We are very proud to also announce that Karina will be the closing keynote speaker for the second AI Engineer Summit in New York City from February 20th to 22nd.

This is the last call for applications for the AI leadership track for CTOs and VPs of AI. If you are building agents in 2025, this is the single best conference of the year. Our new website now lists our speakers and talks from DeepMind, Anthropic, OpenAI, Meta, Jane Street, Bloomberg, BlackRock, LinkedIn, and more.

Look for more sponsor and attendee information at apply.ai.engineer and see you there. Watch out and take care.

Hey, everyone. Welcome to the Latent Space Podcast. This is Alessio, partner and CTO at Decibel, and I'm joined by my usual co-host, swyx. Hey, and today we're very, very blessed to have Karina Nguyen in the studio. Welcome. Nice to meet you. We finally made it happen. We finally made it happen. First time we tried this, you were working at a different company, and now we're here. Fortunately, you had some time, so thank you so much for joining us.

Karina, your website says you lead a research team in OpenAI creating new interaction paradigms for reasoning interfaces and capabilities like ChatGPT Canvas and most recently ChatGPT Tasks. I don't know, is that what we're calling it? Streaming chain of thought for O1 models and more via novel synthetic model training.

What is this research team? Yeah, I need to like clarify this a little bit more. I think it changed a lot. Like since the last time we launched... So we launched Canvas and it was like the first like project that I was attacking basically. And then...

I think over time I was trying to refine what my team is, and I feel like it's at an intersection of human-computer interaction, defining what the next interaction paradigms might look like with some of the most recent reasoning models, as well as actually trying to come up with novel methods, how to improve those models for certain tasks if you want to. So for Canvas, for example, one of the most common use cases is basically writing and coding.

And we're continually working on, okay, how do we make Canvas coding to go beyond what is possible right now? And that requires us to actually do our own training and coming up with new methods of synthetic data generation. The way I'm thinking about it is that my team is going from very full stack, from training models all the way up to deployment and making sure that we create novel product features for

that is coherent to what ChatGPT can become. There are different types of features like Canvas, Tasks, but all those components, they compose together to evolve ChatGPT into something completely new, I think, in the new year. It's evolving. I like your tweet about that it's kind of modular. You can compose it with the stocks feature, the...

Creative writing feature. I forgot what else. We have a list of other use cases, but we don't have to go into that yet. Can we maybe go back to when you first started working with LLMs? I know you had some early UX prototypes with GPT-3 as well, and kind of like maybe how that has informed the way you build products.

I think my background was mostly like working on computer vision applications for like investigative journalism back when I was like at school at Berkeley. And I was working a lot with like human rights center and like investigative journalists from various media. And that's how I learned more about like AI, like with vision transformers. And at that time, I was working with some of the professors at Berkeley AI Research. Yeah.

There are some Pulitzer Prize winning professors, right, that teach there? No. So it was mostly like reporting for teams like the New York Times, like the AP, the Associated Press. So it was like all in the context of like the Human Rights Center. Got it. Yeah. So that was like in computer vision. And then I saw Chris Olah's work around, you know, like interpretability from Google.

And that's how I found out about Anthropic. And at that time, I was just like,

like I think it was like the year when like Ukraine's war happened and I was like trying to find a full-time job and it was kind of like all got distracted. It was like kind of like spring and I was like very focused on like figuring out like what to do. And then my best option at that time was just like continue my internship at the New York Times and convert to like full-time. At the New York Times, it was just like working on like mostly like

product engineering work around R&D prototypes, storytelling features.

on the mobile experience, so kind of like storytelling experiences. And at that time, we were thinking about how do we employ NLP techniques to scrape some of the archives from the New York Times or something. But then I always wanted to get into AI, and I knew OpenAI for a while, since I was in Berkeley. So I kind of applied to Anthropic just on the website.

And I was rejected the first time, but then at that time they were not hiring for like anything like product engineering, like front-end engineering, which was something that, at that time, I was like interested in. And then there was like a new opening at Anthropic, which was kind of like a front-end engineer. And so I applied and that's how my journey began. But like the earlier prototypes were mostly like, I used like CLIP,

for like fashion recommendation search. So it was like one of those successful projects, I think. And I was like, before even coming to Anthropic, I was like thinking maybe I should just like do my own startup. But I feel like I didn't have like enough confidence and conviction in myself that I could do that. But it was like one of the early like prototypes. And I think Twitter is a good platform for side projects.

That's fantastic. Especially for something visual. Yeah. We'll briefly mention that the Ukrainian crisis actually hit home more for you than most people because you're from Ukraine and you moved here like for school, I guess. Yeah. Yeah. We'll come back to that if it comes up. But then you joined Anthropic, not just as a front-end engineer. You were the first. Is that true? Designer? Yeah.

Yes, I think like I did both product design and front-end engineering together. And like at that time it was like pre-ChatGPT, it was like, I think August 2022.

And that was a time when Anthropic really decided to do more product-related things. And the vision was like, we need to fund research and building product is the best way to fund safety research, which I found quite admirable. So the really first...

product at Anthropic was like Claude in Slack. And it was sunsetted not long after, but like, it was like one of the first, I think I still come back to that idea of like Claude operating inside some of the organizational workplace, like Slack.

And there's something magical in there. And I remember we built like ideas, like summarize the threads, but you can like imagine having automated like ways of like, maybe Claude should like summarize multiple channels every week, custom for what you like or for what you want. And then we built some like really cool features, like tag Claude and then ask it to summarize what happened in the thread, suggest new ideas.

But we didn't quite double down, because you could imagine Claude having access to the files or Google Drive that you can upload in Slack, just connectors, connections in the Slack. Also, the UX was kind of constraining. At that time, I was thinking, oh, we wanted to do this feature, but the Slack interface kind of constrained us from doing that. And we didn't want to be dependent on a platform like Slack.

And then after like ChatGPT came out, I remember the first two weeks,

My manager gave me this challenge, like, can I, like, reproduce kind of, like, a similar interface in, like, two weeks? And one of the early mistakes being in engineering is, like, I said yes. Instead, I should have said, like, you know, it's 2x the time. Sure. And this is how, like, Claude.ai was kind of, like, born. Oh, so you actually wrote Claude.ai as your first job? Yeah, like, I think, like, the first, like...

50,000 lines of code. Yeah. Without any reviews at that time, because there was no one. Yeah, it was like a very small team, like six or seven people, and we were called the deployment team. Yeah. On my end, I actually interviewed for Anthropic around that time, and I was given Claude in Sheets. Oh. And that was my other form factor. I was like, oh yeah, this needs to be in a table so we can just copy-paste, yeah,

and just span it out, which is kind of cool. The other rumor that we might as well just mention this, Raza Habib from Humanloop often says that, you know, there was some, there's some version of ChatGPT in Anthropic. Like you had the chat interface already. Like you had Slack. Why not launch a web UI? Like basically like how did, how did OpenAI beat Anthropic to ChatGPT basically? Yeah.

It seems kind of obvious to have it. I think the GPT model itself came out way before we decided to launch Claude 2 necessarily. And I think at that time, Claude 1.3 had a lot of hallucinations, actually. So I think one of the concerns is I don't think the leadership had the conviction that this is the model that we want to deploy or something. So there was a lot of discussions around that time. But Claude 1.3 was like, I don't know if you...

I played with that, but it was extremely creative and it was really cool. Nice. It's still creative. And you had a tweet recently that you said things like Canvas and Tasks could have happened two years ago, but they were not. Do you know why they were not? Was it too many researchers at the labs not focused on UX? Was it just not a priority for the labs?

Yeah, I come back to that question a lot. I guess like I was working on something similar to like Canvas, but for Claude at that time in like 2023, it was the same similar idea of like a Claude workspace where a human and Claude could have like a shared workspace. And that's Artifacts. No, no, no. This is Claude Projects. I don't know. I think it kind of evolved. I think like at that time I was like in the product engineering team and

And then I switched to like the research team and the product engineering team grew so much. They had their own ideas of like Artifacts and like Projects. So not necessarily, maybe they looked at my like previous explorations, but like, you know, when I was exploring like Claude documents or like Claude workspace, I don't think anybody was thinking about UX as much, but

Or like not many like researchers understood that. And I think the inspiration actually for, I still have like all the sketches, but the inspiration was like from Harry Potter, like Tom Riddle's diary. That was an inspiration, like having Claude writing into the document or something and communicating that. So like in the movie, you write a little bit and then it answers you. Yeah. Okay. Interesting. But that was like only,

in the context of, like, writing, I think Canvas is, like, more... also serves, like, coding, one of the most common use cases. But, yeah, I think, like, those ideas could have happened, like, two years ago. Just, like, maybe...

I don't think it was like a priority at that time. It was like very unclear. I think like AI landscape at that time was very nascent, if that makes sense. Like nobody like, even when I would talk to like some of the designers at that time, like product designers, they were not even thinking about that at all. They did not have like AI in mind and like...

It's kind of interesting. Except for one of my design friends. His name is Jason Yuan. Yeah. Who was thinking about that. And Jason now is at New Computer. Yes. We'll have them on at some point. I had them speak at my first summit. And you're speaking at the second one, which will be really fun. Nice. We'll stay on Anthropic for a bit and then we'll move on to more recent things. I think the other big project that you were involved with was just Claude 3. Just tell us the story. What was it like to launch one of the biggest launches of the year? Yeah, I think like...

I was... So, Claude 3... This is Haiku, Sonnet, Opus all at once, right? Yes. It was the Claude 3 family. I was a part of the post-training fine-tuning team. We only had, like, what, like 10, 12 people involved. And it was really, really fun to, like, work together as friends. So, yeah, I was mostly involved in, like, Claude 3 Haiku post-training side projects.

and then evaluations, like developing new evaluations and literally writing the entire model card. And I had a lot of fun. I think the way you train the model is very different, obviously, but I think what I've learned is that you will end up with, I don't know, 70 models and every model will have its own brain damage.

So it's just like kind of just bugs. Like personality-wise or performance benchmarks? I think every model is very different. And I think like it's like one of the interesting like research questions is like how do you understand like the data interactions as you like train the model? It's like if you train the model on like contradictory data sets,

how can you make sure that there won't be like any like weird like side effects and sometimes you get like side effects and like the learning is that you have to like iterate very rapidly and like have to like debug and detect it and make like address it with like interventions and actually some of the techniques from like software engineering is very like

useful here. It's like, how do you debug code? Yeah, exactly. So I really empathize with this because datasets, if you put in the wrong one, you can basically kind of screw up like the past month of training. The problem with this for me is the existence of YOLO runs. I cannot square this with YOLO runs. If you're telling me like you're taking such care about datasets, then every day I'm going to check in, run evals and do that stuff. But then we also know that YOLO runs exist. Yes. So how do you square that?

Well, I think it's like dependent on how much compute you have, right? So it's like, it's actually a lot of questions and like research is on like, how do you most effectively use the compute that you have?

And maybe you can have like two to three runs that is only like YOLO runs. But if you don't have a luxury of that, like you kind of need to like prioritize ruthlessly. Like what are the experiments that are most important to like run? Yeah. I think this is what like research management is basically. It's like, how do you...

Funding efforts. Yeah. Prioritizing. Take research bets and make sure that you build the conviction and those bets rapidly such that if they work out, you double down on them. Yeah. You almost have to ablate data sets too and do it on the side channel and then merge it in. Yeah. It's kind of super interesting. Tell us more. What's your favorite? So I have this in front of me, the model card. You say constructing this table was slightly painful. Yeah.

Just pick a benchmark and what's an interesting story behind one of them. I would say GPQA was kind of interesting. I think it was like the first, I think we were the first lab, like Anthropic was the first lab to like run. Oh, because it was like relatively new after NeurIPS? Yeah, yeah. Okay. Published GPQA like numbers. And I think one of the things that we learned was that I personally learned about that

any evals, like, some evals are very high variance, and GPQA happened to be a hugely high-variance evaluation. So one thing that we did is run it like five times and take the average. But like the hardest thing about like the

model card is just like none of the numbers are apples to apples. So you actually need to go back to, I don't know, the GPT-4 model card and read the appendix just to make sure that like

the settings were the same as you're running the settings too. So it's like never an apples to apples. Yeah. But it's interesting how like, you know, when you market models as products, like customers don't necessarily know. They're just like, my MMLU is 99. What do you mean? Yeah, exactly. Why isn't there an industry standard harness, right? There's this Eleuther's thing, which it seems like none of the model labs use.

And then OpenAI put out simple-evals and nobody uses that. Why isn't there just one standard way everyone runs this? Because the alternative approach is you rerun your evals on their models. And obviously the numbers, your numbers will be lower. Yeah. And they'll be unhappy. So that's why you don't do that.

I think it operates on an assumption that the models, the next generation of the model or the model that you produce next is going to behave the same. So for example, I think the way you prompt O1 or like Claude 3 is going to be very different from each other.

I feel like there was a lot of like prompting that you need to do to get the evals to run correctly. So sometimes the model will just like output like new lines and the way you'd parse will be like incorrect or something. This has happened with like Stanford, I remember. Like when Stanford had this also like, they were like running benchmarks. Yeah, HELM. And somehow like Claude was like always like not performing well. And that's because like the way they prompted it was kind of wrong. So it's like a lot of like techniques,
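Editor's sketch: the practice mentioned above for a high-variance eval such as GPQA (run it several times and report the average) could look roughly like this. The questions, answers, and function names are hypothetical and only illustrate the arithmetic; this is not Anthropic's harness.

```python
import statistics

def accuracy(predictions, gold):
    """Fraction of questions answered correctly in a single run."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)

def summarize_runs(runs, gold):
    """Mean accuracy across repeated runs, plus the sample standard deviation."""
    scores = [accuracy(run, gold) for run in runs]
    spread = statistics.stdev(scores) if len(scores) > 1 else 0.0
    return statistics.mean(scores), spread

# Hypothetical data: five sampled runs over the same four multiple-choice questions.
gold = ["A", "C", "B", "D"]
runs = [
    ["A", "C", "B", "A"],
    ["A", "B", "B", "D"],
    ["A", "C", "B", "D"],
    ["B", "C", "A", "D"],
    ["A", "C", "D", "D"],
]

mean_acc, spread = summarize_runs(runs, gold)
print(f"mean accuracy = {mean_acc:.2f}, std across runs = {spread:.2f}")
```

Reporting the spread next to the mean makes it easier to tell whether a gap between two models is larger than the run-to-run noise.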

It's just very hard because nobody even knows. Has that gone away with chat models instead of just raw completion models? Yeah, I guess each eval also can be run in a very different way. Sometimes you can ask the model to output in XML tags, but some models are not really good at XML tags. So it's like, do you change the formatting per model or do you run the same format across all models?
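Editor's sketch: the parsing problem described above (stray newlines, or a model that does not follow the XML-tag format) is often handled with a lenient answer extractor. The tag name `answer` and the fallback rule are assumptions made for illustration, not any lab's actual harness.

```python
import re

def extract_answer(completion, tag="answer"):
    """Return the text inside the first <tag>...</tag> pair.

    If the tags are missing, fall back to the last non-empty line,
    so leading or trailing newlines do not break the parse.
    Returns None when the completion is empty or only whitespace.
    """
    safe_tag = re.escape(tag)
    pattern = rf"<{safe_tag}>\s*(.*?)\s*</{safe_tag}>"
    match = re.search(pattern, completion, flags=re.DOTALL | re.IGNORECASE)
    if match:
        return match.group(1).strip()
    lines = [line.strip() for line in completion.splitlines() if line.strip()]
    return lines[-1] if lines else None

# Hypothetical completions from two differently behaved models.
tagged = "\n\nLet me think.\n<answer>\n B \n</answer>\n"
untagged = "The binding site rules out A and C.\n\nB\n\n"

print(extract_answer(tagged), extract_answer(untagged))
```

Whether to use a fallback at all is the trade-off raised in the conversation: a lenient parser is fairer to models that are weak at XML, but it also means the format is no longer identical across models.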

And then like the metrics themselves, right? Like maybe, you know, accuracy is like one thing, but maybe you care about like some other metrics, like F-score or like some other like things, right?
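Editor's sketch: a small example of why the choice between accuracy and F-score matters. The labels are made up; the point is only that a model which always predicts the majority class can look fine on accuracy and score zero on F1.

```python
def accuracy(preds, gold):
    """Share of predictions that exactly match the gold labels."""
    return sum(p == g for p, g in zip(preds, gold)) / len(gold)

def f1_score(preds, gold, positive):
    """F1 for one positive class: harmonic mean of precision and recall."""
    tp = sum(p == positive and g == positive for p, g in zip(preds, gold))
    fp = sum(p == positive and g != positive for p, g in zip(preds, gold))
    fn = sum(p != positive and g == positive for p, g in zip(preds, gold))
    if tp == 0:
        return 0.0  # no true positives: precision and recall are zero or undefined
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

# Hypothetical imbalanced task: only 2 of 10 examples are "yes".
gold = ["yes", "no", "no", "no", "no", "no", "no", "no", "yes", "no"]
always_no = ["no"] * 10

acc = accuracy(always_no, gold)
f1 = f1_score(always_no, gold, positive="yes")
print(f"accuracy = {acc:.2f}, F1 = {f1:.2f}")
```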

You know, it's like hard. I don't know. And talking about O1 prompting, we just had an O1 prompting post on the newsletter, which I think was... Apparently it went viral within OpenAI. Yeah. I don't know. I got pinged by other OpenAI people. They were like, is this helpful to us? I'm like, okay. I think it's like maybe one of the top three most read posts now. And I didn't write it. Exactly. Anyway.

What are your tips on O1 versus like Claude prompting, or like what are things that you took away from that experience? And especially now, I know that with 4o for Canvas, you've done RL after on the model. So yeah, just general learnings on how to think about prompting these models differently. Yeah.

I actually think like O1, I did not even harness the magic of like O1 prompting, but like one thing that I found is that like, if you give O1 like hard constraints of like what you're looking for, basically the model will have a much easier time to like kind of like select the candidates and match like the candidate that most fulfills the criteria that you gave. Yeah.

And I think there's a class of problems like this that O1 excels at. For example, if you have a question, like a bio question on like some, or like in chemistry, right? Like if you have like very specific criteria with the protein or like some of the chemical bindings or something, like then the model will be really good at like determining the exact candidate that will match the certain criteria. Yeah.

I have often thought that we need a new IFEval for this because this is basically kind of instruction following, isn't it? Yes. But I don't think IFEval has like multi-step IFEval. Yeah. So that's what basically I use AI News for. I have a lot of prompts and a lot of steps and a lot of criteria and O1 just kind of checks through each kind of systematically. And we don't have any evals like that.

Yeah. Does OpenAI know how to prompt O1? I think that's kind of like the... You know, Sam is always talking about incremental deployments and kind of like having people getting used to it. When you release a model, you obviously do all the safety testing, but...

But do you feel like people internally know how to get 100% out of the model? Or are you also spending a lot of time learning from the outside on how to better prompt O1 and all these things? Yeah, I certainly think that we learn so much from external feedback too on how people use O1. I think a lot of people use O1 for really hardcore coding questions.

I feel like I don't fully know how to best use O1, except for like, I use O1 to just like do some like synthetic data explorations, but that's it. Do people inside of OpenAI, once the model is coming out, do you get like a company-wide memo of like, hey, this is how you should try and prompt this, especially for people that might not be close to it during development, you know, or I don't know if you can share anything, but I'm curious how internally this...

These things kind of get shared. I feel like I'm like in my own little corner in like research. I don't really like to look at some of the Slack channels. It's very, very big. So I actually don't know if something like this exists. It probably exists, because we need to share with like customers, you know, like some of the guides on like how to use this model. So probably there is something.

I often say this, the reason that AI engineering can exist outside of the model labs is because the model labs release models with capabilities that they don't even fully know because you never train specifically for it. It's emergent. And you can rely on basically crowdsourcing the search of that space or the behavior space to the rest of us. Yeah. So like you don't have to know. That's what I'm saying. Yeah. I think,

I think an interesting thing about O1 is that it's really, for average human, sometimes I don't even know whether the model produced the correct output or not. It's really hard for me to verify. Even hard STEM questions, I don't know. If I'm not an expert, I usually don't know. So the question of alignment is actually more important for these complex reasoning models.

to like, how do we help humans to like verify the outputs of these models?

It's quite important. And I feel like, yeah, like learning from external feedback is kind of cool. For sure. One last thing on Claude 3, you had a section on behavioral design. Yes. Anthropic's very famous for the HHH goals. What were your insights there? Or, you know, maybe just talk a little bit about what you explored. Yeah. I think like behavioral design is like really cool, I'm glad that I made it like a section around this. And it's like really cool. I think like, like you weren't going to publish one and then you insisted on it or what? Like,

I just like put the section inside it and like, yeah, Jared, my like one of my most favorite researchers was like, yeah, that's cool. Let's do that, I guess. Yeah, like nobody had this like term of like behavioral design necessarily for the models. It's kind of like a

new little field of like extending like product design into like the model design, right? Like, so how do you create a behavior for the model in certain contexts? So as for example, like in Canvas, right? Like one of the things that we had to like think about was like, okay, like now the model enters like more collaborative environment, more collaborative contexts.

So like, what's the most appropriate behavior for the model to act like as a collaborator? Should it ask like more follow-up questions? Should it like change? What's the tone should be? Like, what is the collaborator's tone? It's different from like a chat, like conversationalist versus like collaborator. So how do you shape the persona and the personality around that? It has like some philosophical questions too, like...

Yeah, behavioral. I mean, like, I guess, like, I can talk more about, like, the methods of, like, creating the personality. Please. It's the same thing as like you would create like a character in a video game or something. It's kind of like charisma, intelligence, wisdom. What are the core principles? Helpful, harmless, honest. Yeah. And obviously for Claude this was much easier than, I would say, for ChatGPT. For Claude, it's like baked into the mission, right? It's like honest, harmless, helpful.

But the most complicated thing about like the model behavior or like the behavioral design is that like sometimes two values would contradict each other. I think this happened in Claude 3. One of the main things that we were thinking about was like, how do we balance this like honesty versus like harmlessness or like helpfulness? It's like, we don't want the model to always like refuse even like innocuous queries, like some like creative writing prompts,

but also you don't want the model to be harmful or something. So it's like there's always a balance between those two. And it's more like art than science necessarily. And this is what dataset craft is, it's like more of an art than a literal science. You can definitely do like empirical research on this.

But it's actually like, this is the idea of synthetic data. If you look back to a constitutional AI paper, it's around how do you create completions such that you would agree to certain principles that you want your model to agree on. So it's like, if you create the core values of the models, how do you decompose those core values into specific scenarios? So how does the model need to express that?

its honesty in a variety of kind of like scenarios. And this is where like generalization happens.

when you craft the persona of the model. Yeah. It seems like what you described, behavior modification or shaping as a side job that was done. I mean, I think Anthropic has always focused on it the first and the most. But now it's like every lab has a sort of vibes officer. For you guys, it's Amanda. For OpenAI, it's roon. Yeah.

And then for Google, it's Steven Johnson and Raiza, who we had on the podcast. Do you think this is like a job? Like it's like a, like every company needs a tastemaker? I think the model's personality is actually the reflection of the company or the reflection of the people who create that model. So like for Claude,

I think Amanda was doing a lot of Claude character work and I was working with her at the time. But there's no team, right? Claude character team. Now there's a little bit of a team. Isn't that cool? But before that, there was none. I think actually with Claude 3, we kind of doubled down on the feedback from Claude 2. We didn't even think, but people said Claude 2 is so much better and...

at writing and has a certain personality, even though it was unintentional at all. And we did not pay that much attention. We didn't know even how to productionize this property of the model having a better personality. Until with Claude 3, we kind of had to double down because we knew that we would launch in chat. We wanted to collect

Claude honesty is really good for enterprise customers. So if you kind of wanted to make sure the hallucinations went down, like factuality would go up or something, we didn't have a team until or after Claude 3, I guess. Yeah. I mean, it's growing now. I think everyone's taking it seriously. I think on OpenAI, there was a team called Model Design. It's Joanne, the PM. She's leading that team. And I work very closely with those teams. We were working on actually writing improvements that we did with ChatGPT

last year, and then I was working on like this collaboration, like how do you make ChatGPT act as a collaborator for like Canvas. And then, yeah, we worked together on some of the projects. I don't think it's publicly known, his actual name, other than roon, but he's mostly doxxed. Yeah.

We'll beep it and then people can guess. Do we want to move on to OpenAI and some of the recent work, especially you mentioned Canvas. So the first thing about Canvas is like, it's not just a UX thing. You have a different model in the backend, which you've

post-trained on O1 preview distilled data, which was pretty interesting. Can you maybe just run people through, you come up with a feature idea maybe, then how do you decide what goes in the model, what goes in the product, and just that process? Yeah, I think the most unique thing about ChatGPT Canvas was that it was also a team formed out of the air. So it was like July 4th or something during the break. Like Independence Day, they just like...

Okay. I think there was some like company break or something. And I remember I was just like taking a break. And then I was like pitching this idea to like Barret Zoph, who was my manager at that time.

I was like, I just want to like create this like canvas or something. And I really didn't know how to like navigate OpenAI. It was like my first, like, I don't know, like first month at OpenAI. And I really didn't know how to like navigate, how do I get product to work with me? Or like some of the ideas, like some of the things like this was like, so I'm really grateful for like,

Actually, Barret and Mira, who helped me to, like, staff this project, basically. And I think that was really cool. And it was, like, this 4th of July, and, like, Barret was like, yeah, actually, who's, like, an engineering manager is like, yeah, we should, like, staff this project with, like, five, six engineers or something. And then Karina can be, like, a researcher on this project. And I think, like...

This is how the team was formed. This was kind of like out of the air. And so like, I didn't know anyone there at that time, except for Thomas Dimson. He did like the first like initial like engineering prototype of the canvas and it kind of like ripped off. But I think the first, we learned a lot on the way how to work together as product and research. And I think this is one of the first projects at OpenAI where research and product are

work together from the very beginning. And we just made it like a successful project in my opinion, because like designers, engineers, PM and research team were all together and we would like push back on each other. Like if like it doesn't make sense to do it on a model side, like we had to like collaborate with like applied engineers to like make sure this is being handled on the applied side.

But the idea is you can only go so far with a prompted baseline. Prompted ChatGPT was kind of like the first thing that we tried. It was like a canvas as a tool or something. So how do we define the behavior of the canvas? But then we found a bunch of different edge cases that we wanted to fix.

And the only way to fix some of these edge cases is actually through post-training. So what we did was actually retrain the entire 4o plus our canvas stuff. And there are like two reasons why we did this. The first one is that we wanted to ship this as a beta model

in the drop-down menu. We could, like, rapidly iterate on users' feedback as we ship it and not go through the entire, like, integration process into, like, this, like, new one model or something, which took some time, right? So, like, from beta to, like, GA, it took,

I think, three months. So we kind of wanted to ship our own model with that feature to learn from the user feedback very quickly. So that was one of the decisions we made. And then with Canvas itself, we just had a lot of different behavioral... Again, it's behavioral engineering. It's various behavioral craft around when does Canvas need to write comments?

When does it need to update or edit the document? When does it need to rewrite the entire document versus edit a very specific section that the user asks about? And when does it need to trigger the canvas itself? It was one of those behavioral engineering questions that we had. At that time, I was also working on writing quality.

So that was like the perfect way for us to literally both teach the model how to use Canvas, but also improve writing quality, since writing was one of the main use cases for ChatGPT. So I think that was like the reasoning around that. There's so many questions. Oh my God. Quick one. What does improve writing quality mean? What are the evals? What are the evals? Yeah, so...

The way I'm thinking about it is like there are two directions. The first direction is like, how do you improve the quality of the writing for the current use cases of ChatGPT? And most of those use cases are mostly like non-fiction writing. It's like email writing or like some of the, maybe blog posts, cover letters is like one of the main use cases. But then the second one is like, how do we teach the model to literally think more

creatively or like write in a more creative manner such that it will just create novel forms of writing. And I think the second one is much more of a longer-term research question, while the first one is more like, okay, we just need to improve data quality for the writing use cases

that between the models are. It is a more straightforward question, but the way we evaluated the writing quality, actually I worked with Jan's team on the model design. So they had a team of like model writers and we would work together and it's just like a human eval. It's like internal human eval where we would just- Always like that. Yeah, on the prompt distribution that we cared about, like we want to make sure that the models that we like use-

that we trained were always like better or something. Yeah. So like some test set of like a hundred prompts that you want to make sure you're good on. I don't know how big the prompt distribution needs to be because you are literally catering to everyone. Right. Yeah. I think it was much more opinionated way of like improving writing quality because we worked together with like model designers to like come up with like core principles of what makes

this particular writing good? Like what does make email writing good? And we had to like craft like some of the literally like rubric on like what makes it good. And then make sure during the eval, we check the marks on this like rubric. Yeah. That's what I do. Yeah. That's what school teachers do. Yeah. It's really funny. Like, yeah, that's exactly how we grade essays. Yes. Yeah. I guess my question is why,

when do you work the improvements back in the model? So the Canvas model is better at writing. Why not just make the core model better too? So for example, I built this small podcasting thing for a podcast and I have the 4o API and I asked it to write a write-up about the episode based on the transcript and then I've done the same in Canvas. The Canvas one is a lot better. Like the one from the raw 4o, the podcast delves and I was like, no, I'm not delving the third word.

Why not put them back in 4o core? Or is there just like... I think you put it back in the core, no? Yeah, so like, so the 4o canvas now is the same as 4o? Yeah, you must have missed that update. Yeah, what's the process to... But I think the models are still a little bit

different. It's just like an A/B test almost, right? To me it feels, I mean, I've only tried it like three times, but the canvas output feels very different than the API output. Yeah, yeah. I think there's always like a difference in the model quality. I would say the original beta model that we released with canvas was actually much more creative than even right now when I use like 4o with canvas. I think it's just like the complexity of like

the data and the complexity of the... It's kind of like versioning issues, right? It's like, okay, your version 11 will be very different from version 8, right? Even though the stuff that you put in is like the same or something. It's a good time to say that I have used it a lot more than three times. I'm a huge fan of canvas. I think it is, uh...

It's weird when I talk to my other friends, they don't really get it yet or they don't really use it yet. I think because it's maybe sold as writing help when really it's the scratch pad. Yeah, what are the core use cases? Oh yeah, I'm curious. Literally drafting anything. I want to draft copy for my conference that I'm running. I'll put it there first and then it'll just have the canvas up and I'll just say what I don't like about it and it changes. I will maybe edit...

stuff here and paste it in. So for example, I wanted to draft a brainstorm list of reasons or signs that you may be an NPC, just for fun, just like a blog post for fun. Nice. And I was like, okay, I'll do 10 of these and then I want you to generate the next 10. So I wrote 10, I pasted it into ChatGPT, and it generated the next 10, and they all sucked.

All horrible. But it also spun up the canvas with the blog post. And I was like, okay, self-critique why your output sucks and then try again. And it just iterates on the blog post with me as a writing partner. And it is so much better than, I don't know, like intermediate steps. I was like, that would be my primary use case. It's like literally drafting anything.

I think the other way that I'll put it, I'm not putting words in your mouth. This is how I view what Canvas is and why it's so important. It's basically...

An inversion of what Google Docs wants to do with Gemini. So Google Docs is on the main screen and then Gemini is on the side. And what ChatGPT has done is do the chat thing first and then the docs on the side. But it's kind of like a reversal of what is the main thing. Like Google Docs starts with the canvas first that you can edit and whatever. And then maybe sometimes you call in the AI assistant. But ChatGPT, what you are now is you're kind of AI first with the side output being Google Docs.

I think we definitely want to improve like the writing use case in terms of like, how do we make it easier for people to format or like do some of the editing? I think there is still a lot of room for improvement, to be honest. I think the other thing is like coding, right? I feel like one of the things that we're doubling down on is actually like executing code inside the canvas. And there's a lot of questions like, how do we evolve this? It's

kind of like an IDE for both. And I feel like this is where I'm coming from: ChatGPT evolves into this blank interface, which can morph itself into whatever you try. Like the model should try to like derive your true intent

and then modify the interface based on your intent. And then if you like writing, it should become like the most powerful, like writing IDE possible. If it's like coding, it should become like a coding IDE or something. I think it's a little bit of an odd decision for me to call those two things the same product name. Mm-hmm.

Because they're basically two different UIs. One is Code Interpreter++ and the other one is Canvas. I don't know if you have other thoughts on Canvas. No, I'm just curious, maybe some of the harder things. So when I was reading, for example, forcing the model to do targeted edits versus like a full rewrite, it sounds like it was like really hard. In the

AI engineer mind, maybe sometimes it's like, just pass one sentence in the prompt. It's just going to rewrite that sentence, right? But obviously it's harder than that. What are maybe some of the like hard things that people don't understand from the outside about building products like this? I think it's always hard with any new like product feature, like canvas or tasks or like any other new features, that you don't know how people

would use this feature. And so how do you even build evaluations that would simulate how people would use this feature? And it's always really hard for us. Therefore, we try to lean on iterative deployment of this

in order to learn from user feedback as much as possible. Again, we didn't know that code diffs were very difficult for a model, for example. Again, it's like, do we go back to fundamentally improve code diffs as a model capability? Or do you do a workaround where the model will just rewrite the entire document, which yields higher accuracy?

And so those are like some of the decisions that we had to like make. Yeah, how do you like raise the bar on the product quality, but also make sure the model quality is also a part of it? And like what kind of like trade-offs you're okay to do. Again, I think it's like a new way of product development, it's more

like product research, model training and like product development go like together hand in hand. This is like one of the hardest things, like defining the entire like model behavior. I think just like, there's so many edge cases that might happen, especially when you like do Canvas with like other tools, right? Like Canvas plus DALL-E, Canvas plus search. If you like select a certain section and then like ask for search, like

how do you build such evals? Like what kind of like features or like behaviors that you care the most about? And this is how you build evals. You tested against every feature of ChatGPT? No. Oh, okay. I mean, I don't think there's that many that you can... Right. It will take forever. But it's the same, the decision boundary between like...

Python ADA, Advanced Data Analysis, versus Canvas is one of the trickiest decision boundary behaviors that we had to figure out. How do you derive the intent from the human user query? Yeah. And how do I say this?

Deriving the intent, meaning does the user expect canvas or some other tool, and then making sure that the response maximally matches what the intent was, is actually still one of the hardest problems. Especially with agents, right? You don't want agents to go for like...

five minutes and do something in the background and then come back with some mid answer that you could have gotten from a normal model or the answers that you didn't even want because it didn't have enough context. It didn't follow up correctly. You said the magic word. We have to take a shot every time you say it. You said agents. Agents, yeah. So let's move to Tasks. You just launched Tasks. What was that like? What was the story? I mean, it's your baby, so...

Now that I have a team, actually, tasks was purely like my resident's project. I was mostly a supervisor. So I kind of like delegated a lot of things to my resident. His name is Vivek.

And I think this is like one of the projects where I learned management, I would say. Yeah. But it was really cool. I think it's a very similar model. I'm trying to replicate the Canvas operational model. How do we operate with product people or like product applied orgs with research? And the same happened. I was trying to replicate like the methods and replicate the operational process with tasks. And actually tasks was developed in less than like two months. So if Canvas took like, I don't know, four months, then tasks took like two months. And I think again, like it's kind of a very similar process of like, how do we build evals? You know, some people like ask for like reminders in actual ChatGPT, but then like,

Even though they know it doesn't work. Yeah, it doesn't work. So there is some demand or desire from users to do this. And actually, I feel like task is a simple feature, in my opinion. It's something that you would want from any model, right? But then the magic is when... Actually, because the model is so general, it knows...

how to search or like use canvas or like create sci-fi stories and create Python puzzles. When coupled with tasks, it actually becomes like really, really powerful. It was like the same ideas of like, how do we shape the behavior of the model? Again, we shipped it as a beta model in the model dropdown. And then we are working towards like making that feature integrated in like the core model. So I feel like the principle is like everything should be like in one model, but

Because of some of the operational difficulties, it's much easier to deploy as a separate model first to learn from the user feedback and then iterate very quickly and then improve into the core model, basically. Again, this project was also together at the beginning. From the very beginning, designers, engineers, researchers were working all together and

Together with model designers, we were trying to come up with evals, evaluations, and testing and bug bashing. And it's a lot of cool synergy. Evals, bug bashing. I'm trying to distill. I would love a canvas for this. Distill what the ideal product management or research management process is. Start from, do you have a PRD? Do you have a doc that does these things? Yes. And then from PRD, you get funding, maybe? Yeah.

Or like, you know, staffing resources, whatever. Yes. And then prototype, maybe. Yeah. Prototype. I would say like prototype was prompted baseline. Everything starts with like a prompted baseline. And then like we craft like certain like evaluations that you want to like capture. Okay. You want to like measure progress at least with the model. Yeah. And,

And then make sure that evals are good and make sure that the prompted baseline actually fails on those like evals, because then you have something you're allowed to like hill climb on. And then once you start iterating on the model training, it's actually very iterative. So like every time you train the model or you like look at the benchmark, like look at your evals and that goes up, it's like good. But then also you don't want to like

You want to make sure it's not like super overfitting. Like that's where you run on other evals, right? Like intelligence evals and then like... You don't want regressions on the other stuff. Yes. Okay. Is that your job or is that like the rest of the company's job to do?

Mainly my, like, really the job of the people who, like... Because regressions are going to happen and you don't necessarily own the data for the other stuff. What's happening right now is that, like, you basically only update your data sets, right? So it's like you compare on the baseline, you compare, like, the regressions on the baseline model, model training, and then bug bash, and that's about it. Okay.
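The loop described above (start from a prompted baseline, hill climb on feature evals, check other evals for regressions, then bug bash) can be sketched roughly as follows. This is only an illustrative sketch, not OpenAI's actual tooling: the `EvalResult` type, the `review` function, the eval names, the scores, and the tolerance are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    name: str
    baseline: float   # score of the prompted baseline (made-up numbers)
    candidate: float  # score of the newly trained model (made-up numbers)


def review(target_evals, guard_evals, tolerance=0.01):
    """Compare a candidate model to the baseline.

    target_evals: feature evals the team is hill climbing on.
    guard_evals: other evals (e.g. general intelligence) that should not regress.
    tolerance: how much drop on a guard eval is treated as noise (assumption).
    """
    improved = [r.name for r in target_evals if r.candidate > r.baseline]
    regressed = [
        r.name for r in guard_evals if r.candidate < r.baseline - tolerance
    ]
    return {
        "improved": improved,
        "regressed": regressed,
        # Only move on to bug bashing if something improved and nothing regressed.
        "ready_for_bug_bash": bool(improved) and not regressed,
    }


if __name__ == "__main__":
    targets = [EvalResult("canvas_trigger", baseline=0.62, candidate=0.81)]
    guards = [
        EvalResult("general_intelligence", baseline=0.90, candidate=0.895),
        EvalResult("coding", baseline=0.70, candidate=0.65),
    ]
    print(review(targets, guards))
```

In this made-up run the feature eval improves but the hypothetical `coding` guard eval drops by more than the tolerance, so the candidate would go back for another training iteration rather than on to bug bashing.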

Actually, I did the course with Andrew Ng, where there was like one little lesson around this. Okay. I haven't seen it. Product research. You tweeted a picture with him and it wasn't clear if you were working on a course. I mean, it looked like the standard course picture with Andrew Ng. Yes. Okay. There was a course with him. What was that like working with him? No, I'm not working with him. I just like did the course with him. Yeah.

How do you think about the tasks? So I started creating a bunch of them. Like, do you see this as being, going back to like the composability, like composable together later? Like you're going to schedule one task that does multiple tasks chained together. What's the vision? I would say task is like a foundational

module. Obviously, it should generalize to all sorts of behaviors that you want. Sometimes I see people have three tasks in one query. And right now, I don't think the model handles this very well. I think that ideally, we learn from the user behavior

And ideally, the model will just be more proactive in suggesting of like, oh, I can either do this for you every day because I've observed that you do that every day or something. So it's like more becomes like a proactive behavior. Yeah.

I think right now you have to be more explicit, like, oh yeah, like every day, like remind me this. But I think like, ideally, the model will always think about you in the background and like kind of suggest, okay, like I noticed you've been reading

these particular like Hacker News articles. Maybe I can try to suggest them to you like every day or something. So it's like, it's just like much more like of a natural like friend, I think. Well, there is an actual startup called Friend that is trying to do that. Yes. We'll have, we'll interview Avi at some point. But like, it sounds like the guiding principle is just what is useful to you. It's a little bit B2C, you know, is there any B2B push at all? Or?

Or you don't think about that? I personally don't think about that as much, but I definitely feel like B2B is cool. Again, I come back to like Claude in Slack. It's like one of the first interfaces where the model was operating inside your organization. It would be very cool for the model to handle, to become a productive member of your organization. And then either even process, like I

right now, like, I'm thinking, like, processing like user feedback. I think it'd be very cool if the model would just like start doing this for us and like we don't have to hire a new person just for this or something. And like you have like very simple like data analysis, like data analytics. So like how this feature is, like, do you do this analysis yourself or do you have a data science team that tells you insights? I think there are some data scientists. Okay.

I've often wondered, I think there should be some startup or something that does automated data insights. Like I just throw you my data, you tell me. Yeah, exactly. Because that's what a data team at any company does. Right. Which is just give us your data, we'll like make PowerPoints. Yeah. Yeah, that'd be very cool. I think that's a really good vision. You had thoughts on agents in general. There's some more proactive stuff. You actually had tweeted a definition, which is...

Kind of interesting? I did. Well, I'll read it out to you. You tell me. If you still agree with yourself. This is five days ago. Agents are a gradual progression of tasks, starting off with one-off actions, moving to collaboration, ultimately fully trustworthy long horizon. I know it's uncomfortable to have your tweets read to you. I have had this done to me.

Ultimately, fully trustworthy long horizon delegation in complex environments like multiplayer, multi-agents. Tasks and canvas fall within the first two. What is the third one? One of my weaknesses is I like writing long sentences. I feel like I need to learn how to... That's fine. Is that your definition of agents? Like...

What are you looking for? I'm not sure if this is my definition of agents, but I feel like it's more like how I think. It makes sense, right? Like I feel like for me to like trust an agent with my passwords or my credit card, I actually need to build trust with that agent that it will handle my tasks correctly and reliably.

And the way I would go about this is how I would naturally like collaborate with other people. Is it like we first, even if it's any project, right? Like we first came, when we first come, like we don't even know each other. Like we don't know how each other's like working style, like what I prefer, what do they prefer? How do they prefer to communicate, et cetera, et cetera. So like you spend like the first, like, I don't know, like two weeks to just like learn their style of working, right?

And then like over time you adapt to their working style and then this is how you create the collaboration. And then like at the beginning, you don't have much trust. So like, how do you build more trust? Especially like, it's the same thing as like with a manager, right? Like it's like, how do you build trust with your manager? What does he need to know about you? What do you need to know about them? Over time, as you build trust and trust builds either through collaboration, which is why I feel like

building Canvas was kind of like the first steps towards like more collaborative agents. I think with humans, like you can, you should need to show a consistent effort to each other, like consistent effort that you care about each other is that you like work together very well or something. So consistency and like collaborations, like what creates trust. And then, you know,

I will naturally try to delegate tasks to a model because I know the model will not fail me or something. So it's kind of like building out like the intuition for the form factor of like new agents. Because sometimes I feel like a lot of researchers or like people in AI community are like so into like, yeah, agents delegate everything like blah, blah. But like on the way towards that, I think like,

collaboration is actually one of the main roadblocks or milestones to get over. Because then you will learn some of the implicit preferences that would help you, that would help towards like this full delegation model. Yeah, trust is very important. I have an AGI working for me and we're still working on the trust issues. Okay.

We are recording this just before the launch of Operator. The other side of agents that is very topical recently is computer use. Anthropic launched computer use recently. You know, you're not saying this, but OpenAI is rumored to be working on things. And like a lot of labs are like exploring this, like sort of driving a computer generally. How important is that for agents?

I think it would be one of the core capabilities of agents. Yeah, computer using, or agents using desktop or like your computer.

It's, like, the delegation part. Like, when you might want to, like, delegate an agent to, like, order a book for me or, like, order a flight or, like, search for a flight and then order things for me. And I feel like this idea was flying around, like, for a long time since at least, like, 2022 or something. And, like, finally we are here. It's just, like, there's a lot of, like, lag between idea and, like, full execution, on the order of, like, two to three years. Yeah.

The vision models had to get better. Yeah. A lot better. The perception and something. But I think like it's really cool. I feel like it has like implications for like consumers, definitely like delegation. But again, like I think like latency is like one of the most important factors here. It's like you want to make sure that the model correctly understands what you want. And then if it doesn't understand or if it doesn't know like full context, it should like ask a follow-up question and then like use that to perform the task. Yeah.

Like the agent should know if it has enough information to complete the task at the maximal, if it's a maximal success or not. And I think this is like still an open kind of like research question, I feel like. Yeah. And the second idea is that like, I think it also enables new class of like research questions of like computer use agents,

Like, can we use it in RL? Right? Like, this is kind of, like, very cool, like, nascent area of, like, research. What's one thing that you think by the end of this year people will be using computer use agents a lot for? I don't know. It's really hard to predict. Um...

Maybe for coding? I don't know. For coding? I think right now with Canvas, we are thinking about this paradigm of real-time collaboration to asynchronous collaboration. So it would be cool if I can just delegate to a model, like, okay, can you figure out how to do this feature or something? And then the model can just test out that feature in its own virtual environment or something.

I don't know, like maybe this is a weird idea. Obviously, there will be a lot of use cases around like consumers, consumer use cases like, hey, like shop for me or something. I was going to say everyone goes to booking plane tickets. That's like the worst example because you only book plane tickets, what, two or three times a year, you know? Or like concert tickets. I don't know. Concert tickets, yeah. Like Taylor Swift. I want a Facebook marketplace bot that just scrolls Facebook marketplace for free stuff. Yeah. And then it's cool and get it. Yeah.

I don't know. What do you think? I have been very bearish on computer use because they're slow. They're expensive. They're imprecise. Like the accuracy is horrible. Still, even with Anthropic's new stuff, I'm really waiting to see what OpenAI might do to change my opinions. And really what I'm trying to do is like January last year versus December last year, I changed a lot of opinions. What am I wrong about today? And computer use is probably one of them where I'm like, I don't think...

I don't know if by end of the year we'll still be using them. Will my ChatGPT, like every GPT instance, will they have a virtual computer? Maybe? I don't know. Coding, yes. Because he invested in a company that does that for the code sandboxes. There are a bunch of code sandbox companies. E2B is the name. But then in browsers, yes. Computer use is like coding plus browsers plus everything else. There's a whole operating system. And it's very like you have to be pixel precise. You have to OCR. I think OCR is basically solved.

But like pixel precise and like understand the UI of what you're operating. And like, I don't know if the models are...

There you go. Yeah, yeah. Two questions. Like, do you think the progress of, like, mini models, like, O3 mini or, like, O1 mini, I guess, like, it came back to, like, Claude 3 Haiku, Claude Instant 1.2, like, this, like, gradual progression of, like, small models becoming really powerful, which are also, like, very fast. Like, I'm sure, like, the computer use agents, like, would be able to, like...

with those small models, that will solve some of the latency issues, in my opinion. I think in terms of other operating systems, I think a lot about it these days is we're entering this task-oriented operating system or something where also a generative OS. In my opinion, people in a few years will click on websites more

I want to see the plot of like website clicks over time. But then my prediction is like it will go down and like people's access to the internet will be through the model's lens. Either you see what the model's doing or you don't see what the model's doing on the internet. Yeah. I think my personal benchmark for computer use this year is expense reports. So I have to do my expense report every month. Oh yeah. Yeah.

But what you need to do... So, for example, I expense a lunch. I have to go back on the calendar and see who I was having lunch with. Then I need to upload the receipt of the lunch. And I need to tag the person in the expense report, blah, blah, blah. It's very simple on a task-by-task basis. But you have to go to every app that I use. You have to go to the Uber app. You have to go to the camera roll to get the photo of the receipt, all these things. You cannot actually do it today. Right.

But it feels like a tractable problem. You know that probably by the end of the year we should be able to do it. Yeah, this reminds me of like the idea of you kind of want to show to computer use agents how you would want, how you want or how you like...

booking your flights. It's kind of like a few shot demonstrations of like, maybe there is a more efficient way that you do things that the model should learn to do it in that way. And so it's kind of like, again, comes back to like personalized tasks too. It's like right now, task is just like very rudimentary, but in the future, tasks should become like much more personalized for your preferences. Yeah.

Okay. Well, we mentioned that. I'll also say that I think one takeaway I got from this conversation is that ChatGPT will have to integrate a lot more with my life. Like you will need my calendar. You will need my email. Yes. For sure. And maybe you use MCP. I don't know. Have you looked at MCP? No, I haven't. It's good. It's got a lot of adoption. Okay. Anything else that we're forgetting about or like maybe something that people should use more? Yeah. I don't know. Before we wrap on like the OpenAI side of things.

I think like the search product is kind of cool, like ChatGPT search. I think this idea of like, you know, like right now I'm thinking a lot about like, you know, the magic of ChatGPT when it first came out was like, you know, you ask something, any like instruction, and then like it would like follow the instruction that you gave to a model, like write a poem and it will give you a poem. But I think like the magic of the next generation of ChatGPT is like actually, and we are like, we are marching towards that. It's like,

When you ask a question, it's not just going to be in the text output. The ideal output might be like in some form of like a React app on the fly or something. So like this is happening with like search, right? Like give me like Apple stock and then it gives you the chart and gives you like this like generative UI. And I feel like this is what I mean by like

the evolution of ChatGPT becomes like more of a generative OS with a task orientation or something. So it's like, and then UI will adapt to what you like. So like, if you really like 3D visualizations, I think the model should give you as much visualization as possible. Like, you know, if you really like certain way of like the UIs, like maybe you like round corners or I don't know, it's just like some color schemes that you like, it's just like the UI becomes like more dynamic and like,

becomes like a custom, custom model, like a personal model, right? Like from a personal computer to like a personal model, I think. Yeah. Takes overall: you are one of the rare few people, actually maybe not that rare, to work at both OpenAI and Anthropic. Not anymore. Yeah. Cultural difference, what are general takes that only people like you see?

I love both places. I think I've learned so much at Anthropic and I'm really, really grateful to the people and I'm still like friends with a lot of people there. And I was really sad when John left OpenAI because I came to OpenAI because I wanted to work with him the most or something. What's he doing now? But I think it changed a lot. So I think like when I first joined Anthropic, there were like, I don't know, 60, 70 people. When I left, there were like 700 like people. So it's like a massive like growth there.

OpenAI and Anthropic are different in terms of more like maybe product mindset. Maybe OpenAI is much more willing to take some of the product risks and explore different bets. And I think Anthropic is much more focused. And I think it's fine. They have to prioritize, but they definitely double down on enterprise maybe more than consumers or something.

I don't know, it's just like some of the product mindsets might be different. I would say like research, I've enjoyed like both like research cultures, both in Anthropic and like OpenAI. I feel like they're more, on a daily basis, I feel like it's more similar than different. I mean, no surprise. Like how you run experiments, it's kind of like very similar. I'm sure the Anthropic, I mean, you know, Dario used to be VP Research, right? So he set the culture at OpenAI. So yeah, it makes sense. Yeah.

Maybe quick takes on people: you mentioned Barret, you mentioned Mira. What's one thing you learned from Barret, Mira, Sam, maybe? Something like that. Like one lesson that you would share with others.

I wish I like worked with them way longer. I think what I've learned from Mira is actually her like interdisciplinary mindset. She's really good at like connecting dots between like product and like kind of balancing like product research and like create this like comprehensive, like coherent story. Because sometimes like there are like researchers who like really hate doing product and there are researchers who really love doing product. And it's like,

kind of dichotomy between two and also like safety is like a part of this process. So kind of you kind of want to like create this coherent, like think from like systems perspective, like think of a bigger picture. And I think I learned a lot from her on that. I definitely feel like I have much more creative freedom at OpenAI and that's because the environment that the leaders set is

like enables me to do that. So it's like, if I have an idea, if I want- Propose it. Yeah, exactly. On your first month. There's like more like creative freedom and like resource reallocation, especially in research is like being adaptable to like new technologies and like change your views based on like empirical results.

or kind of like changed research directions. I've seen a lot of like, sometimes I've seen researchers who would just like get stuck on the same directions for like two to three years and it would never like work out or something, but they would still be like stubborn. So it's like adaptability to like new directions and like new paradigms is kind of like,

one of those things.

Is this a Barret thing, or is it a general culture? General kind of culture, I think. Cool.

Yeah, and just to wrap up, we usually have a call to action. Founders usually want people to work at their companies. Do you want people to give you feedback? Do you want people to join your team?

Oh yeah, of course. I'm definitely

hiring for like research engineers who are like more product minded people. So it's like people who know how to train the models, but also like interested in like deploying into like the products and developing like new product features. I'm definitely looking for those archetypes of like research engineers or like research scientists.

So yeah, if you're like looking for a job, if you're like interested in joining my team, I'm like really happy to just reach out, I guess.

And then just like generally, what do you want people to do more of in the world, whether or not they work with you? Like, you know, call to action as in like everyone should be doing this.

I think this is something that I tell a lot of like designers, is that I think people should spend more time just playing around with the models.

And the more you play with the model, the more creative ideas you'll get around what kind of new potential features of the products or new kind of interaction paradigms that you might want to create with those models. I feel like we are bottlenecked by human creativity in completely changing the way we think about the internet or the way we think about software.

AI right now pushes us to rethink everything that we've done before, in my view. And I feel like not enough people either double down on those ideas or I'm just not seeing a lot of human creativity in this interface design or like

product design mindsets. So I feel like it'd be really great for people to just like do that. And especially right now, some research becomes like much more product oriented, so you actually can train the models for the things that you want to do in a product or something.

Yeah, and you defined the process. Now this is my go-to for how to manage a process. I think it's pretty common sense, but it's nice to hear that from you, because you actually did it.

That's nice. Thank you for driving interface design and the new models at OpenAI and Anthropic. And we're looking forward to what you're going to talk about in New York. Yeah, thank you so much for inviting me here. I hope my job will not be automated by the time I come to New York. Well, I hope you automate yourself. Yeah, I hope so too. We'll do whatever else you want to do. That's it. Thank you. Thanks.