The new Claude 3.5 Sonnet, Computer Use, and Building SOTA Agents — with Erik Schluntz, Anthropic

Latent Space: The AI Engineer Podcast — Practitioners talking LLMs, CodeGen, Agents, Multimodality, AI UX, GPU Infra and all things Software 3.0

Deep Dive

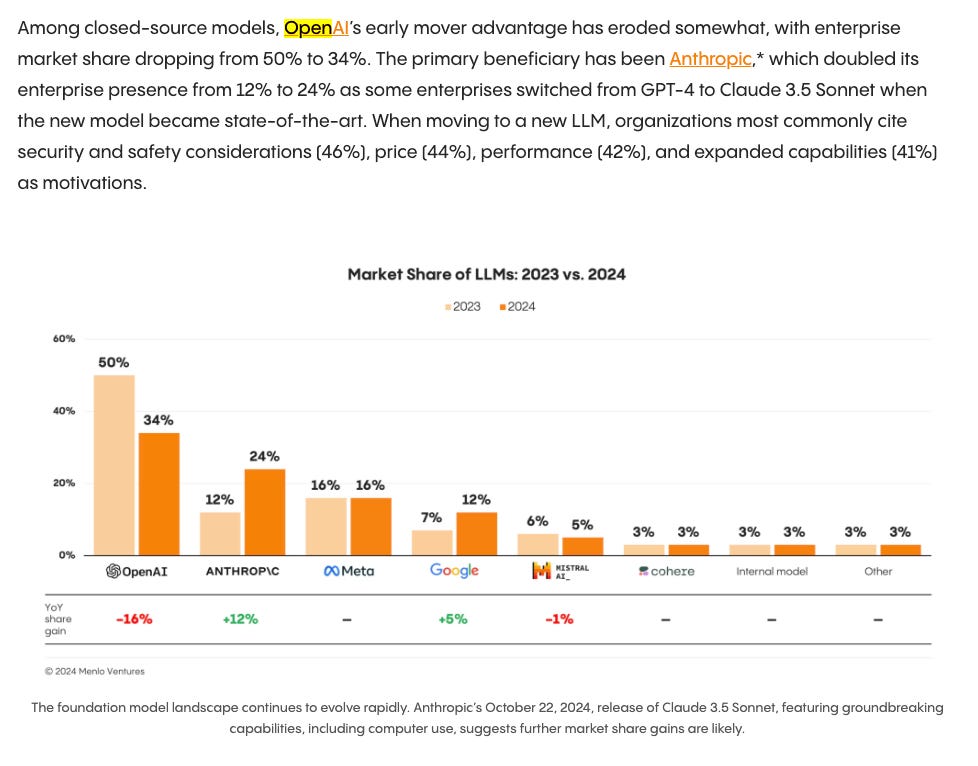

- Claude 3.5 Sonnet's popularity has persisted despite newer models.

- It's the preferred model for AI engineers, even being exclusively used by new code agents.

- Bolt.new, using Claude Sonnet, achieved $4m ARR in 4 weeks.

Transcript

Hey everyone, welcome to the Latent Space podcast. This is Alessio, partner and CTO at Decibel Partners. And today we're in the new studio with my usual co-host, Swyx from Smol AI. Hey.

And today we are very blessed to have Erik from Anthropic with us. Welcome. Hi.

Thanks very much. I'm Erik Schluntz. I'm a member of technical staff at Anthropic, working on tool use, computer use, and SWE-bench.

Yeah. Well, how did you get into the whole AI journey? I think you spent some time at SpaceX as well, and robotics? There's a lot of overlap between the robotics people and the AI people, and maybe there's some interplay or interest between language models and robots right now. Maybe just a little bit of background on how you got to where you are.

Yeah, sure. I was at SpaceX a long time ago, but before joining Anthropic, I was the CTO and co-founder of Cobalt Robotics. We built security and inspection robots.

These are five-foot-tall robots that would patrol through an office building or a warehouse looking for anything out of the ordinary. Very friendly, no tasers or anything. We would just call an operator if we saw anything.

We had about 100 of those out in the world, and had a team of about 100. We actually got acquired about six months ago, but I left Cobalt about a year ago now because I was starting to get a lot more excited about AI.

I had been writing a lot of my code with things like Copilot, and I was like, wow, this is actually really cool. If you had told me ten years ago that AI would be writing a lot of my code, I would have said, hey, I think that's AGI.

And so I kind of realized that we had passed this level where, wow, this is actually really useful for engineering work. That got me a lot more excited about AI and learning about large language models. So I ended up taking a sabbatical and doing a lot of reading and research myself, and then decided, hey, I want to go be at the core of this, and joined Anthropic.

And why Anthropic? Did you consider other labs? Did you consider maybe some of the robotics companies?

So I think at the time I was a little burnt out on robotics. And so, for the rest of this, any negative things I say about robotics or hardware are coming from that place for now. I reserve my right to change my opinion in a few years.

Yeah, I looked around, but ultimately I knew a lot of people at Anthropic that I really trusted and thought were incredibly smart, and I think that was the big deciding factor: okay, this team's amazing. They're not just brilliant, but some of the nicest and kindest people that I know, and I felt it could be a really good culture fit. And ultimately, I do care a lot about AI safety. I don't want to build something that's used for bad purposes, and I felt like the best chance of that was joining Anthropic.

And from the outside, these labs kind of look like huge organizations that have these obscure ways to organize. How did you get started at Anthropic? Did you already know you were going to work on SWE-bench and some of the stuff you've published, or did you join and then figure out where you'd land? I think people are always curious to learn more.

Yeah, I've been very happy that Anthropic is very bottoms-up and very receptive to whatever your interests are. And so I joined having been very transparent: okay, I'm most excited about code generation and AI that can actually go out and touch the world or help people build things. And those weren't my initial projects.

I also came and said, hey, I want to do the most valuable possible thing for this company and help Anthropic succeed, so let me find the balance of those. So I was working on lots of things at the beginning: function calling, tool use. And then, as it became more and more relevant, I was like, okay, it's time to go work on coding agents. And so I started looking at SWE-bench as a really good benchmark for that.

So let's get right into SWE-bench. That's one of your many claims to fame. I feel like there's just been a series of releases related to Sonnet 3.5. Around two or three months ago, 3.5 Sonnet came out, and it was a step ahead; a lot of people immediately fell in love with it for coding.

And then last month, you released a new, updated version of Claude Sonnet. We're not going to talk about the training for that because that's still confidential, but I think Anthropic has done a really good job of applying the model to different things. So you took the lead on SWE-bench, and we're also going to talk a little bit about computer use later on. So maybe just give us the context about why you looked at SWE-bench Verified, and how you actually came up with a whole system for building agents that would make maximal use of the model.

So I'm on a sub-team called Product Research, and basically the idea of product research is to really understand what end customers care about and want in the models, and then work to try to make that happen. So we're not focused on these more abstract general benchmarks like math problems or MMLU; we really care about finding the things that are really valuable and making sure the models are great at those.

And so, because I'd been interested in coding agents, I knew that this would be a really valuable thing, and I knew there were a lot of startups and customers trying to build coding agents with our models. And so I said, hey, this is going to be a really good benchmark.

It would let us measure that. And I wasn't the first person at Anthropic to find SWE-bench; there were lots of people who already knew about it and had done some internal efforts on it. It fell to me to both implement the benchmark, which is very tricky, and also to make sure we had an agent, basically a reference agent, maybe I'd call it, that could do very well on it.

Ultimately, we wanted to publish how we implemented that reference agent so that people can build their own agents on top of our system and get as much out of it as possible. So in the blog post we released on SWE-bench, we released the exact tools and the prompt that we gave the model to be able to do well.

For people who don't know, who maybe haven't dived into SWE-bench, I think the general perception is that they're tasks that a software engineer could do. I feel like that's an inaccurate description, because it is basically a subset of twelve repos: everything they could find where an issue has a merged commit that could be tested.

So that's not every commit. And then SWE-bench Verified is further manually filtered by OpenAI. Is that an accurate description, and is there anything you'd change about that?

Yes, SWE-bench is certainly a subset of all tasks. First of all, it's only Python repos, so it's already fairly limited there, and it's just twelve of these popular open-source repos. And it's only the ones where there were tests that passed at the beginning and also new tests that were introduced to test the new feature that's added. So it is, I think, a very limited subset of real engineering tasks, but I think it's also very valuable because, even though it's a subset, these are true engineering tasks.

And I think a lot of other benchmarks are really these much more artificial setups. Even if they're related to coding, they're more like coding-interview-style questions or puzzles that I think are very different from what you end up doing day to day. I don't know how frequently you all get to use recursion in your day job, but whenever I do, it's like a treat. And I think it's almost comical, and a lot of people joke about this in the industry, how different interview questions are from what you actually do. But I think one of the most interesting things about SWE-bench is that all these other benchmarks are usually just isolated puzzles.

In those, you're starting from scratch, whereas with SWE-bench you're starting in the context of an entire repository. And so it adds an entirely new dimension to the problem: finding the relevant files. And this is a huge part of real engineering.

As you know, it's actually pretty rare that you're starting something totally greenfield. You need to go and figure out where in a codebase you're going to make a change and understand how your work is going to interact with the rest of the system. And I think SWE-bench does a really good job of presenting that problem.

Why do we still use HumanEval? It's like 92%. I don't even know if you can actually get to 100%, because some of the data is not actually solvable. Do you see benchmarks like that that should just get sunset? Because when you look at the model releases, it's like 89 or 90% on HumanEval versus SWE-bench Verified, where you have 49%, right? Before that, 45% was state of the art, and maybe six months ago it was like 30%, something like that. So is that a benchmark that you think is going to replace HumanEval, or do you think they're just going to run in parallel?

I think there's still a need for many different varieties of evals. Sometimes you do really care about just greenfield code generation, and so I don't think that everything needs to go to an agentic setup like SWE-bench, which is very expensive, yes.

And the other thing I was going to say is that SWE-bench is certainly hard to implement and expensive to run, because for each task you have to parse a lot of the repo to understand where to put your code, and a lot of times it takes many tries of writing code, running it, editing it. It can use a lot of tokens compared to something like HumanEval. So I think there's definitely a space for these more traditional coding evals that are easy to implement, quick to run, and do get you some signal. And maybe, hopefully, harder versions of HumanEval get created.

How do we get SWE-bench Verified to 90%? Do you think that's something where there's a line of sight to it, or do we need a lot of things to go right?

Yeah, and actually maybe I'll start with SWE-bench versus SWE-bench Verified, which is something I missed earlier. So SWE-bench, as we described, is this big set of tasks that were scraped.

Like 12,000 or something?

Yeah, I think it's 2,000 in the final set. But a lot of those, even though a human did them, are actually impossible given the information that comes with the task. The most classic example of this is that the test looks for a very specific error string, you know, like assert message equals error something, something, something.

And unless you know that's exactly what you're looking for, there's no way the model is going to write that exact same error message, and so the tests are going to fail. So SWE-bench Verified was actually made in partnership with OpenAI, and they hired humans to go review all these tasks and pick out a subset to try to remove any obstacles like this that would make the tasks impossible.
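To make the exact-error-string problem concrete, here is a minimal hypothetical sketch; the function, messages, and test are invented for illustration and are not taken from SWE-bench. Both fixes correctly reject a negative timeout, but only the one whose wording happens to match the hidden test passes.

```python
def hidden_test(set_timeout) -> bool:
    """Hypothetical stand-in for a hidden test that asserts an exact message."""
    try:
        set_timeout(-1)
    except ValueError as exc:
        return str(exc) == "timeout must be non-negative, got -1"
    return False  # raising nothing at all also fails


def fix_a(seconds: int) -> int:
    # Behaviorally correct, but the wording differs from what the test expects.
    if seconds < 0:
        raise ValueError("negative timeout not allowed")
    return seconds


def fix_b(seconds: int) -> int:
    # Same behavior; the message happens to match the test verbatim.
    if seconds < 0:
        raise ValueError(f"timeout must be non-negative, got {seconds}")
    return seconds


print(hidden_test(fix_a), hidden_test(fix_b))
```

Nothing in a typical issue text would tell a model to prefer `fix_b`'s wording. That is the kind of task the Verified review tried to filter out.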

So in theory, all of these tasks should be fully doable by the model. And they also had humans grade how difficult they thought the problems would be: less than fifteen minutes, I think, fifteen minutes to an hour, an hour to four hours, and greater than four hours. So it's kind of interesting to see how big the problems are as well. As for getting SWE-bench Verified to 90%, maybe I'll start off with some of the remaining failures that I see.

When running our model on SWE-bench, I'd say the biggest cases are where the model operates at the wrong level of abstraction. What I mean by that is the model puts in maybe a smaller band-aid when really the task is asking for a bigger refactor. And some of those, you know, are the model's fault.

But a lot of times, if you're just seeing that GitHub issue, it's not exactly clear which way you should do it. So even though these tasks are possible, there's still some ambiguity in how the tasks are described. That being said, I think in general language models will frequently produce the smallest diff possible rather than trying to do a big refactor.
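The "wrong level of abstraction" failure is easier to see side by side. This is a hypothetical sketch, not a SWE-bench task. A crash on `None` can be patched at the one call site that failed (the band-aid), or fixed where the bad value enters the system (the refactor), so that every consumer benefits.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


# Band-aid: guard the one call site that crashed on None.
def display_name_bandaid(name: Optional[str]) -> str:
    if name is None:
        return "Anonymous"
    return name.strip().title()


# Refactor: normalize at the boundary so no consumer ever sees None.
@dataclass
class User:
    name: Optional[str] = None

    def __post_init__(self) -> None:
        if self.name is None or not self.name.strip():
            self.name = "Anonymous"


def display_name(user: User) -> str:
    # No None check needed here: User guarantees a non-empty name.
    return user.name.strip().title()
```

Either version fixes the reported crash. Which one the issue "wants" is often exactly the ambiguity Erik describes.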

I think another area: at least the agent we created didn't have any multimodal abilities, even though our models are very good at vision. So I think that's a missed opportunity. If I read through some of the traces, there are some funny things, especially in the tasks on matplotlib, which is a graphics library, where the test script will save an image and the model will just say, okay, that looks great, without looking at it.

So there's certainly extra juice to squeeze there, just making sure the model really understands all sides of the input that it's given, including multimodal. Yeah, as for getting to 90%, this is something that I have not looked up, but I'm very curious about. I want someone to look at the union of all of the different tasks that have been solved by at least one attempt.

For SWE-bench Verified, there are a ton of submissions to the benchmark, and so I would be really curious to see how many of those 500 tasks at least someone has solved. And I think there's probably a bunch that none of the attempts have ever solved. I think it would be interesting to look at those and say, hey, is there some problem with these? Are these impossible, or are they just really hard, such that only a human could do them?
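The "union of solved tasks" analysis Erik asks for is a few lines of set arithmetic. This is a minimal sketch, assuming you have already collected, for each submission, the set of instance IDs it resolved. The submission names and IDs in the usage below are made up.

```python
from __future__ import annotations


def coverage(
    submissions: dict[str, set[str]], all_tasks: set[str]
) -> tuple[set[str], set[str]]:
    """Return (solved by at least one submission, never solved by anyone)."""
    solved = set().union(*submissions.values()) & all_tasks
    return solved, all_tasks - solved


# Hypothetical example: two submissions over four tasks.
subs = {"agent_a": {"t1", "t2"}, "agent_b": {"t2", "t3"}}
solved, never = coverage(subs, {"t1", "t2", "t3", "t4"})
print(sorted(solved), sorted(never))
```

The `never` set is the list worth reading by hand, to decide whether each task is impossible as written or just very hard.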

Yeah, specifically, is there a category of problems that are still unreachable by any LLM agent?

Yeah, I think there definitely are. The question is, are those fairly inaccessible, or are they just impossible because of the descriptions? But certainly some of the tasks, especially the ones that the human graders reviewed as taking longer than four hours, are extremely difficult. I think we got a few of them right, but not very many at all in the benchmark.

Less than four hours?

Less than four hours.

Is there a correlation between how long the agent runs and the human-estimated time? Or do we have more of these paradox-type situations where something is super easy for a model but hard for a human?

I actually haven't done the stats on that, but I think it'd be really interesting to see how many tokens a task takes, how that correlates with difficulty, and what the likelihood of success is at each difficulty. I think a really interesting thing I saw: one of my coworkers who was also working on this, named Simon, was focusing specifically on the very hard problems, the ones that are said to take longer than four hours. He ended up creating a much more detailed prompt than the one I used, and he got a higher score on the most difficult subset of problems but a lower score overall on the whole benchmark. And the prompt that I made, which is much simpler and more bare-bones, got a higher score on the overall benchmark but a lower score on the really hard problems.
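A crossover like the one between Simon's prompt and Erik's only shows up if you break results down by difficulty instead of reporting one number. Below is a minimal sketch, assuming each result is a `(bucket, resolved)` pair. The bucket labels are illustrative names for the four human-estimated ranges above, not the dataset's exact strings.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable

# Illustrative labels for the four human-estimated difficulty buckets.
BUCKETS = ("<15 min", "15 min-1 hr", "1-4 hr", ">4 hr")


def solve_rate_by_bucket(results: Iterable[tuple[str, bool]]) -> dict[str, float]:
    totals: dict[str, int] = defaultdict(int)
    solved: dict[str, int] = defaultdict(int)
    for bucket, resolved in results:
        totals[bucket] += 1
        solved[bucket] += int(resolved)
    # Report buckets in difficulty order, skipping any with no tasks.
    return {b: solved[b] / totals[b] for b in BUCKETS if totals[b]}
```

You would run this once per prompt variant on the same task set and compare the dictionaries. A prompt can win the `">4 hr"` bucket while losing overall if the easy buckets dominate the task count.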

And I think some of that is because the really detailed prompt made the model overcomplicate a lot of the easy problems. Honestly, a lot of the SWE-bench problems really do just ask for a band-aid: okay, this crashes if this is None, and really all you need to do is put in a check for None. And so sometimes, when you try to make the model think really deeply, it'll think in circles and overcomplicate something, which certainly human engineers are capable of as well. But I think there's something interesting there: the best prompt for hard problems might not be the best prompt for easy problems.

How do we fix that? Are you supposed to fix that at the model level? How do I know what prompt I'm supposed to use?

Yeah, and I'll say this was a very small effect size, so I think this isn't worth obsessing over. But I would say that as people are building systems around agents, the more you can separate out the different kinds of work the agent needs to do, the better you can tailor a prompt for each task.

For instance, if you were trying to make an agent that could both solve hard programming tasks and also just write quick test files for something that someone else already made, the best way to do those two tasks might be very different.

I see a lot of people build systems where they first have a classification step and then route the problem to two different prompts. That's a very effective approach, because, one, it makes the two prompts much simpler and smaller, and two, it means you can have someone work on one of the prompts without any risk of affecting the other task. So it creates a nice separation of concerns.
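The classify-then-route pattern can be sketched in a few lines. This is a minimal sketch under stated assumptions. `classify` is a hypothetical keyword heuristic standing in for what would usually be a cheap model call, and both prompt texts are placeholders rather than Anthropic's prompts.

```python
from __future__ import annotations

# Placeholder prompts; each can be edited without touching the other.
PROMPTS = {
    "hard": (
        "You are working on a difficult, multi-file change. Explore the repository, "
        "plan before editing, and verify with tests.\n\nTask: {task}"
    ),
    "easy": "Make the smallest correct change that resolves this task.\n\nTask: {task}",
}


def classify(task: str) -> str:
    """Hypothetical stand-in for a classifier (in practice, often a cheap LLM call)."""
    hard_markers = ("refactor", "redesign", "migrate", "across modules")
    return "hard" if any(m in task.lower() for m in hard_markers) else "easy"


def route(task: str) -> tuple[str, str]:
    """Pick a prompt family for the task and fill in its template."""
    kind = classify(task)
    return kind, PROMPTS[kind].format(task=task)
```

The separation of concerns is visible in the data structure: someone tuning `PROMPTS["hard"]` cannot regress the easy path.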

And the other model behavior you mentioned, that they prefer to generate shorter diffs: why is that? I think that's maybe the "lazy model" question that people have: why are you not just generating the whole thing?

Exactly. So yeah, there are two different things.

One is, I'd say, doing the easier solution rather than the hard solution. And the second one, which I think is what you're talking about with a lazy model, is when the model says, like, "... code remains the same."

I think honestly that just comes from people on the internet doing stuff like that. If you're talking to a friend and you ask them to give you some example code, they would definitely do that; they're not going to write out the whole thing. And so I think that's just a matter of, sometimes you actually do just want the relevant changes.

And so a lot of times the models aren't good at mind-reading which one you want. I think the more explicit you can be in prompting, the better: hey, give me the entire thing, no elisions, versus just give me the relevant changes. And that's something we always want to make the models better at: following those kinds of instructions.
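Being explicit about whole-file versus changes-only output pairs naturally with a cheap check on what comes back. This is a minimal sketch. The two instruction strings are example wording, and `ELISION_RE` is a hypothetical heuristic for common "rest of code" markers, not an exhaustive detector.

```python
from __future__ import annotations

import re

WHOLE_FILE = "Return the complete updated file. Do not elide, abbreviate, or summarize any code."
CHANGES_ONLY = "Return only the functions you changed, not the whole file."

# Hypothetical heuristic for common elision markers; extend it for your codebase.
ELISION_RE = re.compile(
    r"\.\.\.\s*(rest of|remaining|existing) (the )?code"
    r"|code (remains|stays) (the same|unchanged)",
    re.IGNORECASE,
)


def find_elisions(model_output: str) -> list[int]:
    """Return 1-based line numbers that look like elided code."""
    return [
        i
        for i, line in enumerate(model_output.splitlines(), start=1)
        if ELISION_RE.search(line)
    ]
```

If you asked with `WHOLE_FILE` and `find_elisions` returns anything, one option is to re-prompt rather than write a truncated file to disk.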

I'll drop a couple of references here. We're recording this a day after Lex Fridman dropped his five-hour pod with Dario and Amanda and the rest of the crew. And Dario actually made this interesting observation: we complain about models being too chatty in text and not chatty enough in code.

And so getting that right is kind of an awkward bar, because you don't want it to yap in its responses, but you also want it to be complete in code, and sometimes it's not complete. Sometimes you just want the diff, which is something Anthropic has also released work on. And then the other thing I wanted to double back on is the prompting stuff. You said it was a small effect, but it was a noticeable effect in terms of picking a prompt.

I think we're going to get to SWE-agent in a little bit, but I kind of reject the premise that you need to choose one prompt and have your whole performance be predicated on that one prompt. I think something that Anthropic has done really well is meta-prompting: prompting for a prompt. And so why can't you just develop a meta-prompt for all the other prompts? You know, if it's a simple task, make a simple prompt; if it's a hard task, make a hard prompt.

Obviously I'm probably hand-waving a little bit, but I would definitely ask people to try the Anthropic Workbench meta-prompting system if they haven't tried it yet. I went to a build day recently at Anthropic HQ, and it's the closest I've felt to an AGI learning how to operate itself. Yeah, it's really magical.

Yeah, Claude is great at writing prompts for Claude.

The prompt...

Yeah, yeah. The way I think about this is that humans, even very smart humans, still use checklists and scaffolding themselves. Surgeons will still have checklists even though they're incredible experts. And certainly a very senior engineer needs less structure than a junior engineer, but there's still some of that structure that you want to keep.

And so I always try to anthropomorphize the models and think about what the equivalent is for a human. That's how I think about these things: how much instruction would you give a human with the same task? Would you need to give them a lot of instruction or a little bit of instruction?

Let's talk about the agent architecture. So first, runtime: you let it run until it thinks it's done or until it reaches the 200K context window. How did you...

Yeah. So I'd say that a lot of previous agent work built these very hard-coded and rigid workflows where the model is pushed through certain flows of steps. And I think to some extent that's needed with smaller models and models that are less smart.

But one of the things that we really wanted to explore was, let's really give Claude the reins here and not force Claude to do anything, but let Claude decide how it would approach the problem and what steps it should take. And so what we did is the most extreme version of this: just give it some tools that it can call, and it's able to keep calling the tools, keep thinking, and keep doing that until it thinks it's done.

And that's the most minimal agent framework that we came up with, and I think it works very well. I think especially the new Sonnet 3.5 is very, very good at self-correction and has a lot of grit. Claude will try things that fail and then come back and try different approaches. I think that's something you didn't see in a lot of previous models. Some of the existing agent frameworks that I looked at had whole systems built to try to detect loops and see, oh, is the model doing the same thing more than three times? Then we have to pull it out. And I think the smarter the models are, the less you need that kind of extra scaffolding. So just giving the model tools and letting it keep sampling and calling tools until it thinks it's done was the most minimal framework that we could think of, and that's what we did.
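The minimal framework is essentially a loop: sample, run any requested tools, feed the results back, and stop when the model asks for no more tools. Below is a model-agnostic sketch under stated assumptions. `call_model` is a hypothetical callable, and `ToolCall`/`ModelTurn` are illustrative types, not an SDK's. With the Anthropic Messages API, tool requests arrive as `tool_use` content blocks and results go back as `tool_result` blocks. Here `max_turns` is a crude stand-in for the context-window limit.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToolCall:
    name: str
    args: dict[str, Any]


@dataclass
class ModelTurn:
    text: str
    tool_calls: list[ToolCall] = field(default_factory=list)


def run_agent(
    call_model: Callable[[list[dict[str, Any]]], ModelTurn],
    tools: dict[str, Callable[..., str]],
    task: str,
    max_turns: int = 100,
) -> list[dict[str, Any]]:
    """Keep sampling and executing tool calls until the model stops asking for tools."""
    history: list[dict[str, Any]] = [{"role": "user", "content": task}]
    for _ in range(max_turns):  # crude stand-in for the context-window limit
        turn = call_model(history)
        history.append({"role": "assistant", "content": turn})
        if not turn.tool_calls:
            return history  # no tool calls: the model thinks it's done
        results = []
        for call in turn.tool_calls:
            tool = tools.get(call.name)
            try:
                results.append(tool(**call.args) if tool else f"Error: unknown tool {call.name!r}")
            except Exception as exc:  # surface failures so the model can self-correct
                results.append(f"Error: {exc}")
        history.append({"role": "tool", "content": results})
    return history
```

Note what is absent: there is no fixed sequence of steps and no pruning of failed attempts. Errors are returned to the model as text so it can try a different approach.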

So you're not pruning bad paths from the context? If it tries to do something that fails, you just burn all those tokens?

Yes. I'd say the downside of this is that it's very token-expensive, but...

It's still very common to prune bad paths, because models get stuck.

Yeah, but I'd say that 3.5 is not getting stuck as much as previous models, and so we wanted to at least try the most minimal thing. Now, this is definitely an area of future research, especially if we talk about the problems that are going to take a human more than four hours. Those might be cases where we'll need to prune bad paths to let the model accomplish the task within 200K tokens. There's certainly future research to be done in that area, but it's not necessary to do well on these benchmarks.
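For contrast with the minimal loop, here is a sketch of the kind of stuck-detection scaffolding described for older frameworks: flag the run when the same action has been repeated more than three times in a row. It assumes actions are recorded as comparable values such as `(tool_name, arguments)` tuples. The threshold and what you do on detection (abort, prune, or re-prompt) are design choices, not anything Anthropic's agent uses.

```python
from __future__ import annotations

from collections.abc import Sequence


def is_stuck(actions: Sequence[object], threshold: int = 3) -> bool:
    """True if the most recent action has repeated more than `threshold` times in a row."""
    if not actions:
        return False
    run = 0
    for action in reversed(actions):
        if action != actions[-1]:
            break
        run += 1
    return run > threshold
```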

One thing I always have questions about is context. There's a mini cottage industry of code indexers that have sprung up for large codebases like the ones in SWE-bench. You didn't need them?

We didn't. And I think there are two reasons for this. One is SWE-bench-specific and the other is more general. The more general one is that I think Sonnet is very good at what we call agentic search, which basically means letting the model decide how to search for something. It gets the results.

And then it can decide: should it keep searching, or is it done? Does it have everything it needs? So if you read through a lot of the SWE-bench traces, the model is calling tools to view directories, list things out, view files.

And it will do a few of those until it feels like it's found the file where the bug is, and then it will start working on that file. And again, everything we did is about just giving Claude the full reins.

So there's no hard-coded system. There's no search system that you're relying on to get the correct files into context. This just totally lets Claude do it.

Or embed things into a vector database?

Exactly. Nope, no, no. But again, this is very, very token-expensive, and it certainly takes many, many turns. So if you want to do something in a single turn, you need to do RAG and just push things into the first prompt.

And just to make it clear, it's using the bash tool, basically doing ls, looking at files, and then doing cat to pull in the following context?

It can do that, but its file-editing tool also has a command called view that can view a directory. It's very similar to ls, but it has some nice quality-of-life improvements: it only goes two directories deep, so the model doesn't get overwhelmed if it does this on a huge file tree. I would say we actually did more engineering of the tools than of the overall prompt. But one thing I want to say about this agentic search is that for SWE-bench specifically, a lot of the tasks are bug reports, which means they have a stack trace in them.

And that means the very first prompt tells it where to go. So I think this is a very easy case for the model to find the right files. Compare that with using it as a general coding assistant, where there isn't a stack trace or you're asking it to add a new feature. There, I think it's much harder to know which files to look at, and that might be an area where you would need to do more of this exhaustive search, where agentic search would take way too long.
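Why a stack trace makes localization easy is visible in the format itself: every frame names a file and line. This is a minimal sketch that pulls in-repo frames out of a Python traceback. It assumes the standard `File "...", line N, in func` frame format, and `repo_root` is a hypothetical prefix you would set to the checkout path.

```python
from __future__ import annotations

import re

# Matches frames like:  File "/repo/pkg/mod.py", line 42, in func
FRAME_RE = re.compile(r'^\s*File "(?P<path>[^"]+)", line (?P<line>\d+)', re.MULTILINE)


def repo_frames(traceback_text: str, repo_root: str) -> list[tuple[str, int]]:
    """Return (path, line) for frames inside repo_root, innermost frame first."""
    frames = [
        (m.group("path"), int(m.group("line")))
        for m in FRAME_RE.finditer(traceback_text)
        if m.group("path").startswith(repo_root)
    ]
    # Python prints "most recent call last", so reverse to put the crash site first.
    return list(reversed(frames))
```

Without a traceback there is nothing to parse, which is the harder, feature-request case Erik contrasts with SWE-bench.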

As someone who has spent the last few years in the JS world, I'd be interested to see a SWE-bench JS, because there the stack traces are useless with all the virtualization that we do. They're very, very disconnected from where the code problems are actually appearing.

That makes me feel better about my limited front-end experience. I've always struggled with it.

It's not your fault. We've gotten ourselves into a very, very complicated situation, and I'm not sure it's entirely needed. But, you know, talk to our friends at Vercel.

I'd say SWE-bench just released SWE-bench Multimodal, which I believe is either entirely or largely JavaScript, and it's entirely things that have visual components to them.

Are you going to tackle that?

We will see.

I think it's on the list of interests, but no guarantee yet.

Just as a note, it occurred to me that every model lab, including Anthropic but the others as well, should have its own SWE-bench built from its own bug tracker. This is a general methodology that you can use to track progress, I guess.

Yeah, running on our own repo. Yeah, that's a fun idea.

Since you spent so much time on the tool design: you have this edit tool that can make changes. Are there any learnings from that which the AI IDEs should take in? Is there some special way to look at files or feed them in?

I'd say the core of that tool is string replace. We did a few different experiments with different ways to specify how to edit a file. With string replace, the model basically has to write out the existing version of the string and then a new version, and the new one just gets swapped in.

We found that to be the most reliable way to do these edits. Other things we tried were having the model directly write a diff, and having the model fully regenerate files. That one is actually the most accurate, but it takes so many tokens.

And if you're in a very big file, it's cost-prohibitive. There are basically a lot of different ways to represent the same task, and they actually have pretty big differences in terms of model accuracy. I think Aider has a really good blog where they explore some of these different methods for editing files and post results about them, which I think is interesting.
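The two file-tool behaviors described above, a `view` that stops two levels deep and a string-replace edit, can be sketched in plain Python. This is an illustration under stated assumptions, not the released tool. In particular, skipping hidden entries and refusing an `old_str` that is missing or appears more than once are design choices assumed here.

```python
from __future__ import annotations

import os
from pathlib import Path


def view_directory(root: str, max_depth: int = 2) -> list[str]:
    """List non-hidden entries under root, at most max_depth levels deep."""
    entries: list[str] = []
    for dirpath, dirnames, filenames in os.walk(root):
        rel = os.path.relpath(dirpath, root)
        depth = 0 if rel == "." else rel.count(os.sep) + 1
        # Drop hidden directories (e.g. .git) so os.walk never descends into them.
        dirnames[:] = sorted(d for d in dirnames if not d.startswith("."))
        visible_files = sorted(f for f in filenames if not f.startswith("."))
        for name in dirnames + visible_files:
            entries.append(os.path.normpath(os.path.join(rel, name)))
        if depth + 1 >= max_depth:
            dirnames[:] = []  # children of these dirs would exceed max_depth
    return sorted(entries)


def str_replace(path: str, old_str: str, new_str: str) -> str:
    """Swap new_str in for old_str, refusing missing or ambiguous matches."""
    text = Path(path).read_text(encoding="utf-8")
    count = text.count(old_str) if old_str else 0
    if count == 0:
        raise ValueError(f"old_str not found in {path}")
    if count > 1:
        raise ValueError(f"old_str occurs {count} times in {path}; include more context")
    Path(path).write_text(text.replace(old_str, new_str, 1), encoding="utf-8")
    return f"Replaced 1 occurrence in {path}"
```

With string replace, the model never has to reproduce the rest of the file or compute line numbers. It only has to quote the text it wants to change exactly.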

But I think this is a really good example of the broader idea that you need to iterate on tools, not just the prompt. I think a lot of people, when they make tools for an LLM, treat it like they're just writing an API for a computer: very minimal, just the bare bones of what you'd need. And honestly, it's so hard for the models to use those.

I really come back to anthropomorphizing these models. Imagine you're a developer who is reading this for the very first time and trying to use it. You can do so much better than the bare API spec you'd often see: include examples in the description, and include really detailed explanations of how things work. And again, also think about the easiest way for the model to represent the change it wants to make. For file editing, as an example, writing a diff is actually... let's take the most extreme example.

You want the model to literally write a patch file. I think patch files have, at the very beginning, numbers for how many total lines change. That means before the model has actually written the edit, it needs to decide how many lines are going to change.

Don't quote me on that. I'm pretty sure it's something like that, but I don't know if that's exactly the diff format. Either way, some formats are certainly much easier to express without messing up than others. And I like to think about how much human effort goes into designing human interfaces; it's incredible. Creating better interfaces to do the same things is entirely what front-end is about. And I think that same amount of attention and effort needs to go into creating agent-computer interfaces.
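Erik's recollection about patch files can be checked with Python's standard `difflib`. In a unified diff, each hunk header has the form `@@ -start,count +start,count @@`, so the line counts for both sides come before the edited lines themselves. The sketch below generates one hunk and adds a small validator. `hunk_counts_match` is our own helper, not a library function, and it handles only a single hunk.

```python
import difflib
import re

old = ["def area(r):\n", "    return 3.14 * r * r\n"]
new = ["import math\n", "\n", "def area(r):\n", "    return math.pi * r ** 2\n"]

patch = "".join(difflib.unified_diff(old, new, fromfile="a/geom.py", tofile="b/geom.py"))
print(patch)  # the hunk header line reads: @@ -1,2 +1,4 @@

# "@@ -start,count +start,count @@"; an omitted count means 1.
HUNK_RE = re.compile(r"^@@ -\d+(?:,(\d+))? \+\d+(?:,(\d+))? @@")


def hunk_counts_match(hunk: str) -> bool:
    """Check that a single hunk's header counts agree with its body."""
    header, *body = hunk.splitlines()
    m = HUNK_RE.match(header)
    if m is None:
        return False
    old_n, new_n = int(m.group(1) or 1), int(m.group(2) or 1)
    old_seen = sum(1 for line in body if line.startswith((" ", "-")))
    new_seen = sum(1 for line in body if line.startswith((" ", "+")))
    return (old_n, new_n) == (old_seen, new_seen)
```

A model writing this format by hand must commit to `2` and `4` before emitting the body. An off-by-one count makes the patch invalid even if the edit itself is right, which string replace avoids entirely.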

It's a topic we've discussed: ACI, or whatever that looks like. I would also shout out that I think you released some of these tools as part of computer use as well, and people really liked them. Yeah, it's all open source if people want to check it out.
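Erik's advice is to write tool descriptions the way you would write documentation for a new teammate. The contrast below uses the Anthropic tool-definition shape (`name`, `description`, `input_schema` as JSON Schema). Both tools are hypothetical, and the documented description is example wording of our own rather than the published SWE-bench tool text.

```python
from __future__ import annotations

# Bare-bones: technically complete, but it gives the model little to go on.
MINIMAL_TOOL = {
    "name": "edit",
    "description": "Edit a file.",
    "input_schema": {
        "type": "object",
        "properties": {
            "path": {"type": "string"},
            "old": {"type": "string"},
            "new": {"type": "string"},
        },
        "required": ["path", "old", "new"],
    },
}

# Written like documentation: behavior, constraints, and a worked example.
DOCUMENTED_TOOL = {
    "name": "str_replace",
    "description": (
        "Replace one occurrence of `old_str` with `new_str` in the file at `path`.\n"
        "- `old_str` must match the file exactly, including whitespace and indentation.\n"
        "- `old_str` must appear exactly once; include surrounding lines to make it unique.\n"
        "- `path` must be absolute, e.g. /repo/src/app.py.\n"
        "Example: to change `retries = 3` to `retries = 5`, pass "
        "old_str='retries = 3' and new_str='retries = 5'."
    ),
    "input_schema": {
        "type": "object",
        "properties": {
            "path": {"type": "string", "description": "Absolute path to the file."},
            "old_str": {"type": "string", "description": "Exact text to replace."},
            "new_str": {"type": "string", "description": "Replacement text."},
        },
        "required": ["path", "old_str", "new_str"],
    },
}
```

Both definitions are equally valid to the API. The difference is how much a first-time reader, human or model, can infer from them.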

I'm curious whether there's an environment element that complements the tools. Do you have a sandbox? Is it just Docker? Because that can be slow or resource-intensive. Do you have anything else that you'd...

I can't really talk about the private details of how we implement our sandboxing. But obviously we need safe, secure, and fast sandboxes for training, so the models can practice writing code and working in an environment.

I'm aware of a few startups working on agent sandboxing. E2B is a close friend of ours that Alessio has led a round in. But I think there are also others focusing on snapshotting memory so they can do time travel for debugging, and on computer use, where you can control the mouse and keyboard, something like that. Whereas here, I think the kinds of tools that we offer are very limited to coding-agent use cases, like bash and edit.

Yeah, I think the computer use demo that we released is an extension of that. It has the same bash and edit tools, but it also has the computer tool that lets it take screenshots and move the mouse and keyboard. So yes, I definitely think there are more general tools there.

And again, the tools we released as part of SWE-bench were, I'd say, very specific: editing files and running bash. But at the same time, that's actually very general if you think about it. Anything that you would do on a command line, or editing files, you can do with those tools. And so we do want those tools to feel like any sort of computer terminal work could be done with them, rather than making tools that were very specific to SWE-bench, like having "run tests" as its own tool, for instance.

Yeah, you had a question about tests.

Yeah, exactly. I saw there's no test-writer tool. Is that because it generates the code and then you're running it against SWE-bench anyway, so it doesn't really need to write the tests?

Yes. So this is one of the interesting things about SWE-bench: the tests that the model's output is graded on are hidden from it. That's basically so the model can't cheat by looking at the tests and writing the exact solution. But I'd say that typically the first thing the model does is write a little script to reproduce the error.

And most SWE-bench tasks are like, hey, here's a bug that I found: I run this and I get this error. So the first thing the model does is try to reproduce that, and then it keeps rerunning that script as a mini test. But yes, sometimes the model will accidentally introduce a bug that breaks some other test, and it doesn't know about that.

And should we be redesigning any tools or APIs? We're going to talk about this, like having more examples. But I'm thinking even about things like 'q' as a query parameter in many APIs: is it easier for the model to use 'query' than to read 'q'? I'm sure the models have learned what 'q' means by this point, really. Is there anything you've seen while building this where you'd say, hey, if I were to redesign some CLI tool or some API tool, I would change the way it's structured to make it better for them?

I don't think I've thought enough about that off the top of my head, but certainly just making everything more human-friendly, like having more detailed documentation and examples. I think examples are really good in things like descriptions. Just using the Linux command line, how many times do I run --help or look at the man page or something, and it's like, just give me one example of how I actually use this. I don't want to go through one hundred flags. Just give me the most common example. So, you know, things that would be useful for a human, I think, are also very useful for a model.
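As a sketch of the "just give me one example" advice applied to a tool definition: the dictionary below follows the `name` / `description` / `input_schema` shape of Anthropic tool definitions, with a self-describing parameter name and a worked example inside the description. The `search_issues` tool and its fields are hypothetical.

```python
import json

# Hypothetical tool: the name, fields, and example are illustrative only.
search_issues_tool = {
    "name": "search_issues",
    "description": (
        "Search the project's issue tracker with free-text terms.\n"
        "Example: to find open crash reports about login, call with "
        '{"query": "login crash", "state": "open"}'
    ),
    "input_schema": {
        "type": "object",
        "properties": {
            # A self-describing name rather than a terse "q".
            "query": {"type": "string", "description": "Free-text search terms."},
            "state": {
                "type": "string",
                "enum": ["open", "closed", "all"],
                "description": "Which issues to include; defaults to open.",
            },
        },
        "required": ["query"],
    },
}

# The example embedded in the description should itself be valid input.
example_input = json.loads(search_issues_tool["description"].split("call with ", 1)[1])
print(json.dumps(example_input))
```

Checking that the embedded example parses and matches the schema keeps the documentation from drifting away from the tool it describes.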

Yeah. I mean, there's one thing that is useful for humans but that you can't give to code agents, which is access to the internet. I wonder how to design that in, because one of the issues that I also had with the idea of SWE-bench is that you can't ask follow-up questions. You can't look around for similar implementations. These are all things that I do when I try to fix code, and we don't do that.

It wouldn't be fair; it'd be too easy to cheat. But it's also kind of unfair to these agents, because they're not operating in a real-world situation. If I had a real-world agent, of course I'd give it access to the internet, because I'm not trying to pass a benchmark. I don't have a question in there; it's more that I feel like the most obvious tool, access to the internet, is not being used.

I think that's really important for humans. But honestly, the models have so much general knowledge from pretraining that it's less important for them.

But, like, versioning, you know.

If you're working on a newer thing that came after the knowledge cutoff, then yes, I think that's very important. I think this is actually a broader problem: there is a divergence between SWE-bench and what customers working on a coding agent for real use will actually care about. And I think one of those things is internet access, being able to pull in outside information.

I think another one is that if you have a real coding agent, you don't want it to start on a task and spin its wheels for hours because you gave it a bad prompt. You want it to come back immediately, ask follow-up questions, and really make sure it has a very detailed understanding of what to do, and then go off for a few hours and do the work. So I think real tasks are going to be much more interactive with the agent, rather than this kind of one-shot system. Right now there's no benchmark that measures that, and I think it would be interesting to have a benchmark that is more interactive. I don't know if you're familiar with τ-bench, but it's a customer service benchmark where there's basically one LLM playing the user, the customer who is getting support, and another LLM playing the support agent, and they interact and try to resolve the issue.

Yeah, we talked to them. They also did...

τ-bench, for people listening. So maybe we need a τ-SWE-bench.

Yes. Or maybe you could have something where, before the SWE-bench task starts, you have a few back-and-forths with the author, who can answer follow-up questions about what they want the task to do. Of course, you'd need to do that in a way where the model can't cheat and just get the exact answer out of the human, or out of the simulated user.

But I think that would be a really interesting thing to see. If you look at existing agent work, like Replit's coding agent, I think one of the really great UX things they do is first having the agent create a plan and then having the human approve that plan or give feedback. I think that for agents in general, having a planning step at the beginning helps. One, just having that plan will improve performance on the downstream task, because it's kind of like a bigger chain of thought.

But also, it's such a better UX. It's way easier for a human to iterate on a plan with a model than to iterate on the full task, which has a much slower time through each loop. And if the human has approved the implementation plan, I think that makes the end result a lot more auditable and trustworthy. So I think there are a lot of things outside of SWE-bench that will be very important for real agent usage in the world.
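Here is a minimal sketch of the plan-then-approve flow, assuming three hypothetical callables: `propose_plan` (a model call), `review` (the human), and `execute_step` (the expensive agent work). Only an approved plan is executed, and reviewer feedback is fed into the next planning round. This is not a description of any particular product's implementation.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Review:
    approved: bool
    feedback: str = ""


def plan_then_execute(
    task: str,
    propose_plan: Callable[[str, str], List[str]],
    review: Callable[[List[str]], Review],
    execute_step: Callable[[str], str],
    max_revisions: int = 3,
) -> List[str]:
    """Iterate cheaply on the plan with a human, then run only the approved plan."""
    feedback = ""
    for _ in range(max_revisions + 1):
        plan = propose_plan(task, feedback)
        verdict = review(plan)
        if verdict.approved:
            return [execute_step(step) for step in plan]
        feedback = verdict.feedback  # carry the human's notes into the next draft
    raise RuntimeError("no plan was approved within the revision budget")


# Usage with stand-ins for the model and the human reviewer.
def fake_planner(task: str, feedback: str) -> List[str]:
    steps = ["reproduce the bug", "fix the parser"]
    if "test" in feedback:
        steps.append("add a regression test")
    return steps


verdicts = iter([Review(False, "please add a test"), Review(True)])
results = plan_then_execute(
    "fix issue #1",
    fake_planner,
    review=lambda plan: next(verdicts),
    execute_step=lambda step: f"done: {step}",
)
print(results)
```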

Yeah, I will also add a couple of comments on names that you dropped. Copilot Workspace also does the plan stage before it writes code. I feel like those approaches have generally been less successful on Twitter, because it's not prompt-to-code, it's prompt, plan, code.

So there's a little bit of friction in there, but not much, and you actually get a lot for it, for what it's worth. And I also like the way that Devin does it, where you can sort of edit the plan as it goes along. And then there's the other thing, Replit.

We had a pregame with Replit, and they also talked about multi-agents: having two agents kind of bounce off of each other. I think it's a similar approach to what you're talking about with the few-shot examples in the prompt, clarifying what the agent wants. But typically, I think this would be implemented as a tool calling another agent, like a subagent. I don't know if you've explored that. Do you like that idea?

I haven't explored this enough, but I've definitely heard of people having good success with this, basically having a few different personas of agents, even if they're all the same model.

I think this is one thing about multi-agents that a lot of people get confused by: they think it has to be different models behind each one, but really it's usually the same model with different prompts, with each taking on a different persona to bring different thoughts and priorities to the table. I've seen that work very well and create a much more thorough, well-thought-out response. The downside is that it adds a lot of complexity and a lot of extra tokens. So I think it depends on what you care about. If you want a plan that's very thorough and detailed, I think it's great. If you want something really quick, like "just write this function", you probably don't want to make a bunch of different calls before it does that.
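A sketch of "same model, different personas": `call_model` is a hypothetical stand-in for whatever single model you use, and each persona is just a different system prompt. The extra token cost mentioned above shows up directly as one model call per persona. A real setup would also need a step that merges or debates the answers, which is omitted here.

```python
from typing import Callable, Dict, List, Mapping

# Hypothetical personas: one underlying model, different system prompts.
PERSONAS: Dict[str, str] = {
    "architect": "You care most about clean design and long-term maintainability.",
    "tester": "You care most about edge cases and what this change could break.",
    "pragmatist": "You care most about the smallest change that solves the task.",
}


def multi_persona_answers(
    task: str,
    call_model: Callable[[str, str], str],
    personas: Mapping[str, str] = PERSONAS,
) -> Dict[str, str]:
    """Query the same model once per persona; cost grows linearly with personas."""
    return {name: call_model(system_prompt, task) for name, system_prompt in personas.items()}


calls: List[str] = []


def stub_model(system_prompt: str, task: str) -> str:
    # Stand-in for a real model call; records which system prompt it received.
    calls.append(system_prompt)
    return f"answer to {task!r}"


answers = multi_persona_answers("plan the refactor", stub_model)
print(sorted(answers), len(calls))
```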

And just a second about the prompt: why are XML tags so good in Claude? I think people usually wonder, oh, maybe you're just getting lucky with XML. But obviously you use them in your agent prompt, so they must work. And why is it so specific to your model family?

I think there's, again, I'm not sure how much I can say, but I think there are historical reasons that internally we've preferred XML data.

I think the one broader thing I'll also say is that if you look at certain kinds of outputs, there is overhead to putting them in JSON. If you're trying to output code in JSON, there's a lot of extra escaping that needs to be done, and that actually hurts model performance across the board, whereas if you're inside a single XML tag, there's none of that escaping. That being said, I haven't tried having it write, you know, HTML inside XML, where maybe you'd start running into weird escaping issues. I'm not sure. But I'd say there are some historical reasons, and there's less escaping overhead.
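The escaping overhead is easy to see with Python's standard `json` module: the same short snippet needs a backslash for every newline and double quote when wrapped in JSON, and none when placed between XML-style tags. This only illustrates character-level overhead and says nothing by itself about model accuracy. As the speaker notes, content containing characters such as `<` or `&` could raise its own escaping questions inside XML.

```python
import json

# A small code snippet a model might need to emit verbatim.
snippet = 'if name:\n    print("Hello, " + name)\n'

as_json = json.dumps({"code": snippet})
as_xml_tag = f"<code>\n{snippet}</code>"

print(as_json)
print(as_xml_tag)
print("backslashes added by JSON:", as_json.count("\\"))
print("backslashes in the XML-tag form:", as_xml_tag.count("\\"))
```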

I use XML with other models as well, and it's just a really nice way to make sure that the thing that ends is tied to the thing that starts. It's the only way to do code fences where you're pretty sure that "example one start" and "example one end" form one cohesive unit.

Because of the escaping.

That would be my simple reason. XML is good for everyone, not just Claude; Claude was just the first one to popularize it.

I think I do definitely prefer reading XML to reading JSON.

So, any other details that are maybe underappreciated? You had the absolute path versus relative path example. Any other fun nuggets?

Yes, I think that's a good anecdote to mention about iterating on tools. Like I said, spend time prompt engineering your tools. Don't just write the prompt: write the tool, then actually give it to the model and read a bunch of transcripts of how the model tries to use the tool.

And I think by doing that, you will find areas where the model misunderstands the tool or makes mistakes, and then you basically change the tool to make it foolproof. There's this Japanese term, poka-yoke, about making tools mistake-proof. You know, the classic idea is that you can have a plug that can fit either way, and that's dangerous.

Or you can make it asymmetric so that it can't fit this way and has to go like this, and that's a better tool because you can't use it the wrong way. So for this example of absolute paths, one of the things that we saw while testing these tools is that if the model had done a cd and moved to a different directory, it would often get confused when trying to use the tool, because it was now in a different directory and the paths weren't lining up.

So we said, okay, let's just force the tool to always require an absolute path. And that's easy for the model to understand. It knows sort of where it is, and it knows where the files are.
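A minimal sketch of the poka-yoke idea for paths, assuming a hypothetical `view_file` tool: relative paths are rejected with an actionable error instead of being resolved against whatever directory the model last moved into. This is an illustration of the design choice, not the released tool's code.

```python
import tempfile
from pathlib import Path


def view_file(path: str) -> str:
    """Hypothetical file-viewing tool that only accepts absolute paths."""
    p = Path(path)
    if not p.is_absolute():
        # Fail loudly with guidance the model can act on, rather than resolving
        # the path against the current working directory.
        raise ValueError(f"path must be absolute (e.g. starting with '/'), got {path!r}")
    return p.read_text()


with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / "app.py"
    target.write_text("print('hi')\n")
    contents = view_file(str(target))
    try:
        view_file("app.py")
        rejected_relative = False
    except ValueError:
        rejected_relative = True

print(repr(contents), rejected_relative)
```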

And then once it was always giving absolute paths, it never messed up, no matter where it was, because if you're using an absolute path, it doesn't matter where you are. So iterations like that let us make the tool foolproof for the model. There are other categories of things we saw too: if the model opens vim, it's never going to return.

Did it get stuck?

Yeah, yeah, yeah, because the tool is just text in, text out; it's not interactive. So it's not that the model doesn't know how to get out of vim; it's that the way the tool is hooked up to the computer is not interactive.

Yes, for the vim one, we basically just added instructions in the tool description: hey, don't launch commands that don't return. Don't launch vim, don't launch whatever. If you do need to run something like that, put an ampersand after it to launch it in the background. And just putting instructions like that right in the description for the tool really helps the model. I think that's an underutilized space of prompt engineering. People might try to put that in the overall prompt, but just put it in the tool description so the model knows that it applies to this tool.
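The tool-description fix can be paired with a defensive runner. In the sketch below, `BASH_TOOL` carries the behavioral instructions in its description (the wording is an example, not Anthropic's text), and the hypothetical `run_bash` closes stdin and applies a timeout so that an interactive program cannot hang the agent forever. The output redirect in the background example matters for this particular runner: a background process that kept the captured output pipe open would make `subprocess.run` wait for it.

```python
import subprocess

# Illustrative bash tool definition; this wording is an example, not Anthropic's text.
BASH_TOOL = {
    "name": "bash",
    "description": (
        "Run a shell command and return its combined stdout and stderr. "
        "The session is NOT interactive: do not launch commands that wait for input "
        "or never exit on their own (for example vim, less, or a REPL). "
        "To start a long-running process, redirect its output to a file and append "
        "'&' so it runs in the background, e.g. 'python server.py > server.log 2>&1 &'."
    ),
    "input_schema": {
        "type": "object",
        "properties": {"command": {"type": "string"}},
        "required": ["command"],
    },
}


def run_bash(command: str, timeout_s: float = 30.0) -> str:
    """Run one command non-interactively, with a timeout as a last-resort backstop."""
    try:
        result = subprocess.run(
            ["bash", "-c", command],
            stdin=subprocess.DEVNULL,  # anything that reads input gets EOF immediately
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return f"Error: command timed out after {timeout_s}s; was it interactive?"
    return result.stdout + result.stderr


print(run_bash("echo hello"))
```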

You said you worked on function calling and tool use before you actually started the SWE-bench work. Were there any surprises? Because you basically went from being the creator of that API to being a user of that API. Any surprises or changes you would make now that you have extensively dogfooded it in a state-of-the-art agent?

I'd want us to make a slightly less verbose SDK. I think right now we sort of force people to follow the best practice of writing out these full JSON schemas. But it would be really nice if you could just pass in a Python function as a tool.

I think Instructor does... I don't know if there's anyone else specializing for Anthropic. Maybe Jeremy Howard's and Simon Willison's stuff; they have specific stuff that they're working on.

Exactly.
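A rough sketch of the "just pass in a Python function" idea, built only on the standard `inspect` and `typing` modules. `function_to_tool` and `get_weather` are hypothetical. This is not an Anthropic SDK feature, it handles only simple scalar parameters, and it reuses the function's docstring as the tool description, so that docstring is where the detailed, example-rich prompt engineering would go.

```python
import inspect
from typing import Any, Callable, Dict, List, get_type_hints

# Deliberately minimal mapping from Python annotations to JSON Schema types.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def function_to_tool(fn: Callable[..., Any]) -> Dict[str, Any]:
    """Hypothetical helper: derive a tool definition from a plain Python function."""
    hints = get_type_hints(fn)
    properties: Dict[str, Any] = {}
    required: List[str] = []
    for name, param in inspect.signature(fn).parameters.items():
        # Unannotated or unsupported types fall back to "string".
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "input_schema": {"type": "object", "properties": properties, "required": required},
    }


def get_weather(city: str, days: int = 1) -> str:
    """Get the weather forecast for a city for the next few days."""
    return f"Forecast for {city}: {days} day(s)"


tool = function_to_tool(get_weather)
print(tool)
```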

I also wanted to spend a little bit of time on SWE-agent. It seems like a very general framework. Is there a reason you picked it, apart from it being by the same authors as SWE-bench?

The main reason we went with it was that it's by the same authors as SWE-bench. It just felt like the safest, most neutral option. It was very high quality, and it was very easy to modify and work with.

I would also say that their underlying framework is sort of this think, act, observe loop that the agent goes through, which is a little bit more hard-coded than what we wanted to do. But it's still very close, and still very general.

So it felt like a good match as the starting point for our agent. And we had already worked and talked with the SWE-bench people directly, so it felt nice to, you know, already know the authors; it would be easy to work with them.

I'll share a little bit: this all seems disconnected, but once you figure out the people and where they went to school, it all makes sense. So, all Princeton.

Yes, it's a group out of Princeton.

Yeah, we had Shunyu on the pod, and he came up with the ReAct paradigm, and that's think, act, observe. That's all ReAct. So they're friends.

Yes, and if you actually read the traces of our submission, you can see think, act, observe in our logs; we just didn't even change the printing code. But it's actually still doing function calls under the hood, and the model can make multiple function calls in a row without thinking in between if it wants to. But yes, there are a lot of similarities, and a lot of things we inherited from SWE-agent just as a starting point for the framework.

Yeah, any thoughts about other agent frameworks? I think there's the whole gamut, from very simple to very complex, like LangGraph.

Yeah, yeah. I haven't explored a lot of them in detail. I would say that agent frameworks in general can certainly save you some boilerplate.

But I think there's actually a downside to making agents too easy: you end up very quickly building a much more complex system than you need. Suddenly, instead of having one prompt, you have five agents talking to each other and having a dialogue. And because the framework made that ten lines of code, you end up building something that's way too complex.

So I would actually caution people to try to start without these frameworks if they can, because you'll be closer to the raw prompts and be able to directly understand what's going on. I think a lot of times these frameworks, by trying to make everything feel really magical, end up hiding what the actual prompt and output of the model are. And that can make things much harder to debug.

So certainly these things have a place, and I think they do really help with getting rid of boilerplate, but they come with the cost of obscuring what's really happening and making it too easy to very quickly add a lot of complexity. So I would recommend that people try it from scratch. It's not that bad.
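In the spirit of "try it from scratch", here is a minimal framework-free agent loop in the think, act, observe shape described earlier for SWE-agent. The message format, `ModelTurn`, and `ToolCall` are hypothetical simplifications, not the Anthropic Messages API; `call_model` would wrap a real model call, and `max_turns` is an arbitrary cap. Every prompt and tool result passes through code you can read and print.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ToolCall:
    name: str
    args: Dict[str, str]


@dataclass
class ModelTurn:
    thought: str
    tool_calls: List[ToolCall] = field(default_factory=list)  # empty means "done"
    final_answer: str = ""


def run_agent(
    task: str,
    call_model: Callable[[List[dict]], ModelTurn],
    tools: Dict[str, Callable[..., str]],
    max_turns: int = 100,
) -> str:
    """A plain think / act / observe loop with nothing hidden between you and the model."""
    history: List[dict] = [{"role": "user", "content": task}]
    for turn in range(max_turns):
        reply = call_model(history)  # think
        print(f"[{turn}] THINK: {reply.thought}")
        history.append({"role": "assistant", "content": reply})
        if not reply.tool_calls:
            return reply.final_answer
        for call in reply.tool_calls:  # act; several calls in a row are allowed
            observation = tools[call.name](**call.args)
            print(f"[{turn}] ACT: {call.name}({call.args}) -> OBSERVE: {observation}")
            history.append({"role": "tool", "content": observation})  # observe
    return "stopped: turn budget exhausted"


# Usage with a scripted stand-in for the model.
script = iter([
    ModelTurn("Read the file first.", [ToolCall("read_file", {"path": "/repo/app.py"})]),
    ModelTurn("The fix is in place.", final_answer="done"),
])
answer = run_agent(
    "fix the bug",
    call_model=lambda history: next(script),
    tools={"read_file": lambda path: f"<contents of {path}>"},
)
print(answer)
```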

Would you rather have a framework of tools? It's maybe easier to get tools that are already well tuned, like the ones that you built. If I had an easy way to get the best tool from you, and you maintained the definition... Any thoughts on how you'd want to formalize tool sharing?

Yeah, I think that's something we're certainly interested in exploring, and I think there is space for general tools that would be very broadly applicable. But at the same time, most people building on these have much more specific things that they're trying to do. That might be useful for hobbyists and demos, but the ultimate end applications are going to be bespoke. And so we just want to make sure that the model's great at any tool that it uses. But it's certainly something we'll explore.

So everything bespoke: no frameworks, no anything.

Yeah, for now. I'd say the best thing I've seen is people building up good util functions, and then you can use those as building blocks.

Yeah, yeah. I have a utils file; my framework is basically def call_anthropic, and then I just put all the other utilities in there.

Utils, you know. Use it enough and it's a startup. At some point I'm kind of curious: is there a maximum number of turns that it took? What's the longest run?

I mean, it had basically infinite turns until it ran into the 200K context limit. I should look this up; I don't know. For some of the failed cases that eventually ran out of context, I mean, it was over one hundred turns. I'm trying to remember the longest successful run, but I think it was definitely over one hundred turns some of the time.

It's a coffee break.

Yeah. But certainly these things can take a lot of turns, and I think that's because some of these tasks are really hard and take many tries. If you think about a task that takes a human four hours to do, think about how many different files you read and how many times you edit a file in four hours. That's a lot more than one hundred.

Unless you have Twitter open. Yes, you get distracted. But if you had a lot more compute, what's the return on the extra compute now? If you had thousands of turns or whatever, how much better would it get?

Yeah, I don't know. And I think this is one of the open areas of research in general with agents: memory, and how do you have something that can do work beyond its context length if you're just purely appending. You mentioned earlier things like pruning bad paths.

I think there's a lot of interesting work around there. Can you just roll back, but summarize: hey, don't go down this path, there be dragons? Yeah, I think it's very interesting that you could have something that uses way more tokens without ever using more than 200K at a time. I think the biggest thing is: can you make the model losslessly summarize what it's learned from trying different approaches and bring that back? I think that's sort of the big challenge.

What about different models? You have Haiku, which is cheaper. What if I have Haiku do a lot of these smaller things and then pass the results back up?
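A minimal sketch of the roll-back-and-summarize idea: once the running history exceeds a token budget, older turns are replaced by a single summary and only the most recent turns are kept verbatim. `count_tokens` and `summarize` are hypothetical callables; `summarize` could be a cheaper model, as the question about Haiku suggests. Whether such a summary is lossless enough is exactly the open challenge described here, and the sketch does nothing to guarantee it.

```python
from typing import Callable, List


def compact_history(
    history: List[str],
    count_tokens: Callable[[str], int],
    summarize: Callable[[List[str]], str],
    budget: int,
    keep_recent: int = 4,
) -> List[str]:
    """Fold older turns into one summary once the history exceeds the token budget."""
    total = sum(count_tokens(message) for message in history)
    if total <= budget or len(history) <= keep_recent:
        return history
    split = len(history) - keep_recent
    older, recent = history[:split], history[split:]
    # The summary should record what was tried and what to avoid next time.
    summary = "Summary of earlier work: " + summarize(older)
    return [summary] + recent


history = [f"turn {i}: " + "token " * 10 for i in range(10)]
compacted = compact_history(
    history,
    count_tokens=lambda message: len(message.split()),  # crude stand-in tokenizer
    summarize=lambda older: f"{len(older)} earlier turns; approach A failed",
    budget=50,
)
print(compacted[0])
print(len(compacted))
```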

I think Cursor might have said that they actually have a separate model for file editing. I'm trying to remember; I think they were on the Lex Fridman podcast, where they said they have a bigger model write what the code should be and then a different model apply it. So I think there's a lot of interesting room for stuff like that.

Yeah, Fireworks.

But I think there are also really interesting things to do with paring down input tokens, especially when the model is trying to read something like a ten-thousand-line file. That's a lot of tokens, and most of it is actually not going to be relevant.

I think it would be really interesting to delegate that to Haiku: hey, read this file and just pull out the most relevant functions, and then Sonnet reads just those, and you save ninety percent on tokens. I think there's a lot of really interesting room for things like that. And again, we were just trying to do the simplest, most minimal thing and show that it works.

I'm really hoping that the agent community builds things like that on top of our models. That's, again, why we released these tools. We're not going to go and do lots more submissions to SWE-bench, try to prompt-engineer this, and build a bigger system. We want the ecosystem to do that on top of our models. So I think that's a really interesting one.
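The token-saving half of this idea can be approximated without a second model. In the sketch below, a deterministic stand-in replaces Haiku with Python's standard `ast` module: given a set of function names (which, in the setup described here, a small model would choose), it returns only those top-level definitions, so the larger model reads a fraction of the file. `extract_definitions` and the sample source are hypothetical, and decorators are not included in the extracted segments.

```python
import ast
from typing import Set


def extract_definitions(source: str, wanted: Set[str]) -> str:
    """Return only the top-level functions/classes whose names are in `wanted`."""
    tree = ast.parse(source)
    chunks = []
    for node in tree.body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            if node.name in wanted:
                chunks.append(ast.get_source_segment(source, node))
    return "\n\n".join(chunks)


source = '''
def parse(text):
    return text.split(",")


def render(items):
    return "\\n".join(items)


class Cache:
    pass
'''

excerpt = extract_definitions(source, {"parse"})
print(excerpt)
print(f"kept {len(excerpt)} of {len(source)} characters")
```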

It turns out, I think, you did run 3.5 Haiku with your tools, and it scored 40.6.

Yes, it did very well. Haiku itself is actually very smart, which is great, but we haven't done any experiments with a combination of the two models. I think one of the exciting things is that how well Haiku 3.5 did on SWE-bench shows that even our smallest, fastest model is very good at thinking agentically and working on hard problems. It's not just for writing simple text anymore.

I know you're not going to talk about it, but Sonnet isn't even supposed to be the best model. There's Opus, and it's kind of like we left it at Claude 3 Opus at some point. I'm sure the new Opus will come out, and if you had Opus plus SWE-agent on it, that sounds very, very good.

There is a run with SWE-agent plus Opus, but that's from the official SWE-bench guys. Yes, that was the... OK. Did you want to run it? I mean, you could just change the model name.

I think we didn't submit it, but I think we included it in our model card; we included the scores as a comparison. And actually, I think the new Sonnet and Haiku both outperform the original Opus.

Yeah, it's a little bit hard to find.

Yes. It's not an exciting score, so we didn't feel like we needed to put it on the benchmark.

We can cut over to computer use if we're okay with moving on to that topic. Anything else?

I think we're good. Anything else SWE-bench related?

It doesn't have to be just SWE-bench specifically; just your thoughts on building agents, because you are one of the few people who have topped this leaderboard with a coding agent. This is the state of the art. It's surprisingly not that hard to reach with some good principles, right? But there's obviously a ton of low-hanging fruit, which we covered. Just your thoughts: if you were to build a coding agent startup, what would be next?

I think the really interesting question for me, for all the startups out there, is this divergence between the benchmarks and what real customers will want. So I'm curious: maybe the next time you have a coding agent startup on the podcast, you should ask them what differences they're starting to see. I'm actually very curious what they'll say, because I feel like it's slowed down a little. I don't see startups submitting to SWE-bench that much anymore, because of the traces.

The traces. So we had Cosine on; they had something like fifty on SWE-bench Full, which is the hardest one, and they were rejected because they didn't want to submit their traces.

Yes. IP, you know.

Yeah. I see. Tomorrow we're talking to Bolt, which is a Claude customer. You guys actually published a case study with them. I assume you weren't involved with that, but they are very, very happy. It was one of the bigger launches of the year.

Yeah. So we actually happen to be sitting in Adept's former office. My take on this is that Anthropic shipped Adept as a feature.

Or as a beta feature. It's still a beta feature.

But yes, what was it like when you tried it for the first time? Was it obvious that Claude had reached the stage where it could do computer use?

It was somewhat of a surprise to me. I had actually been on vacation, and I came back and everyone was saying, computer use works. So it was this very exciting moment. I mean, after the first test, which was something like "go to Google", I think I tried to have it play Minecraft or something, and it actually installed and opened Minecraft. Well, this is pretty cool, I thought. So I was like, yeah, this thing can actually use the computer. It certainly is still beta, and there are certain things that it's not very good at yet, but I'm really excited.

I think most broadly, it's not just for new things that weren't possible before, but also a much lower-friction way to implement tool use. So, one anecdote from my days at Cobalt Robotics: we wanted our robots to be able to ride elevators, to go between floors and fully cover the building. The first way we did this was through API integrations with the elevator companies.

Some of them actually had APIs where we'd send a request and it would move the elevator. Each new company took about six months to integrate, because they were very slow. They didn't really care.

Yes. Even once we had it working with a company, they would have to literally go and install an API box on the elevator we wanted to use, and that would sometimes take six months. So it was very slow, and eventually we were like, okay, this is really slowing down all of our customer deployments.

And I was like, what if we just add an arm to the robot? So I added this little arm that can literally go and press the elevator buttons, using computer vision, and we could deploy that in a single day and have the robots use the elevators. At the same time, it was slower than the API.

It wasn't quite as reliable. Sometimes it would miss and have to try to press the button again, but it would get there. It was slower and a little bit less reliable. And I see this as an analogy for computer use: anything you can do with computer use today, you could probably do by writing tool use and integrating with APIs, hooked up to the language model.

But that's going to take a bunch of software engineering to write those integrations. With computer use, you just give the thing a browser that's logged into what you want to integrate with, and it's going to work immediately. And I see that reduction in friction as incredibly exciting. Imagine a customer support team: hey, you've got this customer support bot, but you need to integrate it with all these things, and you don't have any engineers on your customer support team. But if you can just give it a browser that's logged into the systems you need it to have access to, then suddenly, in one day, you could be up and running with a fully integrated customer service bot that can take all the actions you care about. So I think that's the most exciting thing for me about computer use: reducing the friction of integrations to almost zero.

Or farming in Minecraft. One more thing about this:

This is like the oldest question in robotics or self-driving: do you drive by vision, or do you have special tools? And vision is the universal tool to claim all tools.

There are tradeoffs, but there are situations in which vision will win. But, you know, on this week's podcast, the one we just put out, we had Stan Polu from Dust saying that he doesn't see a future where computer use is the significant workhorse. I think there could be a separation: maybe for the high-volume use cases you want APIs, and for the long tail you want computer use.

I totally agree. Or you'll prototype something with computer use, and then, hey, this is working, customers have adopted this feature, okay, let's go turn it into an API, and it'll be faster and use fewer tokens.

I'd be interested to see a computer use agent replace itself by figuring out the API and then just dropping out of the equation altogether.

You know, if I were running an RPA company, you would have the RPA scripting. RPA, for people listening, is robotic process automation, where you script things that always happen in the same sequence so you don't need a human in the loop. So basically what you need to do is train an LLM to code that script, and then you can naturally hand off from computer use to non-computer use.

Yes, or have some way to turn Claude's computer use actions into a saved script that you can then run repeatedly.

It would be interesting to record that.
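A sketch of turning recorded computer use actions into a replayable script, assuming a hypothetical, simplified action vocabulary (`click`, `type`, ...) serialized as JSON; it is not the computer use tool's actual action format. Blind replay is brittle if the screen layout changes, which is why a real RPA-style hand-off would also need checks between steps.

```python
import json
from dataclasses import asdict, dataclass
from typing import Callable, List, Optional


@dataclass
class Action:
    kind: str                   # illustrative: "click", "type", "key", ...
    x: Optional[int] = None     # screen coordinates for clicks
    y: Optional[int] = None
    text: Optional[str] = None  # text for typing actions


class Recorder:
    """Collects the actions an agent takes so they can be saved as a script."""

    def __init__(self) -> None:
        self.actions: List[Action] = []

    def record(self, action: Action) -> None:
        self.actions.append(action)

    def to_script(self) -> str:
        return json.dumps([asdict(a) for a in self.actions], indent=2)


def replay(script: str, execute: Callable[[Action], None]) -> int:
    """Re-run a saved script without the model in the loop; returns steps executed."""
    actions = [Action(**item) for item in json.loads(script)]
    for action in actions:
        execute(action)
    return len(actions)


recorder = Recorder()
recorder.record(Action("click", x=120, y=340))
recorder.record(Action("type", text="quarterly report"))
script = recorder.to_script()

executed: List[Action] = []
steps = replay(script, executed.append)
print(steps, executed[0])
```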

Why did you decide not to ship a sandbox harness for running computer use on your own machine? It's kind of, hey, please run it at your own risk.

You launched it with a VM or Docker, a Docker system.

But it's not for your actual computer, right? The Docker instance runs separately.

Right, it runs in Docker. I mean, the main reason for that is security. We don't know everything the model might do.

So we wanted to give it a sandbox rather than have people run it on their own computers, at least for our default experience. We really care about making the default safe; I think that's the best way for us to do it. And, I mean, very quickly people made modifications to let you run it on your own desktop, and that's fine.

Someone else can do that, but we don't want that to be the official, public way to run it. I would also say that from a product perspective, right now, because this is still in beta, I think a lot of the most useful use cases are in a sandbox; that's actually what you want. Something where, hey, it can't mess up anything in here; it only has what I give it. Also, if it's using your computer, you can't use your computer at the same time. I think you actually want it to have its own screen. Otherwise it's like you and another person pair programming on only one laptop.

Everyone should totally have a side laptop where computer-use Claude is doing its thing.

It's a better experience, unless there's something very specific you want it to do for you on your own computer.

It becomes like you're sort of shelling into a remote machine and, you know, maybe checking on it every now and then. I have fun memories of (half the audience is going to be too young to remember this) Citrix, the Citrix desktop experience, where you would remote into a machine that someone else was operating. And for a long time that was how you did enterprise computing.

Yes, it's coming back. Any other implications of computer use? You know, is it a fun demo, or is it the future of Anthropic?

I'm very excited about it. I think there's a lot of very repetitive work that computer use should be great for. I've seen some examples of people building coding agents that then also test the front end that they made. So I think it's very cool to use computer use to close the loop on a lot of things that right now a terminal-based agent can't do. I think that's...

Testing, exactly. Yeah.

And sort of front-end web testing is something I'm very excited about.

Yeah, I've seen Amanda also talking about this. This would be Amanda Askell, the head of Claude character. She goes on a lunch break and it generates research ideas for her. Giving it a name like "computer use" is very practical, as in you're supposed to do things, but maybe sometimes it's not about doing things but about thinking, and in the process of thinking, you're using the computer in some way. Solving SWE-bench, you should be allowed to use the internet, or you should be allowed to use the computer to solve it and use your vision and use whatever. We're shackling it with all these restrictions because we want to play nice for a benchmark, but really, you know, a full AI should be able to do all these things to think.

Yeah, it would definitely be reasonable to Google and search for things. Yeah, yeah. Pull inspiration.

Can we do a robotics corner before we wrap? People are always curious, especially with somebody who is not trying to hype their own company. What's the state of robotics? Underhyped? Overhyped?

Yeah, and I'll say these are my opinions, not Anthropic's. And again, I'm coming from a place of a burned-out robotics founder, so take everything with a grain of salt.

I'll start on the positives: there has really been incredible progress in the last five years that I think will be a big unlock for robotics. The first is general-purpose language models.

I mean, there's an old saying in robotics that if fully describing your task is harder than just doing the task, you can never automate it, because it's going to take more effort to even tell the robot how to do this thing than to just do it yourself. LLMs solve that. I no longer need to go exhaustively program in every little thing it could do. The thing just has common sense, and it's going to know how to make a Reuben sandwich.

I'm not going to have to go program that in, whereas before, with even the idea of a cooking robot, it was like, oh God, we're going to have a team of engineers hard-coding recipes for the long tail of everything. It would be a disaster. So I think that's one thing: bringing common sense really solves this huge problem of describing tasks.

The second big innovation has been diffusion models for path planning. A lot of this work came out of Toyota Research. There are a lot of startups working on this too, like Physical Intelligence (Pi), Chelsea Finn's startup out of Stanford.

And the basic idea here is using a little bit of... it may be more inspiration from diffusion rather than diffusion models themselves, but they're a way to basically learn end-to-end motion control, whereas previously all of robotics motion control was very hard-coded. Either you're programming in explicit motions, or you're programming in an explicit goal and using an optimization library to find the shortest path to it. This is now something where you just give it a bunch of demonstrations.

And again, just like with deep learning, it's basically learning from these examples what it means to go pick up a cup. And by doing this the way diffusion models are somewhat conditioned on text, you can have the same model learn many different tasks. Then the hope is that they start to generalize: if you've trained it on picking up coffee cups and picking up books, then when I say "pick up the backpack," it knows how to do that too, even though you've never trained it on that. That's kind of the holy grail here: you train it on five hundred different tasks, and that's enough to really get it to generalize to anything you would need.
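As a toy illustration of the idea, here is a sketch of diffusion-style sampling conditioned on a task label. Several assumptions apply: the task labels and 2-D "actions" are made up; the conditioning is a plain dictionary lookup standing in for text conditioning; and instead of a trained network it uses the exact denoiser of the empirical demonstration set (a weighted average of the demos) inside a deterministic DDIM-style update. Real diffusion policies train a neural network and denoise whole action sequences, so treat this only as a sketch of the sampling loop.

```python
import math
import random

# Hypothetical demonstrations: task label -> 2-D target positions from demos.
DEMOS = {
    "pick up cup": [(0.20, 0.50), (0.22, 0.48), (0.18, 0.52)],
    "pick up book": [(0.80, 0.10), (0.78, 0.12)],
}


def alpha_bars(steps=50, beta_start=1e-4, beta_end=0.2):
    """Cumulative products of (1 - beta_t) for a linear noise schedule."""
    out, prod = [], 1.0
    for t in range(steps):
        beta = beta_start + (beta_end - beta_start) * t / (steps - 1)
        prod *= 1.0 - beta
        out.append(prod)
    return out


def denoise(x, ab, demos):
    """Exact E[x0 | x_t] when the data are exactly the demos (no network)."""
    logits = [
        -sum((xi - math.sqrt(ab) * di) ** 2 for xi, di in zip(x, d)) / (2.0 * (1.0 - ab))
        for d in demos
    ]
    top = max(logits)  # subtract the max for a numerically stable softmax
    weights = [math.exp(lg - top) for lg in logits]
    total = sum(weights)
    return [sum(w * d[k] for w, d in zip(weights, demos)) / total for k in range(len(x))]


def sample_action(task, steps=50, seed=0):
    """Start from Gaussian noise and denoise step by step, conditioned on the task."""
    demos = DEMOS[task]  # conditioning: only this task's demonstrations are used
    abar = alpha_bars(steps)
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in demos[0]]
    for t in reversed(range(steps)):
        x0_hat = denoise(x, abar[t], demos)
        if t == 0:
            return x0_hat
        # Deterministic DDIM step: re-noise the clean estimate to the previous level.
        eps_hat = [(xi - math.sqrt(abar[t]) * x0) / math.sqrt(1.0 - abar[t]) for xi, x0 in zip(x, x0_hat)]
        x = [math.sqrt(abar[t - 1]) * x0 + math.sqrt(1.0 - abar[t - 1]) * e for x0, e in zip(x0_hat, eps_hat)]
    return x
```

Calling `sample_action("pick up cup")` always returns a blend of the cup demonstrations (a point inside their convex hull), never something from the book demos. A lookup-based condition like this cannot handle an unseen task such as "pick up the backpack"; that generalization is exactly what the learned, text-conditioned versions are hoping to achieve.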

I think that's still a big TBD, and these people are working on it.

They have measured some degree of generalization. But at the end of the day, it's also like LLMs: do you really care about the thing being able to do something that no one has put in the training data? For something like a home robot, there are going to be maybe a hundred things that people really want it to do, and you can just make sure there's good training data for those things. What you do care about then is generalization within a task: "I've never seen this particular coffee mug before; can I still pick it up?" And that the models do seem very good at.

So these are kind of the two big things going for robotics right now: LLMs for common sense, and diffusion-inspired path planning algorithms. I think this is very promising, but I think there's a lot of hype. And I think where we are right now is where self-driving cars were ten years ago.

I think we have very cool demos that work. I mean, ten years ago, you had videos of cars driving on the highway, driving on a street with a safety driver. But it's really taken a long time to go from there to where I took a Waymo here today, and even Waymo is only in SF and a few other cities. I think it takes a long time for these things to actually get everywhere and to get all the edge cases covered.

I think that for robotics, the limiting factor is going to be reliability. These models are really good at these demos of doing laundry or doing dishes, but if they only work ninety-nine percent of the time, that sounds good, yet it's actually really annoying. Humans are really good at these tasks.

Imagine if one out of every hundred dishes it washed, it broke. You would not want that robot in your house, and you certainly wouldn't want it in your factory if one of every hundred boxes it moves, it drops and breaks the things inside. So I think for these things to really be useful, they're going to have to hit a very, very high level of reliability, just like self-driving cars.
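The arithmetic behind why ninety-nine percent is "really annoying" can be made concrete. A minimal sketch, assuming each dish is an independent trial with the same success rate (a simplification; real failures are often correlated):

```python
def p_at_least_one_failure(success_rate: float, attempts: int) -> float:
    """Chance that at least one of `attempts` independent tries fails."""
    return 1.0 - success_rate ** attempts


def expected_failures(success_rate: float, attempts: int) -> float:
    """Average number of failures over `attempts` independent tries."""
    return (1.0 - success_rate) * attempts


for rate in (0.95, 0.99, 0.999):
    print(
        f"success {rate:.1%}: "
        f"P(at least one broken dish in 100) = {p_at_least_one_failure(rate, 100):.3f}, "
        f"expected broken = {expected_failures(rate, 100):.1f}"
    )
```

At ninety-nine percent, a hundred dishes give roughly a 63 percent chance of at least one broken dish; at ninety-nine point nine percent that drops to about 9.5 percent, which is why each extra "nine" matters so much.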

And I don't know how hard it's going to be for these models to move from ninety-five percent reliability to ninety-nine point nine. I think that's going to be the big thing. And I'm also a little skeptical of how good the unit economics of these things will be.

These robots are going to be very expensive to build. And if you're just trying to replace labor one-for-one, that kind of sets an upper cap on how much you can charge. And so, you know, it seems like it's not that great a business. I'm also worried about that for the self-driving car industry.

Do you see most of the applications actually replacing some of the older machinery, especially in manufacturing, which needs to be very precise? Even if it's off by just a few millimeters, it kind of screws up the whole thing, so being able to adjust at the edge would matter. Or do you think the net-new use cases may be the more interesting ones?

I think it'll be very hard to replace a lot of those traditional manufacturing robots, because everything relies on that precision. If you have a model that, again, can only get there ninety-nine percent of the time, you don't want one percent of your cars to have the weld in the wrong spot. That's going to be a disaster. A lot of manufacturing is all about getting rid of as much variance and uncertainty as possible.

And what about the hardware? A lot of my friends are working in robotics. One of the big issues is that sometimes you just have a servo that fails, and it takes a bunch of time to track down. Is that holding things back, or is it still the software anyway?

I think both. There's been a lot more progress in the software in the last few years, and I think a lot of the humanoid robot companies now are really trying to build amazing hardware. Hardware is just so hard.

It's something where, classically, you build your first robot and it works. Great. Then you build ten of them: five of them work, three of them work half the time, two of them don't work, and you built them all the same.

You don't know why. The real world just has this level of detail and differences that software doesn't have. Imagine if, of every for loop you wrote, some of them just didn't work.

Some of them were slower than others. How do you deal with that? Imagine if, in every binary you shipped to a customer, each of those for loops was a little bit different. It becomes just so hard to scale and to maintain the quality of these things. I think that's what makes hardware really hard: not building one of something, but repeatedly building something and making it work reliably. Again, you'll buy a batch of a hundred motors, and each of those motors will behave a little bit differently given the same input command.

This is your lived experience.

Yeah. And robotics is all about how you build something that's robust despite these differences.

Can't we get the tolerances down on everything?

Actually, everything.

I mean, one of my horror stories from Cobalt, and this was many years ago: we had a thermal camera on the robot that had a USB connection to the computer inside, which was, first of all, a big mistake. You're not supposed to use USB.

It is not a reliable protocol. It's designed so that if there are errors, the user can just unplug it and plug it back in. And so typically, USB devices are not designed to the same very high level of reliability you need, because they assume someone will just unplug and replug them.

I had heard this too, and I didn't listen, and I really wish I had. Anyway, at a certain point, a bunch of these thermal cameras started failing, and we couldn't figure out why. I asked everyone on the team, okay, what's changed? Did the software change around this node? Did the hardware design change? No.

And I was investigating all this stuff, looking at kernel logs, like what's happening with this thing. And finally, the procurement person was like, "Oh yeah, I found a new vendor for USB cables." And I was like, "Wait, you switched which vendor we're buying USB cables from?" And it's like, "Yeah, it's the same exact cable, just a dollar cheaper." And it turns out this was the problem. This new cable had slightly worse resistance or slightly worse EMI interference, and, you know, it worked most of the time, but one percent of the time these cameras would fail.

And we'd need to reboot a big part of the system. And it was all just because these two different USB cables, with the same exact spec, were slightly different. And so these are the kinds of things you deal with in hardware.

For listeners, we had an episode with Josh Albrecht of Imbue, where he talked about buying, you know, tens of thousands of GPUs, and some of them will just not do math.

Yeah, yes, that's the same thing.

Yes, you run some tests to find the bad batch, and then you return it to the sender, because those GPUs just won't do math.

Right. Yeah, yeah, this is the thing: the real world has this level of detail. There's Eric Jang, who did AI at Google and then joined 1X. I see him post on Twitter occasionally with complaints about hardware and supply chain. We know each other, and we joke occasionally that we've switched: I went from robotics into AI, and he went from AI into robotics. And I mean, it looks very, very promising. The TAM of the real world is unlimited, right? But it's also just a lot harder. And something I also tell people about why I'm working on software agents is that they're infinitely replicable.

They always work the same way, mostly.

What are you using, Python?

Yeah.

And this is the whole thesis. I'm also interested in... you dropped a little bit of a take that I want to make sure we don't lose: you're skeptical of self-driving as a business.

So I wanted to double-click on this a little bit, because I think that shouldn't get lost. We do have some public numbers from Waymo; they're pretty public with their stats. They're exceeding a hundred thousand Waymo trips a week.

If you assume a twenty-five-dollar average ride, that's a hundred and thirty million dollar run rate. At some point they will recoup their investment, right? What are we talking about here?
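The run-rate figure is simple arithmetic: weekly trips times average fare times 52 weeks. A minimal check, using only the numbers quoted in the conversation (100,000 trips a week and an assumed $25 average ride); note that this is gross fare revenue, not profit:

```python
WEEKS_PER_YEAR = 52


def annual_run_rate(trips_per_week: float, avg_fare_usd: float) -> float:
    """Annualize weekly gross fares: trips/week x fare x weeks/year."""
    return trips_per_week * avg_fare_usd * WEEKS_PER_YEAR


rate = annual_run_rate(100_000, 25.0)
print(f"${rate / 1e6:.0f}M per year")  # $130M per year
```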

Why the skepticism?

And again, I'm not an expert; I don't know their financials. Yeah.

I would say the thing I'm worried about is, compared to an Uber (I don't know how much an Uber driver takes home a year, but call that the revenue that a Waymo is going to be making in that same year), those cars are expensive. It's not about whether you can hit profitability; it's about your cash conversion cycle.
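One way to frame the cash-conversion worry is a simple payback period: how many years of per-vehicle margin it takes to earn back the vehicle's upfront cost. This is a swapped-in simplification, not the formal cash conversion cycle metric, and the function deliberately takes no real Waymo or Uber figures, since none are given in the conversation.

```python
import math


def payback_years(vehicle_cost: float, annual_revenue: float, annual_operating_cost: float) -> float:
    """Years until cumulative per-vehicle margin covers the upfront vehicle cost.

    Returns math.inf when the vehicle never earns a positive margin.
    """
    annual_margin = annual_revenue - annual_operating_cost
    if annual_margin <= 0:
        return math.inf
    return vehicle_cost / annual_margin
```

If annual revenue per car is capped at roughly what an Uber driver takes home, an expensive vehicle pushes the payback period out, and that capital stays tied up the whole time.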