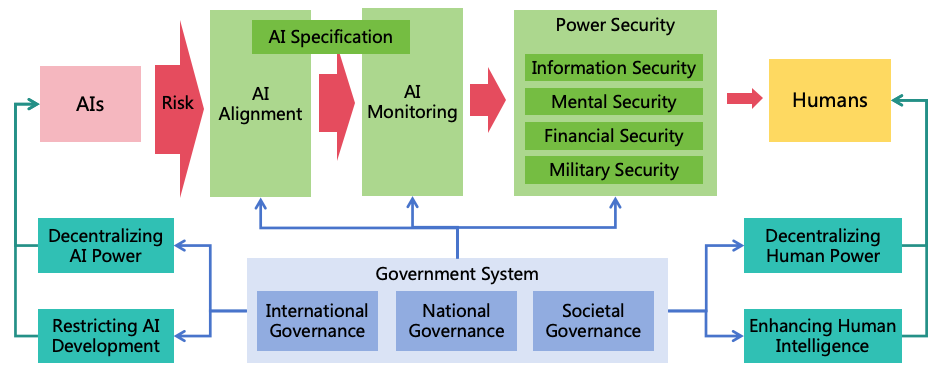

I have a lot of ideas about AGI/ASI safety. I've written them down in a paper and I'm sharing the paper here, hoping it can be helpful.

Title: A Comprehensive Solution for the Safety and Controllability of Artificial Superintelligence

Abstract: As artificial intelligence technology rapidly advances, it is likely to achieve Artificial General Intelligence (AGI) and Artificial Superintelligence (ASI) in the future. Highly intelligent ASI systems could be manipulated by malicious humans or independently evolve goals misaligned with human interests, potentially leading to severe harm or even human extinction. To mitigate the risks posed by ASI, it is imperative that we implement measures to ensure its safety and controllability. This paper analyzes the intellectual characteristics of ASI and three conditions for ASI to cause catastrophes (harmful goals, concealed intentions, and strong power), and proposes a comprehensive safety solution. The solution includes three risk prevention strategies (AI alignment [...]

The original text contained 1 image which was described by AI.

First published: December 18th, 2024

Source: https://www.lesswrong.com/posts/JWJnFvmB8ugi7bHQM/a-solution-for-agi-asi-safety

---

Narrated by TYPE III AUDIO.

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.