
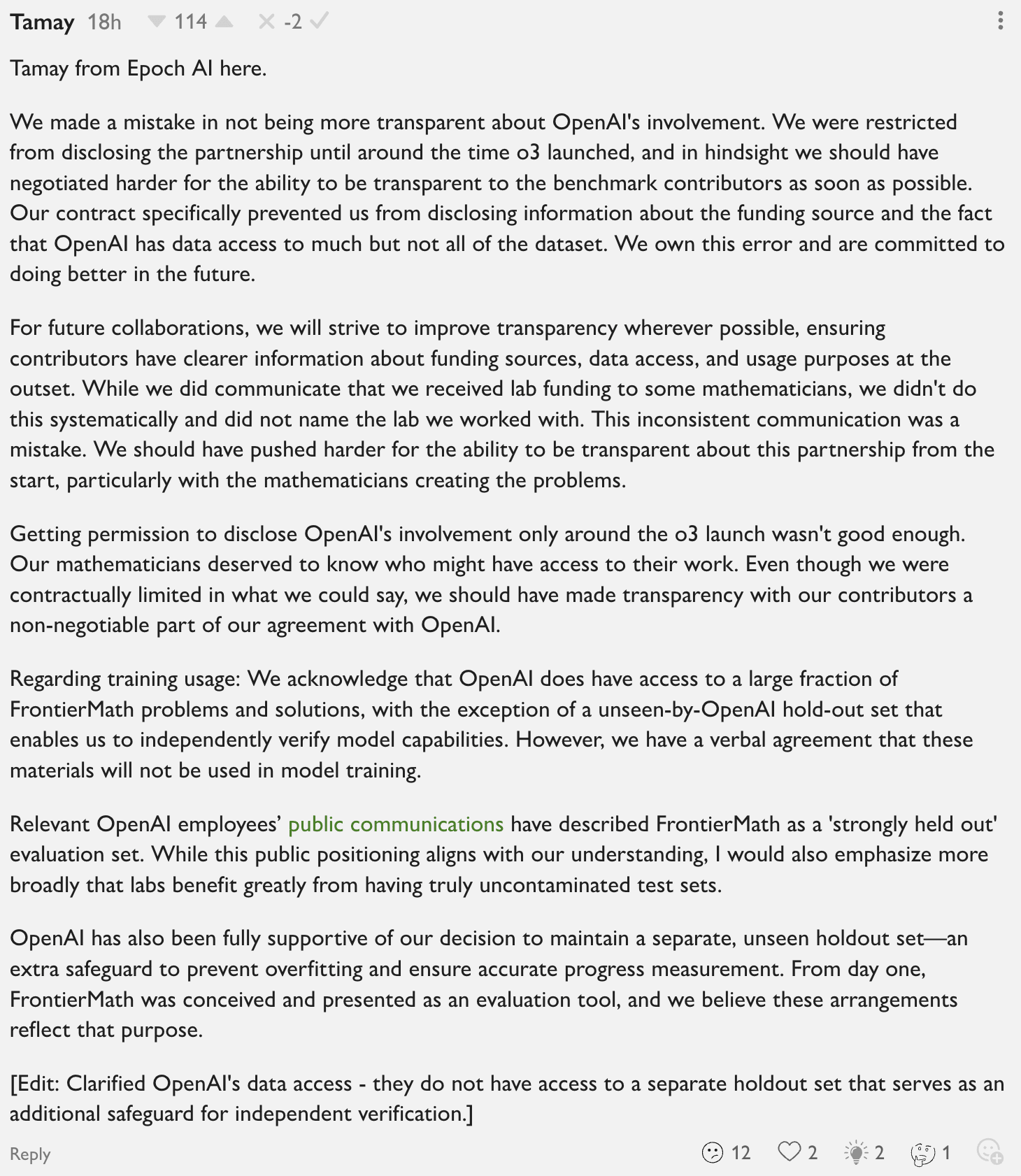

Recently, OpenAI announced their newest model, o3, which achieved massive improvements over the state of the art on reasoning and math. The highlight of the announcement was that o3 scored 25% on FrontierMath, a benchmark by Epoch AI of ridiculously hard, unseen math problems, of which previous models could only solve 2%. The events after the announcement, however, show that the whole affair went beyond OpenAI having the answer sheet before taking the exam. It was shady, it lacked transparency in every possible way, and it has way broader implications for AI benchmarking, evaluations, and safety.

These are the important events that happened in chronological order:

Epoch AI worked on building the most ambitious math benchmark ever, FrontierMath. They hired independent mathematicians to work on it, who were paid an undisclosed sum (at least, one unknown to me) for each problem they contributed. On Nov 7, 2024, they released the first version of their paper [...]

The original text contained 2 images, which were described by AI.

First published: January 19th, 2025

Source: https://www.lesswrong.com/posts/8ZgLYwBmB3vLavjKE/broader-implications-of-the-frontiermath-debacle

---

Narrated by TYPE III AUDIO.

Images from the article: