“Human takeover might be worse than AI takeover” by Tom Davidson

LessWrong (30+ Karma)


Epistemic status: sharing rough notes on an important topic because I don't think I'll have a chance to clean them up soon.

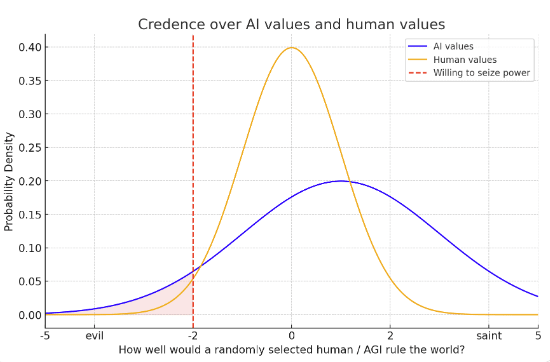

**Summary**

Suppose a human used AI to take over the world. Would this be worse than AI taking over? I think plausibly:

- In expectation, human-level AI will better live up to human moral standards than a randomly selected human. Because:
  - Humans fall far short of our moral standards.
  - Current models are much more nice, patient, honest and selfless than humans.
    - Though human-level AI will have much more agentic training for economic output, and a smaller fraction of HHH (helpful, honest, harmless) training, which could make them less nice.
  - Humans are "rewarded" for immoral behaviour more than AIs will be.
    - Humans evolved under conditions where selfishness and cruelty often paid high dividends, so evolution often "rewarded" such behaviour. And similarly, during lifetime learning we [...]

Outline:

- (00:12) Summary
- (02:20) AGI is nicer than humans in expectation
- (04:53) Conditioning on AI actually seizing power
- (08:18) Conditioning on the human actually seizing power
- (09:31) Other considerations

The original text contained 1 image which was described by AI.

First published: January 10th, 2025

Source: https://www.lesswrong.com/posts/FEcw6JQ8surwxvRfr/human-takeover-might-be-worse-than-ai-takeover

---

Narrated by TYPE III AUDIO.
