“LLM in-context learning as (approximating) Solomonoff induction” by Cole Wyeth

LessWrong (30+ Karma)

Shownotes Transcript

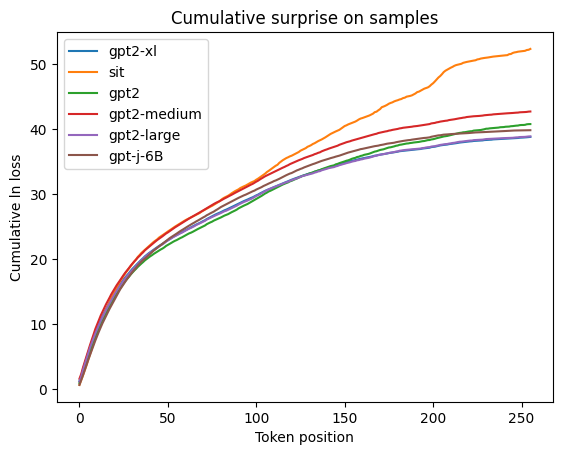

Epistemic status: One week empirical project from a theoretical computer scientist. My analysis and presentation were both a little rushed; some information that would be interesting is missing from plots because I simply did not have time to include it. All known "breaking" issues are discussed and should not effect the conclusions. I may refine this post in the future.

[This work was performed as my final project for ARENA 5.0.]

** Background**

I have seen several claims[1] in the literature that base LLM in-context learning (ICL) can be understood as approximating Solomonoff induction. I lean on this intuition a bit myself (and I am in fact a co-author of one of those papers). However, I have not seen any convincing empirical evidence for this model.

From a theoretical standpoint, it is a somewhat appealing idea. LLMs and Solomonoff induction both face the so-called "prequential problem," predicting a sequence [...]

Outline:

(00:40) Background

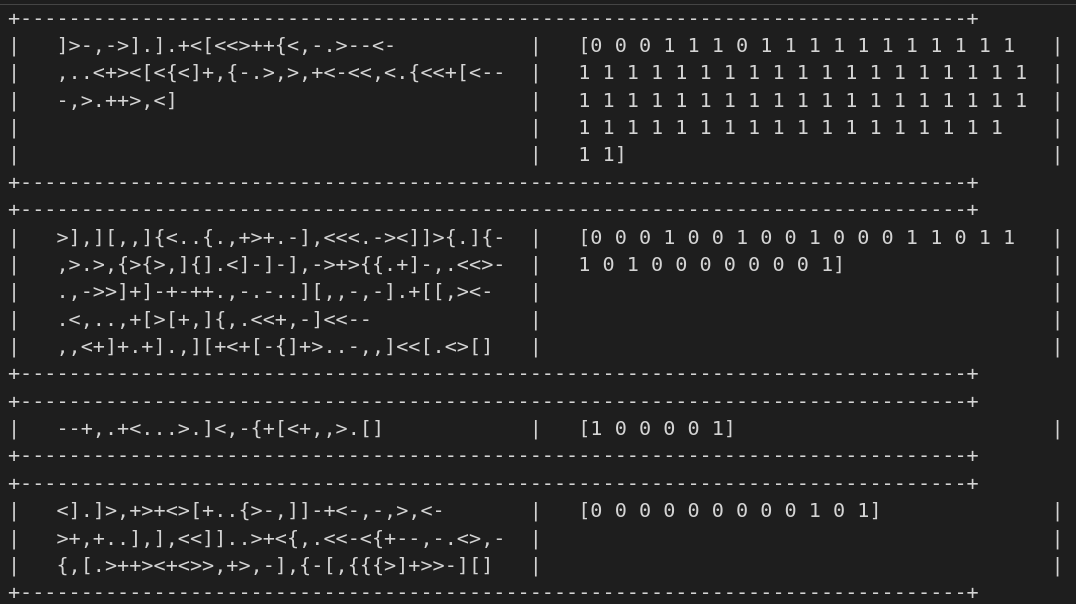

(03:35) Methodology

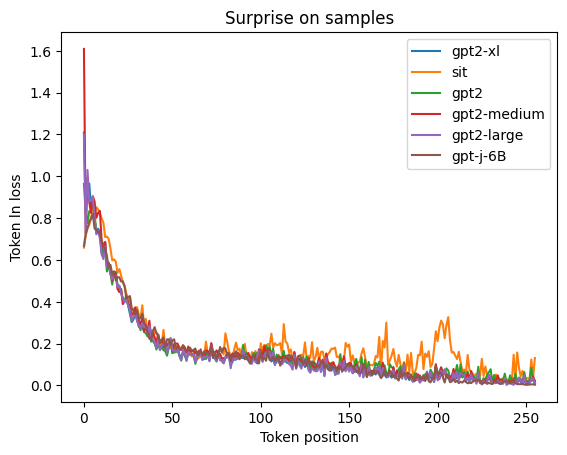

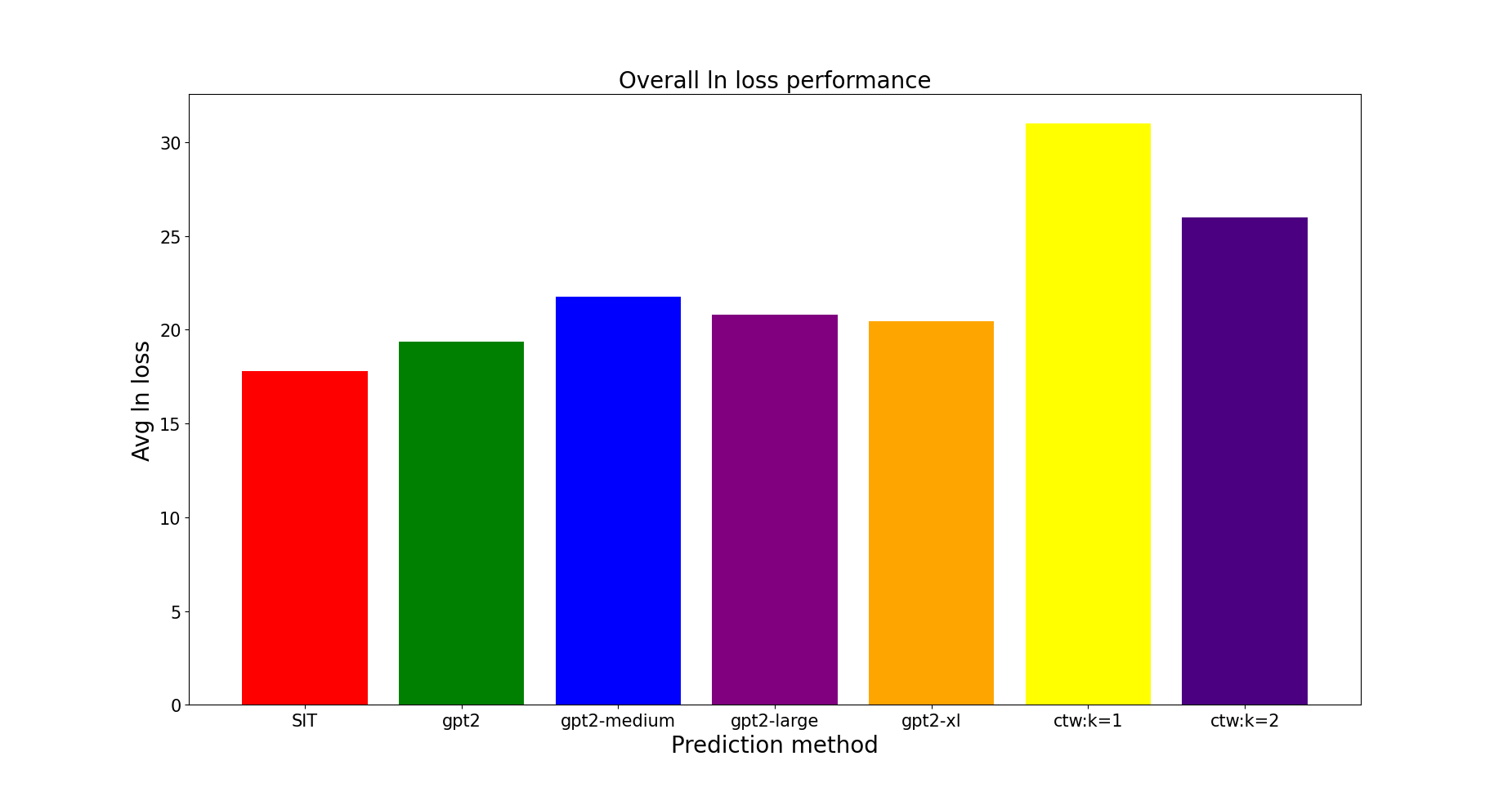

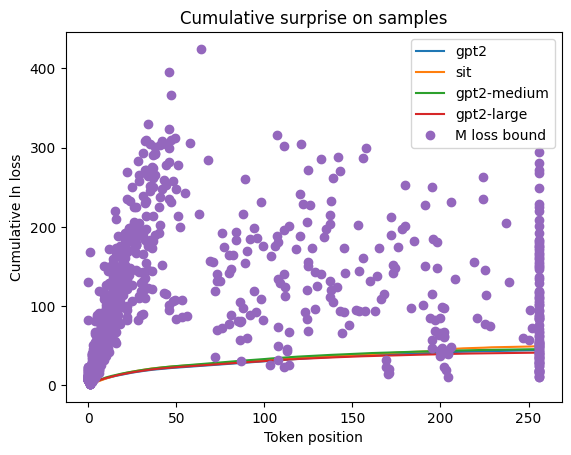

(04:56) Results

(07:21) Conclusions

The original text contained 4 footnotes which were omitted from this narration.

First published: June 5th, 2025

---

Narrated by TYPE III AUDIO).

Images from the article:

)

) )

) )

) )

) )

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts), or another podcast app.

)

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts), or another podcast app.