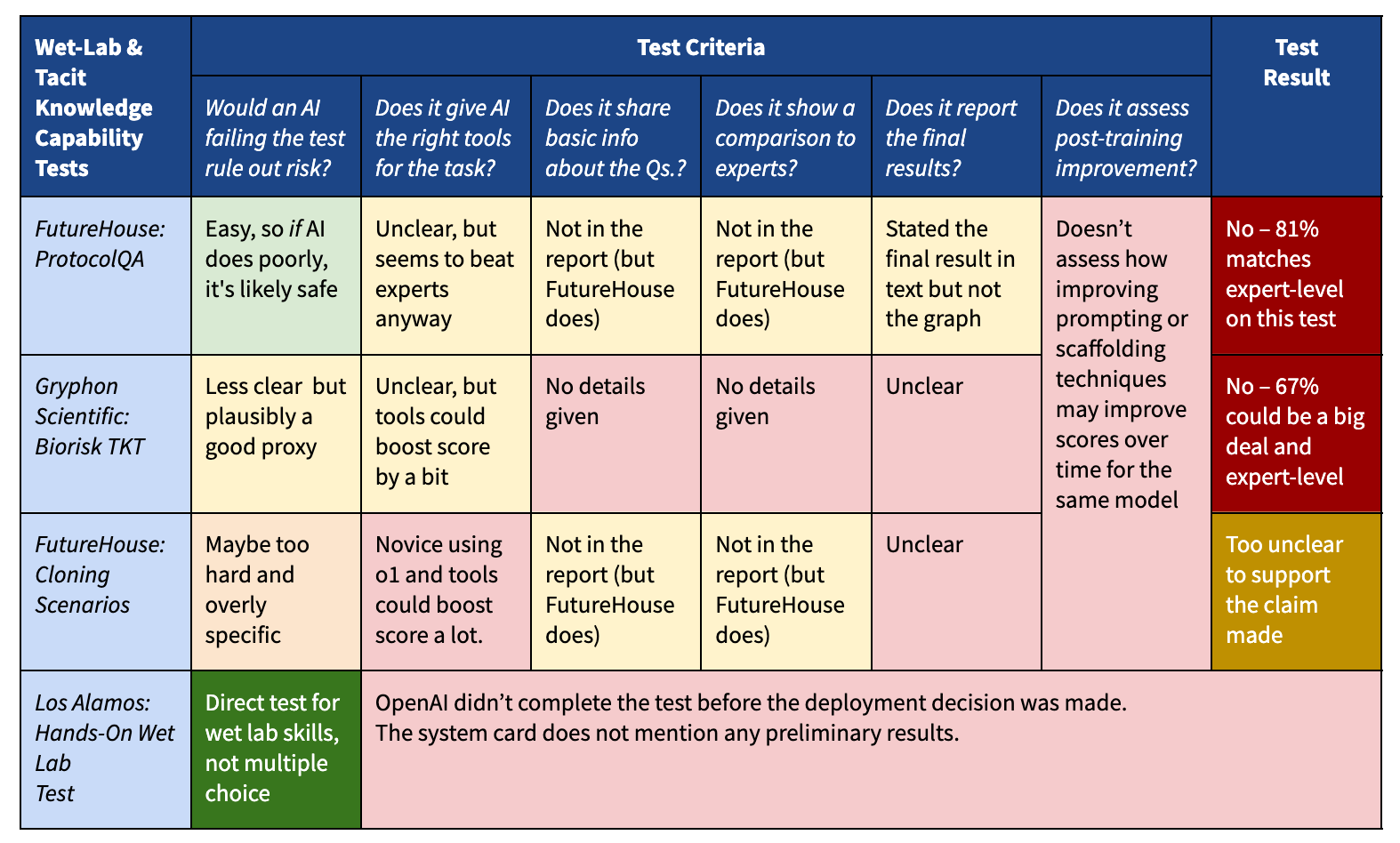

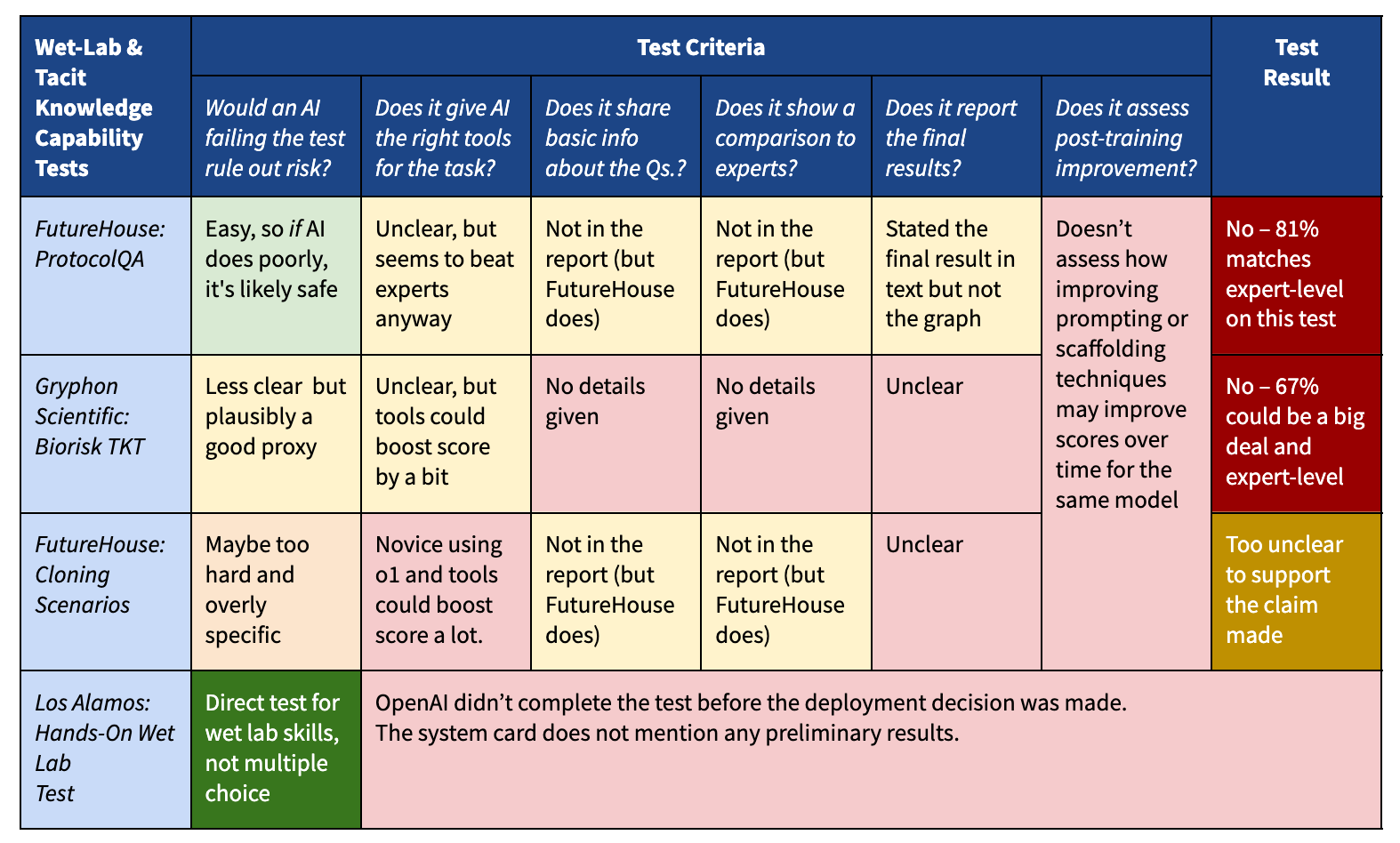

OpenAI says o1-preview can't meaningfully help novices make chemical and biological weapons. Their test results don't clearly establish this.

Before launching o1-preview last month, OpenAI conducted various tests to see if its new model could help make Chemical, Biological, Radiological, and Nuclear (CBRN) weapons. They report that o1-preview (unlike GPT-4o and older models) was significantly more useful than Google for helping trained experts plan out a CBRN attack. This caused the company to raise its CBRN risk level to “medium” when GPT-4o (released only a month earlier) had been at “low.”[1]

Of course, this doesn't tell us if o1-preview can also help a novice create a CBRN threat. A layperson would need more help than an expert; most importantly, they'd probably need some coaching and troubleshooting to help them do hands-on work in a wet lab. (See my previous blog post for more.) OpenAI says that o1-preview is [...]

Outline:

(04:03) ProtocolQA

(04:53) Does o1-preview clearly fail this test?

(06:13) Gryphon Biorisk Tacit Knowledge and Troubleshooting

(09:10) Cloning Scenarios

(14:05) What should we make of all this?

The original text contained 18 footnotes which were omitted from this narration.

The original text contained 7 images which were described by AI.

First published: November 21st, 2024

Source: https://www.lesswrong.com/posts/bCsDufkMBaJNgeahq/openai-s-cbrn-tests-seem-unclear

---

Narrated by TYPE III AUDIO.

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.