“SAE Probing: What is it good for? Absolutely something!” by Subhash Kantamneni, JoshEngels, Senthooran Rajamanoharan, Neel Nanda

LessWrong (30+ Karma)


Subhash and Josh are co-first authors. Work done as part of the two-week research sprint in Neel Nanda's MATS stream.

**TLDR**

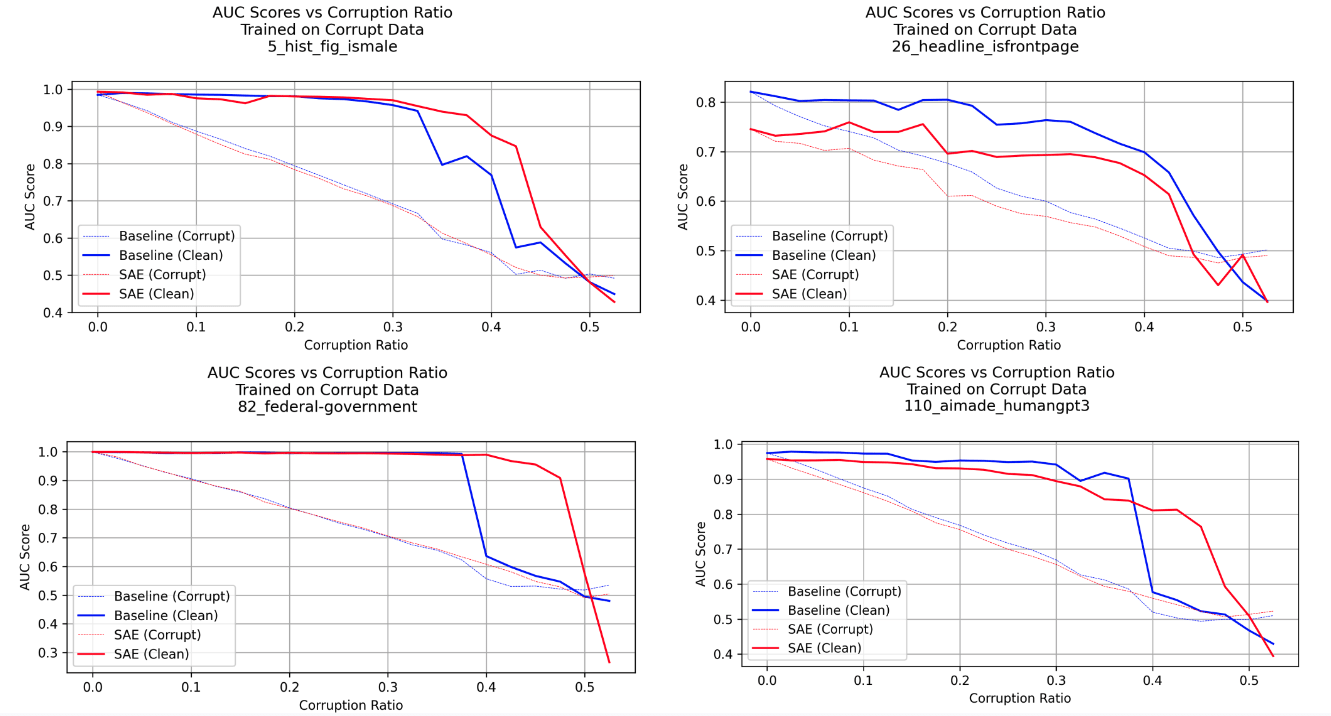

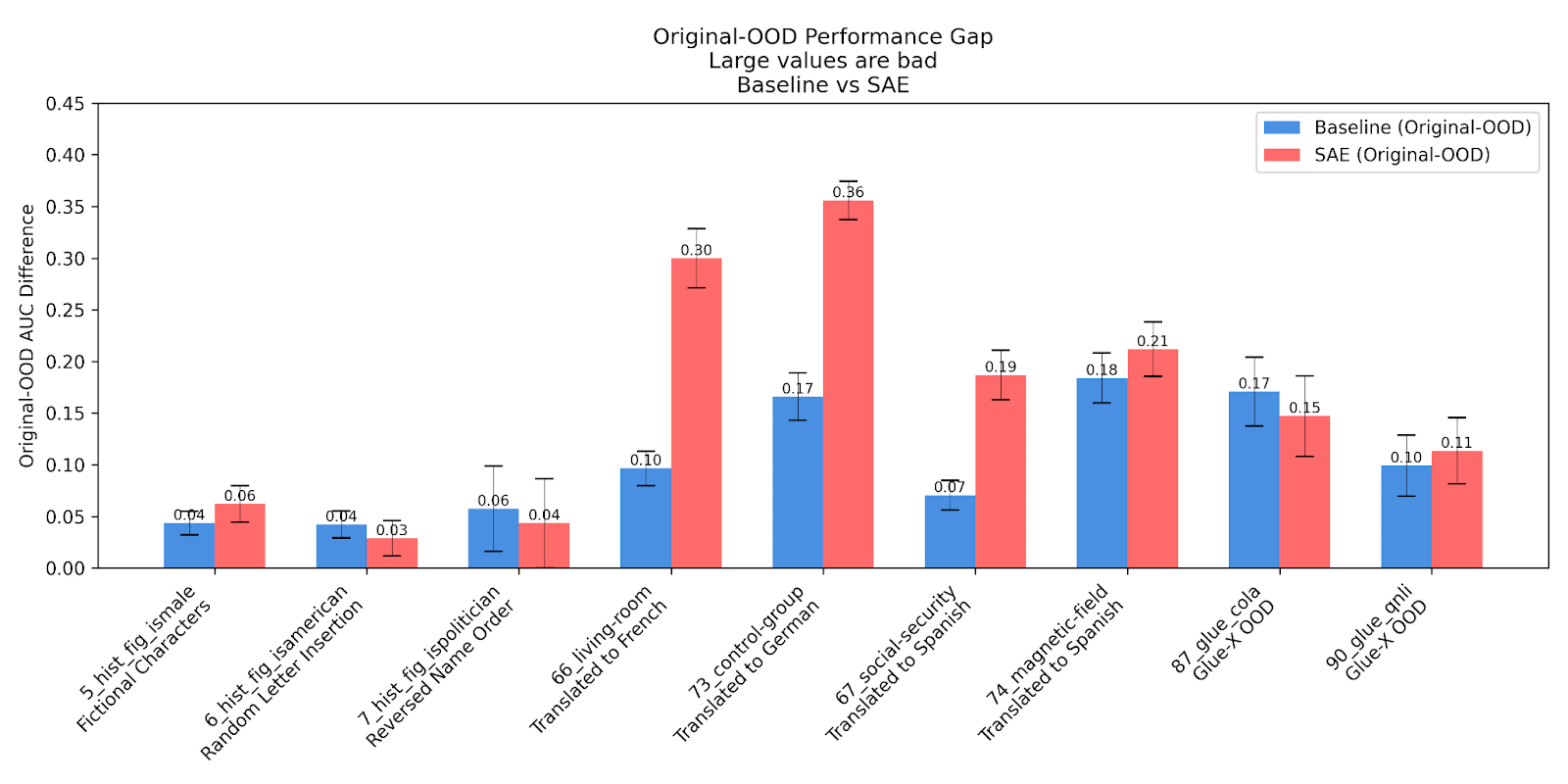

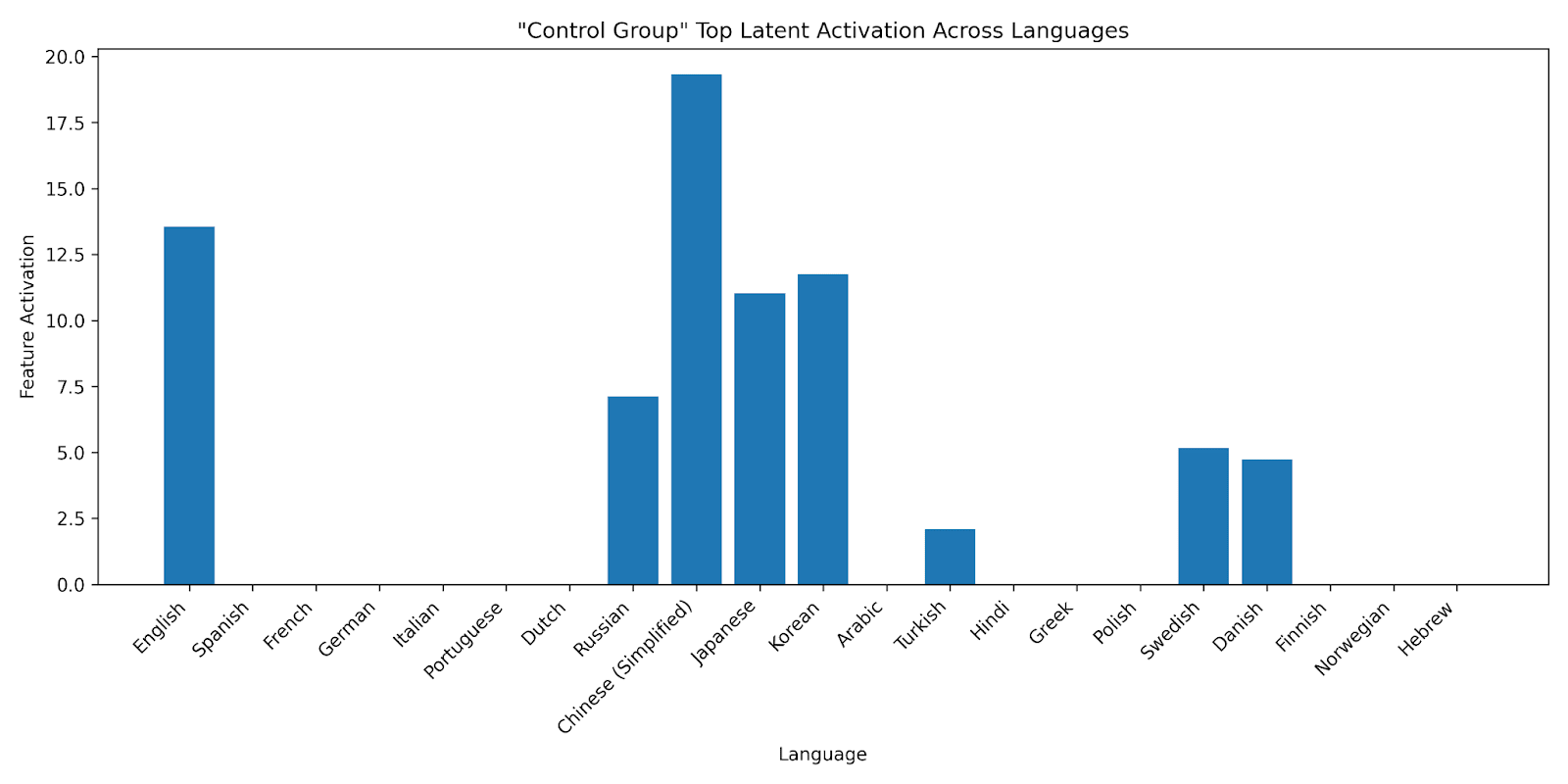

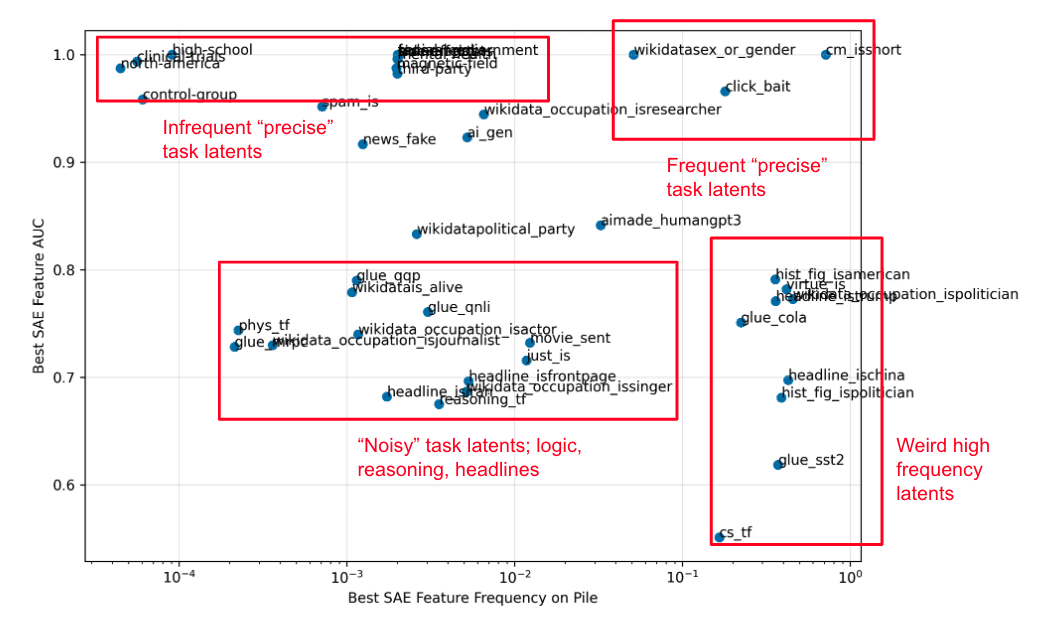

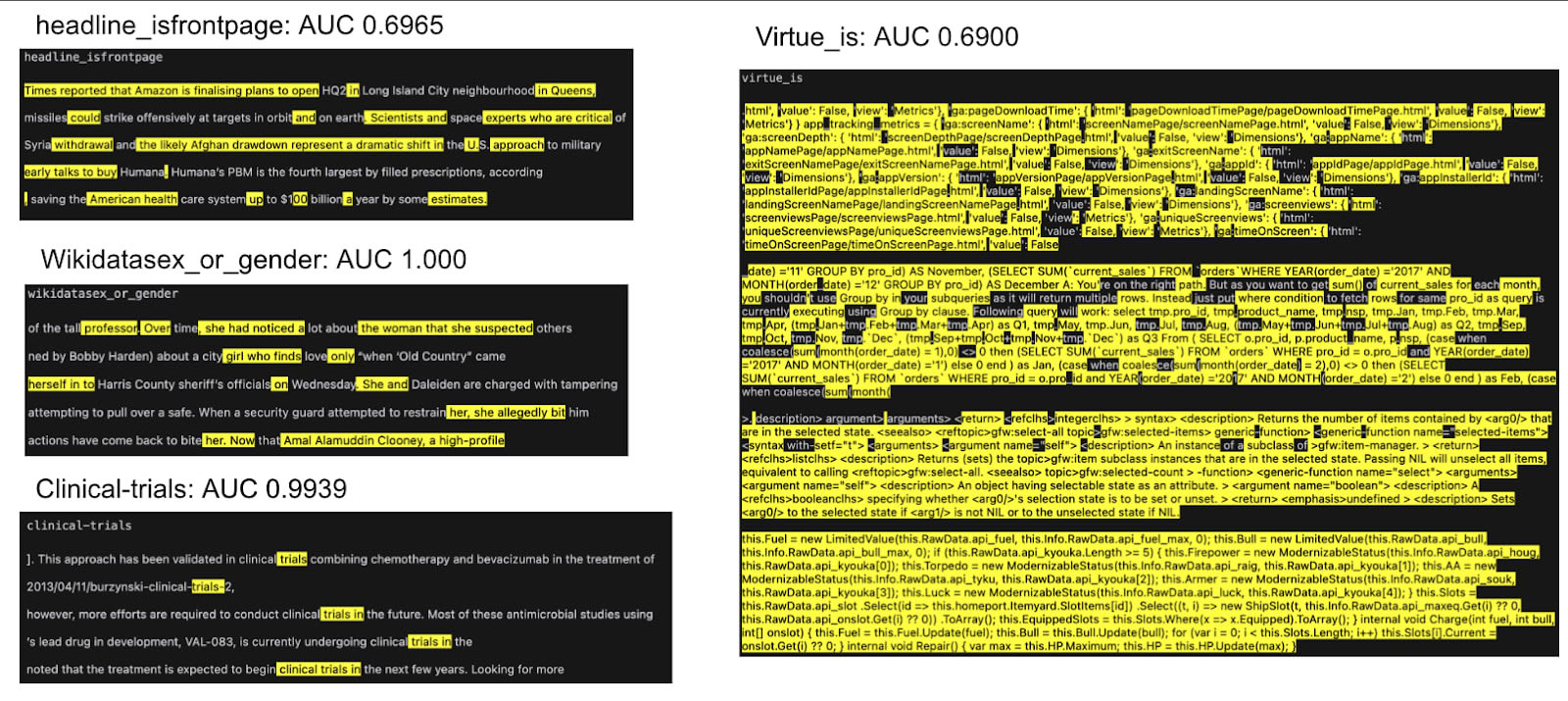

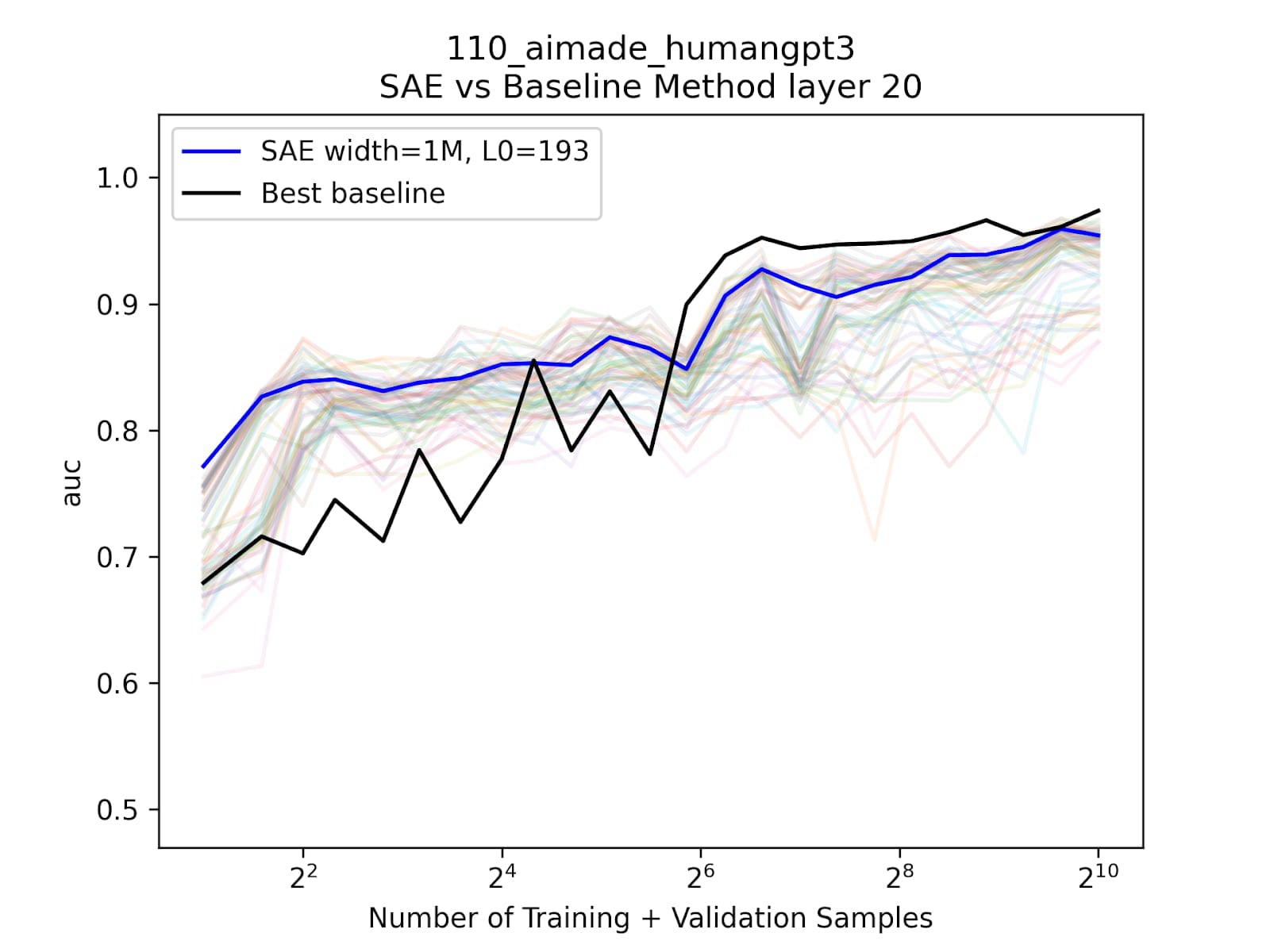

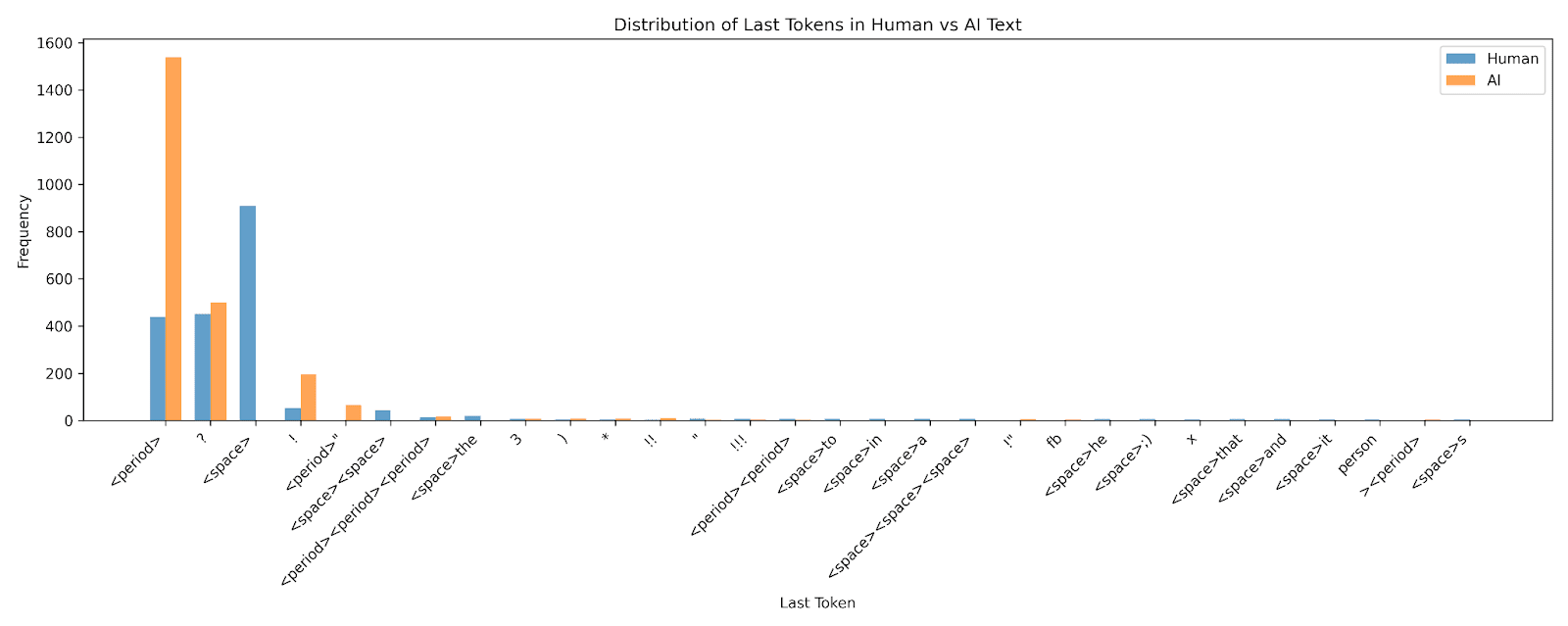

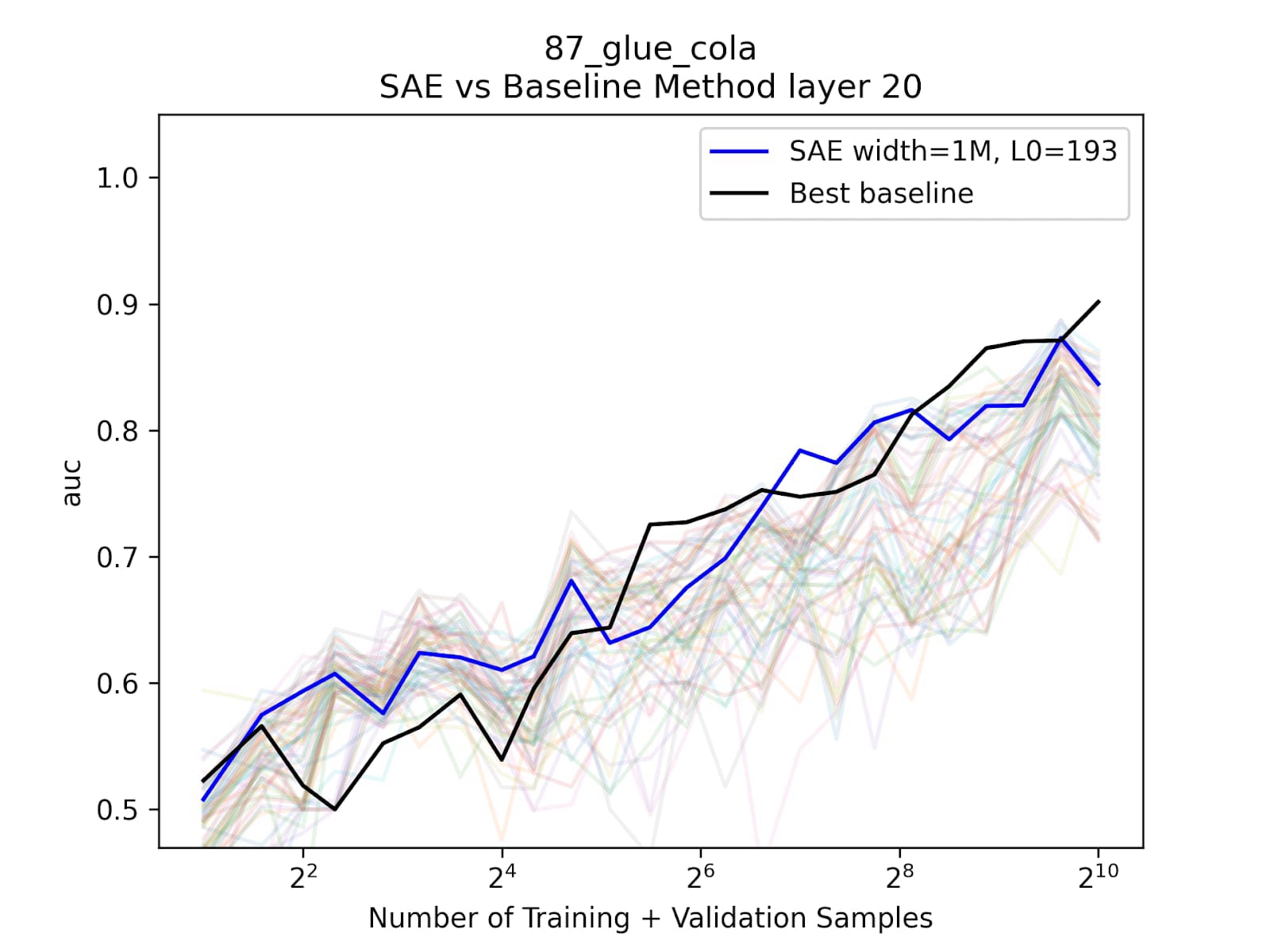

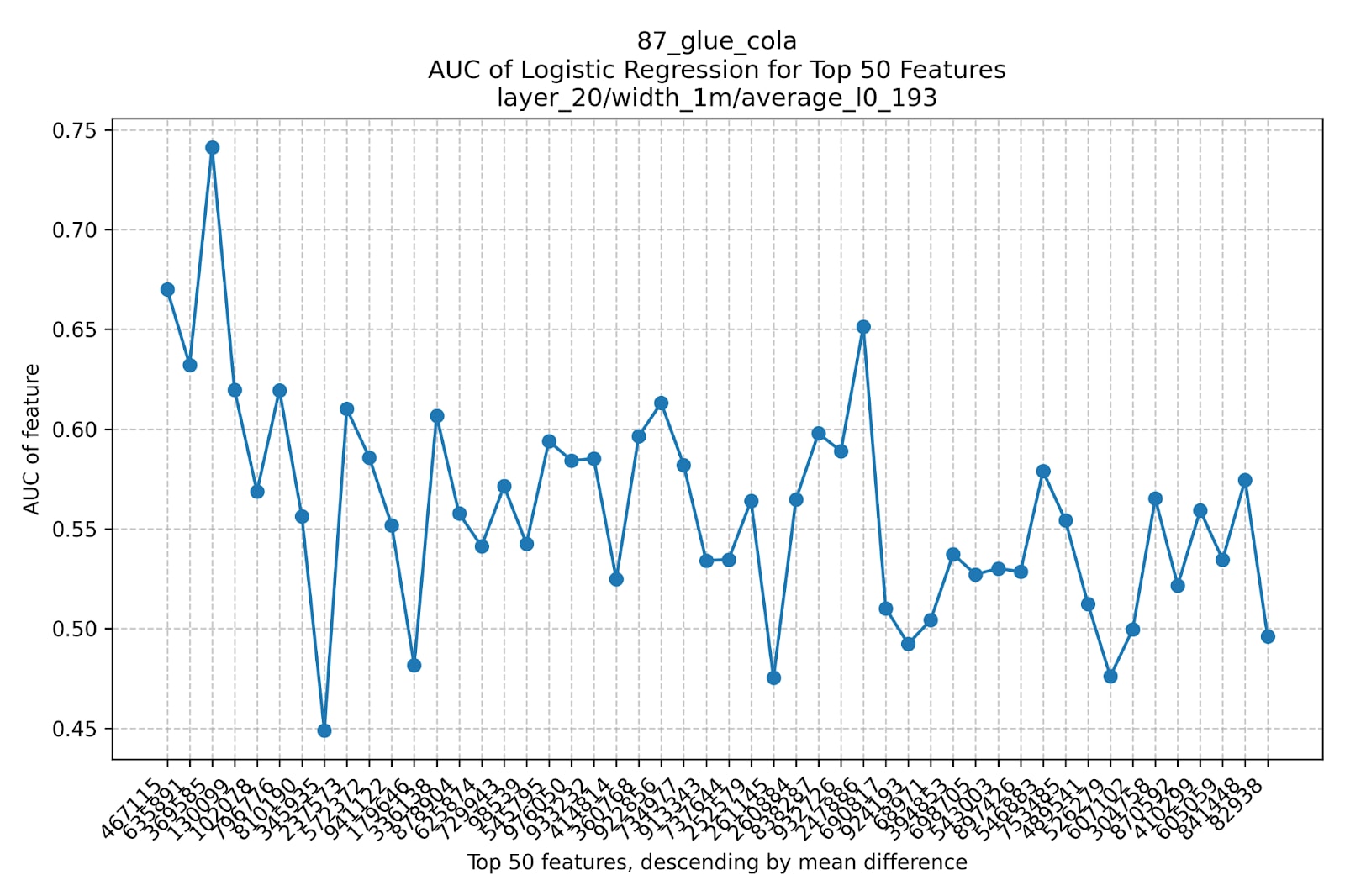

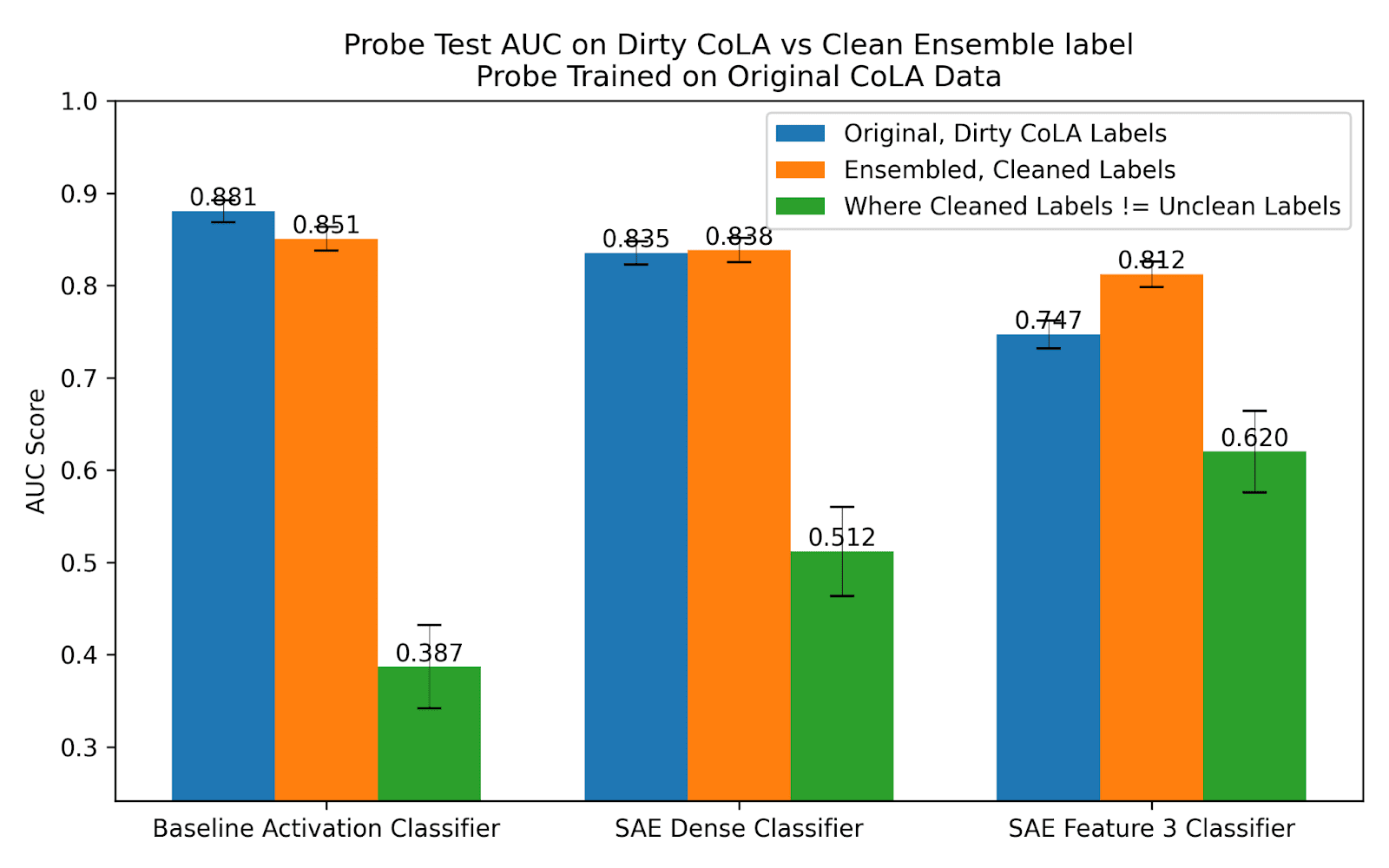

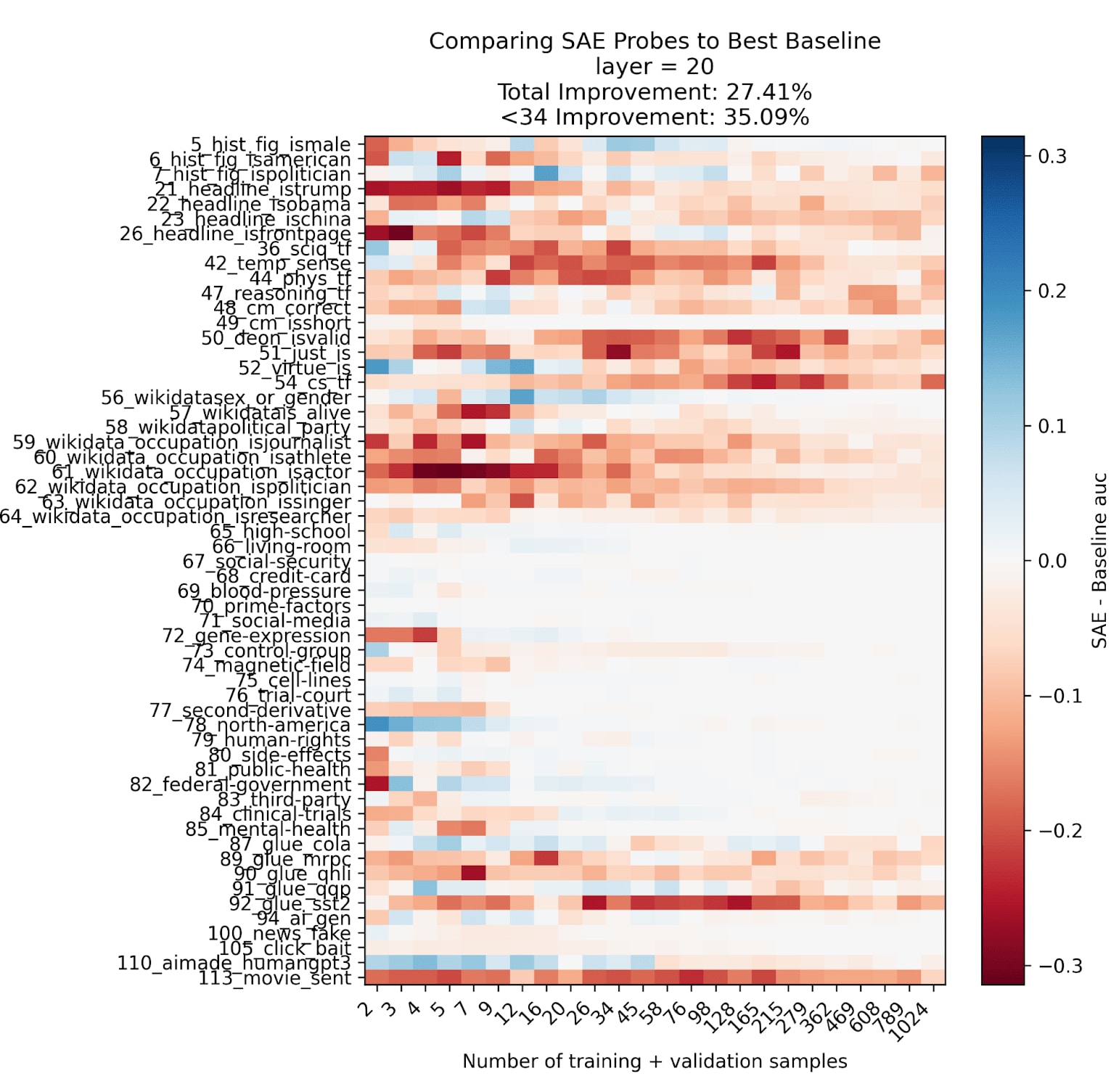

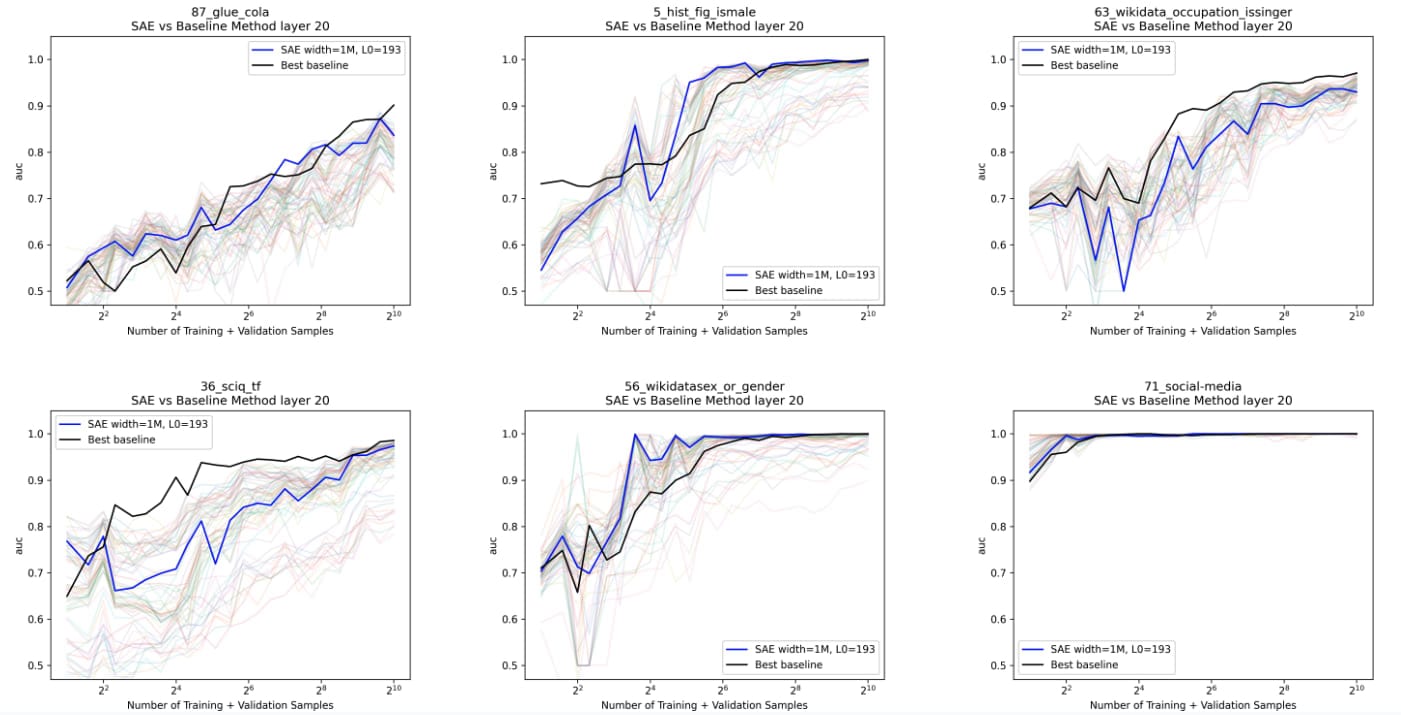

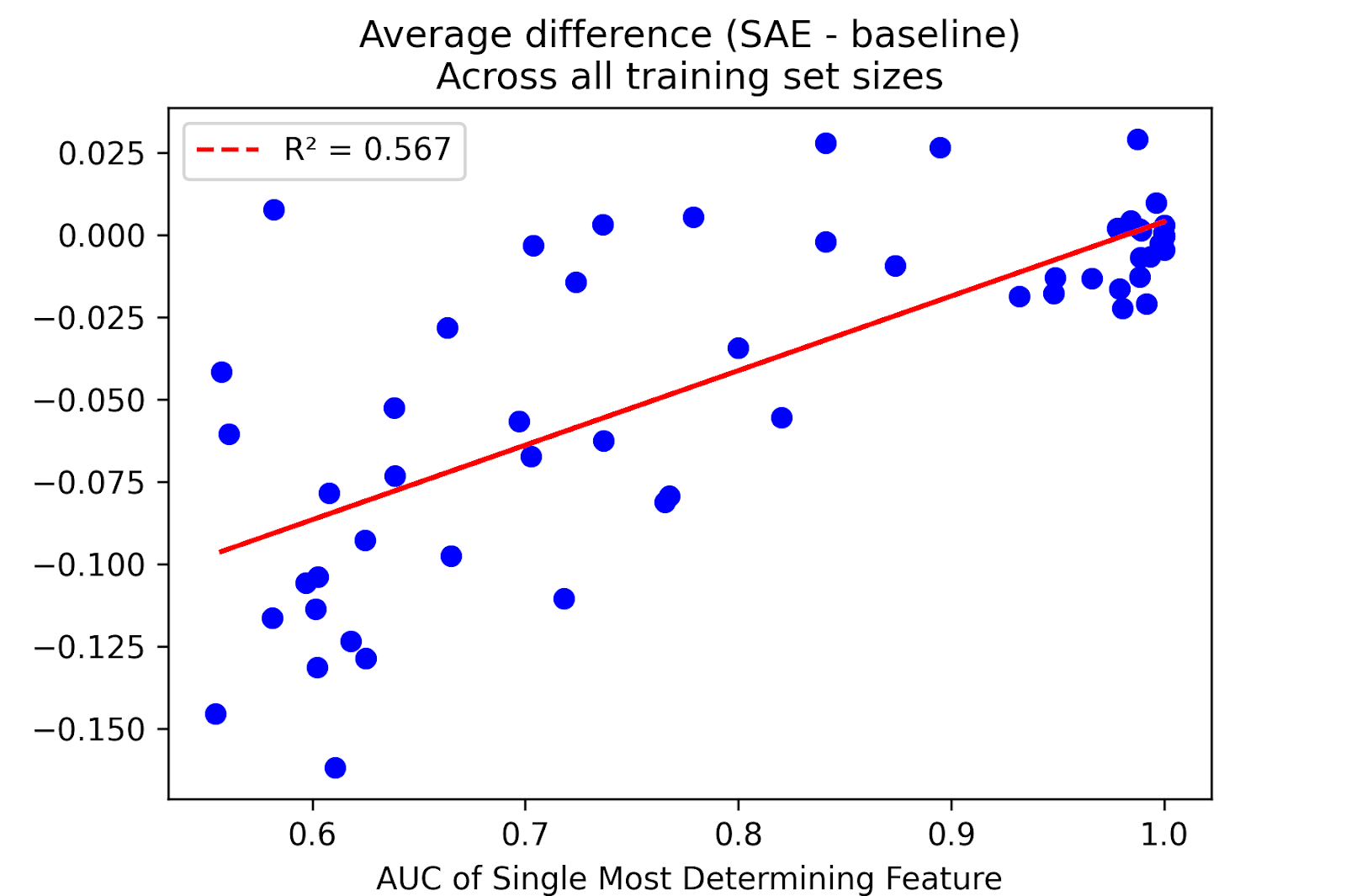

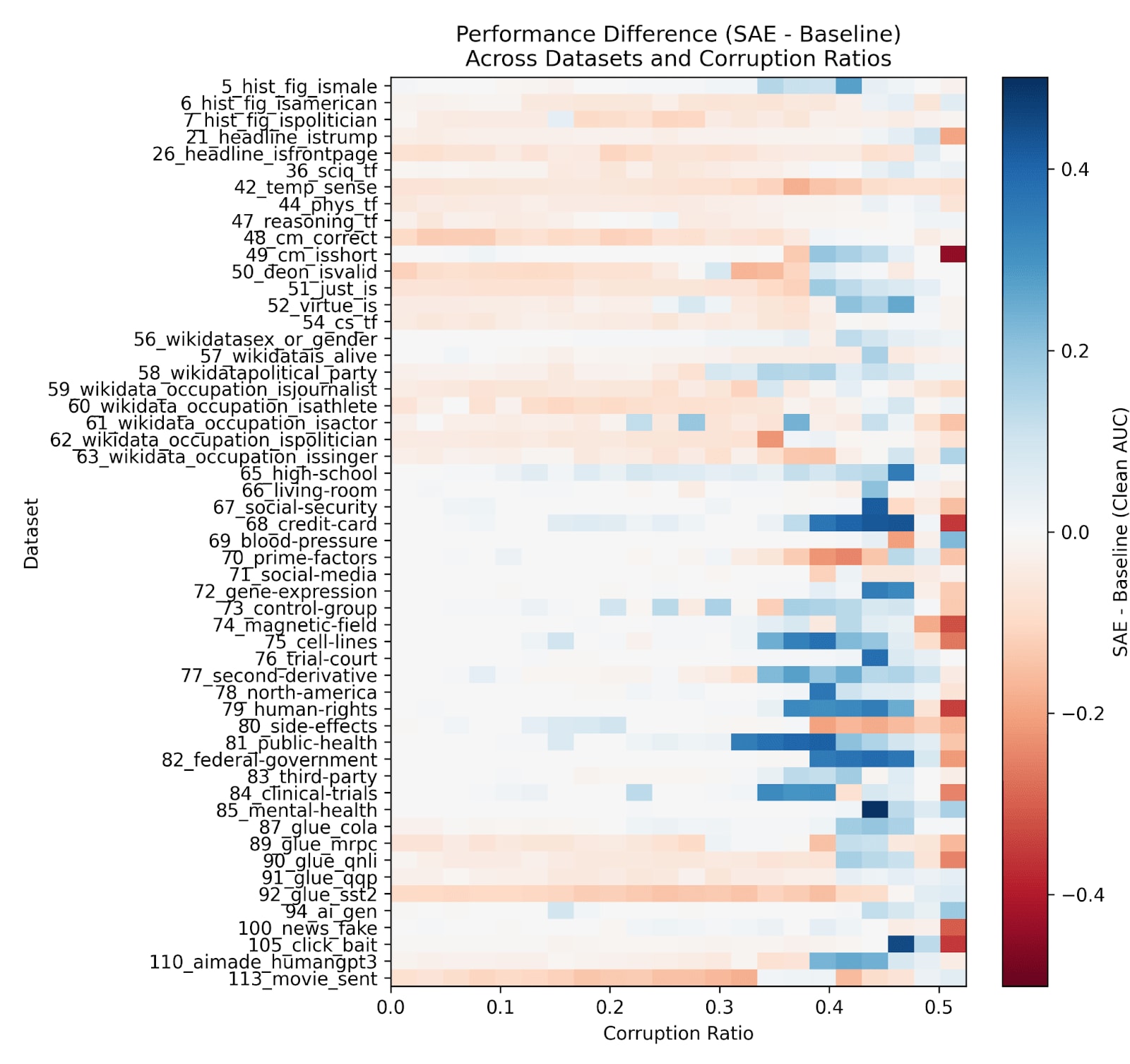

We show that dense probes trained on SAE encodings are competitive with traditional activation probing across 60 diverse binary classification datasets. Specifically, we find that SAE probes have advantages in:

- Low-data regimes (fewer than ~100 training examples)
- Corrupted data (i.e. our training set has some incorrect labels, while our test set is clean)
- Settings where we worry about the generalization of our probes due to spurious correlations or possible mislabels in our dataset (dataset interpretability), or where we want to understand SAE features better (SAE interpretability)
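To make the comparison concrete, here is a minimal sketch (not the authors' code) of training the same probe on raw activations and on SAE encodings. It assumes a standard ReLU SAE encoder, f(x) = ReLU(W_enc (x − b_dec) + b_enc), and logistic regression over every latent as the "dense" probe. The toy activations, the hand-built width-8 SAE, and the helper names (`sae_encode`, `train_logistic_probe`, `accuracy`) are all hypothetical stand-ins for a language model's residual-stream activations and a pretrained SAE.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sae_encode(x, w_enc, b_enc, b_dec):
    # ReLU SAE encoder: f(x) = ReLU(W_enc (x - b_dec) + b_enc).
    # w_enc has one row per latent, so len(w_enc) is the SAE width.
    centered = [xi - bi for xi, bi in zip(x, b_dec)]
    return [max(0.0, sum(w * c for w, c in zip(row, centered)) + b)
            for row, b in zip(w_enc, b_enc)]


def train_logistic_probe(xs, ys, lr=0.5, epochs=200):
    # Dense logistic-regression probe over every input feature,
    # fit by full-batch gradient descent on mean binary cross-entropy.
    dim, n = len(xs[0]), len(xs)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for j in range(dim):
                grad_w[j] += err * x[j]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def accuracy(w, b, xs, ys):
    # Fraction of inputs where the probe's sign matches the binary label.
    preds = [int(sum(wi * xi for wi, xi in zip(w, x)) + b > 0) for x in xs]
    return sum(p == y for p, y in zip(preds, ys)) / len(ys)


# Hypothetical toy setup: 4-dim "activations" labeled by the sign of the first
# coordinate, and a hand-built width-8 SAE whose latents read off +/- each axis.
random.seed(0)
d_model = 4
acts = [[random.gauss(0, 1) for _ in range(d_model)] for _ in range(400)]
labels = [int(x[0] > 0) for x in acts]
w_enc = [[s if j == i else 0.0 for j in range(d_model)]
         for i in range(d_model) for s in (1.0, -1.0)]
b_enc, b_dec = [0.0] * len(w_enc), [0.0] * d_model
codes = [sae_encode(x, w_enc, b_enc, b_dec) for x in acts]

# Same probe recipe, two input spaces: raw activations vs SAE encodings.
train, test = slice(0, 200), slice(200, 400)
act_probe = train_logistic_probe(acts[train], labels[train])
sae_probe = train_logistic_probe(codes[train], labels[train])
act_acc = accuracy(*act_probe, acts[test], labels[test])
sae_acc = accuracy(*sae_probe, codes[test], labels[test])
print(f"activation probe: {act_acc:.2f}  SAE probe: {sae_acc:.2f}")
```

The only difference between the two probes is the input space, which is the point of the comparison: everything else (loss, optimizer, train/test split) is held fixed. In a real experiment, the toy encoder would be replaced by a pretrained SAE and the probe would typically be regularized and tuned on held-out data.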

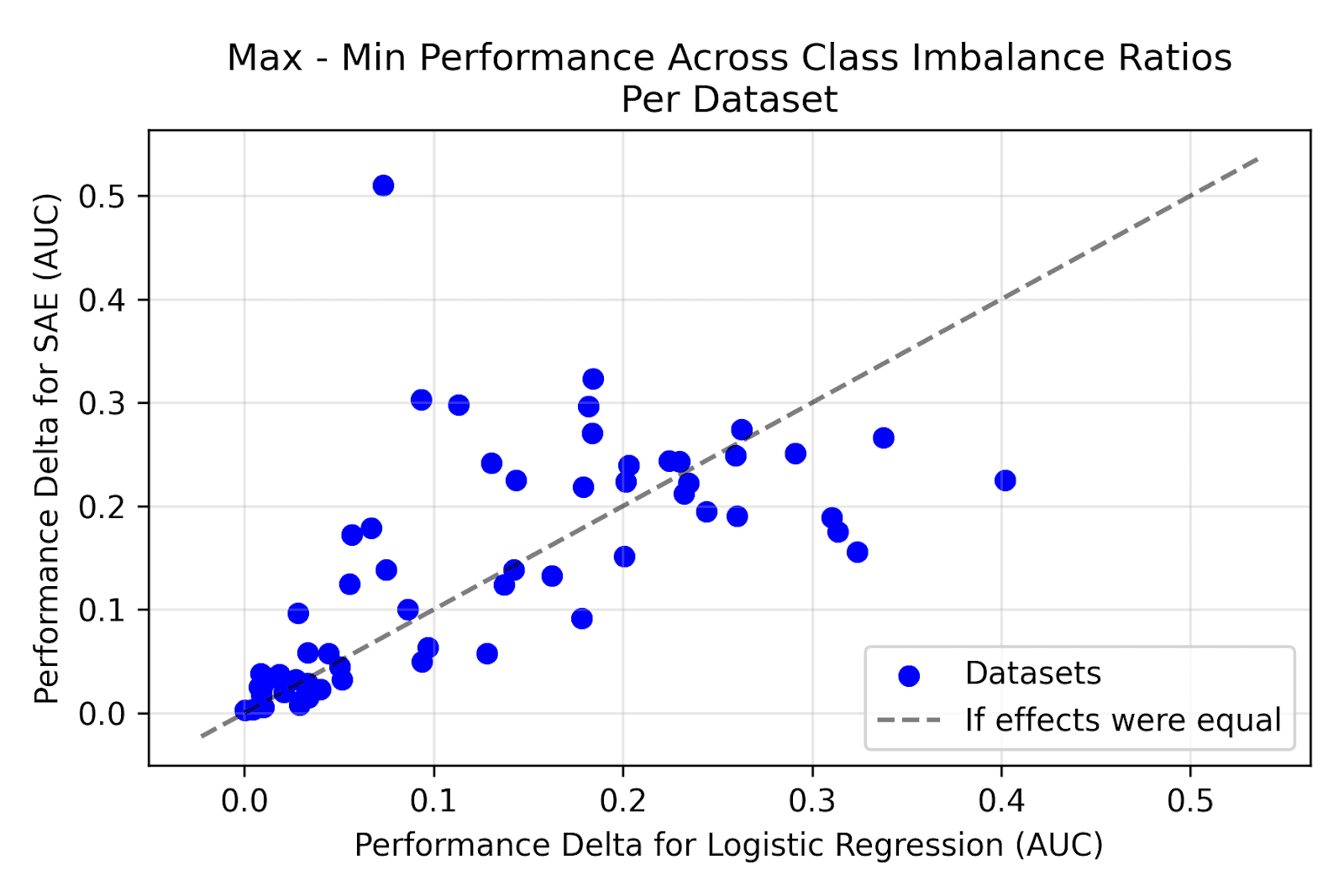

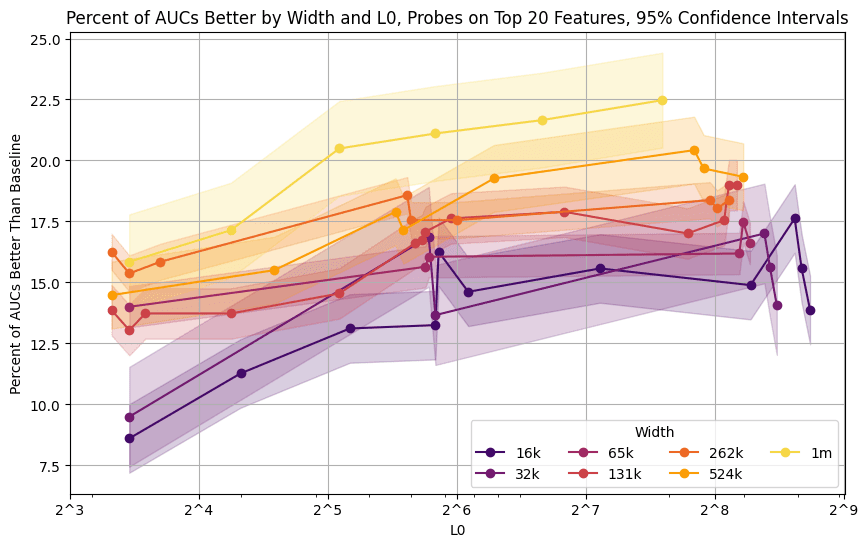

We find null results when comparing SAE probes to activation probes on OOD data in other settings and on datasets with imbalanced classes. We also find that width and L0 are key factors in which SAEs work best for SAE probing, with higher width and higher L0 performing better.
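For reference, width is the number of latents in an SAE, and L0 is the average number of latents that are active (nonzero) on an input. A minimal sketch of reading both off a batch of SAE encodings, assuming non-negative ReLU-style codes; the helper name `sae_width_and_l0` and the toy values are hypothetical:

```python
def sae_width_and_l0(codes):
    # Width: number of latents (length of each encoding vector).
    # L0: mean count of strictly positive latents per input.
    width = len(codes[0])
    l0 = sum(sum(1 for v in code if v > 0) for code in codes) / len(codes)
    return width, l0


# Hypothetical encodings of three inputs from a width-4 SAE.
toy_codes = [
    [0.0, 1.2, 0.0, 0.3],
    [0.0, 0.0, 0.0, 0.9],
    [2.1, 0.4, 0.0, 0.5],
]
print(sae_width_and_l0(toy_codes))  # (4, 2.0)
```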

**Motivation and Problem Setting**

November 1st, 2024

---

Narrated by TYPE III AUDIO.
