Machine learning at the edge: TinyML is getting big. Featuring Qualcomm Senior Director Evgeni Gousev, Neuton CTO Blair Newman and Google Staff Research Engineer Pete Warden

Orchestrate all the Things


Being able to deploy machine learning applications at the edge is the key to unlocking a multi-billion-dollar market. TinyML is the art and science of producing machine learning models frugal enough to work at the edge, and it's seeing rapid growth.

Edge computing is booming. Although the definition of what constitutes edge computing is a bit fuzzy, the idea is simple: take compute out of the data center and bring it as close as possible to where the action is.

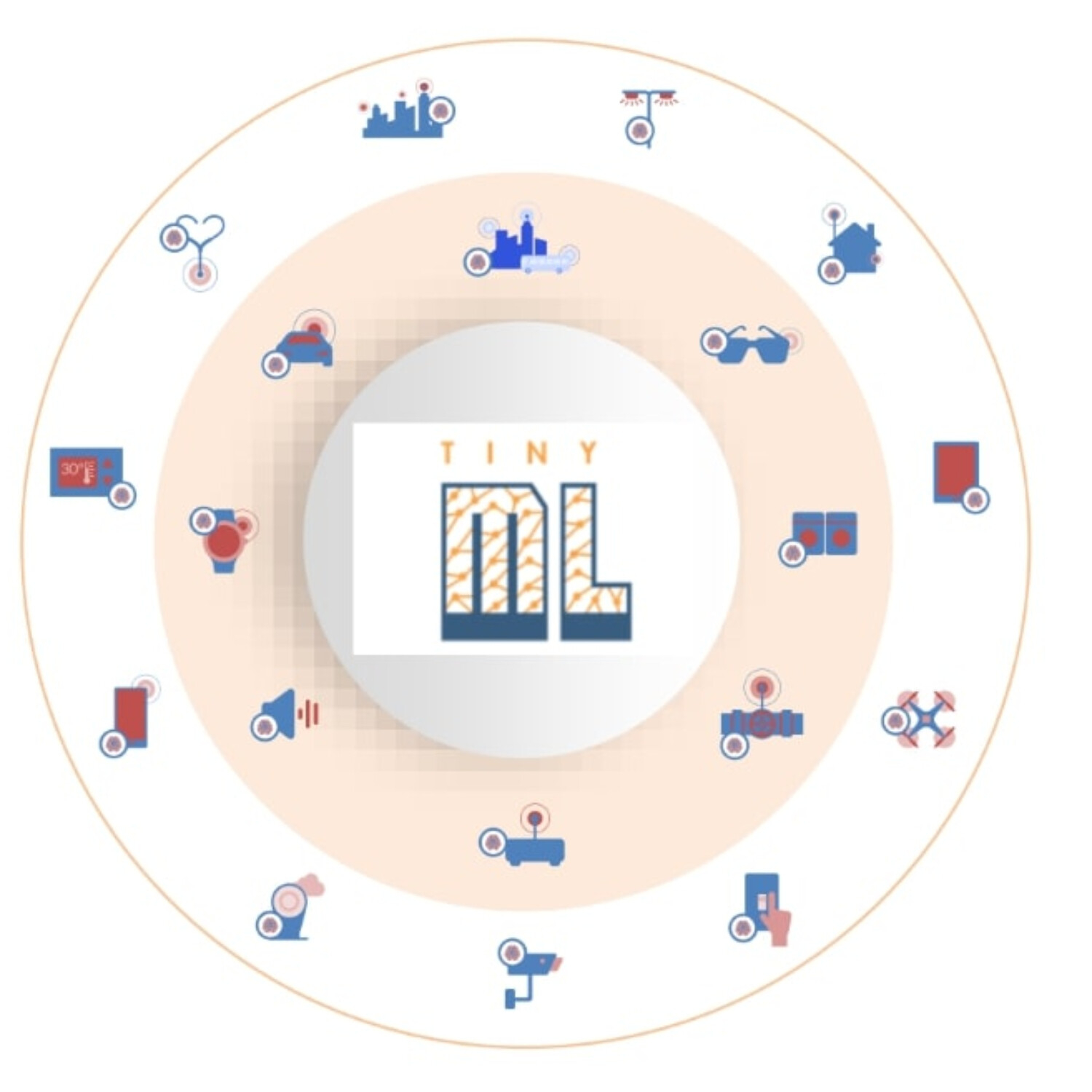

Whether it's stand-alone IoT sensors, devices of all kinds, drones, or autonomous vehicles, there's one thing in common. Increasingly, data generated on the edge are used to feed applications powered by machine learning models.

There's just one problem: machine learning models were never designed to be deployed on the edge. Not until now, at least. Enter TinyML.

Tiny machine learning (TinyML) is broadly defined as a fast-growing field of machine learning technologies and applications, including hardware, algorithms and software, capable of performing on-device sensor data analytics at extremely low power, typically in the milliwatt (mW) range and below. That is what enables a variety of always-on use cases and makes battery-operated devices a natural target.
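To put "the mW range and below" in perspective, here is a back-of-the-envelope energy budget. It is a minimal sketch assuming an idealized battery (no self-discharge, voltage sag or regulator losses) and a constant average power draw. The helper name `battery_life_hours` is hypothetical, and the 225 mAh / 3 V coin-cell figures are illustrative assumptions, not numbers from the episode.

```python
def battery_life_hours(capacity_mah: float, voltage_v: float, avg_power_mw: float) -> float:
    """Estimate runtime in hours for a constant average power draw.

    Idealized model (an assumption): ignores self-discharge, voltage sag
    and regulator losses, so real-world runtimes will be shorter.
    """
    energy_mwh = capacity_mah * voltage_v  # mAh * V = mWh of stored energy
    return energy_mwh / avg_power_mw       # mWh / mW = hours


# Hypothetical coin cell: 225 mAh at a nominal 3 V (roughly CR2032-class).
for power_mw in (100.0, 1.0, 0.1):
    hours = battery_life_hours(225, 3.0, power_mw)
    print(f"{power_mw:>6} mW -> {hours:8.1f} h ({hours / 24:6.1f} days)")
```

Under these assumptions the same 675 mWh cell lasts under 7 hours at 100 mW, roughly four weeks at 1 mW and over nine months at 0.1 mW. That order-of-magnitude jump is why always-on, battery-operated use cases hinge on staying in the milliwatt range and below.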